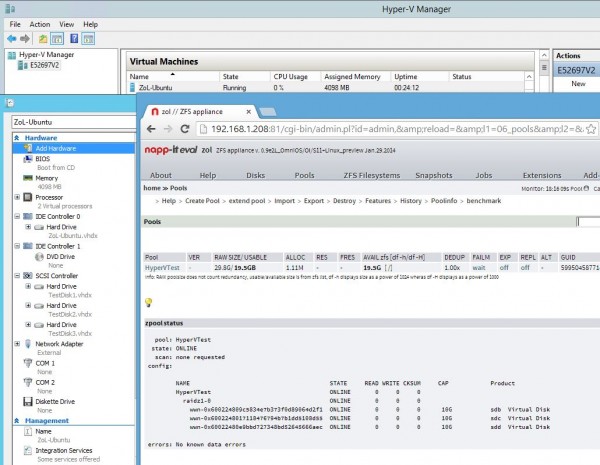

The napp-it team recently announced, on its site and on the STH forums, a new release: napp-it 0.9e2L. This version adds a notable feature: support for ZFS on Linux (ZoL). Officially, napp-it supports Ubuntu 12.04 LTS; however, we decided to try something slightly different and use the newest release, Ubuntu 13.10. To make things more fun, we used Hyper-V Server 2012 R2 as our host. A note to readers: this is an unsupported configuration in just about every way. DO NOT USE THIS FOR PRODUCTION ENVIRONMENTS. For testing and home lab fun, however, it is very easy to do.

Why Hyper-V?

Hyper-V does one thing extremely well: it lets you pass raw disks directly to virtual machines. This may not seem like a major benefit, but for low-power and smaller storage deployments it makes a huge difference. Because Microsoft can pass individual raw physical disks to Hyper-V virtual machines, you need neither VT-d nor to pass an entire controller (with all of its attached disks) through to the virtual machine.

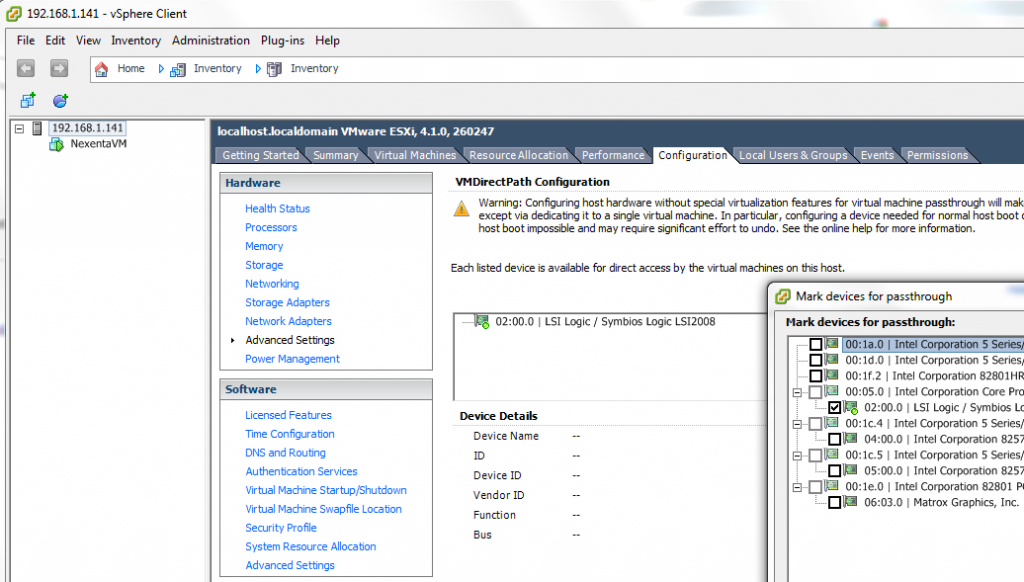

In the standard ESXi model, the easiest way to let a virtual machine control raw disks is VMDirectPath, which requires VT-d to pass through an HBA such as an LSI SAS2008-based card. Once that HBA is passed through to the VM, the VM can use all eight ports' worth of drives (and more with expanders) for storage. This works very well, and we have had a guide to setting up VMDirectPath HBA and disk pass-through for years.

The problem with the VMware ESXi VMDirectPath method is that, unless you use raw device mapping, you end up needing an HBA (say $100 and 8W) just to pass through disks. This is because you are passing through the entire HBA, not individual disks. Have 8 ports on one controller and want to pass 2 drives to one VM and 2 drives to another? This model cannot do that, so you end up buying two HBAs with two drives each and passing each HBA to its respective VM. In larger installations this is workable, but it is far from ideal.
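To make the HBA arithmetic concrete, here is a rough Python sketch comparing how many add-in HBAs each model needs. It assumes the "$100 and 8W per HBA" estimate from the paragraph above and that a whole-controller passthrough can serve only one VM; the function names are our own, purely for illustration.

```python
from math import ceil

# Rough per-HBA estimates taken from the text ("say $100 and 8W").
HBA_COST_USD = 100
HBA_POWER_W = 8


def hbas_whole_controller(disks_per_vm: list[int], ports_per_hba: int) -> int:
    """HBAs needed when each HBA must be passed through whole to one VM.

    A controller cannot be split between VMs, so every VM with disks
    needs its own HBA(s), even if it uses only a couple of ports.
    """
    return sum(ceil(n / ports_per_hba) for n in disks_per_vm if n > 0)


def hbas_per_disk(disks_per_vm: list[int], ports_per_hba: int) -> int:
    """HBAs needed when individual disks can be assigned to VMs.

    All disks can share controllers, so only the total port count matters.
    """
    return ceil(sum(disks_per_vm) / ports_per_hba)


def summarize(disks_per_vm: list[int], ports_per_hba: int = 8) -> dict:
    """Return (HBA count, cost in USD, power in W) for each model."""
    whole = hbas_whole_controller(disks_per_vm, ports_per_hba)
    per_disk = hbas_per_disk(disks_per_vm, ports_per_hba)
    return {
        "whole_controller": (whole, whole * HBA_COST_USD, whole * HBA_POWER_W),
        "per_disk": (per_disk, per_disk * HBA_COST_USD, per_disk * HBA_POWER_W),
    }


# The scenario from the text: two VMs, two drives each, 8-port HBAs.
print(summarize([2, 2]))
```

With two VMs of two drives each, the whole-controller model needs two HBAs while the per-disk model needs one. If the drives sit on spare onboard SATA ports, the per-disk model may need no add-in HBA at all, which this simple count does not capture.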

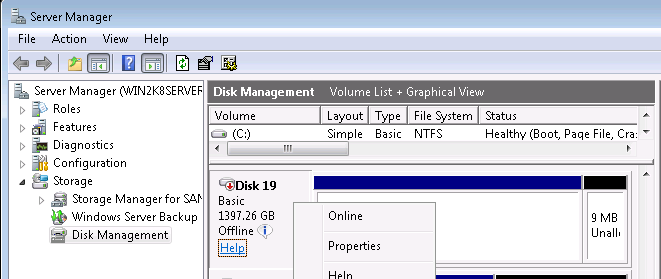

Hyper-V has a different model. You can pass through disks in essentially two steps (our Hyper-V disk passthrough guide from over three years ago still works): 1. take the disks “offline” in the host OS, such as Windows 8.1, Hyper-V Server 2012 R2 or Windows Server 2012 R2:

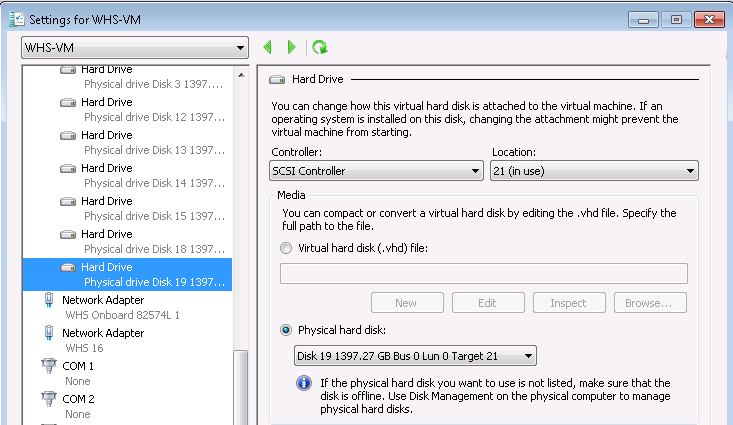

and 2. assign the disk to the VM so that the VM, rather than the host, controls it.
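The two steps can also be scripted with PowerShell on the host. The Python sketch below only builds the command strings; it assumes the `Set-Disk -IsOffline` and `Add-VMHardDiskDrive -DiskNumber` cmdlets from the Windows Storage and Hyper-V modules, a SCSI controller on the VM, and a hypothetical VM name `zol-test`. Check the disk numbers with `Get-Disk` first: offlining the wrong disk (say, the boot disk) is not something you want to do.

```python
def ps_quote(value: str) -> str:
    """Quote a string for PowerShell with single quotes (doubling any ')."""
    return "'" + value.replace("'", "''") + "'"


def passthrough_commands(vm_name: str, disk_numbers: list[int],
                         controller_type: str = "SCSI") -> list[str]:
    """Build PowerShell commands for two-step Hyper-V disk passthrough.

    Step 1 takes each physical disk offline on the host so Windows releases
    it; step 2 attaches the raw disk, by host disk number, to the VM.
    The SCSI controller default is an assumption for this sketch.
    """
    if not disk_numbers:
        raise ValueError("no disks selected")
    commands = [f"Set-Disk -Number {n} -IsOffline $true" for n in disk_numbers]
    for n in disk_numbers:
        commands.append(
            f"Add-VMHardDiskDrive -VMName {ps_quote(vm_name)} "
            f"-ControllerType {controller_type} -DiskNumber {n}"
        )
    return commands


# Hypothetical example: hand host disks 2 and 3 to a VM named "zol-test".
for line in passthrough_commands("zol-test", [2, 3]):
    print(line)
```

Generating the commands rather than running them keeps a human in the loop to review the disk numbers before anything is taken offline.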

This means you could use a standard 6-port onboard controller with the hypervisor (e.g. Windows 8.1, Hyper-V Server 2012 R2 or Windows Server 2012 R2) installed on a disk on the first port. You could then put two more disks on the onboard SATA controller and pass them through individually to your VMs. You could also pass two disks from a four-port HBA to two different VMs. For small home lab systems, this is much less expensive than the ESXi model. Furthermore, it works on most Windows 8 desktops without new hardware or VT-d support, so it works even with Intel's overclockable K-series processors, which at the time of writing lack VT-d (again, we recommend this only for a lab environment, not for production).

Furthermore, Hyper-V's hardware compatibility list is significantly larger than ESXi's, so there are simply more options for supported controllers, especially onboard ones from Marvell and others.

Why napp-it?

napp-it is a well-known graphical web interface for ZFS on Solaris-derived platforms such as OmniOS, NexentaCore, Solaris 11 and others. Whereas FreeBSD has FreeNAS as a great web management interface for its ZFS implementation, the ZFS on Linux project had no comparable web interface until now.

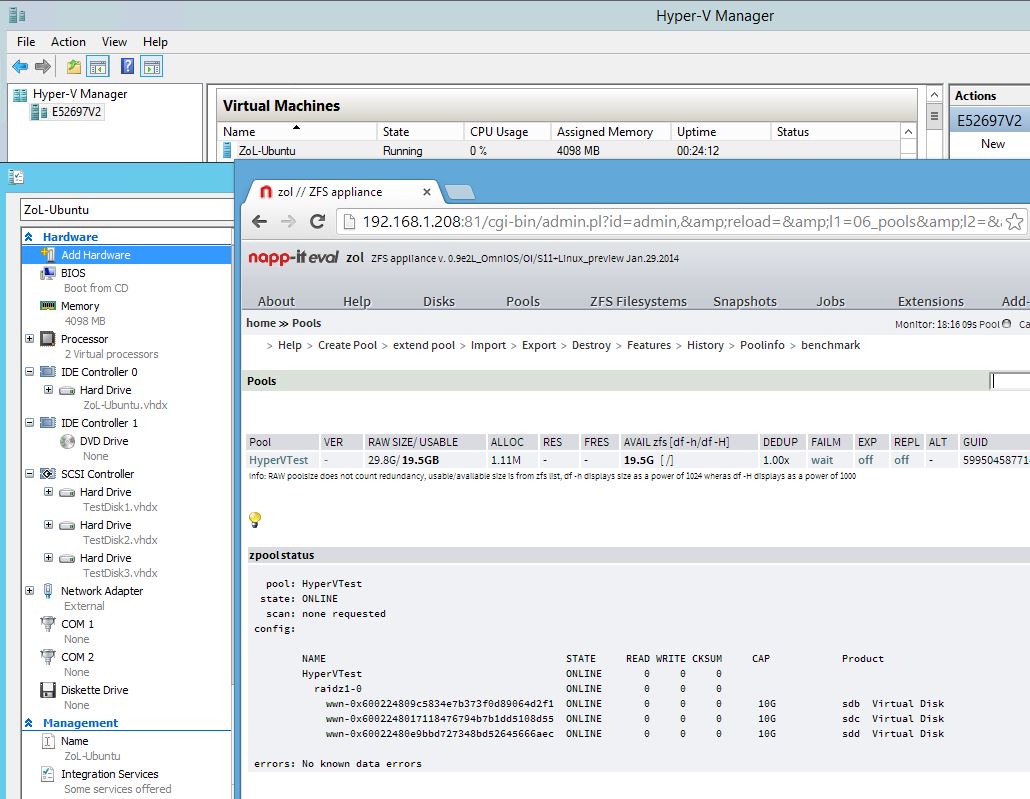

A ZFS on Linux test bed on Hyper-V was already possible, but now one can quickly create setups such as RAID-Z pools using the napp-it web interface. In the example above we used virtual disks (VHDX format); however, raw disk pass-through also worked.

Final thought – this is big

Microsoft has been pushing hard to integrate better storage into Windows. ReFS, SMB3, and native iSCSI targets and initiators are all relatively recent additions to Microsoft's feature list. Still, ZFS is awesome, and napp-it is great. Hyper-V handles passing through individual disks well, whether they are spread across controllers or sit on a single controller, and it runs Ubuntu well. Again, this should be for test lab purposes ONLY, but it works fairly well in its first release.

There is little doubt that this setup will soon be the #1 way to introduce beginners to ZFS. Hyper-V has one huge advantage over ESXi: all of the functionality you need to start playing with ZFS is likely already on your desktop or notebook, so long as virtualization extensions are enabled on your platform and the Hyper-V role is installed. Creating a ZFS test machine, even a simple three- or four-disk setup for experimenting with RAID-Z and RAID-Z2 pools, can now be done easily without installing another piece of software beyond what you get with Windows 8 or Windows 8.1. A common concern we previously heard about ZFS on Linux was the lack of a solid user interface to start learning on. napp-it has now removed that barrier.
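To get a feel for what a three- or four-disk RAID-Z or RAID-Z2 test pool gives you, here is a back-of-envelope Python sketch. It assumes equal-size disks in a single RAID-Z vdev and treats each parity level as costing exactly one disk's worth of space; real pools lose additional space to metadata and padding, so the result is only an upper bound. The 2 TB disk size is just an example.

```python
# Number of parity disks per RAID-Z level.
PARITY = {"raidz": 1, "raidz2": 2, "raidz3": 3}


def approx_usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Rough upper bound on usable space of one RAID-Z vdev.

    Simplification: (disks - parity) * disk size, ignoring ZFS metadata,
    padding and reservations.
    """
    parity = PARITY[level]
    if disks <= parity:
        raise ValueError(f"{level} needs more than {parity} disks in this sketch")
    return (disks - parity) * disk_tb


for level in ("raidz", "raidz2"):
    for n in (3, 4):
        print(f"{level}, {n} x 2 TB: ~{approx_usable_tb(level, n, 2.0)} TB usable, "
              f"tolerates {PARITY[level]} disk failure(s)")
```

The trade-off shows up quickly with so few disks: a three-disk RAID-Z2 keeps only one disk's worth of data in exchange for surviving two failures.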

Great work, Patrick.

I only recently became aware of physical disk passthrough in Hyper-V and have been wanting to take it for a spin with either napp-it or FreeNAS.

Would love to see some performance benchmarks from the Hyper-V host's perspective with regard to iSCSI, NFS, and CIFS shares.

Similarly, it would be fantastic to do the same in ESXi.

I’ve just added some agenda items for my home lab.

Keep it up!

One thing that should be noted is that disk passthrough in Hyper-V does not pass S.M.A.R.T. data through to the VM.

Disks can also be passed through in ESXi one at a time; it's just harder to do. There is no S.M.A.R.T. data there either.

Quote:

“The problem with the VMware ESXi VMDirectPath method is that unless you do raw device mapping, you end up needing a HBA (say $100 and 8w) to pass through disks. This is because you are passing through the entire HBA, not individual disks. Have 8 ports on one controller and want to pass 2 drives to one VM and 2 drives to another? This model does not work and you end up getting two HBAs each with two drives. ”

Of course you can use 1:1 drive mapping in ESXi... what are you on about?

Are you implying there’s something wrong with raw device mapping?

I can easily map 4 drives to one VM and 4 others to another VM with an 8-drive HBA without using VT-d.

http://i.imgur.com/aMgBbtR.png

Could the next article compare the ZFS implementations on Ubuntu, OmniOS and FreeBSD 10? I thought ZFS on Linux was much slower than OmniOS.

The lack of SMART is what bothers me, and I am a Windows guy too.

I tried this myself and I am quite impressed. However, if I put stress on the Linux VM, particularly through the network, Ubuntu spits out errors (though it does not crash and everything seems to work in the end). I have described the problem in more detail in the MS forums (https://social.technet.microsoft.com/Forums/en-US/dcd4c13d-d997-4632-991d-e290f470427f/error-quothvvmbus-buffer-too-smallquot-how-to-increase-buffer-in-linux-isdriver?forum=linuxintegrationservices). Does anyone have an idea where else I could look for help?

The reason it is recommended to pass the whole HBA to the guest VM on ESXi is that this is the only way for the guest to get complete hardware access to the block devices, talking AHCI directly to the disks. What you are doing on Hyper-V is, as Bob_Builder says, entirely possible on ESXi by installing the driver for the HBA into ESXi. But that is not recommended for ZFS, because you add a layer (a shim) between ZFS and the raw drives. ZFS should be given direct access to the drives to better guarantee data integrity on disk.