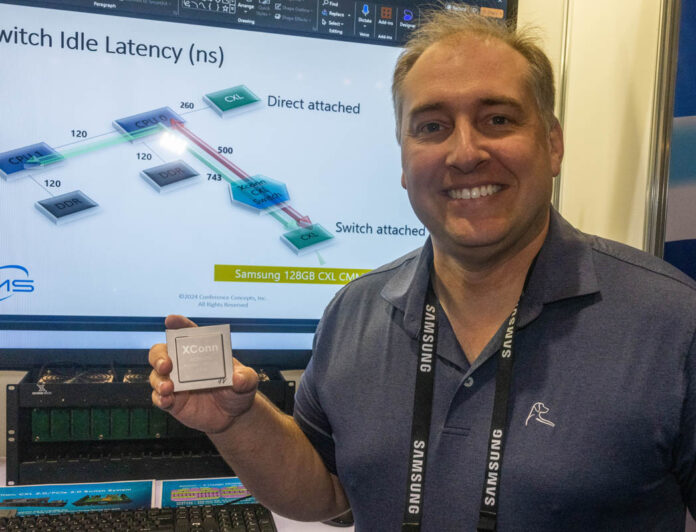

At the Future of Memory and Storage 2024 event (previously Flash Memory Summit), we saw one of the products we are most excited about. We have been watching the XConn CXL 2.0 switch since 2022, and it looks set to ship soon. We also learned that a PCIe-only version of this switch chip is coming, which may be really interesting for AI clusters.

XConn Shows its CXL 2.0 and PCIe Switch Off at FMS 2024

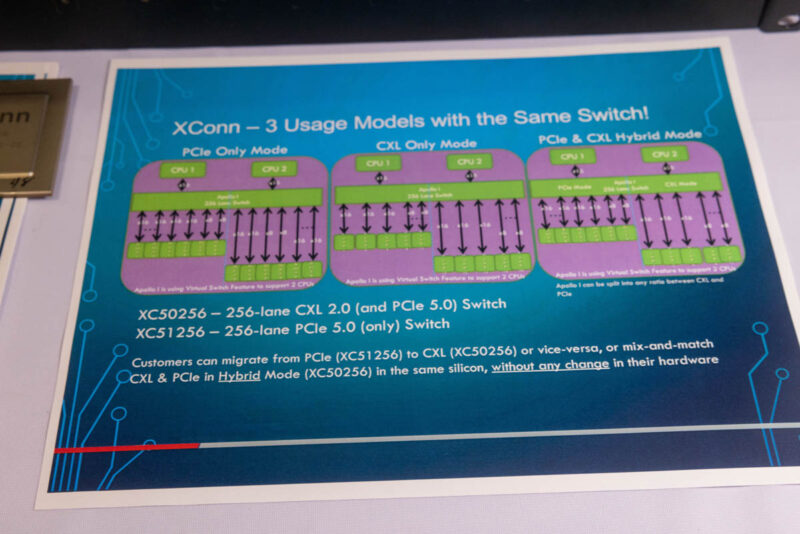

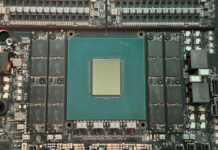

The chip we saw was the XConn XC50256, a 256-lane CXL 2.0 and PCIe Gen5 switch chip. That is huge, since it can handle sixteen x16 links to hosts and to CXL devices such as CXL memory expanders.

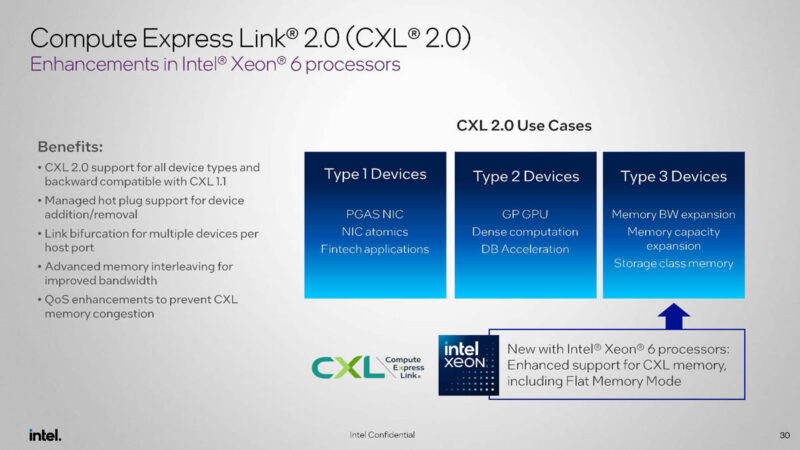

CXL 2.0 support is already in CPUs such as the Intel Xeon 6 6700E "Sierra Forest" series, and we expect it to be a standard feature in CPUs launched in the second half of this year.

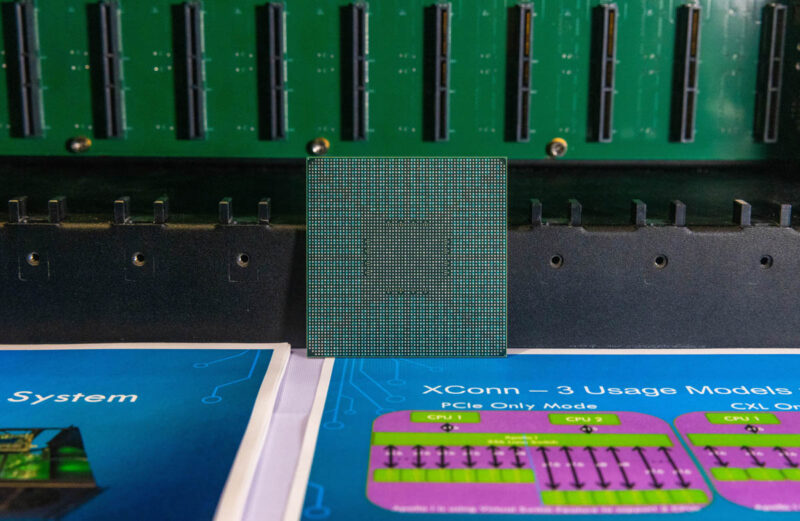

Here is the back of the chip.

XConn has some really cool technology here. Aside from the XConn XC50256 CXL 2.0 and PCIe Gen5 switch, there is also the XConn XC51256, which is the PCIe-only version. A massive PCIe switch is great on its own, but XConn also has a Virtual Switch mode: one can connect two CPUs and then partition the XConn switch to run CXL, PCIe, or both in a hybrid mode.
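To make the partitioning idea concrete, here is a minimal Python sketch, assuming a simple model in which each virtual switch is bound to one host CPU, runs in a single mode, and owns a disjoint set of downstream ports. The `VirtualSwitch` class, the mode strings, and the port numbering are all hypothetical; this is not XConn's configuration interface, which we have not seen.

```python
from dataclasses import dataclass, field

# Hypothetical mode labels; the article only says CXL, PCIe, or both (hybrid).
VALID_MODES = {"cxl", "pcie", "hybrid"}

@dataclass
class VirtualSwitch:
    host: str                                       # upstream CPU bound to this partition
    mode: str                                       # one of VALID_MODES (assumed per-partition)
    ports: list[int] = field(default_factory=list)  # hypothetical downstream port IDs

def validate_partition(vswitches):
    """Return a list of problems; an empty list means the partition is consistent."""
    problems = []
    owner = {}     # port ID -> host that claimed it first
    hosts = set()  # hosts already bound to a virtual switch
    for vs in vswitches:
        if vs.mode not in VALID_MODES:
            problems.append(f"{vs.host}: unknown mode {vs.mode!r}")
        if vs.host in hosts:
            problems.append(f"{vs.host}: bound to more than one virtual switch")
        hosts.add(vs.host)
        for port in vs.ports:
            if port in owner:
                problems.append(f"port {port} assigned to both {owner[port]} and {vs.host}")
            else:
                owner[port] = vs.host
    return problems

# Two CPUs, each with its own slice of downstream ports.
cpu0 = VirtualSwitch("cpu0", "pcie", ports=list(range(0, 7)))
cpu1 = VirtualSwitch("cpu1", "hybrid", ports=list(range(7, 14)))
print(validate_partition([cpu0, cpu1]))  # []

# A partition that hands port 6 to both CPUs is flagged.
bad = VirtualSwitch("cpu1", "hybrid", ports=list(range(6, 14)))
print(validate_partition([cpu0, bad]))  # ['port 6 assigned to both cpu0 and cpu1']
```

The point of the model is the invariant, not the API: whatever the real management interface looks like, each downstream device should belong to exactly one host's virtual switch.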

Those who have used big PCIe switch systems should be salivating at this. In PCIe systems like the Supermicro servers we used in our NVIDIA L40S piece, the common architecture is to use two Broadcom PLX switch chips, one connected to each CPU. The XConn switch is big enough to connect four NICs and ten GPUs directly, along with the two CPU connections. One could also combine PCIe GPUs with devices like CXL memory expanders to greatly expand a server's memory footprint, with both CPUs attached to the same switch.
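The lane arithmetic behind that claim is easy to check. Here is a minimal sketch, assuming every CPU uplink, NIC, and GPU gets a full x16 link and ignoring any port-configuration constraints a real switch may impose; the device counts come from the paragraph above and the lane counts from this article.

```python
# Lane counts per switch, as stated in the article.
SWITCH_LANES = {
    "XConn XC50256": 256,
    "Broadcom PEX89144": 144,
}

def lanes_needed(devices, width=16):
    """Total lanes if every device (name -> count) gets a x`width` link (assumption)."""
    return sum(count * width for count in devices.values())

# Configuration from the article: two CPU uplinks, four NICs, ten GPUs.
config = {"cpu_uplink": 2, "nic": 4, "gpu": 10}

need = lanes_needed(config)
for switch, lanes in SWITCH_LANES.items():
    verdict = "fits" if need <= lanes else "does not fit"
    print(f"{switch}: {need}/{lanes} lanes -> {verdict}")
```

Sixteen x16 links consume exactly 256 lanes, so under these assumptions a single XC50256 can host this configuration while a single 144-lane switch cannot.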

Final Words

This is one of those technologies that I first saw in 2022, and it keeps getting more exciting as it develops. 256 lanes alone is significant, since that is a big jump from the Broadcom PEX89144, a 144-lane PCIe Gen5 switch. The virtual switch function and the support for CXL devices on the switch really set it apart in this generation of PCIe switch architectures.

I still need to figure out how to get one of the test rigs for these, because this is one of those devices that will completely change what you can build with servers.
