Server Memory Expansion

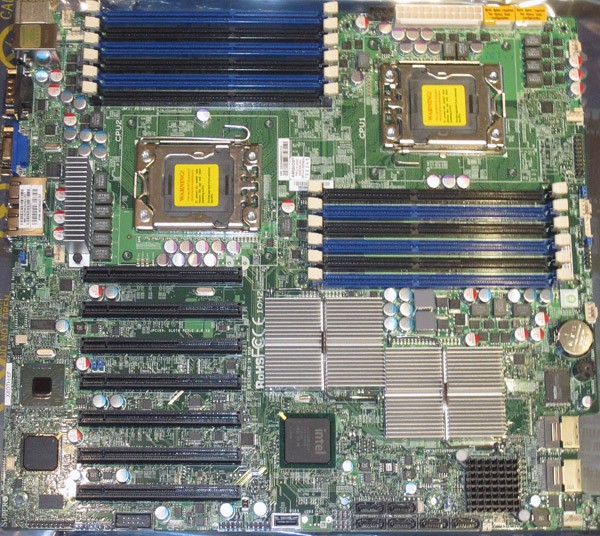

Going back to the STH archives, here is an example of a dual-socket server from the Nehalem-EP and Westmere-EP era (2008-2010 in the Intel CPU TDP chart earlier in this piece). Here you can see 3-channel memory for each CPU with two DIMMs per channel, for six DIMMs per CPU, or twelve in a dual-socket system with a full set of DIMM slots.

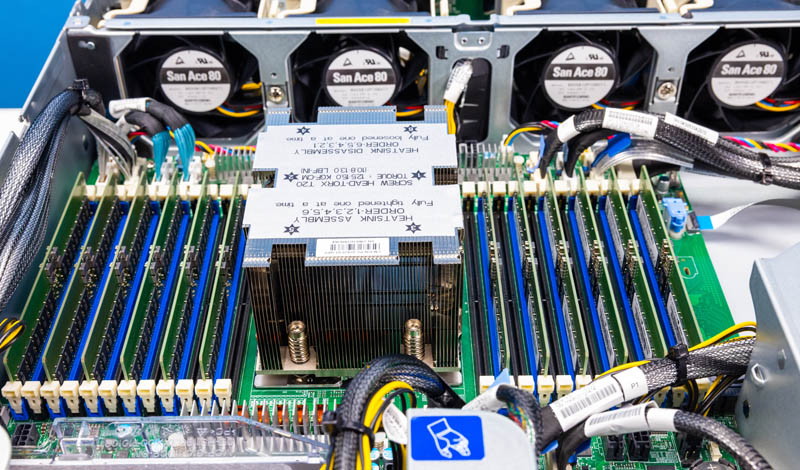

Today’s servers not only use faster, higher-capacity DIMMs on more channels, but they also have more DIMM slots. A single AMD EPYC Genoa CPU now uses four times as many DIMMs as a 2008-2010 Xeon CPU, three times as many DIMMs as a 2011-2016 Xeon, and two times as many DIMMs as a 2017-2020 Xeon.

While we often discuss CPU TDP, getting full memory capacity and bandwidth from a modern socket also means increasing the number of memory devices. There is now a movement around CXL to add additional plug-in devices with even more memory. Each of these DIMMs uses ~5W of power, so the memory in a modern server can use more power than an entire socket with its memory in the Xeon E5 era.
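As a rough sanity check on the memory power point, here is a minimal Python sketch. The DIMM counts are derived from the ratios above (six DIMMs per 2008-2010 Xeon, with Genoa at four times that), and the ~5W per DIMM figure is the estimate used in this piece rather than a measurement. The names `dimms_per_cpu` and `memory_power_watts` are purely illustrative.

```python
# Rough per-socket memory power estimate.
# ASSUMPTION: ~5 W per DIMM, the approximation used in the text.
WATTS_PER_DIMM = 5

# DIMMs per CPU, derived from the ratios in the text: Genoa uses 4x the
# 2008-2010 Xeon (6 DIMMs), 3x the 2011-2016 Xeon, and 2x the 2017-2020 Xeon.
GENOA_DIMMS = 4 * 6
dimms_per_cpu = {
    "Xeon 2008-2010": 6,
    "Xeon 2011-2016": GENOA_DIMMS // 3,
    "Xeon 2017-2020": GENOA_DIMMS // 2,
    "EPYC Genoa": GENOA_DIMMS,
}


def memory_power_watts(dimms, sockets=1):
    """Estimated DIMM power for a number of fully populated sockets."""
    return dimms * sockets * WATTS_PER_DIMM


for name, dimms in dimms_per_cpu.items():
    single = memory_power_watts(dimms)
    dual = memory_power_watts(dimms, sockets=2)
    print(f"{name}: {dimms} DIMMs/CPU, ~{single} W per socket, ~{dual} W dual-socket")
```

Under these assumptions, a fully populated dual-socket Genoa server lands at roughly 240W for memory alone.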

TDP is NOT the Only Factor

Someone may look at the charts above and rightly conclude that the performance and power consumption per node have increased over time. It would, however, be incorrect to conclude that a well-utilized server is less power efficient than previous generations.

We can do more work on a single node by making larger CPUs, GPUs, NICs, and other accelerators. That decreases the number of nodes needed to complete a task. Every additional node requires additional chassis, power supplies, motherboards, boot drives, PDU ports, management processors, management network ports, network ports, and so forth. Consolidating to fewer, larger nodes removes an enormous amount of that overhead, including much of what is dedicated to inter-node communication.

That is a big driver behind the NVIDIA GB200 NVL72 design, which integrates as much computing and interconnect into a single rack as possible. As we discussed in the video, at 100-120kW of power consumption, this rack is challenging for data centers that were not designed to handle this density.

In the video, we went into the idea that the GB200 NVL72 rack will use about as much energy in a single hour as an entire Tesla Cybertruck battery can store. That is an interesting way to think about rack power consumption.
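The comparison is simple arithmetic: a rack drawing a constant power for one hour uses that many kilowatt-hours. Here is a small sketch using the 100-120kW range above. The Cybertruck pack capacity of roughly 123kWh is an assumption for illustration and is not a figure from this piece.

```python
# Energy used by a rack running at constant power for a given time.
RACK_POWER_KW = (100, 120)      # range quoted in the text
CYBERTRUCK_BATTERY_KWH = 123    # ASSUMPTION: approximate pack capacity, not from the text


def energy_kwh(power_kw, hours):
    """Energy in kWh for a constant draw in kW over a number of hours."""
    return power_kw * hours


for kw in RACK_POWER_KW:
    used = energy_kwh(kw, 1)
    share = used / CYBERTRUCK_BATTERY_KWH
    print(f"{kw} kW for 1 hour = {used} kWh (~{share:.0%} of the assumed battery)")
```

If the assumed capacity is in the right range, one hour of rack operation consumes most or nearly all of a full pack.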

Final Words

As part of the video for this series, we also discussed power consumption and some of the data center infrastructure. While liquid cooling has been a topic at STH for some time, power is becoming the #1 challenge in data centers. Whereas companies were trying to keep servers in thermal and power bands years ago to maintain existing rack infrastructure, the new direction is to get more power to your racks. That is a fundamental and essential industry shift.

Stay tuned for more on this series coming over the next few weeks.

Phrasing it in terms of EV power consumption is really interesting. This rack uses as much power as 3 or 4 fully loaded semi-trucks barreling down the highway at 70mph, 24/7/365. That’s astonishing.

One thing not really mentioned, but if you know you know….

Why we only talk about power requirements rather than focusing on cooling requirements: the power usage directly informs the BTU load for cooling. 8kW of power into any silicon electronics ~= 8kW of waste heat. Unlike a motor or a light, which might produce work or light as well as waste heat (where heat = lost efficiency), a CPU is all waste heat. The transistor gates do not appreciably move/work to reduce the heat (not work in the abstract but work in the physical). A 500W CPU puts out 500W of heat at load.

This is why a colocation/datacenter will only bother with billing you for power usage…not cooling, one = the other. The facility will cool things certainly, but power in is heat out.

As to why watercooling now? Because the parts are higher powered and packed so close together. You are packing 120kW per rack…you could still cool the aisle for 120kW of power, but you gotta cool a Tesla-worth of power usage every hour, now big in-case fans, big fins for heatsinks. Facility water will have giant cooling towers outside which will have gobs more room to dissipate the heat. These are usually direct to air though and do waste water from evaporation.
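To put the "power in is heat out" point from the comment above into numbers, here is a small sketch that converts electrical draw into a cooling load. It uses the standard conversion of about 3.412 BTU/hr per watt and assumes, as the comment argues, that essentially all of the power ends up as heat. The function name is hypothetical.

```python
# Convert electrical power draw to an equivalent cooling load.
# 1 W is approximately 3.412 BTU/hr (standard conversion factor).
BTU_PER_HOUR_PER_WATT = 3.412


def cooling_load_btu_per_hour(power_watts):
    """Cooling load in BTU/hr, ASSUMING all electrical power becomes heat."""
    return power_watts * BTU_PER_HOUR_PER_WATT


examples = [("500 W CPU", 500), ("8 kW AI server", 8_000), ("120 kW rack", 120_000)]
for label, watts in examples:
    print(f"{label}: ~{cooling_load_btu_per_hour(watts):,.0f} BTU/hr")
```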

As a point of reference a DECsystem-20 KL from around 1976 took 21kW for the processor not counting disks, terminals and other peripherals.

The way I see it AMD introduced their 64-bit instruction set around 2000 after which x86 systems started being considered as possible servers. The dot-com bubble burst and by 2010 the cost effectiveness of x86 was more important than performance.

14 years later power consumption has gone up because the market niche changed. Those x86 systems are now the top-tier performers while ARM systems, from Raspberry Pi to Ampere Altra, fill the low-power cost-effective roles.

In terms of power efficiency, rather than raw power draw, are there any places where we are seeing regressions?

I know that, on the consumer side, it’s typical for whoever is having the worse day in terms of design or process or some combination of the two to kick out at least a few ‘halo’ parts that pull downright stupid amounts of power just so that they have something that looks competitive in benchmarks; but those parts tend to be outliers: you either buy from the competitor that is on top at the time or you buy the part a few notches down the price list that’s both cheaper and not being driven well outside its best efficiency numbers in order to benchmark as aggressively as possible.

With the added pressure of customers who are operating thousands of systems (or just a few, but on cheap colo with relatively tight power limits) do you typically even see the same shenanigans in datacenter parts; and, if so, do they actually move in quantity or are they just there so that the marketing material can claim a win?

My understanding is that, broadly, the high TDP stuff is at least at parity, often substantially improved, in terms of performance per watt vs. what it is replacing; so it’s typically a win as long as you don’t fall into the trap of massively overprovisioning; but are there any notable areas that are exceptions to the rule? Specific common use cases that are actually less well served because they are stuck using parts designed for density-focused hyperscalers and ‘AI’ HPC people?

what would be the theoretical power burn of a modern server if we were still stuck on 45nm Nehalem process? :)

While the TDP keeps going up, the efficiency of the chips is much better or just the overall CPU is much faster. The E5 2699 v4 was a 22c/44t CPU with a 145W TDP or 6.59W/core. The Epyc 9754 is a 128c/256t CPU with a 360W TDP or 2.81W/core. The Broadwell Xeon has a TDP 2.35x higher per core than the Epyc and is quite a lot slower core per core as well.
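The per-core figures in the comment above are easy to reproduce. This is a minimal sketch using only the core counts and TDPs quoted there; the dictionary layout and function name are illustrative.

```python
# TDP per core for the two CPUs named in the comment above.
cpus = {
    "Xeon E5-2699 v4": {"cores": 22, "tdp_w": 145},
    "EPYC 9754": {"cores": 128, "tdp_w": 360},
}


def watts_per_core(cpu):
    """TDP divided by physical core count."""
    return cpu["tdp_w"] / cpu["cores"]


xeon = watts_per_core(cpus["Xeon E5-2699 v4"])
epyc = watts_per_core(cpus["EPYC 9754"])
print(f"Xeon: {xeon:.4f} W/core")
print(f"EPYC: {epyc:.4f} W/core")
print(f"Xeon per-core TDP is {xeon / epyc:.2f}x the EPYC figure")
```

Without intermediate rounding the ratio works out to about 2.34x, which is in line with the roughly 2.35x quoted. TDP per core says nothing about per-core performance, so this is only one side of the efficiency comparison.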

“A modern AI server is much faster, but it can use 8kW.”

“In 2025, we fully expect these systems to use well over 10kW per rack”

Dang. How are they going to manage that kind of exponential efficiency increase in less than a year? Using only 25% more power for an entire rack vs. a single system today. Impressive to be sure.