ZFS is the popular storage system that was born out of Sun Microsystems (now Oracle). If you are looking for a piece of software that has both zealots for and against, ZFS should be at the top of your list. Today we are seeing many storage systems predicated on flash storage, but ZFS was born in an era of disk-based storage. That is an important piece of background information because, with disk-based arrays, performance is always a concern due to the slow media. While reads can be easily cached in RAM, writes need to be cached on persistent storage to maintain the integrity of the storage. The ZFS ZIL SLOG is essentially a fast persistent (or essentially persistent) write cache for ZFS storage.

In this article, we are going to discuss what the ZIL and SLOG are. We are then going to discuss what makes a good device and some common pitfalls to avoid when selecting a drive. As we have hinted at STH, we just did a bunch of benchmarking on current options so we will have some data for you in follow-up pieces. We are going to try keeping this at a high enough level so that a broad audience can understand what is going on. If you want to help by coding new features for OpenZFS, this is not the ultra-technical guide you need.

Background: What happens when you write data to storage?

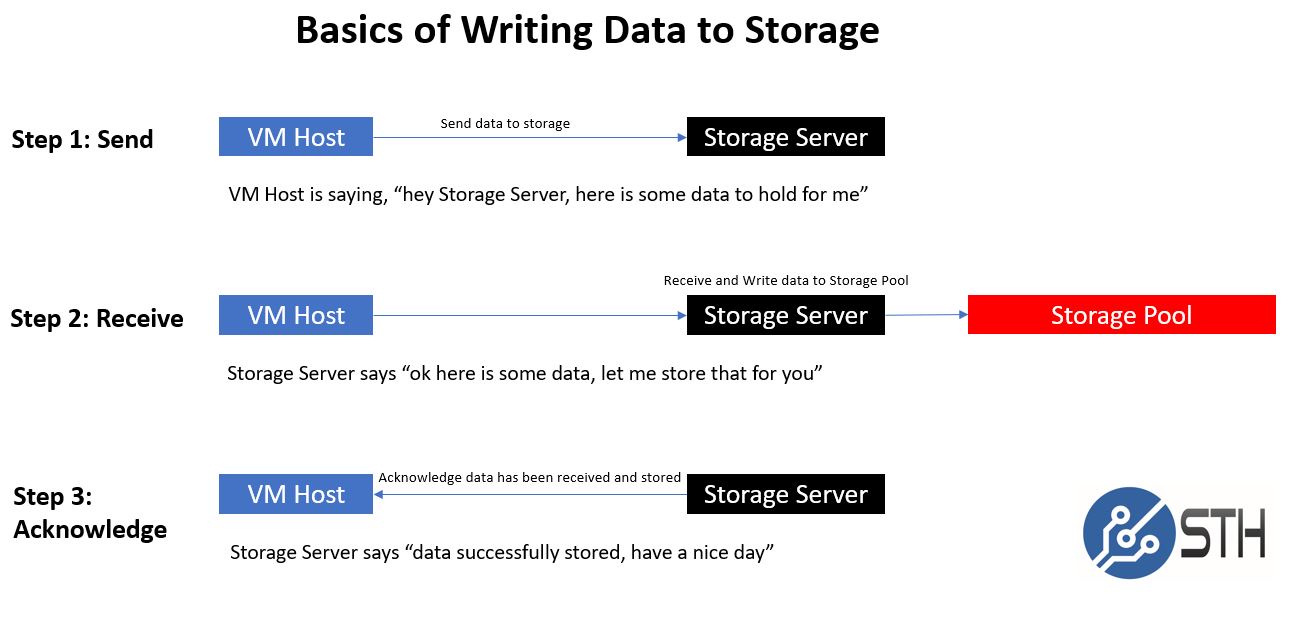

In the original draft of this article, we started with ZFS. Instead, we decided to first provide a bit of background on what happens when you write data, at least at a high level. The absolute basics are required to understand what the ZFS ZIL SLOG does. We are going to use a simple example of a client machine, say a virtual machine host, writing to a ZFS storage server. We are going to exclude all of the fun network stack bits and the impact of technologies like RDMA. Remember, high level.

Let us say that you have a VM running on a VM host. That host needs to save data to its network storage so it can be accessed later by that VM or another VM host. We essentially have three major operations that need to happen. First, the client VM host transmits the data. Second, once the data reaches the other side of the network, the storage server needs to receive it. Finally, the storage server needs to acknowledge that the data has been received. Here is the illustration:

That acknowledgment is an important step in the process. Until the client receives it, the client does not know that the data has been successfully received and is safe on storage. This matters because the time it takes for the acknowledgment to come back can have a dramatic impact on synchronous write performance.

Synchronous v. Asynchronous Writes

Synchronous and asynchronous writes may seem simple, yet the difference has a profound impact. At its essence, a system sends data to storage to be written. With a synchronous write, it waits until it receives the acknowledgment from the target storage. With an asynchronous write, the system sends the data and then moves on to the next task before it receives the acknowledgment.
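To make the distinction concrete, here is a minimal Python sketch that uses a local file as a stand-in for the storage target. That is an assumption: a real VM host would be writing over a protocol such as NFS or iSCSI. The synchronous version does not return until `os.fsync()` reports that the data has been handed to the storage device, which plays the role of the acknowledgment. The asynchronous version returns as soon as the data sits in the operating system's RAM cache. The function names are hypothetical.

```python
import os
import tempfile


def write_async(path: str, data: bytes) -> None:
    """Hand data to the OS and return immediately (no durability guarantee)."""
    with open(path, "wb") as f:
        f.write(data)
    # Closing the file pushes Python's buffer to the kernel, but the kernel may
    # still hold the data in RAM; a power loss at this point could lose it.


def write_sync(path: str, data: bytes) -> None:
    """Return only after the kernel reports the data was written to the device."""
    with open(path, "wb") as f:
        f.write(data)
        f.flush()             # push Python's userspace buffer to the kernel
        os.fsync(f.fileno())  # block until the kernel reports the write is on the device


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        write_async(os.path.join(d, "async.bin"), b"hello")  # returns right away
        write_sync(os.path.join(d, "sync.bin"), b"hello")    # waits for the "ack"
```

The caller of `write_sync` pays the full round trip to stable storage on every call. That waiting time is exactly what a fast SLOG is meant to shrink.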

On the data security side of things, asynchronous writes are generally considered less “safe” because a disruption in the storage or network can mean that the system doing the write thinks the data is written and safe on persistent storage when in fact it is not. If you write a check thinking you have money in the bank, and you do not, bad things happen (e.g. your check bounces). Modern systems are generally reliable, but asynchronous writes can still cause issues. On client systems such as laptops, it is not uncommon to see asynchronous writes, along with data loss or corruption. On the server side, this is not a desirable scenario for anyone who wants to keep a job.

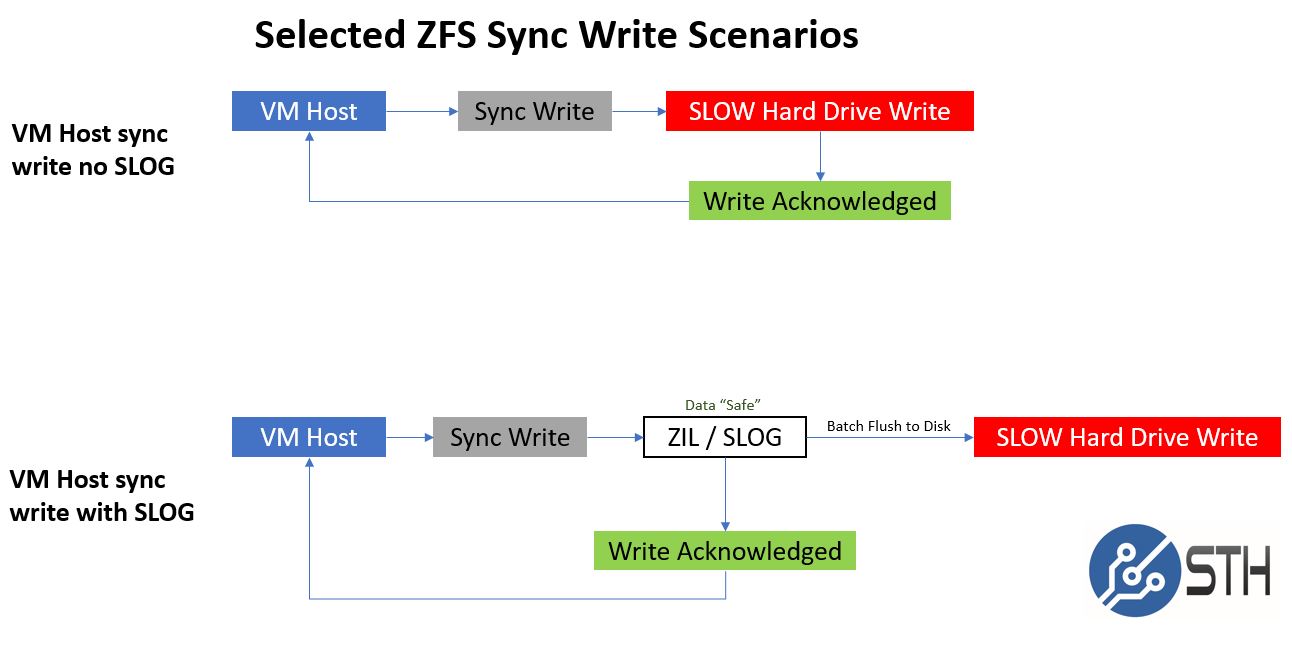

Synchronous writes (also known as sync writes) are safer because the client system waits for the acknowledgment before it continues. The price of this safety is often performance, especially when writing to slow arrays of hard drives. If you have to wait for data to be written to a slow array of disks and for a response to come back, it can feel like timing storage with a sundial.

Given its Sun heritage, ZFS is designed to keep data secure and to provide storage to many clients. You get a bad reputation as a server/storage vendor if power and network disruptions cause thousands of machines to lose data. As a result, the ZFS engineers implemented the ability to have a fast write cache. This write cache allows data to reach the target system’s persistent storage, and an acknowledgment to be sent back, faster than if the chain had to wait for a slow pool of hard drives to confirm the write.

What is the ZFS ZIL?

ZIL stands for ZFS Intent Log. The purpose of the ZIL in ZFS is to log synchronous operations to disk before they are written to your array. That synchronous part is essentially how you can be sure that an operation is completed and the write is safe on persistent storage instead of cached in volatile memory. The ZIL in ZFS acts as a write cache prior to the spa_sync() operation that actually writes data to an array. Since spa_sync() can take considerable time on a disk-based storage system, ZFS has the ZIL, which is designed to quickly and safely handle synchronous operations before spa_sync() writes the data to disk.
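The following conceptual Python toy shows the role the ZIL plays. It is not how ZFS is implemented; the class and its fields are hypothetical. The `spa_sync` method only borrows the name of the real operation described above. Every write lands in volatile RAM first. A sync write is also appended to a persistent intent log before it is acknowledged. The log is only read back if the system crashes before the buffered data has been committed to the pool.

```python
from dataclasses import dataclass, field


@dataclass
class ToyZfsPool:
    """Conceptual model only; NOT the actual ZFS implementation."""
    pool: dict[str, bytes] = field(default_factory=dict)        # persistent: committed data
    intent_log: list[tuple[str, bytes]] = field(default_factory=list)  # persistent: the ZIL
    dirty: dict[str, bytes] = field(default_factory=dict)       # volatile: RAM, lost on power failure

    def sync_write(self, key: str, value: bytes) -> str:
        self.dirty[key] = value               # buffered in RAM like any other write
        self.intent_log.append((key, value))  # also logged to persistent storage first
        return "ack"                          # now it is safe to acknowledge the client

    def async_write(self, key: str, value: bytes) -> str:
        self.dirty[key] = value               # RAM only; acknowledged without logging
        return "ack"

    def spa_sync(self) -> None:
        """Commit buffered writes to the pool; their log records are no longer needed."""
        self.pool.update(self.dirty)
        self.dirty.clear()
        self.intent_log.clear()

    def crash_and_replay(self) -> None:
        """Simulate a power loss: RAM contents vanish, then the log is replayed."""
        self.dirty.clear()
        for key, value in self.intent_log:
            self.pool[key] = value
        self.intent_log.clear()


if __name__ == "__main__":
    p = ToyZfsPool()
    p.sync_write("a", b"1")
    p.async_write("b", b"2")
    p.crash_and_replay()
    print(p.pool)  # {'a': b'1'}  (the sync write survived, the async write was lost)
```

In this model, the speed of `sync_write` is bounded by how fast the log append reaches persistent media. That is why putting the log on a fast dedicated device helps.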

What is the ZFS SLOG?

In ZFS, people commonly refer to adding a write cache SSD as adding an “SSD ZIL.” Colloquially, that has become like using the phrase “laughing out loud.” Your English teacher may have corrected you to say “aloud,” but nowadays people simply accept LOL (yes, we found a way to fit another acronym in the piece!). It would be more correct to call it a SLOG, or Separate intent LOG, SSD. In ZFS, the SLOG caches synchronous ZIL data before it is flushed to disk. When added to a ZFS array, it is essentially meant to be a high-speed write cache.

There is a lot more going on there with data stored in RAM, but this is a decent conceptual model for what is going on.

What is commonly used as a SLOG device?

Traditionally, there have been solutions using small RAM-based drives to act as the ZFS ZIL / SLOG device. For example, the SAS-based 8GB ZeusRAM was the device to get for years. That changed with NVMe SSDs. Once the Intel DC P3700 hit, it was clear that RAM + NAND devices were going to take over the market. When Intel Optane SSDs came out in early 2017, they quickly became a solid option. Intel Optane drives combine low latency and high bandwidth at low queue depths, more like RAM, with data persistence like NAND.

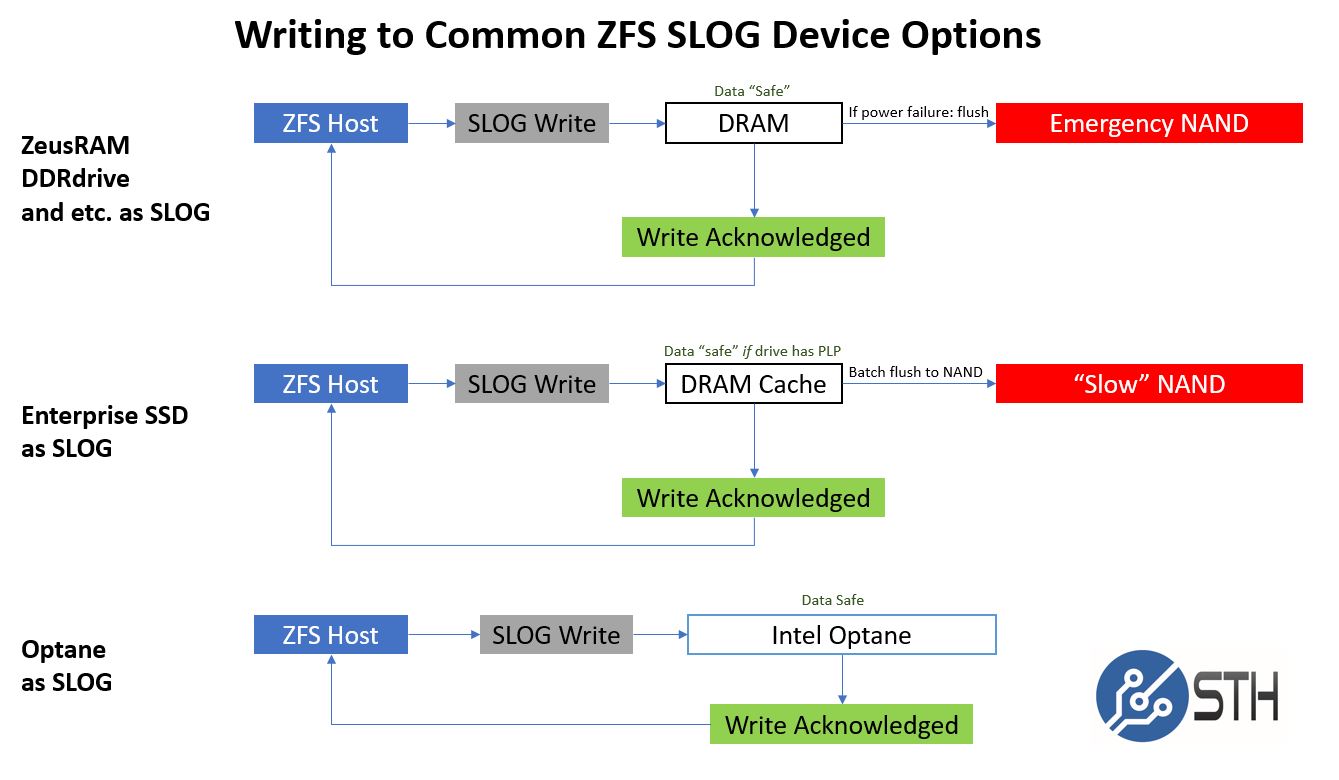

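Here are the three scenarios we are going to discuss in terms of SLOG device options: DRAM-based devices such as the ZeusRAM and DDRdrive, NAND-based enterprise SSDs with power loss protection, and Intel Optane.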

We are going to start from the base assumption that any write cache device should have high durability and high reliability, and that oftentimes you will want to mirror devices. These are baseline requirements for any device in this role.
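As a hedged example of the mirroring recommendation, the OpenZFS `zpool add` command accepts a `log` vdev, and that log vdev can itself be a `mirror`. The `-n` flag performs a dry run that displays the resulting configuration without modifying the pool. The Python sketch below only builds the argument list and runs nothing. The pool name `tank`, the device paths, and the helper name are hypothetical placeholders.

```python
import shlex


def slog_mirror_command(pool: str, devices: list[str], dry_run: bool = True) -> list[str]:
    """Build a `zpool add` command that attaches a mirrored SLOG to `pool`."""
    if len(devices) < 2:
        raise ValueError("a mirrored SLOG needs at least two devices")
    cmd = ["zpool", "add"]
    if dry_run:
        cmd.append("-n")  # only show the layout that would result; change nothing
    cmd += [pool, "log", "mirror", *devices]
    return cmd


if __name__ == "__main__":
    # Hypothetical pool and device names; substitute your own.
    cmd = slog_mirror_command("tank", ["/dev/nvme0n1", "/dev/nvme1n1"])
    print(shlex.join(cmd))  # zpool add -n tank log mirror /dev/nvme0n1 /dev/nvme1n1
```

Passing `dry_run=False` yields the command that actually adds the log vdev. Double-check the device paths before running it.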

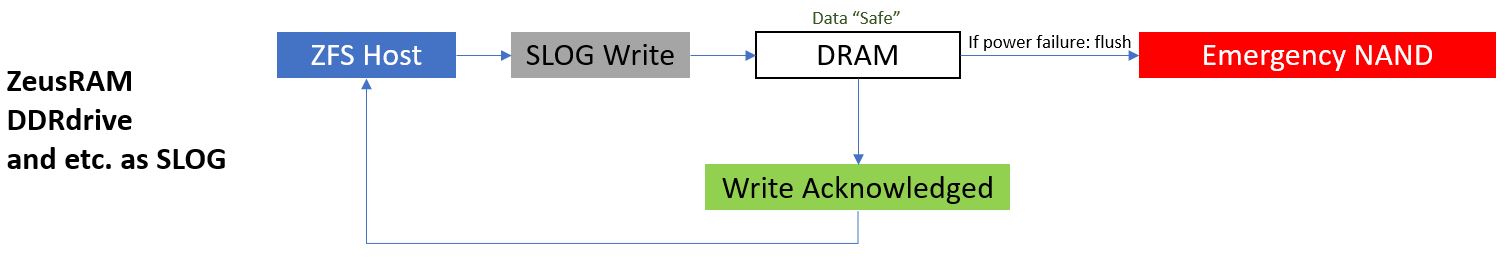

In the case of products like the ZeusRAM and DDRdrive, when a sync write happens, the SLOG device stores the data in DRAM. DRAM is not persistent storage, which means that upon power failure you would lose the stored data, much like main system memory. What these devices generally do is have batteries or capacitors as well as onboard NAND. These allow the RAM to either persist through a short outage or write its data out to the onboard NAND in a power emergency.

The ZeusRAM connected through a SAS controller. That meant a PCIe-to-SAS controller hop as well as one or more SAS controller-to-SAS device hops, each of which adds latency.

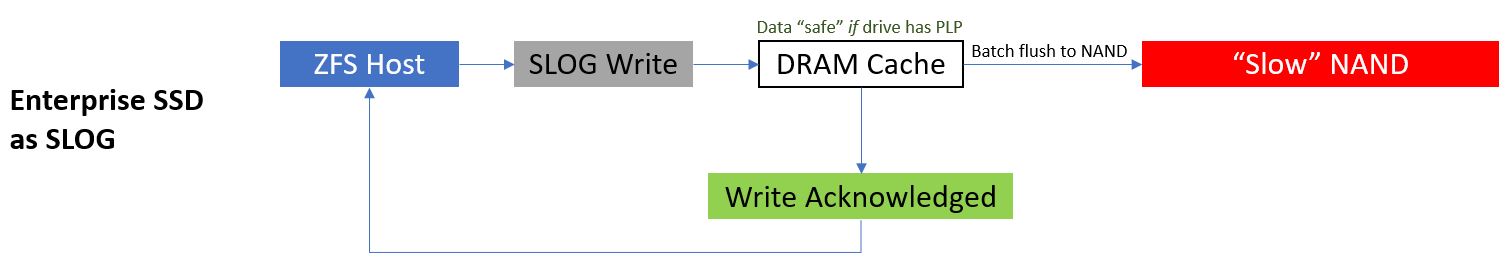

Another option is enterprise SSDs. To maximize the life of NAND cells, NAND-based SSDs typically have a DRAM-based write cache. Data is received, batched, and then written to NAND in an orderly fashion. This raises the same concern we saw with the ZeusRAM-based devices: a power loss while data is in DRAM can cause data loss. In enterprise drives with Power Loss Protection, or PLP, onboard capacitors give the SSD enough power after a power loss to write out the data from the DRAM cache to the NAND.

Since these enterprise drives can treat data as “safe” once it is in the DRAM cache, they can acknowledge sync writes as securely saved. Most consumer drives use a DRAM write cache to achieve high performance but do not have this power loss protection. Without PLP, a drive cannot acknowledge a sync write until the data has actually reached NAND. That is why their sync write speeds are generally low. Here is the internal view of an Intel DC P3700 SSD. You can see the large capacitor on the right side of the image.

The costs for those capacitors are not included in consumer SSDs as there is a race to have the lowest BOM cost.

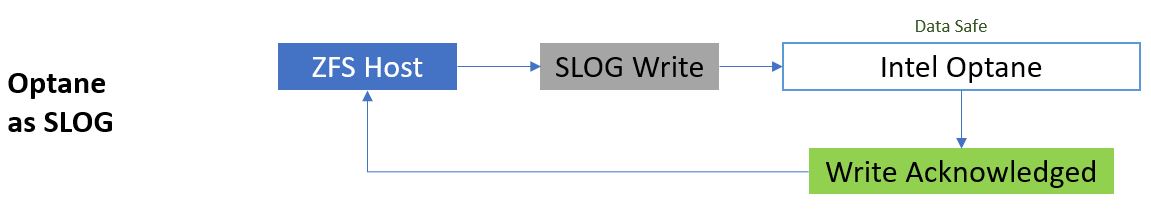
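One way to see the difference between drives with and without PLP is to time small writes that each wait for their acknowledgment, i.e. sync writes at queue depth 1. The Python sketch below does that with `os.fsync()`. The function name and its defaults are hypothetical. This is a rough probe, not a replacement for a purpose-built tool such as fio. The temporary directory in the example may live on tmpfs or on a different disk, so point `path` at a file on the device you actually want to test. Also be aware that on some platforms `fsync` alone may not force the drive to flush its own cache.

```python
import os
import tempfile
import time


def sync_write_iops(path: str, count: int = 200, block_size: int = 4096) -> tuple[float, float]:
    """Time `count` small writes, each followed by fsync, at queue depth 1.

    Returns (average latency in microseconds, sync writes per second).
    """
    block = os.urandom(block_size)
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, block)
            os.fsync(fd)  # wait for the "acknowledgment" before issuing the next write
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return elapsed / count * 1e6, count / elapsed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        lat_us, iops = sync_write_iops(os.path.join(d, "probe.bin"))
        print(f"avg sync write latency: {lat_us:.0f} us, {iops:.0f} IOPS at QD1")
```

Because each write waits for the previous one to be acknowledged, the IOPS figure here is simply the inverse of per-write latency. This is why low-latency devices matter so much in the SLOG role.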

Intel Optane is the relative newcomer and perhaps the most interesting option. Remember, Intel Optane stores data on its packages without requiring a RAM-based write cache buffer, so writes to the drive go directly to the persistent storage media. There are other benefits as well, such as not needing the same garbage collection algorithms we see on NAND-based SSDs.

Architecturally, Optane is fascinating, and there are performance benefits as well. Optane handles mixed workloads extremely well. Likewise, it performs well at low queue depths. Finally, its high endurance makes it ideal for a ZFS ZIL SLOG device.

Final Words

In this article, we hope you learned the basics of what the ZFS ZIL does, what a SLOG is, and why to use one. You should also have learned about some of the common ZFS ZIL SLOG devices. As you delve deeper, there is a lot more going on in terms of when things hit RAM, how flushes happen, and so on. On the flip side, this should give you a good enough overview to understand why you may want a SLOG device in a ZFS array.

It is also important to note that these models can conceptually be used elsewhere. For example, RAID cards such as the new Microsemi Adaptec Smart Storage Adapter SAS3 Controllers can use their DRAM write cache and a capacitor to flush data to NAND on a power loss event.

In a follow-up piece, we have some results for the Intel Optane products performing writes and flushes as they may experience them as a SLOG device. This is different from a typical mixed workload or a 100% write workload figure. We have numbers not just for the Intel DC P4800X but also for lower-end products, including the Intel Optane 900p and Optane Memory M.2 devices. We also have Intel NVMe SSDs, along with a few devices from other vendors across the NVMe, SAS3, and SATA ranges, to compare. The data is generated; the charts and article are in progress. If you cannot tell by the general tone of this article, the Intel Optane drives are a new category killer.

That’s not a terrible overview. The technical side of me wants to jump in but at a high-level this certainly works.

The question remains: is upcoming SSD technology like Intel Optane the future or the end of Slog devices for ZFS?

Compared to high-end SSDs like an Intel P3700, the former prime Slog solution, Intel Optane halves the latency, with around 8x the write IOPS for small random writes. Optane also eliminates the need for trim and garbage collection, as it is addressed more like RAM than flash.

So yes, a small 16-32 GB Optane device would be a perfect Slog. Intel has such devices, but they are useless as an Slog due to the lack of power loss protection, which is the real reason why you want an Slog: protection against a power loss combined with slow pools. But like any SSD technology, they will become larger and cheaper. The current 900P is the first of a new era of large NVMe disks capable of ultimate performance, at an affordable price, with all the features that make a perfect Slog.

With the Optane 900P and its successors, you do not need an extra Slog device, as they are perfect when using the on-pool ZIL functionality, and they offer enough capacity to build a pool from them. We only need enough PCIe lanes and affordable U.2 backplanes for Optane-only storage.

For me, this means the foreseeable end of the Slog concept, as you need one mainly for VM or database use, where capacity is rarely a concern. For a real high-capacity filer, you do not need sync write and an Slog; CopyOnWrite is enough to protect a ZFS filesystem.

+gea To me, it’s more interesting what happens when 3D XPoint goes into DIMMs. Then your RAM is persistent. Optane is still too expensive compared to normal SSDs. I can see QLC storage with Optane as cache.

The point is that an Slog is not a traditional write cache device, as all writes on ZFS always go through the RAM-based write cache to turn many slow random writes into a large fast sequential write. The Slog is an additional log of the contents of this RAM cache, to make the RAM cache persistent. The Slog is never involved in writes except in the case of a crash, where its contents are written to the pool on the next reboot. This is why you only need a few gigabytes for an Slog. Your effective write performance with sync enabled is therefore sequential pool write performance plus the logging performance of small random I/O for committed writes that are in the RAM cache. This logging can go on-pool (ZIL) or to a dedicated Slog device.

If a single pool disk has similar performance to the Slog, the on-pool ZIL, with writes distributed over all disks, is faster than an Slog with writes to a single disk. The Slog must be much faster than the pool to help performance. Using an Slog with performance similar to a single pool disk makes sync writes even slower than using the on-pool ZIL.

With spindle-based disks at around 100 IOPS per disk, an Slog with 10k-40k IOPS makes a huge difference. A pool of average NVMe disks has so many IOPS that an additional Slog that is only slightly faster will not help. You may only want an Slog with desktop NVMe drives, like the Samsungs without power loss protection, to make sync writes power-loss safe, but not much faster, even with Optane as the Slog.

The 16/32GB Optane drives are slightly more performant than the S3700 as a SLOG. However, I think they are very expensive per GB, so you should just jump straight to the 480GB 900P, which is 5 times faster again.

We found no difference when the ZIL log, the spinning rust array, and the VMs are on the same machine.

We have also found that when the spinning disk arrays and the VMs are on the same host, the benefit was negligible.

We could never figure out why. Secondly, performance would degrade over time to less than 30% of the original performance numbers, even when the disks were only 50% full.

Maybe ZFS is more optimized for network storage, than direct host storage.

NAS or DAS does not matter.

CopyOnWrite filesystems increase fragmentation, which makes disks slower even at a 50% fill rate. With most SSDs, you see performance degradation under steady writes, or you must care about trim or their internal garbage collection capabilities. Enough RAM and high-IOPS disks or vdev concepts help to keep performance high.

This is why the new generation of NVMe or persistent RAM concepts like Optane are the ultimate performance solution, as they overcome all the limitations of current disks and SSDs. Wait a year or two until they are cheaper and larger than today, and we will use nothing else where performance matters.

A possible explanation for the lack of performance benefit on-host versus off-host: the ZFS ZIL Slog benefit applies to synchronous writes only, which most “applications” are loath to request or require. Going off-host for VM storage, however, is an excellent case for it.

Great coverage of a complex topic Patrick.

Since this article was written, Intel has confirmed that it will be releasing NVDIMM-P, or Optane DIMMs, in 2H 2018.

Searching for “Optane DIMM” returns many Hits, most dated from a couple of days to a few hours ago.

Thank you for the article, a good summary of things!

The question that remains for me is: how does the new Optane perform against the old ZeusRAM?

Are there any benchmarks I could find somewhere?

Bad news

Intel suddenly removed the 900P from the list of PLP protected disks and lowered the price.

https://ark.intel.com/Search/FeatureFilter?productType=solidstatedrives&EPLDP=true

So the only Optane with power loss protection at the moment is the 4800X.

BTW,

I made a comparison of the ZeusRAM vs. the P3600 vs. Optane as Slog:

http://napp-it.org/doc/downloads/optane_slog_pool_performane.pdf

Why is PLP necessary if the complete server is behind a UPS?

Tom – I can tell you from experience, UPS and PSUs fail. I have even seen a PSU burst a capacitor right in front of me.

Hi, is there any link or update for this line? (I couldn't find any article as of Dec 2018.) Thanks:

> As we have hinted at STH, we just did a bunch of benchmarking on current options so we will have some data for you in follow-up pieces.

Hi John, we published one in December 2018 with more data: https://www.servethehome.com/intel-optane-905p-380gb-m-2-nvme-ssd-review-the-best/

The general trend lines hold.

Thanks for that link. I actually also found this one, which is also relevant, from late 2017 (I found it via the FreeNAS forums, where someone linked to it).

GREAT articles, thanks PK.

https://www.servethehome.com/exploring-best-zfs-zil-slog-ssd-intel-optane-nand/