At STH, we often use the term “AOC” to refer to Active Optical Cable cabling. As with our recent DAC or Direct Attach Copper cable guide, we thought it would be useful to address the question, “What is an AOC?” Since at STH we believe it is important to help impart knowledge, even if many readers already know the answer, we felt like it was time for a quick guide.

What is an AOC or Active Optical Cable?

In simple terms, an active optical cable has modules at either end of an optical fiber cable that allow direct communication between devices over that permanently attached fiber cable. Both ends have specific connectors and the cable length is fixed.

In this example, we have two SFP28 connectors on either end. There is then a fixed cable that goes between the two ends allowing devices to communicate. This cable, unlike traditional optical transceivers, is permanently attached to the transceivers at either end. This both prevents accidental removal of the fiber cable and ensures that environmental contaminants such as dust do not enter the coupling.

As part of our fiber optic guide series, we are mostly focusing on pluggable optics. Optical communication is essential for the long-range transmission of data. As networks get faster, and we push into the 400GbE era and beyond, the distance that copper communication can reliably and practically travel at those speeds is limited. These AOCs are one option for some of the longer DAC runs that will no longer be able to be serviced by copper.

One of the reasons this is a less popular cable than DACs is that each end needs the photonics transmitter/receiver, and therefore one does not get the cost benefits of the copper interconnects. With 100GbE and faster generations, the AOC cabling is much thinner and more flexible than the copper connections as well. At this point, most of the market has settled on either pluggable optics without fixed cables or DACs, but since our readers may still encounter AOCs, we wanted to address them.

What is a Breakout AOC?

You may see one other important type of AOC, the breakout AOC. With modules such as QSFP+ for 40GbE networking and QSFP28 for 100GbE networking, the “Q” stands for Quad. As a result, one way to conceptualize the QSFP+ connector above is that it is carrying four (quad) SFP+ channels. SFP+ is 10Gbps and QSFP+ is 40Gbps, so four (quad) 10Gbps links give us 40Gbps of bandwidth. The same conceptual model holds for SFP28 and QSFP28. As a result, one practice is to use the higher-density QSFP+/QSFP28 form factors and split them to connect to 2-4 lower-speed devices. Here is an example with four SFP28 (25GbE) ends on one side and a single QSFP28 (100GbE) side on the other:

We are going to quickly note that while conceptually this works, not all switches, routers, NICs, servers, storage, and other components support breakout. These days, most do, but there are still quite a few exceptions where they do not. There are even NICs like the HPE 620QSFP28 4x 25GbE Single QSFP28 Port Ethernet Adapter, that are intended to have a QSFP28/ QSFP+ port used with DACs/ AOCs or as four separate connections.

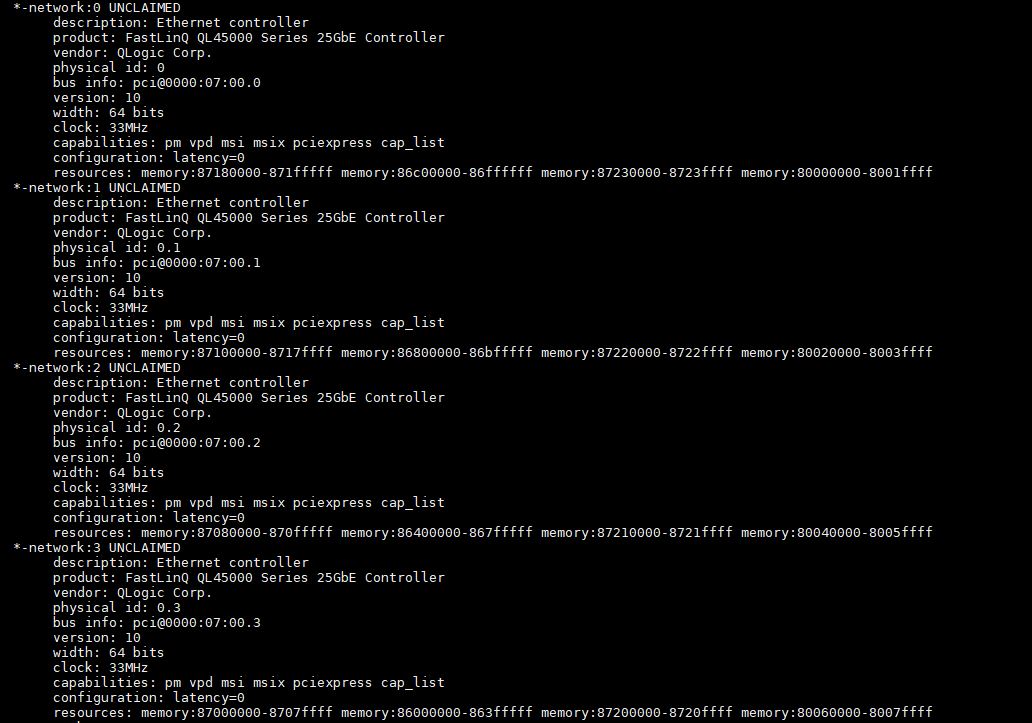

Although you can see one physical port above, you can see the NIC as four separate 25GbE devices not just a 100GbE device:

The important aspect of a breakout AOC is that one can do this optical splitting using just the cable instead of needing some sort of breakout device such as an optical cassette. Saving on additional components such as those cassettes to do this breakout is a prime reason why we sometimes see AOCs.

How Far Do AOCs Reach?

This is a bit dependent on the type of AOC and the vendor. Still, due to practical limitations, we generally see AOCs in the 1m to 300m range. Realistically, that lower limit is often 10m or higher because below that distance a DAC can make more sense. On the upper end, the challenge is often that the fixed length of the AOC means one has to run a cable with a connector attached across a long distance, and one also has to measure that distance accurately because the cable cannot be trimmed or extended.

Realistically, that is a big reason that most in the industry use DACs for in-rack and then standard pluggable optics for rack-to-rack communication. The cost of having to troubleshoot a stuck pull or re-run a cable that is too short outweighs any savings one usually gets with an AOC.

The AOC versus DAC Conceptual Model

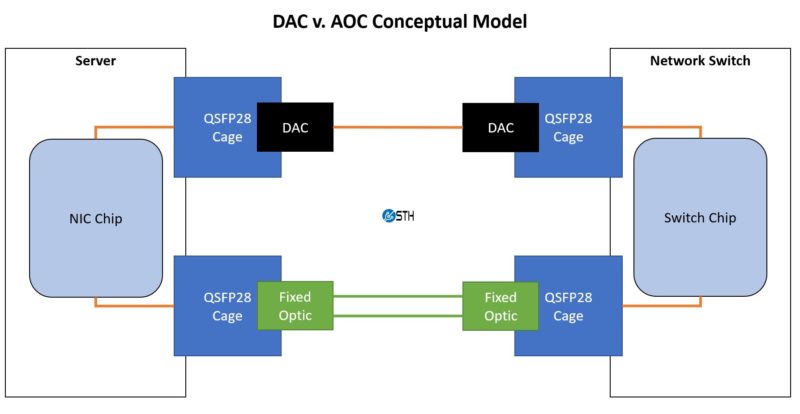

Just to see the difference, here is an updated version of the conceptual model that we used in the DAC article, this time with the AOC portion below:

Here we are representing copper/ electrical communication with orange and the fiber is green. Using DACs, the transition between the modules (here QSFP28) and the chips in the larger systems is copper to copper. On the active optical cable, we have the fixed optical pathways but that communication still needs to transition from copper within the switch to optical communication over the AOC.

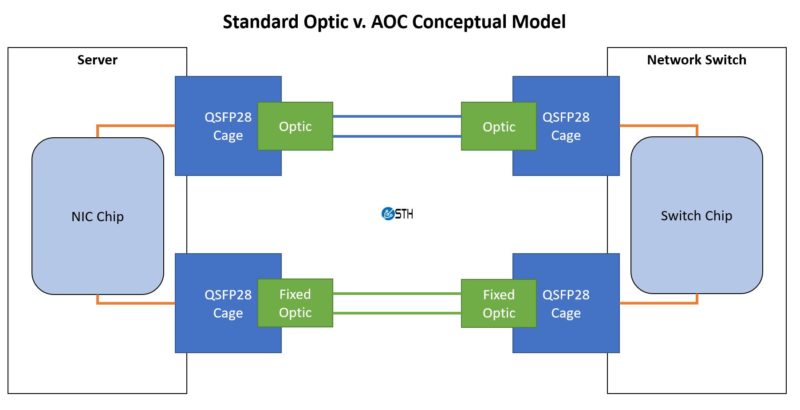

Taking a quick look at the difference between standard pluggable optics and the AOC, we have the fiber that runs between the two optical transceivers as fixed in the AOC and customizable on the traditional optical run.

Since active optical cables still require the same copper to photonic conversion at either end, many of the cost savings that are realized with DACs are not realized with AOCs. The lack of flexibility in AOC deployments is one of the reasons they are not as popular.

What Kind of AOC Should You Look For?

There are two big items we would suggest looking for. The first is speed. For Ethernet, here is the common set:

10GbE/ 40GbE Generation

- 10GbE to 10GbE: SFP+ to SFP+

- 40GbE to 40GbE: QSFP+ to QSFP+

- 40GbE to 4x 10GbE: QSFP+ to 4x SFP+

- 40GbE to 1x 10GbE: QSFP+ to SFP+

25GbE/ 50GbE/ 100GbE Generation

- 25GbE to 25GbE: SFP28 to SFP28

- 50GbE to 50GbE: QSFP28 to QSFP28

- 100GbE to 100GbE: QSFP28 to QSFP28

- 100GbE to 4x 25GbE: QSFP28 to 4x SFP28

- 100GbE to 2x 50GbE: QSFP28 to 2x QSFP28

- 100GbE to 1x 25GbE: QSFP28 to 1x SFP28

- 100GbE to 1x 50GbE: QSFP28 to 1x QSFP28

That should be most of the conversions you need to know. This model will work for generations such as QSFP56 and QSFP-DD and beyond as well.
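The lane arithmetic behind the list above can be sketched in a few lines of Python. This is only an illustration of the "quad" conceptual model, not a compatibility checker: the `FormFactor` class and the `breakout_fits` helper are hypothetical names, and the sketch assumes every link uses whole lanes at the module's native per-lane rate. It says nothing about whether a given switch or NIC actually supports breakout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FormFactor:
    """Hypothetical description of a pluggable form factor."""
    name: str
    lanes: int
    gbps_per_lane: int

    @property
    def max_gbps(self) -> int:
        # Total bandwidth is simply lanes times per-lane rate.
        return self.lanes * self.gbps_per_lane


# Values taken from the article: SFP+ is 10Gbps, SFP28 is 25Gbps,
# and the "Q" variants carry four of those lanes.
SFP_PLUS = FormFactor("SFP+", 1, 10)
QSFP_PLUS = FormFactor("QSFP+", 4, 10)
SFP28 = FormFactor("SFP28", 1, 25)
QSFP28 = FormFactor("QSFP28", 4, 25)


def breakout_fits(host: FormFactor, far_end: FormFactor,
                  count: int, gbps_each: int) -> bool:
    """Return True if `count` links of `gbps_each` fit in the host module.

    Assumption: each link occupies whole lanes at the host's per-lane rate,
    and both ends run the same per-lane rate.
    """
    if far_end.gbps_per_lane != host.gbps_per_lane:
        return False
    if gbps_each % host.gbps_per_lane != 0:
        return False
    lanes_per_link = gbps_each // host.gbps_per_lane
    if lanes_per_link < 1 or lanes_per_link > far_end.lanes:
        return False
    return count * lanes_per_link <= host.lanes


if __name__ == "__main__":
    print(QSFP28.max_gbps)                       # 4 lanes x 25Gbps
    print(breakout_fits(QSFP28, SFP28, 4, 25))   # 100GbE to 4x 25GbE
    print(breakout_fits(QSFP28, QSFP28, 2, 50))  # 100GbE to 2x 50GbE
    print(breakout_fits(QSFP28, SFP28, 5, 25))   # more links than lanes
```

Under these assumptions, a 50GbE link is treated as two 25Gbps lanes, which is why it needs a QSFP28 end in the list above and not an SFP28 end.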

The second item is vendor compatibility. Many switch, router, server, storage, and NIC vendors lock their devices so that they only work with the vendor’s more expensive validated optics. One could connect a Cisco router to an HPE switch, for example, by placing a Cisco QSFP28 optic in the Cisco router and an HPE QSFP28 optic in the HPE switch. Then one can run a cable between them.

With AOCs, it is trickier since both ends are fixed to a fiber cable. As a result, vendor-locked devices need to see the correct coding on the AOC end plugged into them, and that can be more troublesome when connecting gear from different vendors. With standard pluggable optics, one can simply plug the correctly coded optics into respective ports, then connect a passive cable between them. This is a big flexibility disadvantage of AOCs versus standard optics.

Final Words

Hopefully, this helps you understand a bit more about AOCs. We certainly see DACs more often in the data center these days than AOCs. We think that is likely due largely to the fact that AOCs lack the flexibility of standard pluggable optical modules plus a passive cable. One also does not get the cost savings of maintaining an all-copper connection that one gets with DACs. Still, we wanted to cover the topic for our readers.

Just taking a quick search about, these pre-terminated AOCs don’t seem to include a pulling eye, nor do the various sites seem to offer eyes, grips or guide shrouds as accessories.

I would think that in addition to the usual concerns with fixed-length cables, the blocky connectors represent a non-trivial snag risk, and pull stresses would have to be relocated well aft of the connector.

Are AOCs & DACs universal for Ethernet, Fibre Channel & InfiniBand, or do they differ by use/signal?

Typo: “100GbE to 1x 50GbE: QSFP28 to 2x QSFP28”, should be “ 100GbE to 1x 50GbE: QSFP28 to 1x QSFP28”

Is there a way to tell whether a particular cable will be compatible with a particular device (card, switch, whatever)? I would really like to get my hands dirty with some old InfiniBand cards (circa ConnectX-2/3), but the prices of the cables are scary. I’d be willing to pay if I knew they’d work, but how can I tell?

@Nikolay Mihaylov

(1) On eBay go with a Mellanox ConnectX-3 MCX354A-FCBT VPI FDR. Provides up to 56Gbps in IB mode, up to 45GbE in IPoIB. It’ll cost you from $50 to $80 depending on vendor. Mellanox ConnectX-2 is too old.

On Linux you can either install the Mellanox package or even simpler:

sudo apt install rdma-core opensm ibutils ibverbs-utils infiniband-diags perftest qperf mstflint

This is for Debian or Ubuntu. For RHEL check their docs for the correct yum incantation.

Then configure the card with the regular nmtui utility (ncurses-based UI in the console) or whatever other tool you use to configure your NICs. Inside the nmtui dialog INFINIBAND section use the “connected” transport mode and enter 65520 bytes for the MTU.

You’ll need two cards, one for each PC. Hopefully your PCs are “modern” meaning current generation CPU or two or three previous generations. You’ll need a PCIe Gen 3 x8 electrical slot (can be a x16 slot). On desktop mobos, you might need to move the GPU to the 2nd x16 slot and put the NIC in the first one. In doing so both the GPU and the NIC will get x8 electrical, which you can check with:

sudo lspci -vv -s [slot] | grep Width

which will return the LnkCap and LnkSta.
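For anyone who wants to check this in a script, here is a minimal Python sketch that pulls the speed and width out of the LnkCap and LnkSta lines. The `parse_link_widths` function is a hypothetical helper, and the `SAMPLE_OUTPUT` text is an illustrative example of what such lines typically look like, not output captured from a real card. The exact formatting can vary between lspci versions, so treat the regular expression as an assumption to adjust.

```python
import re

# Matches "LnkCap:" or "LnkSta:" followed by "Speed <n>GT/s" and "Width x<n>".
# The colon right after the name keeps LnkCap2/LnkSta2 lines from matching.
LINK_RE = re.compile(
    r"(LnkCap|LnkSta):.*?Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)"
)


def parse_link_widths(lspci_text: str) -> dict:
    """Return {"LnkCap": (GT/s, lanes), "LnkSta": (GT/s, lanes)} when present."""
    result = {}
    for line in lspci_text.splitlines():
        match = LINK_RE.search(line)
        if match:
            name, speed, width = match.groups()
            result[name] = (float(speed), int(width))
    return result


# Illustrative sample only (hypothetical): a Gen 3 x8 card in a x4 slot.
SAMPLE_OUTPUT = """\
    LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L0s, Exit Latency L0s unlimited
    LnkSta: Speed 8GT/s (ok), Width x4 (downgraded)
"""

if __name__ == "__main__":
    links = parse_link_widths(SAMPLE_OUTPUT)
    cap_speed, cap_width = links["LnkCap"]
    sta_speed, sta_width = links["LnkSta"]
    if sta_width < cap_width or sta_speed < cap_speed:
        print(f"Link is running below capability: x{sta_width} of x{cap_width}")
    else:
        print(f"Link is running at full capability: x{sta_width}")
```

LnkCap is what the card can do and LnkSta is what was actually negotiated, so a LnkSta width lower than LnkCap usually means the slot is electrically narrower than the card.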

(2) Cables: a DAC Mellanox MC2207128-003 3-meter can be had for $25 or more on eBay. You can connect the two cards directly. No need for a switch. For longer cables I suggest optical. The Mellanox web site store is full of the model numbers, then just look for them on eBay. A Mellanox 5M FDR VPI QSFP Active Optical Cable MC220731V-005 5-meter will cost you $80. Tip for used AOC: verify that the cable is in good shape right away and if not return it right away (no pinch, still correctly attached to each plug). A 10-meter will cost you at least $220. Prices have been going up since the beginning of COVID-19, it’s pure scalping because these cables were made a long time ago. Tip: I usually wait for the “good” deal, like for instance once a vendor was selling a pack of 12 x 3-m DAC for $150. That was an incredible $12.50 per cable. I bought it and they were all OK. These are prices for FDR cables. QDR cables are less expensive (but speed is 40Gbps IB and about 25-32 GbE IPoIB depending on computer).

My advice: go with FDR all along the chain cards->cables->switch.

You can then verify with iperf3 that you have +-45 GbE between the two PCs.

If your PCs are old and/or you only have PCIe Gen 2 and/or you only have x4 electrical available the speed will be 10+ or 20+ GbE.
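The reason the slot matters can be sketched with some quick arithmetic. The per-lane rates and line encodings below are the standard PCIe figures (Gen 1 and Gen 2 use 8b/10b, Gen 3 uses 128b/130b); the `pcie_payload_gbps` function name is just a hypothetical helper. The results are raw link ceilings before packet and protocol overhead, so real throughput will land below them.

```python
# Per-lane signaling rate in GT/s and line-encoding efficiency per generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_payload_gbps(gen: int, lanes: int) -> float:
    """Approximate link ceiling in Gbps after line encoding only."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    return gt_per_s * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in [(3, 8), (3, 4), (2, 8), (2, 4)]:
        print(f"Gen {gen} x{lanes}: {pcie_payload_gbps(gen, lanes):.1f} Gbps")
```

A Gen 3 x8 slot has a ceiling of roughly 63 Gbps, which leaves room for an FDR link, while Gen 2 x8 tops out at 32 Gbps and Gen 2 x4 at 16 Gbps. That is in line with the 10+ or 20+ figures above.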

If everything is fine and you get around 45 GbE in one direction and only 35 GbE in the other direction it will be due to the internal configuration (e.g. IRQ affinity and other factors). I’m still reading docs to find out which configuration I should use for the optimal results.

You can download documentation and the Mellanox Windows driver from the Mellanox web site, free of charge.

Final note: used ConnectX-4 100Gbps is still too expensive, card starts at $300.

@Nikolay Mihaylov

If you need more pointers and a how-to, go here:

https://magazine.odroid.com/article/networking-at-ludicrous-speed-blasting-through-the-10000mbps-network-speed-limit-with-the-odroid-h2/

I wrote that back in 2019, it’s about an extreme edge case of using IB with a SBC but most of the text applies to “normal” PCs.

I went totally knowledge-blind when I started so anybody can do it :-)

Have fun!

@domih – thanks a bunch! The thing is, I already have the controllers – HP 544+ FLR. They are crazy cheap (got them for 11EUR each) as long as you have an HP server to plug them in. And are reported as ConnectX-3 on PCIe3 x8. All good except the cables. So thanks for the info on those. But I live in Europe and IT stuff is more expensive here plus the customs formalities are a nightmare that I want to stay away from. It’s just weird that a single cable costs more than the two controllers combined.