Today is the day we have been waiting for since 2018, or for some since 2015. This is the first major architectural refresh of the Intel Xeon D line since then, codenamed “Ice Lake-D”. With this new generation, we get not just new cores, but also PCIe Gen4, 100Gbps of networking (often manifesting as 25GbE connectivity), and a host of new accelerators. Intel is targeting this generation at networking and 5G applications, so let us get into the details.

Quick Background

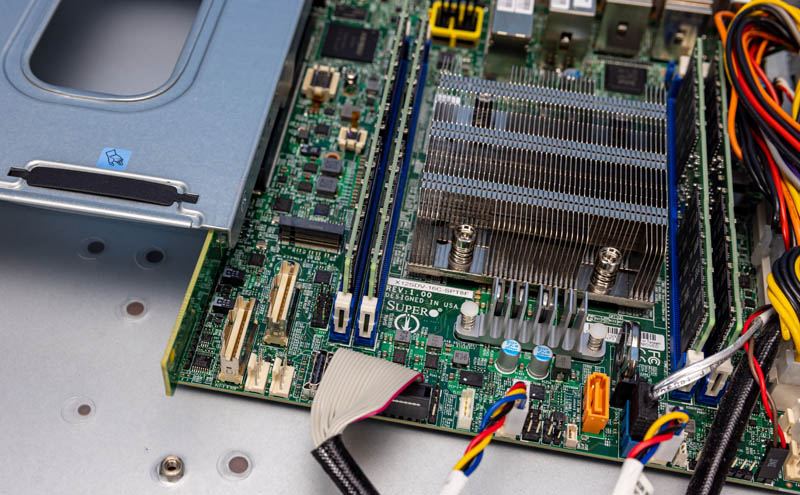

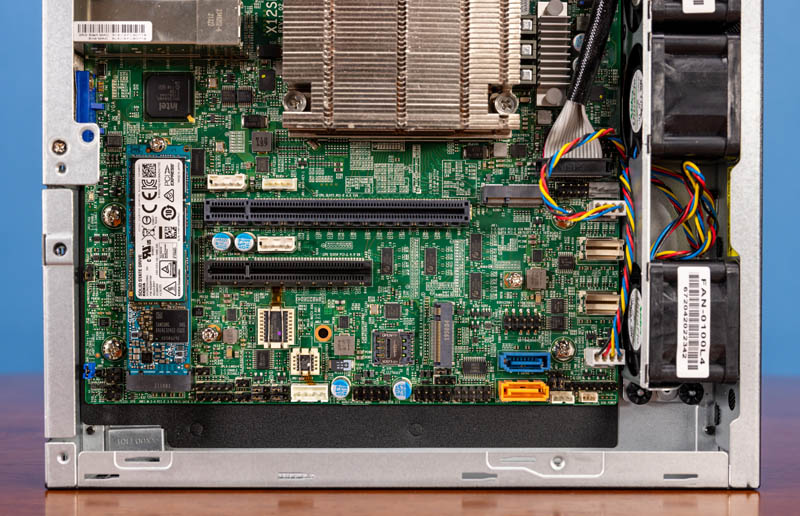

At STH, we have been working with four platforms over the past few weeks. We still have some pre-release firmware, but we are going to give you a hands-on taste of some of the platforms.

We need to give a quick thank-you to Intel for sponsoring this piece and getting us Fort Columbia, as well as to Supermicro, which sponsored it with the three X12SDV-based platforms. Expect more coverage of these in the coming weeks as we get all of the firmware for our formal reviews.

We also have a video version of this piece.

Intel Ice Lake-D – Intel Xeon D-2700 and D-1700 Overview

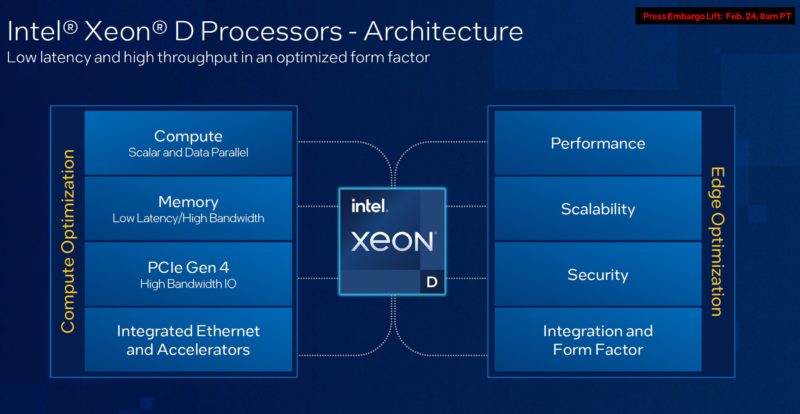

Intel Xeon D is designed for edge applications. Most who want to run large virtualization servers will want to use standard socketed Xeon Scalable processors. The edge has different requirements, so Intel effectively takes the Xeon Scalable IP and packages it in a way that is more suitable for edge deployments.

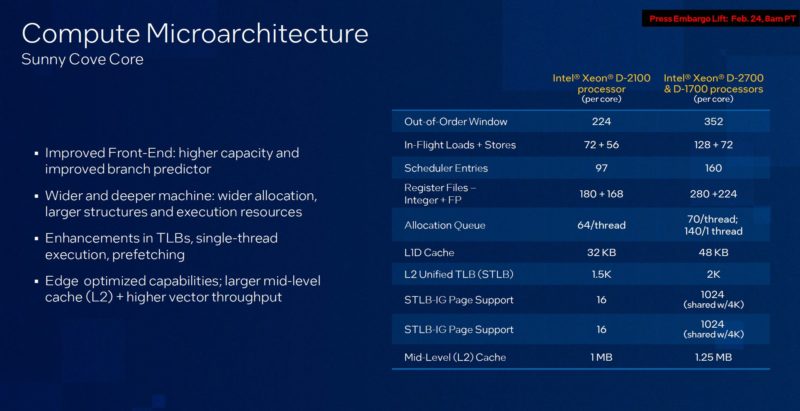

The first major difference between the previous generation and the new generation is the compute core. The Intel Xeon D-2700 and D-1700 series processors use Sunny Cove cores. These are effectively a generation or two newer than the cores in the D-2100 and D-1500/D-1600 series parts (Cascade Lake was very similar to Skylake).

Here is an Ice Lake-D LCC (D-1700) chip next to a 3rd Generation Intel Xeon Scalable part. While they may look similar, there is more going on here. The Ice Lake-D part also effectively incorporates the Lewisburg Refresh PCH from a mainstream platform, so it replaces more than just the main compute chip of a mainstream server. The key, though, is that since these share an architecture, workloads that use VNNI for AI inference acceleration can run both in the data center and at the edge.

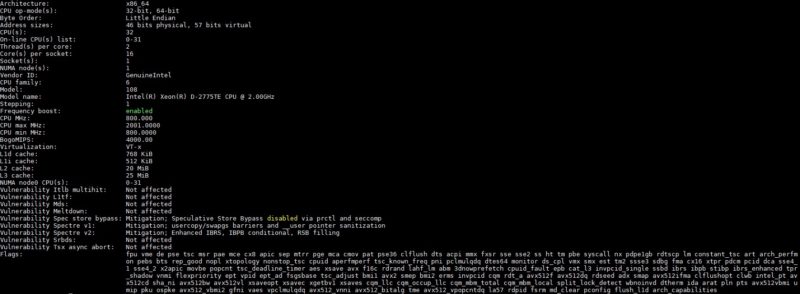

On the Xeon D-2700 side, here is a quick look at the Intel Xeon D-2775TE’s lscpu output, where you can see the feature flags.
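For readers who want to check the same thing on their own Linux systems, here is a minimal sketch that reads `/proc/cpuinfo` and reports a handful of flags relevant to this generation. The flag spellings follow the Linux kernel's naming; the particular list and the `parse_flags`/`report` helper names are our own illustrative choices. Whether a flag such as `sgx` or `tme` appears can also depend on BIOS settings and kernel version.

```python
from pathlib import Path

# Our own selection of flags to look for; names follow Linux /proc/cpuinfo spelling.
FLAGS_OF_INTEREST = {
    "avx512_vnni": "VNNI (DL Boost) INT8 dot products",
    "vaes": "vector AES",
    "vpclmulqdq": "vector carry-less multiply",
    "sha_ni": "SHA extensions",
    "gfni": "Galois field instructions",
    "sgx": "Software Guard Extensions",
    "tme": "Total Memory Encryption",
}


def parse_flags(cpuinfo_text: str) -> set:
    """Return the flag set from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line found (e.g. non-x86 or unexpected format)


def report(flags: set) -> dict:
    """Map each flag of interest to whether it is present."""
    return {name: name in flags for name in FLAGS_OF_INTEREST}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        for name, present in report(parse_flags(path.read_text())).items():
            status = "yes" if present else "no"
            print(f"{name:12} {status:3}  {FLAGS_OF_INTEREST[name]}")
```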

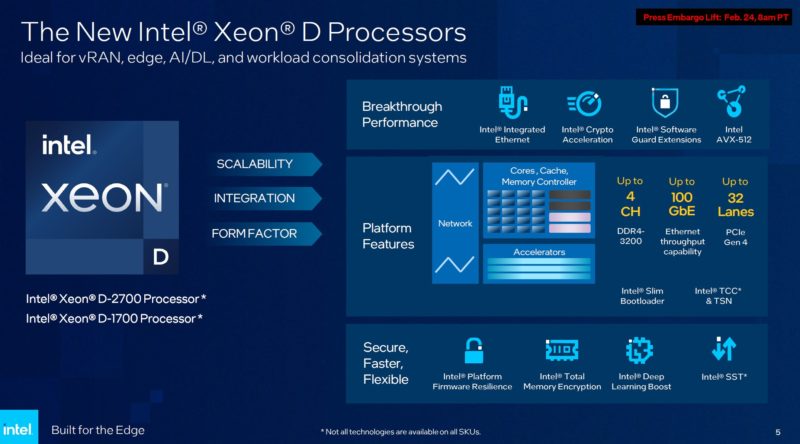

Here is the real overview slide. Intel has a host of features in the new platform that are big upgrades over its predecessors. As of the evening before the launch, we did not have the final SKU list, but not all of the technologies will be available on every SKU. That is basically required in this space, since some SKUs include crypto offloads that cannot be shipped to certain places.

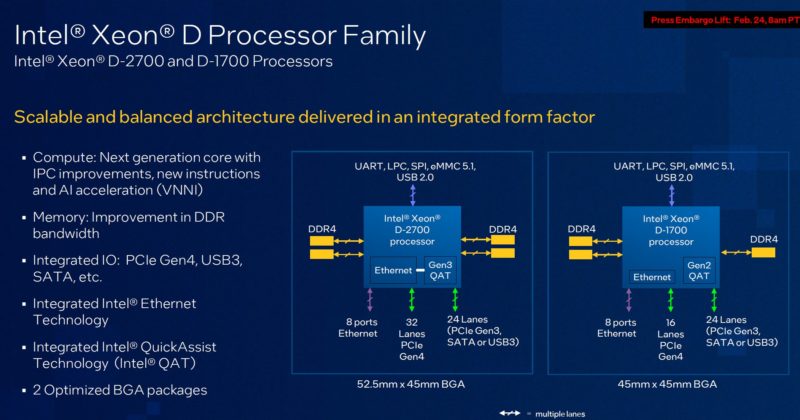

Diving into the details, the D-2700 and D-1700 really are different chips. They have the same cores and high-speed I/O capabilities, but things like the QAT generation, memory speed support, and DDR4 channel count are quite different.

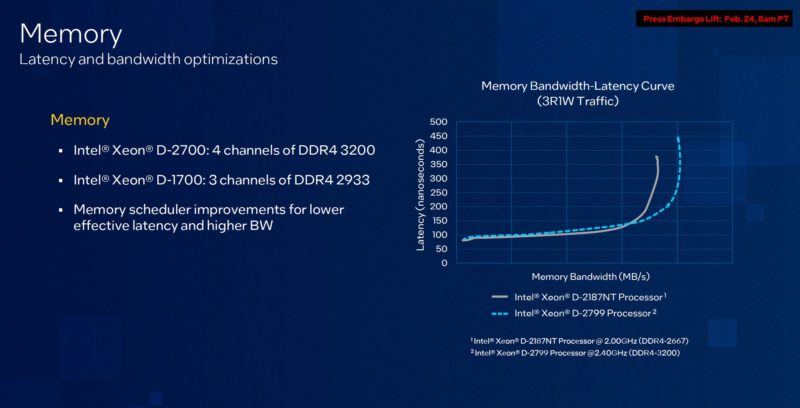

The memory situation is very interesting. The Xeon D-2700 series gets quad-channel memory at up to DDR4-3200 speeds. The Xeon D-1700 gets three channels at up to DDR4-2933. In practice, the D-1700 platforms we have seen are dual-channel rather than three-channel, but the option is very interesting. The D-2700 series also gets more memory capacity thanks to its additional channels. The right comparison for the D-1700 series is the D-1500/D-1600 series; against those, the new generation not only gets faster memory speeds but also supports higher-capacity 64GB DIMMs, so 256GB is possible. Both new chip families also support UDIMMs. All of the memory support will, of course, depend on platform vendor support.
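To put rough numbers on those configurations, here is a small back-of-the-envelope sketch of theoretical peak bandwidth and capacity. It assumes a 64-bit (8-byte) data path per channel, ignores ECC bits and real-world efficiency, and assumes four DIMM slots for the 256GB figure; these are theoretical peaks, not measurements.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int) -> float:
    """Theoretical peak in GB/s (decimal): channels x MT/s x 8 bytes per transfer."""
    return channels * mega_transfers * 8 / 1000


def max_capacity_gb(dimm_slots: int, dimm_gb: int) -> int:
    """Total capacity if every slot is populated with the same DIMM size."""
    return dimm_slots * dimm_gb


CONFIGS = {
    "D-2700, 4ch DDR4-3200": (4, 3200),
    "D-1700, 3ch DDR4-2933": (3, 2933),
    "D-1700 boards seen so far, 2ch DDR4-2933": (2, 2933),
}

if __name__ == "__main__":
    for label, (channels, mts) in CONFIGS.items():
        print(f"{label}: {peak_bandwidth_gbs(channels, mts):.1f} GB/s")
    # Assumption: four slots of 64GB DIMMs is how 256GB would be reached.
    print(f"4 x 64GB DIMMs: {max_capacity_gb(4, 64)} GB")
```

Under those assumptions, the quad-channel D-2700 works out to about 102.4GB/s, the three-channel D-1700 to about 70.4GB/s, and the dual-channel D-1700 boards to about 46.9GB/s.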

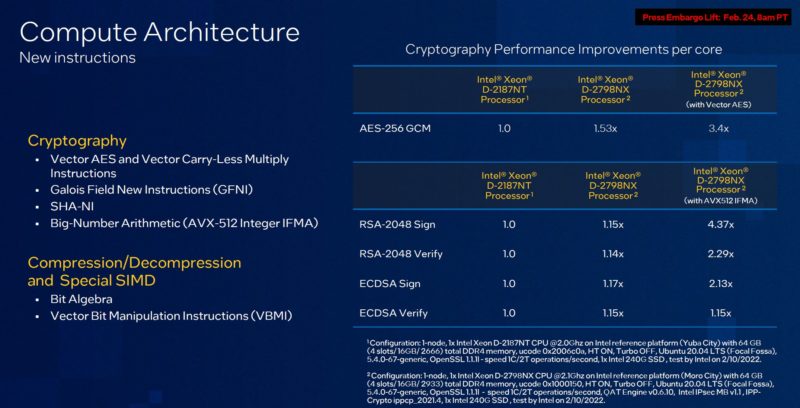

One point the two lines share is crypto offload. The Ice Lake cores add new crypto instructions that we have been looking at, and those can have a fairly large impact when software uses them.

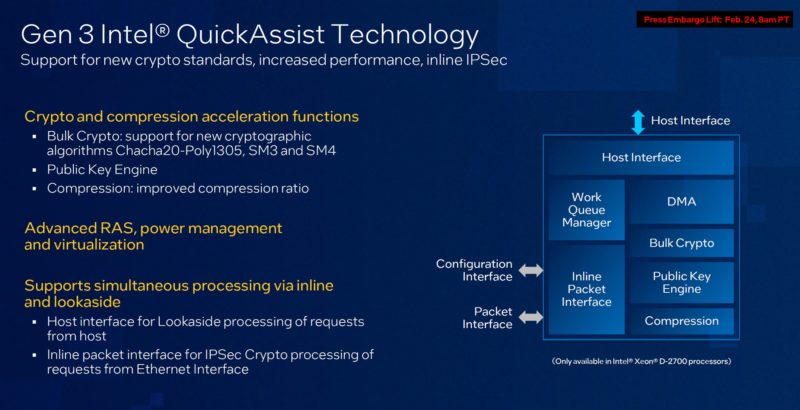

The big one, however, is QuickAssist Technology (QAT), a dedicated acceleration block for crypto and compression.

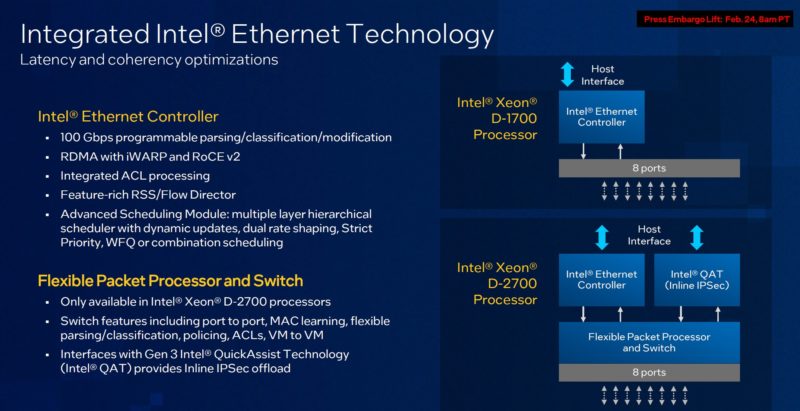

The integration of QAT and Ethernet differs between the D-2700 and D-1700 series. The Xeon D-1700 series has a more straightforward networking path. The Xeon D-2700 series can place a flexible packet processor and a built-in switch between the ports and the host, which can do things like pass IPsec traffic to QAT for offload. The new controller uses Intel 800 series NIC IP, which allows for up to 100Gbps plus features like RoCE v2 and iWARP for RDMA. The 800 series is a big step up from the 700 and 500 series NIC IP that Intel used in the previous generations.
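As a purely conceptual illustration of the kind of steering decision such a packet processor makes, the sketch below classifies raw IPv4 packets by protocol number and sends IPsec ESP traffic (IP protocol 50) to a hypothetical `qat` queue and everything else to the host. This is not Intel's pipeline or programming interface; the queue names and the `classify_ipv4` function are made up for illustration, and the sketch ignores UDP-encapsulated IPsec (NAT traversal) and IPv6.

```python
ESP_PROTOCOL = 50  # IANA-assigned IP protocol number for IPsec ESP


def classify_ipv4(packet: bytes) -> str:
    """Hypothetical steering: 'qat' for ESP, 'host' for other IPv4, 'drop' if malformed."""
    if len(packet) < 20:
        return "drop"  # too short to hold a minimal IPv4 header
    version = packet[0] >> 4
    header_len = (packet[0] & 0x0F) * 4  # IHL field counts 32-bit words
    if version != 4 or header_len < 20 or len(packet) < header_len:
        return "drop"
    protocol = packet[9]  # protocol field sits at byte offset 9 of the IPv4 header
    return "qat" if protocol == ESP_PROTOCOL else "host"


if __name__ == "__main__":
    # Minimal 20-byte IPv4 header: version 4, IHL 5, TTL 64, protocol 50 (ESP).
    esp_header = bytes([0x45, 0, 0, 20, 0, 0, 0, 0, 64, 50, 0, 0,
                        10, 0, 0, 1, 10, 0, 0, 2])
    print(classify_ipv4(esp_header))
```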

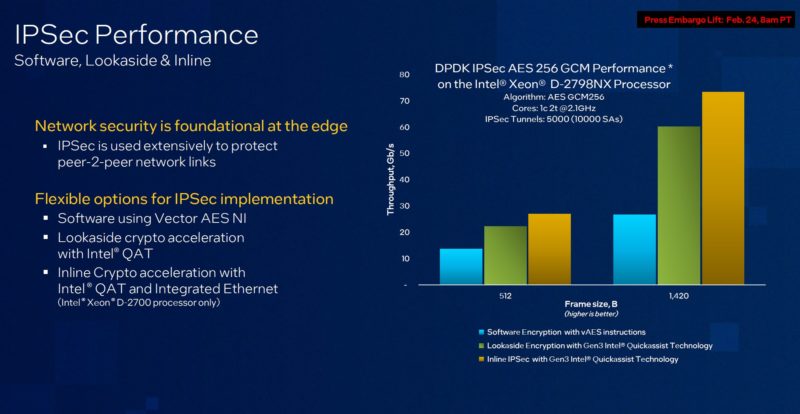

Here is a quick look at IPsec performance using different acceleration technologies.

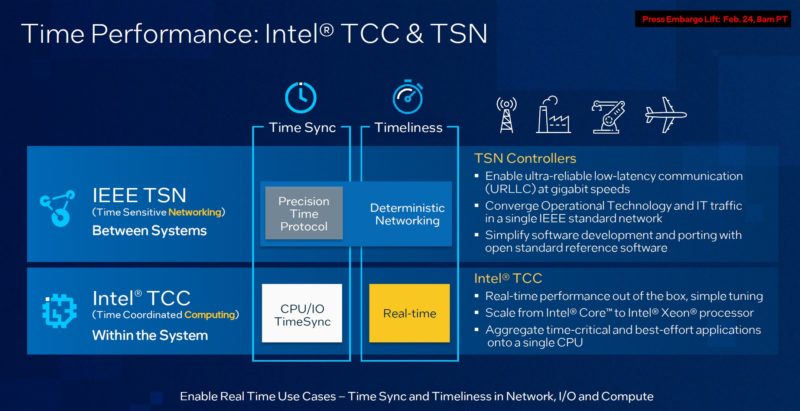

Also, since this line is designed for the embedded market, there are SKUs that support Time Coordinated Computing (TCC) and Time-Sensitive Networking (TSN). Many embedded markets are looking for more accurate time, and then for the ability to process based on that accurate time.
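Time-sensitive systems rest on clock synchronization. As a sketch of the underlying arithmetic, the function below computes clock offset and path delay from the four timestamps of an IEEE 1588 (PTP) two-way exchange, assuming the path delay is symmetric. TSN's timing profile, IEEE 802.1AS, measures link delay with a peer-delay exchange instead, so treat this as an illustration of the idea rather than how any particular Xeon D platform implements it.

```python
def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple:
    """
    t1: master sends Sync (master clock)
    t2: slave receives Sync (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives Delay_Req (master clock)
    Returns (offset of slave clock from master, mean one-way path delay),
    assuming the delay is the same in both directions.
    """
    forward = t2 - t1   # path delay + offset
    backward = t4 - t3  # path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


if __name__ == "__main__":
    # Nanoseconds: 500 ns path delay, slave clock running 200 ns ahead of master.
    print(ptp_offset_and_delay(0, 700, 1000, 1300))
```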

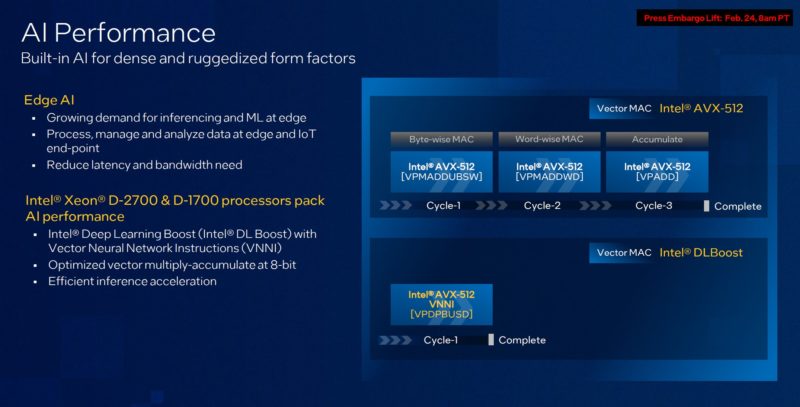

With this generation, we also get AI inference acceleration, since these are Sunny Cove/Ice Lake Xeon cores.

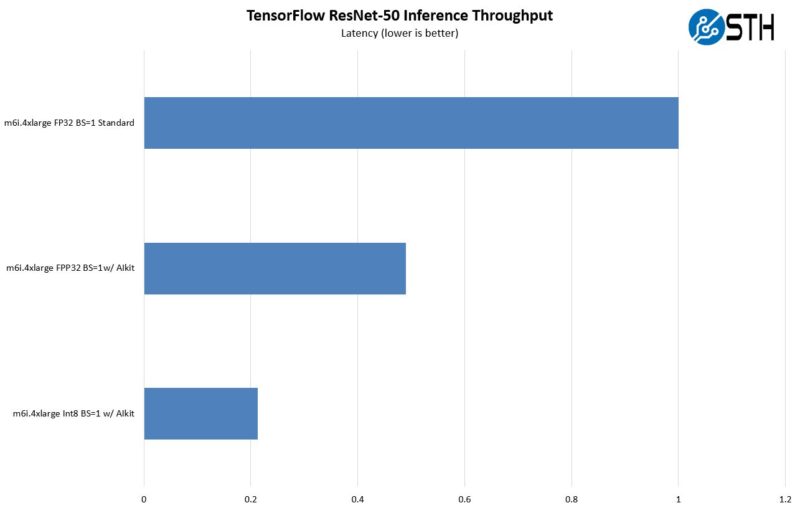

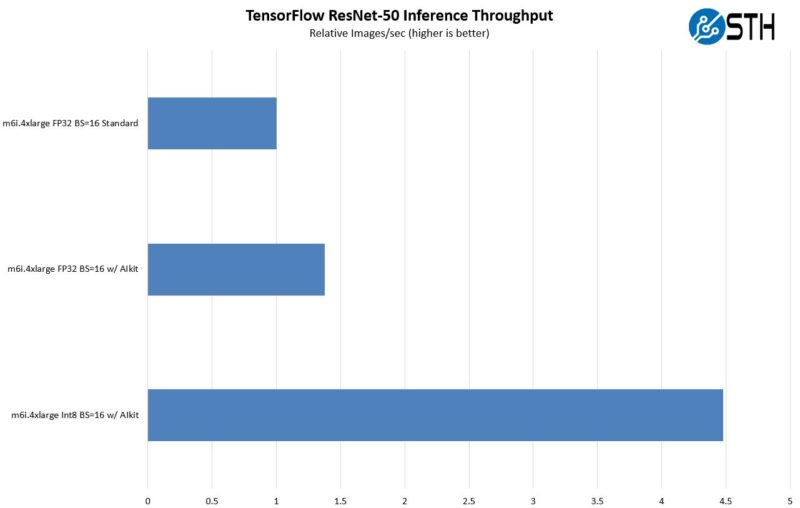

We ran some inference benchmarks using DL Boost in our Stop Leaving Performance on the Table with AWS EC2 M6i Instances piece. With VNNI acceleration, one gets better latency for lower-throughput workloads.

Throughput also goes up significantly. While this will not make AI inference accelerators obsolete, it does mean that there is some baseline AI acceleration available on all of the new Xeon D platforms.
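To show what VNNI actually adds, here is a scalar model of one 32-bit lane of the AVX-512 VNNI `VPDPBUSD` instruction: four unsigned 8-bit values are multiplied by four signed 8-bit values, the products are summed, and the result is added to a 32-bit accumulator with wraparound (the `VPDPBUSDS` variant saturates instead). A 512-bit register holds 16 such lanes, all processed by one instruction. This is a semantic sketch, not a performance model, and the helper names are our own.

```python
def wrap_int32(x: int) -> int:
    """Wrap an arbitrary Python int to signed 32-bit two's complement."""
    x &= 0xFFFFFFFF
    return x - 2**32 if x & 0x80000000 else x


def vpdpbusd_lane(acc: int, a_u8: list, b_s8: list) -> int:
    """One lane: acc + sum(a[i] * b[i]) over four u8 x s8 pairs, wrapping on overflow."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane takes exactly four byte pairs")
    if not all(0 <= a <= 255 for a in a_u8):
        raise ValueError("a_u8 must hold unsigned 8-bit values")
    if not all(-128 <= b <= 127 for b in b_s8):
        raise ValueError("b_s8 must hold signed 8-bit values")
    # Each u8 x s8 product fits in a signed 16-bit value; four of them fit in 32 bits.
    products = sum(a * b for a, b in zip(a_u8, b_s8))
    return wrap_int32(acc + products)


def dot_u8_s8(a_u8: list, b_s8: list) -> int:
    """INT8 dot product built from lane-sized chunks (length must be a multiple of 4)."""
    if len(a_u8) != len(b_s8) or len(a_u8) % 4:
        raise ValueError("inputs must be equal length and a multiple of 4")
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc


if __name__ == "__main__":
    print(vpdpbusd_lane(0, [1, 2, 3, 4], [1, 1, 1, 1]))
```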

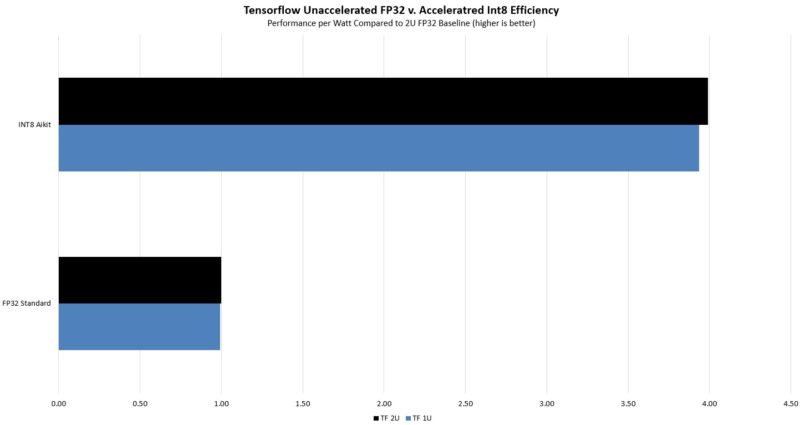

In edge deployments, power is often a big consideration. Earlier this week, in our Deep Dive into Lowering Server Power Consumption, we showed that running AI inference in INT8, instead of the unaccelerated FP32 one would use on the D-2100 or D-1500/D-1600 series, also leads to better power efficiency.
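INT8 inference starts with converting FP32 values to 8-bit integers. Here is a minimal sketch of symmetric, per-tensor INT8 quantization, one common scheme among several; production toolchains often use per-channel scales, zero points, and calibration data, and this is not necessarily the scheme behind our power testing. The helper names are illustrative.

```python
def quantize_symmetric(values: list) -> tuple:
    """Map floats to int8 in [-127, 127] using a single scale for the whole tensor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list, scale: float) -> list:
    """Recover approximate floats; each rounding error is at most scale / 2."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_symmetric(weights)
    print(q, scale)
    print(dequantize(q, scale))
```

The FP32 work then becomes integer multiply-accumulate on 8-bit values, which is exactly what VNNI speeds up.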

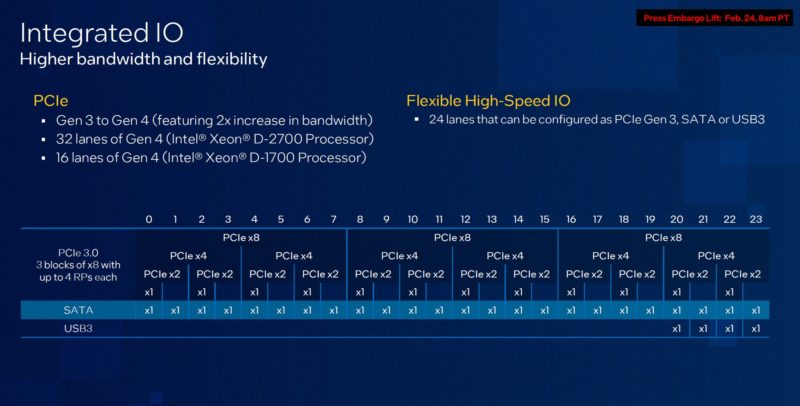

We have mentioned the integrated I/O. Part of the packaging magic of Xeon D is that it incorporates the PCH. The PCH is often a large chip that uses a lot of power and takes up a lot of motherboard space. Integrating it saves that space and lowers the power impact. As with the previous generation, we get flexible I/O that can be configured as PCIe Gen3, SATA, or USB 3, and this comes from Lewisburg-era IP.

PCIe in the new generation is PCIe Gen4, and the Xeon D-2700 series gets up to 32 lanes of PCIe Gen4 plus 24 lanes of lower-speed HSIO.
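For a sense of scale, here is a back-of-the-envelope calculation of raw link bandwidth. PCIe Gen3 runs at 8 GT/s and Gen4 at 16 GT/s per lane, both with 128b/130b encoding; the figures below are per direction and ignore packet and protocol overhead, so real throughput is lower. The 24-lane HSIO figure is a hypothetical upper bound, since platforms split those lanes among PCIe Gen3, SATA, and USB 3.

```python
def pcie_lane_gbs(gt_per_s: float) -> float:
    """Per-lane, per-direction bandwidth in GB/s after 128b/130b encoding (Gen3/Gen4)."""
    return gt_per_s * (128 / 130) / 8  # 8 bits per byte


GEN3_GT_S = 8.0
GEN4_GT_S = 16.0

gen4_x32 = 32 * pcie_lane_gbs(GEN4_GT_S)
hsio_all_gen3 = 24 * pcie_lane_gbs(GEN3_GT_S)  # hypothetical: every HSIO lane as PCIe

if __name__ == "__main__":
    print(f"PCIe Gen4 x32: ~{gen4_x32:.1f} GB/s per direction")
    print(f"24 HSIO lanes as PCIe Gen3: ~{hsio_all_gen3:.1f} GB/s per direction")
```

That works out to roughly 63.0GB/s per direction for 32 Gen4 lanes and about 23.6GB/s if all 24 HSIO lanes ran as Gen3.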

On most D-2700 and D-1700 series platforms, we expect to see higher-density x4 or x8 connectors rather than large numbers of individual SATA ports. These higher-density connectors allow for better PCIe Gen4 signaling, and when attached to the flexible HSIO lanes, they can also be reconfigured to support PCIe Gen3 or SATA, making the motherboards more versatile.

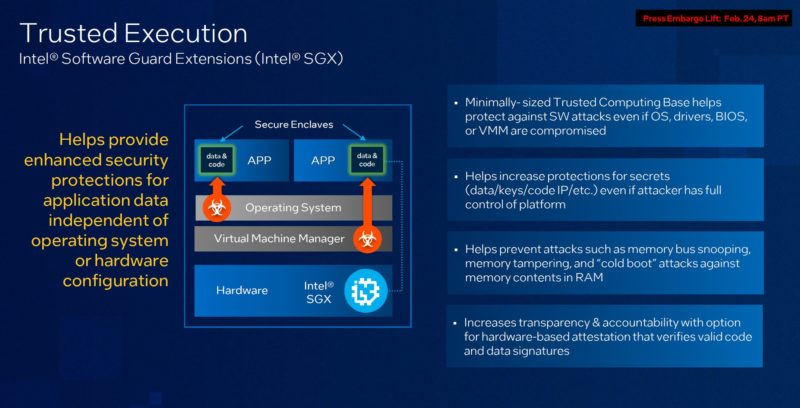

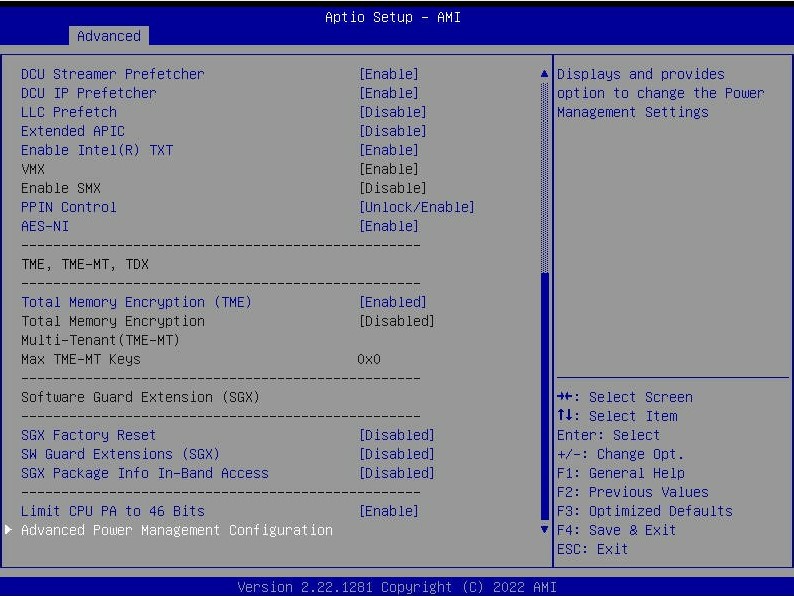

These chips also support Intel Software Guard Extensions (SGX), Intel’s secure enclave technology.

We saw SGX on our test platforms, as well as the option to enable Intel Total Memory Encryption (TME).

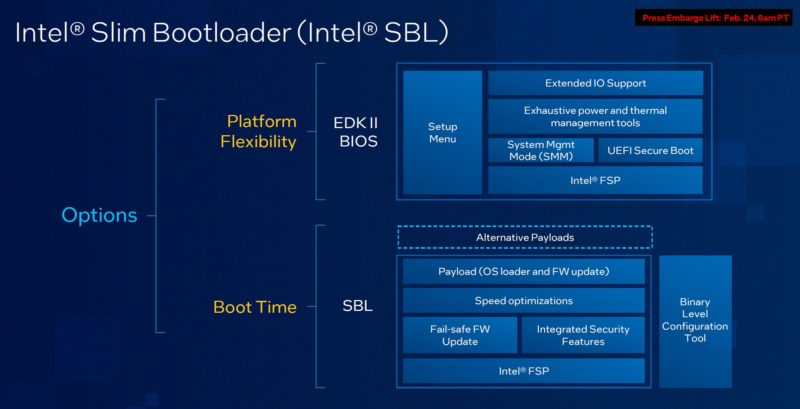

For the embedded market, Intel also offers Slim Bootloader to help speed up boot times, since critical systems sometimes require very fast boots.

Let us get to some hardware testing and take a look at the systems.
