5th Gen Intel Xeon v. 4th Gen Xeon XCC Power Consumption

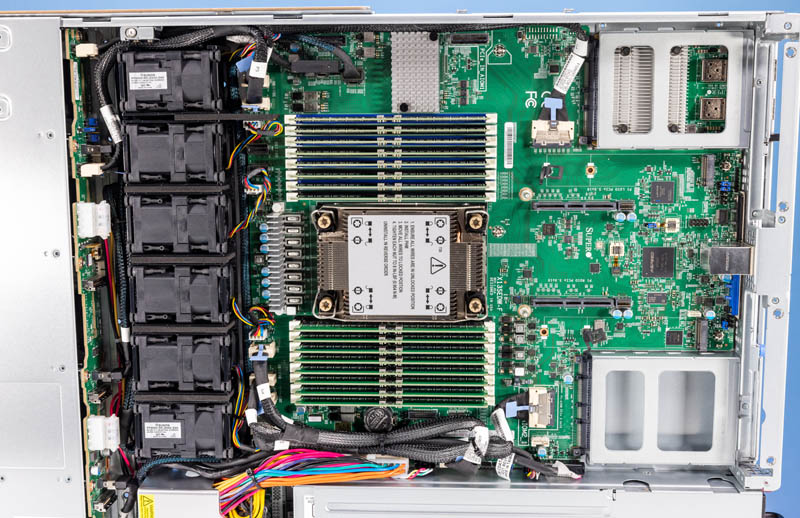

The question was how to reliably measure not just the performance, but also the system power consumption of the CPUs. For that, we went on an odyssey. We started with as barebones a Supermicro SYS-111C-NR as we could get.

We pulled all of the NICs from the system, and we only populated 8 of 16 DDR5 DIMM slots for full memory bandwidth with less power consumption. From there, we added back an ASUS USB 3 2.5GbE NIC for our low-power network. The net result was as minimal a configuration as we could get to help isolate the CPU power. We could not do anything about the PCH, and the ASPEED BMC uses 5-7W even when the server is powered off. We also used a Kioxia CM6 SSD. Still, we wanted to isolate the CPUs as much as possible.

Here are the results in a table that Alex made:

At idle, even with the most cores and the most cache, the 5th Gen Intel Xeon Platinum 8558U CPU package was showing around 67W, lower than the Xeon Gold 6414U. Adding 50% more cores, 20% more TDP, and 200MB more L3 cache actually reduced the power consumption at idle by about 17%.

One item to note is that we were fairly regularly getting 238-240W on the Gold 6414U package. We thought it might just be firmware, but we were using the same firmware and platform as the Platinum 8458P, so that did not make sense as a generational issue. Still, the trend was fairly close, and when we used other programs, we got closer to 249-250W, so it might have been a stress-ng issue with this part.

We showed that performance went up and power consumption went down at idle. A 24% increase in maximum power consumption on a relatively bare system (the server power consumption would go up with more NICs, drives, and so forth) is relatively little for getting so much more performance.

Key Lessons Learned

To be fair, when I first asked Intel if we could get the parts, I was skeptical. With only 50W more TDP, I was not sure that the new top-end Intel Xeon single-socket SKUs would offer a lot more performance even going from 32 to 48 cores.

I was wrong. The new Intel Xeon Platinum 8558U is considerably faster than the Xeon Gold 6414U. 50% more performance for around 50W more TDP, or 85W more at the server level, is super. Even with an almost crazy gain in single-socket generational performance, this is not a part that is marketed heavily. AMD has more cores per socket until the Xeon 6 Sierra Forest launch slated for Q2 2024. Even without performance per socket leadership, the generational performance gains at the top end of the Intel single-socket SKU stack are astounding.
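To put the performance and power figures on one scale, here is a minimal sketch of the performance-per-watt arithmetic. It assumes that the "50% more performance" figure and the 24% maximum system power increase quoted earlier describe the same server-level comparison; that pairing is our assumption, and the function name is purely illustrative.

```python
def perf_per_watt_gain(perf_gain: float, power_gain: float) -> float:
    """Relative change in performance per watt.

    Both arguments are fractional increases (0.50 means +50%).
    """
    return (1.0 + perf_gain) / (1.0 + power_gain) - 1.0


# Figures quoted in the article; pairing them is an assumption.
PERF_GAIN = 0.50   # "50% more performance"
POWER_GAIN = 0.24  # "24% maximum increase" in system power

gain = perf_per_watt_gain(PERF_GAIN, POWER_GAIN)
print(f"Performance per watt change: {gain:+.1%}")
```

Under those assumptions, performance per watt at full load improves by roughly a fifth. A heavier system with more NICs and drives would dilute the power increase further.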

While we get more performance, the pricing went up from the Gold 6414U at $2296 to the Platinum 8558U at $3720. The performance certainly went up to match that, and realistically, in a single-socket server like this, the CPU is less than half of the total cost. For most configurations, it means maybe 50-70% more performance for 85W more at the PDU and perhaps 20% more system cost.
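The pricing trade-off can be sketched the same way. The snippet below uses only the list prices and the rough "50-70% more performance" and "perhaps 20% more system cost" estimates from the paragraph above; the helper name and the framing as a ratio are our own illustration, not a measured result.

```python
# List prices quoted in the article (USD).
GOLD_6414U_PRICE = 2296
PLATINUM_8558U_PRICE = 3720

# Rough estimate from the article: "perhaps 20% more system cost".
SYSTEM_COST_GAIN = 0.20


def relative_perf_per_dollar(perf_gain: float, cost_ratio: float) -> float:
    """New perf-per-dollar divided by old perf-per-dollar (1.0 = unchanged)."""
    return (1.0 + perf_gain) / cost_ratio


cpu_cost_ratio = PLATINUM_8558U_PRICE / GOLD_6414U_PRICE
print(f"CPU price increase: {cpu_cost_ratio - 1.0:.1%}")

# The article's "maybe 50-70% more performance" range.
for perf_gain in (0.50, 0.70):
    per_cpu = relative_perf_per_dollar(perf_gain, cpu_cost_ratio)
    per_system = relative_perf_per_dollar(perf_gain, 1.0 + SYSTEM_COST_GAIN)
    print(f"+{perf_gain:.0%} performance: "
          f"{per_cpu:.2f}x per CPU dollar, {per_system:.2f}x per system dollar")
```

Looking only at the CPU, performance per dollar is roughly flat across that range. Measured against the whole server, it comes out clearly ahead, which is the point of the paragraph above.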

The one thing we really wish these parts had was accelerators like QuickAssist. Intel QAT support is now in Ubuntu and we have tested this before and found massive performance gains. It would be a big competitive move to position the lower-core count parts against AMD and Arm if these were enabled on the single-socket SKUs.

Still, it was shocking to see just how much better EMR was than SPR. Our best guess is that Intel is getting some power savings from the 2-tile versus 4-tile design, and that the saved power is going to the cores, allowing higher clock speeds.

Final Words

Of course, the world of server CPUs is always changing. Still, this was a great way to look at how Intel is expanding its single-socket market. We hope in future generations, Intel will continue to expand in this segment. There are a lot of lower power racks out there. We experienced this firsthand, which is what prompted this project. For those lower power racks, the single-socket server is going to increasingly be the answer, whether those are deployed today in the field or as lab nodes in the future.

Hopefully, we get an expanded single-socket lineup from Intel in the coming generations. When you look at the power consumption of modern servers and where we will be with 500W+ CPUs in the not-too-distant generations, there are simply a number of racks that will need lower power options. An easy way to lower power is to go single-socket. Even against that backdrop today, it is somewhat crazy that performance and power consumption got this much better without it being a highlight at the 5th Gen Xeon launch. At least we now have some idea of just how much better the 5th Gen versus 4th Gen XCC CPUs are.

It isn’t just the idle that’s big. It’s also that you’re getting much better performance per watt at the CPU and server levels.

In isolation this finding is marginally interesting but if you could add a comparison of performance/watt to future system tests and have a larger dataset to compare between AMD and Intel this becomes much more useful. Most systems in the wild have average loads between 20 and 40% so having idle, baseline, full load data would be very interesting.

Anecdotally I’m seeing lower idle and full load power consumption with Bergamo systems that are not stripped down (~115W/350W)

“Intel tends not to market these heavily as AMD does”

Since AMD “server” CPUs are only 1 or 2 sockets – a single socket is most of AMD’s business. And with the boneheaded way they connect 2 CPUs (64 PCIe lanes PER CPU) – with a single socket you get 128 PCIe lanes – with dual socket you get ..wait for it.. 128 PCIe lanes.

“In isolation, this finding is marginally interesting but if you could add a comparison of performance/watt to future system tests and have a larger dataset to compare between AMD and Intel this becomes much more useful.”

LOL. how about the ability to feed cores? AMD is terribad at that. But it is just a desktop CPU masquerading as a server CPU. There is a reason AMD has little to no exposure in the data center outside of AWS type systems (where Intel still outnumbers AMD 10:1)…

My current setup is all SPR – I did buy another storage server and am running EMR on that server – not sure there is enough of an uplift to replace ~40 or so SPR CPUs in the storage system/SAN.

“Most systems in the wild have average loads between 20 and 40%”

No. Pre virtualization, yes – now No. Maybe AMD can only get 20-40% due to the lack of ability to feed cores, poor memory subsystems and all those “extra” cores that do next to nothing. That which cannot eat cannot perform.

More interested in Granite Rapids than upgrading nearly new servers. PCIe5 is a major limitation at this point – and with PCIe6 being short term and with PCIe7 likely to be the next longer term (unlike PCIe4 which was a single-gen) bus – can’t come soon enough.

@Truth+Teller:

Emerald Rapids is limited to 1S or 2S. There are only 5 SKUs of Sapphire Rapids that are capable of 8S operation. If this was so important why did Intel remove it from EMR?

You’ve got it backwards: AMD’s desktop CPUs have server cores masquerading as desktop ones, and it was always the case for Zen. Intel’s P-cores are server cores as well, just without additional cache and AVX-512/AMX units.

I’m not sure where you got the AMD’s weak memory subsystem from when it outperforms Intel designs. How can Intel’s 8-channel design beat EPYC’s 12-channels? Not to mention that Intel still segregates memory speed by SKUs – SPR goes from 4000MT/s to 4800MT/s while every AMD SP5 CPU has the same speed – 4800MT/s. EMR brings the max to 5600, but it is still segmented. Even at that increased speed it still falls short in raw bandwidth.
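The raw bandwidth comparison in the comment above can be checked with a quick calculation. This is a sketch of theoretical peak numbers only, assuming a 64-bit (8-byte) data path per DDR5 channel and every channel running at the quoted speed; sustained bandwidth in real workloads will be lower. The function name is illustrative.

```python
def peak_bandwidth_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes each channel moves bytes_per_transfer bytes per transfer.
    """
    return channels * mt_per_s * bytes_per_transfer / 1000


# Channel counts and speeds as quoted in the comment above.
configs = {
    "SPR, 8ch DDR5-4000": (8, 4000),
    "SPR, 8ch DDR5-4800": (8, 4800),
    "EMR, 8ch DDR5-5600": (8, 5600),
    "EPYC SP5, 12ch DDR5-4800": (12, 4800),
}

for name, (channels, speed) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(channels, speed):.1f} GB/s")
```

On these assumptions, 12 channels at 4800MT/s still come out ahead of 8 channels at 5600MT/s on paper.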

Thanks for the article. Well done. It’s good to hear that Intel is still producing advanced chips and staying in the hunt with AMD and the other Chip OEMs.

@Truth+Teller:

“Since AMD “server” CPUs are only 1 or 2 sockets – a single socket is most of AMD’s business. And with the boneheaded way they connect 2 CPUs (64 PCIe lanes PER CPU) – with a single socket you get 128 PCIe lanes – with dual socket you get ..wait for it.. 128 PCIe lanes.”

In a dual socket you can get up to 160 PCIe lanes with AMD. This is done by only using 3 connections between CPUs instead of 4. Multiple server companies allow this setup option. Oh and this has been an option since at least Milan.
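The lane counts in this exchange follow from simple arithmetic. The sketch below assumes 128 high-speed lanes per CPU and 16 lanes consumed per inter-socket link on each side; those two constants are assumptions chosen to match the 128, 160, and 96-lane figures quoted in the comments, not numbers taken from a vendor datasheet.

```python
LANES_PER_CPU = 128   # assumption: total high-speed lanes per socket
LANES_PER_LINK = 16   # assumption: lanes each inter-socket link uses per CPU


def dual_socket_lanes(links: int) -> tuple[int, int]:
    """Return (PCIe lanes left for devices, lanes used between sockets)."""
    inter_socket_per_cpu = links * LANES_PER_LINK
    pcie_total = 2 * (LANES_PER_CPU - inter_socket_per_cpu)
    inter_socket_total = 2 * inter_socket_per_cpu
    return pcie_total, inter_socket_total


for links in (4, 3):
    pcie, inter = dual_socket_lanes(links)
    print(f"{links} links: {pcie} PCIe lanes, {inter} inter-socket lanes")
```

Dropping from four links to three frees 32 lanes for devices at the cost of a quarter of the inter-socket lanes.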

“No. Pre virtualization, yes – now No. Maybe AMD can only get 20-40% due to the lack of ability to feed cores, poor memory subsystems and all those “extra” cores that do next to nothing. That which cannot eat cannot perform.”

This is just pure lies on your side. Even in a virtualized environment you won’t see overall usage much above 40-50% most of the time. You cannot have a cluster running at 100% across all servers all the time. If a server were to go down you wouldn’t have the minimum required N+1 to run everything. That would mean some or most VMs will crash.
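The N+1 argument puts a hard ceiling on average utilization. Here is a rough sketch, assuming identical hosts and a load that can be spread evenly across the survivors after a failure; real clusters usually keep extra headroom below this ceiling. The function name is hypothetical.

```python
def max_safe_utilization(hosts: int, spare_hosts: int = 1) -> float:
    """Highest average utilization that still fits after losing spare_hosts.

    Assumes identical hosts and evenly redistributable load.
    """
    if hosts <= spare_hosts:
        raise ValueError("need more hosts than spares")
    return (hosts - spare_hosts) / hosts


for hosts in (2, 4, 8):
    print(f"{hosts} hosts, N+1: at most {max_safe_utilization(hosts):.1%} average load")
```

A small cluster is capped well below 100% before any allowance for bursts or maintenance, which is consistent with the point that fleets rarely run near full load.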

“More interested in Granite Rapids than upgrading nearly new servers. PCIe5 is a major limitation at this point – and with PCIe6 being short term and with PCIe7 likely to be the next longer term (unlike PCIe4 which was a single-gen) bus – can’t come soon enough.”

How is PCIe 5 a major limitation right now? PCIe 5 was released in 2019 and not implemented until 2022. PCIe 6 specification wasn’t released until January 2022. As is normal the PCIe generation on servers is from a few years before that. It takes time to validate these new generations on the server hardware. Not to mention that CPUs being released now were beginning their designs back in 2020, you know when PCIe 6 wasn’t an official spec. We will probably get PCIe 6 in 2026 and PCIe 7 isn’t expected to be released until 2025 so look to 2028/29 for server CPUs running that. Overall a PCIe generation will run probably about 3-4 years now for a while.

“In a dual socket you can get up to 160 PCIe lanes with AMD. This is done by only using 3 connections between CPUs instead of 4.”

A couple extra PCIe lanes in exchange for crippling inter-socket bandwidth! Now that’s a patently AMD solution if I’ve ever heard one.

“A couple extra PCIe lanes in exchange for crippling inter-socket bandwidth! Now that’s a patently AMD solution if I’ve ever heard one.”

That isn’t true at all. A Milan (3rd Gen) based Epyc with 96 lanes of inter-socket bandwidth has 50% more inter-socket bandwidth than Naples (1st Gen) based Epyc. A Genoa (4th Gen) with 96 lanes has 50% more inter-socket bandwidth than Milan with all 128 lanes.

TruthTeller sounds like an Intel shill.

@Enrique Polazo, don’t forget his alter ego, GoofyGamer either! Also looking forward to follow-up posts from TinyTom, PerfectProphet and AllofyouareAmdumbs.

AI is where these CPUs shine. A100 80GB still go for 16k+ on ebay. Instead you can grab 2×8592 and a future proof amount of DDR5 and get probably 1/3~1/2 the tokens/s. Hopefully Granite Rapids could bring parity.

Yes you can divide the weights onto multiple GPUs and do pipeline parallelism to achieve good utilization. But when you start to go beyond transformers, into transformer-CNN hybrids or something very different, the software is not there.