Power Consumption

The server uses redundant 850W power supplies that are 80Plus Platinum rated. At this point, most power supplies over 500W that we see are 80Plus Platinum rated. Almost none are 80Plus Gold, and only a few are Titanium rated, although we will review a few servers with Titanium rated power supplies over the next few months.

The system is designed for a balanced workload. With 225W cTDP parts such as the AMD EPYC 7543 and EPYC 7713, we saw 72-75W at idle, 295-300W at 70% load, 392-400W at 100% load, and 408-414W maximum power consumption. The number that surprised us the most was the idle figure, which we expected to be higher. Once we got into Ubuntu 20.04.2 LTS, idle became reasonable for this class of machine.

To buyers of dual-socket Xeon E5-2600 V4 systems, those figures may seem shocking for a 1U server. At the same time, compared with the combined draw of the multiple systems that we would consolidate into a system like this, the power consumption is relatively low.
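To put the measured figures in terms of energy, here is a minimal sketch that converts the wall power ranges quoted above into annual kWh. It assumes the server holds a constant draw for a full year (8,760 hours), which is a simplification rather than anything we measured. The names `MEASURED_WATTS` and `annual_kwh` are ours, for illustration only.

```python
# Hours in a non-leap year; assumes the server runs continuously (an assumption).
HOURS_PER_YEAR = 24 * 365

# Wall power ranges quoted in the review, in watts: (low, high).
MEASURED_WATTS = {
    "idle": (72, 75),
    "70% load": (295, 300),
    "100% load": (392, 400),
    "max": (408, 414),
}


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh for a constant draw of `watts` held for `hours`."""
    return watts * hours / 1000


# Print the annual energy range for each measured operating point.
for label, (low, high) in MEASURED_WATTS.items():
    print(f"{label}: {annual_kwh(low):.0f}-{annual_kwh(high):.0f} kWh/year")
```

The same function can be applied to the measured draw of the older nodes being replaced, multiplied by the number of nodes, to compare against a single consolidated system.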

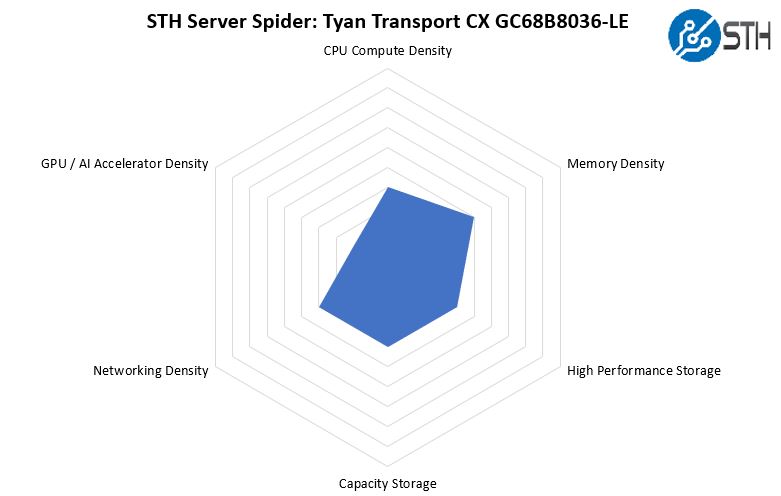

STH Server Spider: Tyan Transport CX GC68B8036-LE

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

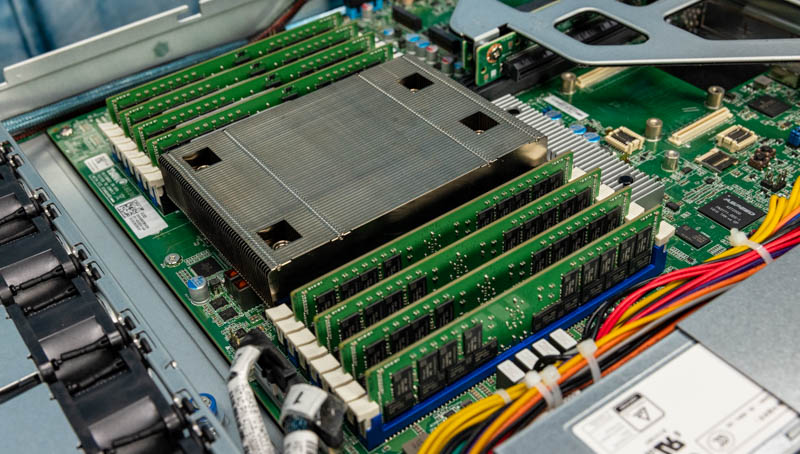

Often when we review 1U 4x 3.5″ bay platforms, they score poorly in “high-performance storage” because they do not have 2.5″ NVMe bays. With the Tyan Transport CX GC68B8036-LE, we get those four front NVMe bays plus two internal M.2 SSD bays so we can actually use this system for NVMe storage. We also get more memory density than with 1U single-socket servers that do not have 16x DIMMs per socket. Since this is scored more on density, it was surprising to see how much Tyan fit in what is a cost-optimized platform.

Final Words

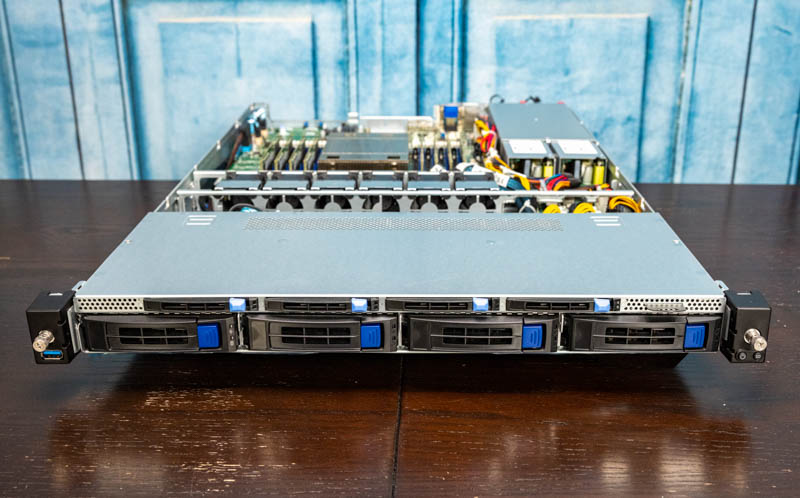

At first, one can look at the Tyan Transport CX we are reviewing here and ponder why it was built without full access to all of the AMD EPYC PCIe Gen4 lanes, or why it is still using OCP NIC 2.0 slots. Once you see the capabilities and performance, it becomes clear: this is the solution to consolidate low-end dual Xeon E5 V3/V4 platforms as well as the Xeon Silver series.

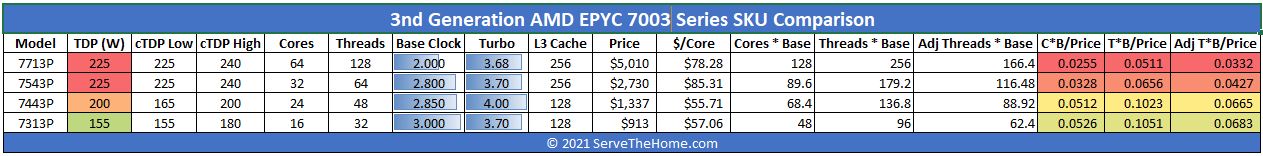

Typically one would purchase these in the $1500-1700 range as barebones, which is higher than some previous-generation systems but lower than today’s dual-socket systems. Moving to a single-socket AMD EPYC 7003 design with the “P” series SKUs starts to look more affordable at 2:1 to 4:1 system consolidation ratios. Even the EPYC 7713P plus this barebones system comes to around $6600 combined, which equates to around $1650 per replaced node for the CPU and server if you are consolidating 4:1. One is also using less rack space, fewer network ports, and potentially fewer PDU ports, at lower power consumption.
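The per-node math above is simple division, and a short sketch makes it easy to rerun for other consolidation ratios. It uses only the approximate $6600 CPU plus barebones figure from the text, so memory, drives, and NICs are not included. The function name `cost_per_replaced_node` is hypothetical.

```python
# Approximate EPYC 7713P + barebones cost quoted in the text, in USD.
# Memory, storage, and networking are NOT included in this figure.
COMBINED_COST_USD = 6600


def cost_per_replaced_node(total_cost_usd: float, nodes_replaced: int) -> float:
    """Spread the new system's cost across the older nodes it replaces."""
    if nodes_replaced < 1:
        raise ValueError("nodes_replaced must be at least 1")
    return total_cost_usd / nodes_replaced


# Consolidation ratios discussed in the text: 2:1 through 4:1.
for ratio in (2, 3, 4):
    per_node = cost_per_replaced_node(COMBINED_COST_USD, ratio)
    print(f"{ratio}:1 consolidation -> about ${per_node:.0f} per replaced node")
```

At 4:1 this reproduces the roughly $1650 per node figure, while at 2:1 the same hardware works out to about $3300 per replaced node.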

In our example using the lab load generation nodes (one of the systems is pictured below), consolidating ~4-year-old systems built around the Intel Xeon E5-2630 V4 onto this platform makes a lot of sense. The Intel Xeon Silver 4100 series and the lower part of the Xeon Silver 4200 series follow a similar pattern. Moving from 8 sockets across four nodes to a single 1U single-socket server is absolutely transformative to infrastructure.

As one may have noticed, we are saying 8:1 consolidation, but in terms of performance, we actually got better than 8:1 consolidation with the EPYC 7713(P) against the Xeon E5-2630 V4 nodes. We do not have four 2P systems of other types to compare, but based on our single-node data, dual-socket E5-2640 V4 and Xeon Silver 4114 systems should be candidates for the same 8:1 consolidation given the additional headroom on the EPYC 7713. That is an extrapolation from one system to four, while the Xeon E5-2630 V4 example is one we were able to test.

When the new AMD EPYC 7003 series was launched, it was easy to see that AMD did not have low TDP SKUs. The narrative that was difficult to show in dual-socket configurations is that AMD’s strategy is to offer an upgrade path from one or more dual-socket servers that are due for refresh into a single-socket node.

Tyan recognized that while we often focus on top-bin performance, much of the market purchases lower-cost CPUs. With the Transport CX GC68B8036-LE, Tyan has a system with the flexibility to accept hard drives and NVMe SSDs, along with plenty of networking, CPU cores, and memory capacity to offer these consolidation opportunities at a lower cost.

As we move to an era with rapidly expanding CPU and accelerator TDPs, this concept is going to become more important. Data center power and cooling capacity will start to dictate single-socket configurations. The challenge a system like this faces is that buyers are accustomed to purchasing dual-socket configurations and there is inevitably a lag between when architectures (should) change, and when that change makes its way into deployments. Our hope is that by reading STH, you have the knowledge to inform yourself and your colleagues regarding the fact that this model change is possible, and that it will become important to an increasing number of segments in the near future.

What a mouthful codename

Keep consolidating those E5 v4 nodes. Because of people like you, prices for E5 v4 systems are finally beginning to drop to reasonable levels on the second-hand market. My business is finally able to afford upgrading our E5 v2 servers.

Would love to see how these do in a switchless Azure Stack with the new OS.