The History of Virtualization – Supercomputers and Mainframes

[divider]

To set the scene – Computing in the 1950s-1960s.

Supercomputers in the 1950s were the size of several large filing cabinets. They used drum memory for fast storage, with hard drives such as the IBM RAMAC a distant second, if they were available at all. Working memory at the time was core memory, made up of small ferrite rings threaded onto a lattice of wires. The PCBs that went into the cabinets were large and held relatively few components. Whilst Jack Kilby invented the integrated circuit in 1958, it would take time for this breakthrough to find its way into widespread computer manufacturing. [Ed.: or Robert Noyce, co-founder of Intel, depending on the account you read.]

This was also a time when there was no direct input to a computer beyond flicking a few switches. Computers ran programs in batches, read from individual punched cards. The cards would either be loaded directly or have their contents copied onto large magnetic tapes. The mainframe would then be prepared to load a job, read the data from tape (or punched cards), execute the program and record the output to another tape. This output would then be offloaded and generally sent either to a printer or to a card punch.

There was no interaction between a running program and its user; it was a case of starting a program and waiting, fingers crossed, to see whether it produced the desired results hours or sometimes days later.

[divider]

Multi-Programming at UNESCO.

The concept of virtualization is believed to have evolved naturally from the ideas put forward by Christopher Strachey, the first Professor of Computation at Oxford University, in his paper ‘Time Sharing in Large Fast Computers’. The paper was read at the UNESCO conference on Information Processing in Paris in 1959.

Strachey described what he called multi-programming, a scheme in which programs could run without having to wait for peripherals, whilst also allowing a user to debug code. His concept was still centered on conventional batch processing, but it went on to describe architectural requirements such as memory protection and shared interrupts. This concept of multi-programming was to nudge computing research in a new direction.

Later, Strachey wrote…

‘When I wrote the paper in 1959 I, in common with everyone else, had no idea of the difficulties which would arise in writing the software to control either the time-sharing or multi-programming. If I had I should not have been so enthusiastic about them.’

[divider]

From MUSE to Atlas

There were fewer than 16 digital computers in the UK by 1955, and the lead Britain had built up during its golden years of computing, between 1948 and 1951, had been eroded. The machines relied on vacuum tubes, had a throughput of around 1,000 instructions per second and tended to have primary memories of 4K or less.

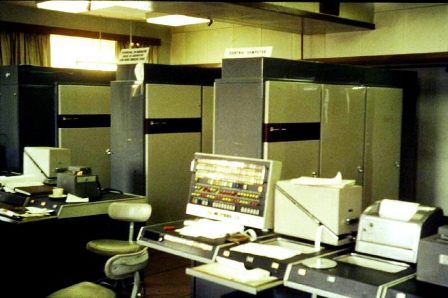

Copyright: Iain MacCallum.

It was partly this situation, along with a requirement by British physicists in 1956 for more computing power, that drove Tom Kilburn and his team at Manchester University (UK) to start their own supercomputing project. IBM was working on the IBM Stretch (7030) project, which was seen as a contender for the physicists' requirements. The Manchester team aimed to produce a multiuser machine capable of about 1 million instructions per second, with a minimum of 500K of primary memory.

The project was named MUSE, short for μsec (microsecond) Engine, and drove ahead despite the limited funds available to Tom Kilburn's team. Help came in 1959, when the British company Ferranti Ltd (pdf) joined the project in collaboration with the research team. Ferranti had grown to produce equipment for defence contracts, such as gyro gunsights and one of the first IFF (Identification Friend or Foe) radar systems. After branching into ‘high-tech’ devices and forming a computer department, they had previously collaborated with Manchester University on the Ferranti Mark I, the world's first commercially produced computer. Ferranti secured a £300K loan from the National Research Development Corporation (NRDC), and with the collaboration in place the project was renamed Atlas.

Copyright: Simon Lavington.

The first Atlas 1 was delivered to Manchester University in 1962. It used a total of 5,172 printed circuit boards measuring 20 cm x 15 cm (excluding peripherals), made up of just 58 different types. The final cost of the Atlas 1 was between £2m and £3m (equivalent to around £50m in 2012). For comparison, the IBM Stretch finally came with a $13.5m price tag, which later dropped to $7.78m because it missed its performance targets.

The Atlas was the first machine to use virtual memory (called a one-level store), and it also pioneered an underlying resource management component called the Supervisor. Programs passed special instructions, known as extracodes, to the Supervisor, which enabled it to manage hardware resources such as the allocation of processor time to individual jobs.

[divider]

M44/44X

IBM responded with a research project based at the IBM Thomas J. Watson Research Center (NY) called the M44/44X. The M44/44X was based on the IBM 7044 (the M44 portion of the name) and was able to simulate several 7044 systems (the 44Xs). A number of groundbreaking virtualization concepts were implemented in the M44/44X project, including partial hardware sharing, memory paging and time sharing. The M44/44X project is generally credited with the first use of the term ‘virtual machine’.

[divider]

CTSS

In 1961 development started on CTSS (Compatible Time Sharing System), a project headed by Professor Fernando Corbató and his team at MIT (Massachusetts Institute of Technology). The project was run in partnership with IBM, which provided the hardware and engineers to support the MIT team.

In November 1961 an early version was demonstrated on an IBM 709, and by early 1962 IBM had replaced MIT's 709 with a 7090, which it later upgraded to a 7094.

The CTSS Supervisor resided in memory bank A, with user programs restricted to memory bank B. Each console was given access to the machine's resources for a variable length of time determined by the scheduler, and programs in memory bank B were swapped in and out from drum or disk storage as required.

Each virtual machine was a 7094, with one of them acting as the background machine that could access the tape drives directly. The other virtual machines could run 7094 machine language or compiled code, along with a special extra instruction set for supervisor services. These services gave the foreground virtual machines access to terminal I/O and file system I/O under the supervisor's resource-sharing control. Libraries were also provided so that programs written in the other languages available for the 7094 could use the supervisor services. CTSS pioneered hardware isolation between users and separate per-user file systems.

CTSS was made available for general use at MIT in 1963.

The MIT team was later dismayed to learn that IBM's newly announced System/360 range would not support memory virtualization, a cornerstone of their time sharing efforts. IBM made this decision, despite the pioneering work of the MIT team in collaboration with IBM engineers, because it believed at the time that the future of computing lay in faster batch processing rather than time sharing.

[divider]

Project MAC

MIT decided to instigate a new project in late 1962. Called Project MAC (Multiple Access Computer), its goal was to design and build a more advanced time sharing system based on the experience gained through the development of CTSS. MIT purchased a second modified IBM 7094 running CTSS and set about developing Multics (Multiplexed Information and Computing Service).

A tender went out for a new processor to run Multics, and whilst dedicated people at the IBM CSC (see below) produced a modified System/360 that matched the requirements, it was ultimately rejected by MIT. The official reason was that MIT wanted to use a mainstream processor so that other interested parties could gain access more easily. It was suspected, however, that MIT's decision was partly based on IBM's choice not to include virtual memory on the System/360 line and its apparent lack of commitment to multi-tasking.

Bell Labs joined Project MAC in 1964, and the GE-645 was chosen as the Multics processor. GE subsequently also joined the group, supplying engineers and programming resources. GE delivered a GE-635 to MIT in 1965, which ran a GE-645 simulator so that development could proceed until a real GE-645 was delivered in 1967. Multics demonstrated multi-process execution in 1968 and was made available for use in July 1969. Multics continued to be used in various locations right up until 2000, and its source code was made publicly available by MIT in 2007.

[divider]

CP/CMS

Also in 1964, IBM tasked Norman Rasmussen with forming the Cambridge Scientific Center (CSC), with a mandate to support the remaining MIT contractual relationships and to try to build IBM's reputation within the academic community. The CSC was based in the same building as the Project MAC team, and Rasmussen was able to bring in staff from the MIT/IBM liaison office originally set up to support the CTSS project.

The CSC's initial task was to head off anger at the System/360's lack of virtual memory, which MIT saw as a sign of IBM's lack of interest in time sharing, a concept MIT was fully behind. To do this, Rasmussen set about bringing people together to find a solution to Project MAC's processor requirement. To their delight, they found that an address translation hardware design had already been through the preliminary design phase. Gerry Blaauw had initially put it together but had been unable to secure its inclusion in the final design of the basic System/360. The final design, including address translation, was pitched to MIT but was rejected (see Project MAC). The same design was also pitched to Bell Labs, who also rejected it.

After this disappointing result, IBM brought together the most experienced minds it had on time sharing systems and set about working out how to start winning back time sharing contracts. From their recommendations, a baseline design for a new System/360 model was roughed out; it was essentially a System/360 Model 60 but would finally include the dynamic address translator (DAT box). The design was designated the System/360 Model 67. Rasmussen was subsequently able to make a successful proposal for the Model 67 to MIT's Lincoln Laboratory.

The TSS (Time Sharing System) project was also born from the same meeting, and its development was passed to a team managed by Andy Kinslow. TSS was meant to be an all-in-one solution but was pushed to market too early; early Model 67 customers found serious stability and performance problems.

Rasmussen, in the meantime, moved forward with a project to build a separate time sharing system for the Model 67. Bob Creasy left Project MAC to join the CSC as project lead, and the CP-40 (Control Program 40) project came into being. Because of the project's “counter-strategic” direction, a list of goals that, whilst valid, were not the real reason for the project was drawn up and emphasized whenever required.

Designed and built to run on the System/360 Model 40, CP-40 was envisaged to provide not only a new type of operating system but also virtual memory and full hardware virtualization. To properly protect users from each other, pseudo machines (later renamed virtual machines after a discussion with Dave Sayre about the M44/44X system) would be implemented. The number of virtual machines was fixed at 14, each with 256K of fixed virtual memory. Disk storage was partitioned into minidisks, and a virtual memory system was designed that used 4K pages.

As development progressed, it became clear that a second component would be required. Named the Cambridge Monitor System (CMS), it was to be a single-user operating system, which significantly reduced its complexity, and it would be heavily influenced by CTSS.

CMS allowed people to add new instructions, interfaced with CP via privileged instructions and presented a file system in which users saw only file names, while the rest of each file's details (for example, which blocks were used to store its data) were hidden.

Implementation of the CP/CMS system began in 1965 but was problematic given the very limited resources available to the CSC for the project. Rasmussen brokered various deals for shared machine time between the CSC, MIT and various IBM departments in order to get time on CTSS. The time was used to run an S/360 assembler and emulator, which were required for development because no System/360 Model 40 was available for use. In January 1967 CP-40 and CMS were put into production.

[divider]

CP/CMS – From 40 to 67.

In 1966 another team at the CSC was tasked with modifying the CP/CMS system to run on a System/360 Model 67. Without access to a Model 67, the CSC team set about modifying CP-40/CMS to simulate one.

Whilst the changes required to CMS were minimal, developing and applying them was complicated by the rapidly changing CMS code base as new features were incorporated.

CP was more of a challenge, because the hardware address translation on the Model 67 differed markedly from that of the modified Model 40. The opportunity was also taken to make CP more generic: the fixed control blocks for the virtual machines were made variable, allowing a variable number of virtual machines, and the concept of free storage was incorporated so that control blocks could be added dynamically.

In 1967, after developing the code on a Model 40, the CSC team were able to start testing on a real Model 67 at MIT's Lincoln Laboratory. Lincoln Lab had one of the early Model 67s, which came with TSS, but found TSS to be highly unstable. TSS would take 10 minutes to load to the point where it allowed user access and would then go down again in less than that time. When the CSC team brought CP-67 up to user access in under a minute, the Lincoln data center manager, Jack Arnow, told IBM he wanted it.

Lincoln Lab had CP-67/CMS in daily production by April 1967, and by the beginning of 1968 Union Carbide, Washington State University (WSU) and IBM's own Yorktown research facility also had it running. Each organization contributed amendments, rewrites and changes to CP/CMS, which was still being distributed from site to site as a deck of around 6,000 punched cards.

A number of new enhancements were added, including support for remote consoles and a forerunner of RSCS (Remote Spooling Communications Subsystem), which enabled remote system-to-system communication. RSCS grew out of initial work in 1969 and was in use within IBM by 1971. After a partial public release in 1975, it continued to gain in popularity and changed its name to VNET. In 1976 VNET connected 50 systems around the US; by 1979 that had grown to 239 systems in 10 countries. Its popularity continued to grow, and by 1983 over 1,000 nodes were connected. In comparison, ARPANET (the forerunner of the Internet) connected 213 computers in 1981.

IBM's Systems Development Division (the group behind TSS/360 and OS/360) had been trying to get IBM to kill off the CP-67 project throughout 1967 and into early 1968. With MIT's Lincoln Lab and some other big names now adopting CP, IBM allowed the project to continue.

TSS underwent decommittal (ending of support and development) in February 1968 although this was later to be rescinded in April 1969 due to vigorous customer protests. IBM was to finally close the book on TSS in May 1971.

CP-67 was made available as source code by Lincoln Lab and released to eight installations in May 1968. It was classified as a Type III program in June of the same year (Type III programs are customer-contributed and are not officially tested, supported or maintained by IBM). Around this time, a number of employees at the CSC, Lincoln Lab and Union Carbide left their respective organizations to form the companies National CSS and Interactive Data Corporation (IDC). National CSS went on to sell time on CP/CMS and to produce its own version, called VP/CSS.

During the rest of its life, CP-67/CMS gained a number of enhancements, including a method to prevent page thrashing, support for PL/I, an improved scheduler and fast path I/O.

[divider]

VM/370

In June 1970 IBM announced its plans for the future with the System/370 range, which was still to be provided without memory virtualization. This changed in 1971, however, when IBM decided that the entire range would get (memory) relocation hardware.

Following earlier antitrust litigation, IBM had unbundled its hardware, software and service offerings, and as a result the team developing CP/CMS moved out of the CSC group. The CSC worked on bootstrapping CP-67 on the S/370, whilst the CP development team worked on re-implementing CP for the System/370.

As part of this development work, a version of CP-67 emerged that could run System/370 virtual machines on System/360 Model 67 hardware. This became instrumental in the development of MVS (Multiple Virtual Storage), IBM's favored operating system, as the MVS team did not have access to the still-rare System/370. Once the MVS team depended on CP/CMS, it became much harder for IBM to kill off the project.

Autumn 1971 saw the delivery of a System/370 to the CSC in the utmost secrecy, because IBM had not yet publicly announced its intention to add relocation to the System/370 range. Had people seen a new System/370 going into the CSC building, speculation could well have robbed IBM's announcement of much of the impact it wanted. CP was renamed VM/370, and it was also around this time that CMS was renamed the Conversational Monitor System.

The first version of VM/370 shipped to customers in November 1972. At that time IBM predicted that only one System/370 Model 168 (its top-of-the-range model) would ever run VM/370 during the entire life of the product. As it turned out, the first Model 168 shipped to a customer ran only CP/CMS, and ten years later 10% of the large processors shipped from IBM's Poughkeepsie production facility were destined to run VM/370.

Whilst the technique had been used for development work in the past, VM/370 was the first IBM release that officially supported running a virtual machine within another virtual machine (virtual machine nesting). VM/370 attained IBM Type II designation and so was supported by IBM.

It was not until 1977 that IBM management were fully on board with VM, but from then on things improved, and there were 1,000 VM installations by 1978.

IBM would go on to have a few ups and downs with subsequent VM service packs but retained a good user base and steady growth through the 1980s. A major blow came in 1983, when IBM announced that all further service packs and releases would ship under an OCO (Object Code Only) policy. Up until that point, development of CP/CMS through to VM/370 had been supported by a dedicated user community. With access to the source code, the community had been able to provide code fixes for each other and incorporate new features without having to wait for IBM to prioritize, develop, test and release them. IBM refused to change its stance even after vigorous complaints from the various user communities.

IBM's virtualization projects would go on to mature through various implementations right up to the current z/VM offering.

[divider]

Other Implementations

Lawrence Livermore Laboratory began work on its LTSS (Livermore Time Sharing System) at the tail end of the 1960s. The operating system was to be used on the Control Data Corporation CDC 7600 supercomputer, which went on to take the crown of the world's fastest computer. The CDC 7600 was designed by Seymour Cray.

Cray went on to found his own company, Cray Research, in 1972; it built the Cray-1 and X-MP supercomputers, which ran CTSS (Cray Time Sharing System). CTSS was developed by the Los Alamos Scientific Laboratory in partnership with the Lawrence Livermore Laboratory (LLL) and drew on LLL's experience with LTSS. Unfortunately, CTSS was built on a tightly constrained model with no support for TCP/IP or other emerging technologies, so it could not keep up with subsequent generations of Cray hardware. Lawrence Livermore Laboratory later tried to address some of these constraints with the New Livermore Time Sharing System (NLTSS), but this saw use on Cray machines only in the late 1980s and early 1990s.

Digital Equipment Corporation (DEC) started a project in 1975 aimed at developing a virtual address extension for its extremely popular PDP-11 computers. The project was codenamed ‘Star’. Alongside Star, the Starlet project was formed to build a new operating system based on DEC's earlier RSX-11M system software. The project was run by Gordon Bell and included Dave Cutler, who would go on to lead the development of Microsoft Windows NT. The projects resulted in the VAX-11/780 computer and the VAX-11/VMS operating system (later renamed VAX/VMS). VMS was renamed OpenVMS in 1991 and was ported to both the Alpha and Intel Itanium processor ranges. DEC was eventually bought by Compaq in 1998, which in turn became part of HP after their merger in 2002.

[divider]

Coming up next…

In part 2 we will see how virtualization crossed over from supercomputers to the Intel enterprise and home PC arenas.

[divider]

References

I would like to thank the people and organizations below who have freely made their research, white papers and experiences available to everyone.

The following links have a wealth of information for those who wish to know more about this era of computing history. Many have pictures of the systems and the people involved.

- Simon Lavington: The Atlas Story (pdf) and for permission to use the Atlas pictures.

- Our Computer Heritage (UK) website

- Melinda Varian: VM & the VM community (pdf)

- R.J. Creasy: The Origin of the VM/370 Time Sharing System (pdf)

- Everything VM: History of Virtualization

- Tom Van Vleck: History of the IBM 360/67 and CP/CMS

- Jim Elliot (IBM): IBM Mainframes – 45+ Years of Evolution (pdf)

- Chuck Boyer (IBM): The 360 Revolution (pdf)

- The Computing History website

- The Multicians website.

