At Hot Chips 2023, Ventana Micro, a RISC-V CPU startup, showed off its new data center processor, the Veyron V1. The Ventana Veyron V1 looks ahead to a new era of RISC-V CPUs for the data center. While this talk is on the V1 product, the company apparently already has V2 working.

Since this coverage is being written live from the auditorium, please excuse typos. Hot Chips moves at a crazy pace.

Ventana Veyron V1 RISC-V Data Center Processor Hot Chips 2023

Ventana has a fun target slide for the Veyron V1. It might best be described as “wherever we can find demand.”

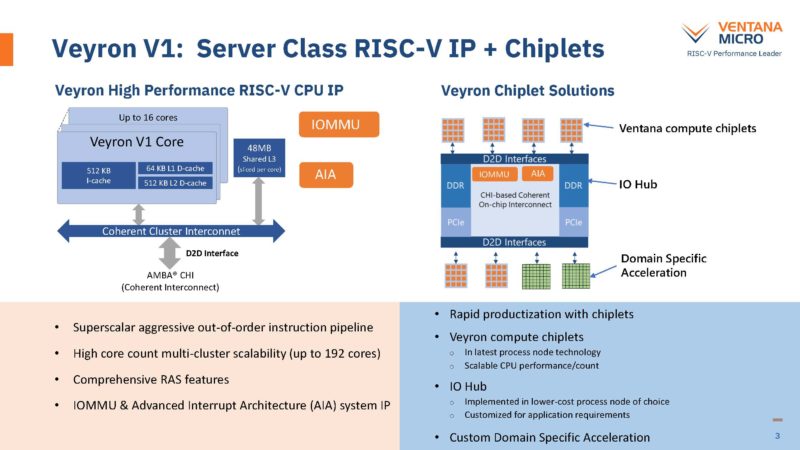

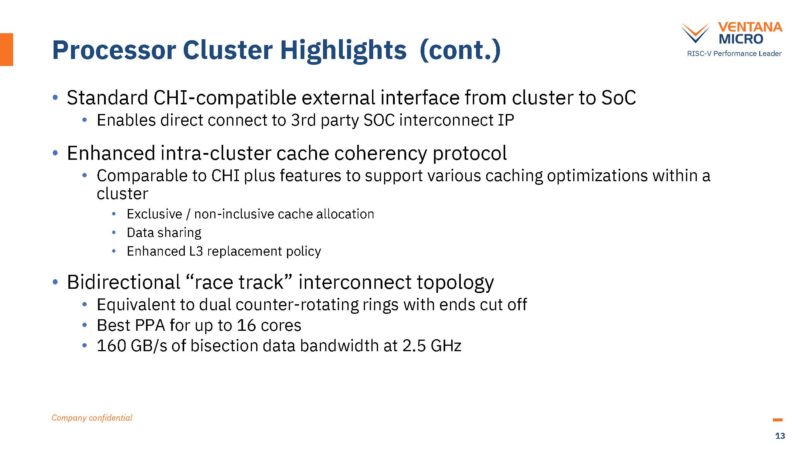

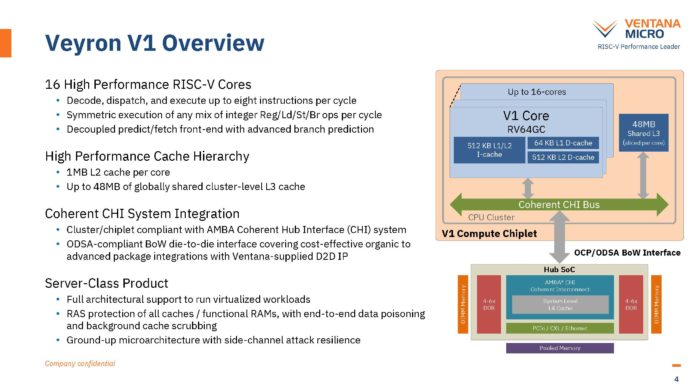

The idea is that Ventana Micro has a RISC-V CPU core, up to 16 cores per chiplet, then combines them with an I/O hub that has things like DDR memory controllers and PCIe. Ventana says it can scale Veyron V1 up to 192 cores but it can also integrate domain-specific accelerators.

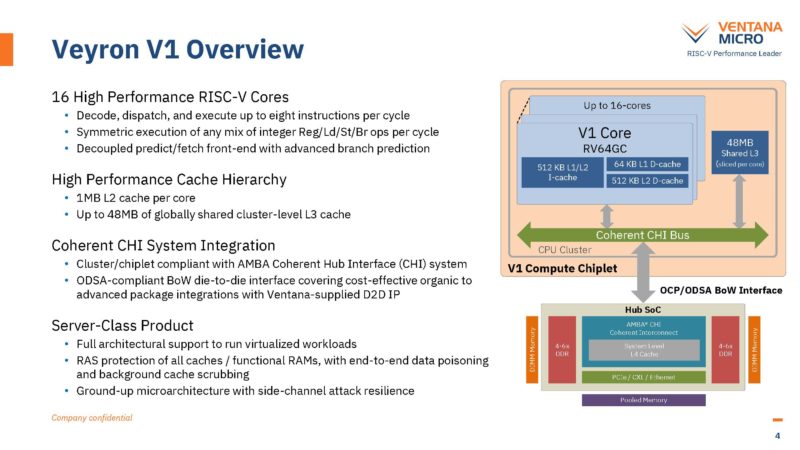

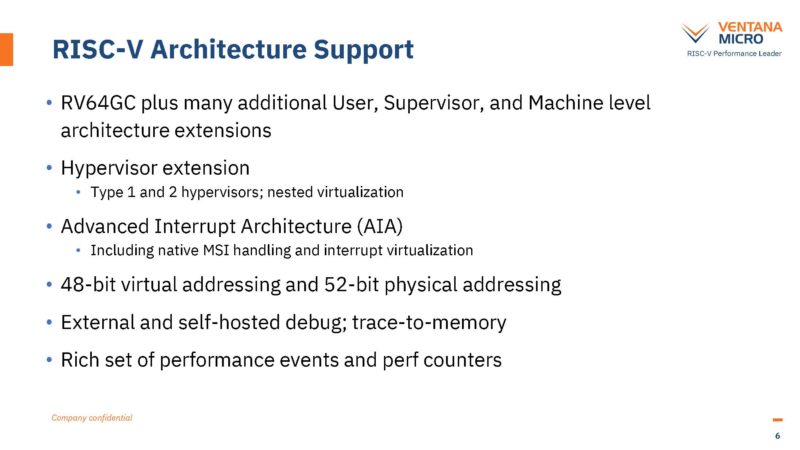

Here are the key specs including the cores, cache, and more on the chip. Ventana says that Veyron V1 will have support for things like virtualization as well as measures making it more resilient to side-channel attacks.

On the support side, we were surprised that the company is already discussing nested virtualization. The Arm Neoverse N1 chips we saw did not even support nested virtualization.

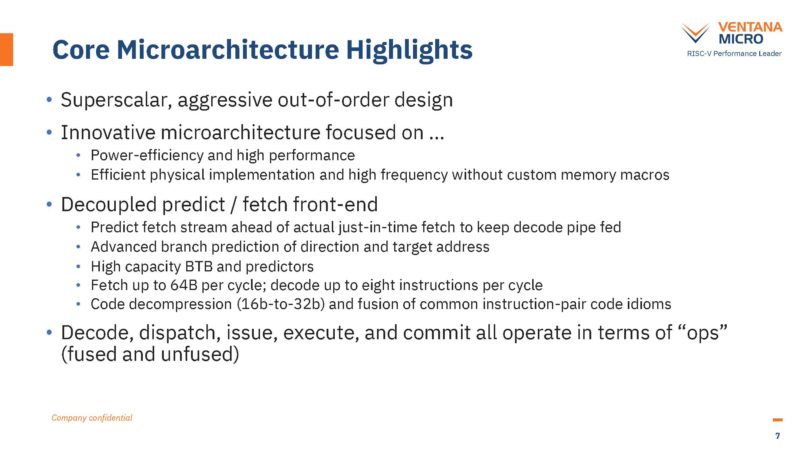

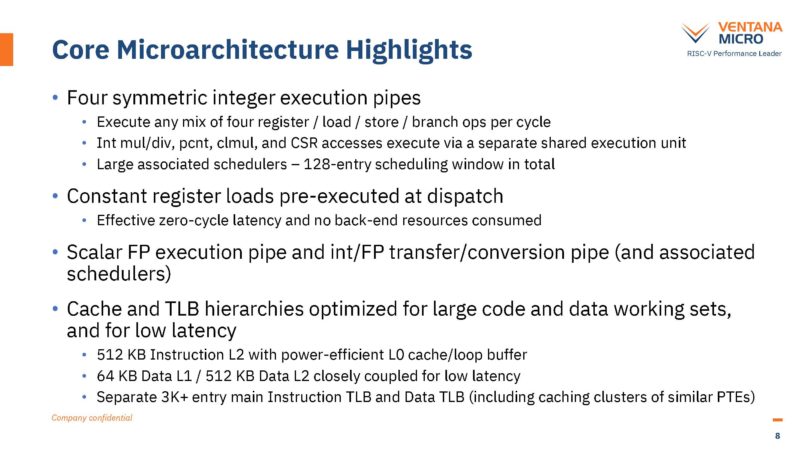

Here is a bit more on the core microarchitecture. We cannot transcribe this as fast as just showing the slide.

Here is a bit more on this.

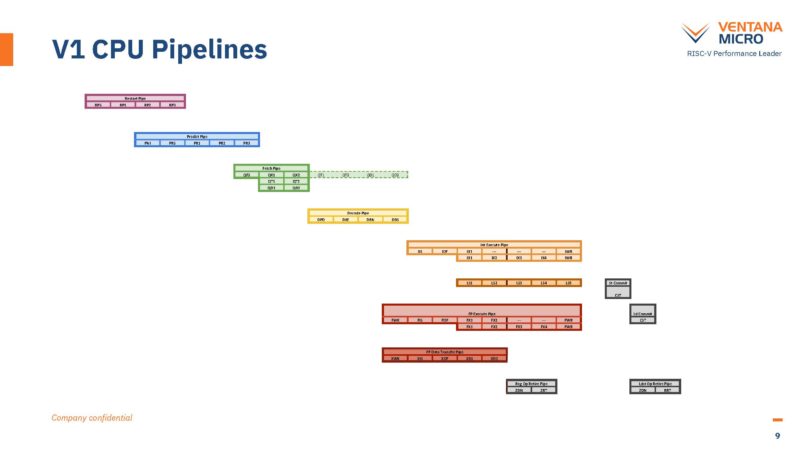

Here is the pipeline in a very hard-to-read chart.

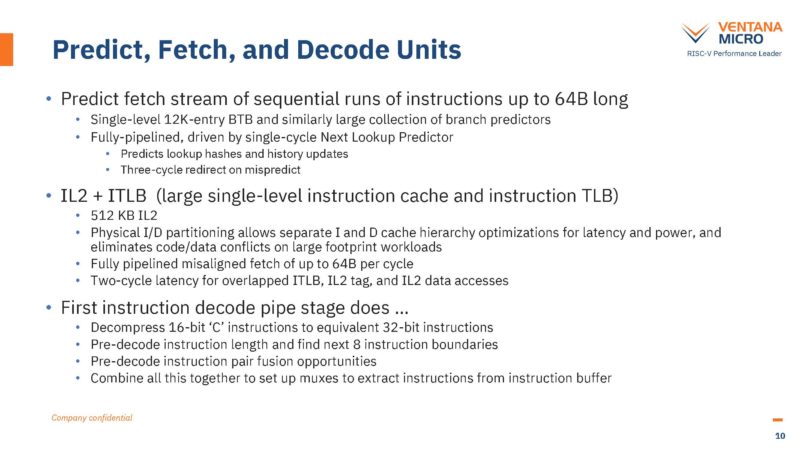

Here is the predict, fetch, and decode slide:

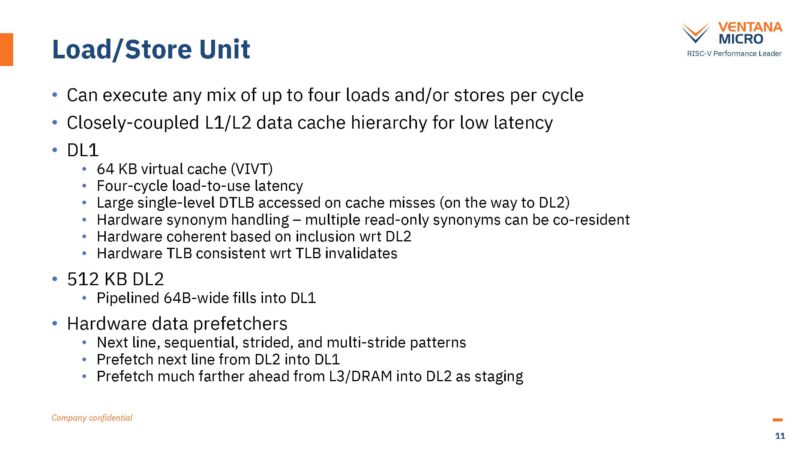

Here are the load/store details:

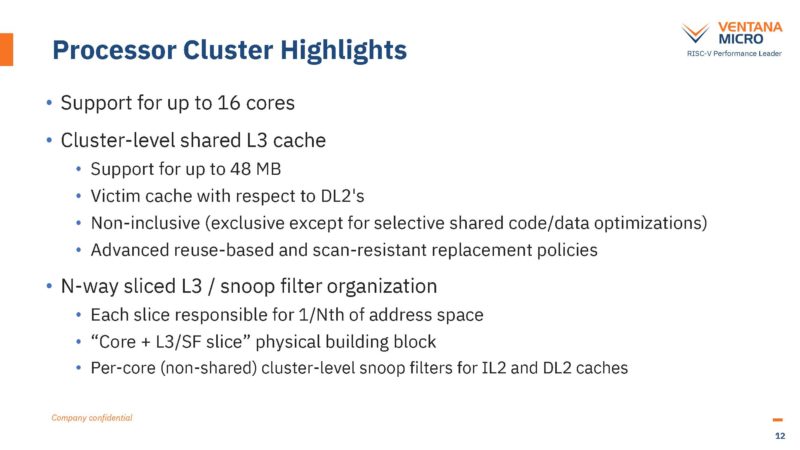

In terms of processor cluster size, each 16-core cluster has up to 48MB of L3 cache.
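As a rough back-of-the-envelope check on those figures, here is a minimal Python sketch. It uses only the numbers quoted in this article (16 cores per cluster, up to 48MB of L3 per cluster, up to 192 cores) and assumes every cluster is fully populated with the maximum L3. The function names are ours for illustration, not anything from Ventana.

```python
import math

# Figures quoted in the article; everything derived from them is an estimate.
CORES_PER_CLUSTER = 16   # up to 16 cores per chiplet/cluster
L3_PER_CLUSTER_MB = 48   # up to 48MB of L3 per 16-core cluster
MAX_CORES = 192          # stated scaling limit for Veyron V1


def clusters_needed(total_cores, cores_per_cluster=CORES_PER_CLUSTER):
    """Smallest number of fully populated clusters that reaches total_cores."""
    return math.ceil(total_cores / cores_per_cluster)


def l3_per_core_mb(l3_mb=L3_PER_CLUSTER_MB, cores=CORES_PER_CLUSTER):
    """Average L3 share per core, assuming an even split (an assumption)."""
    return l3_mb / cores


def total_l3_mb(total_cores):
    """Aggregate L3 if every cluster carries the maximum 48MB (an assumption)."""
    return clusters_needed(total_cores) * L3_PER_CLUSTER_MB


if __name__ == "__main__":
    for cores in (128, MAX_CORES):
        print(cores, "cores:", clusters_needed(cores), "clusters,",
              total_l3_mb(cores), "MB L3 total,",
              l3_per_core_mb(), "MB L3 per core")
```

Under those assumptions, the 192-core configuration works out to 12 clusters, and each core gets an average of 3MB of L3.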

It would have been really interesting if the company included UCIe here just to say it is one of the first UCIe CPUs and leaned into chiplets.

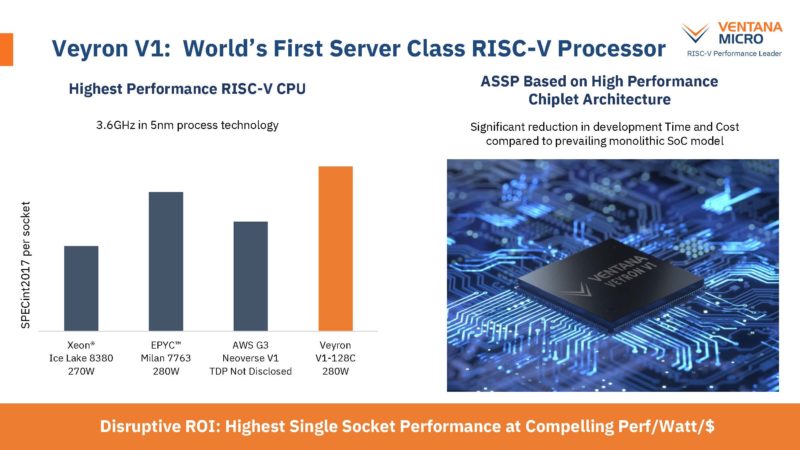

In terms of performance, Ventana is targeting what is now previous-gen performance with the 128-core Veyron. The numbers for CPUs like AMD EPYC Bergamo are much higher (>2x) than Milan. The company said V2 is not in production while Bergamo is already generally available.

In the market for RISC-V, Ventana does not have to be faster than AMD and Intel at this point. It just needs to be not x86, not Arm, and to be RISC-V. Folks are looking at RISC-V specifically as a replacement for Arm in future CPU and xPU designs.

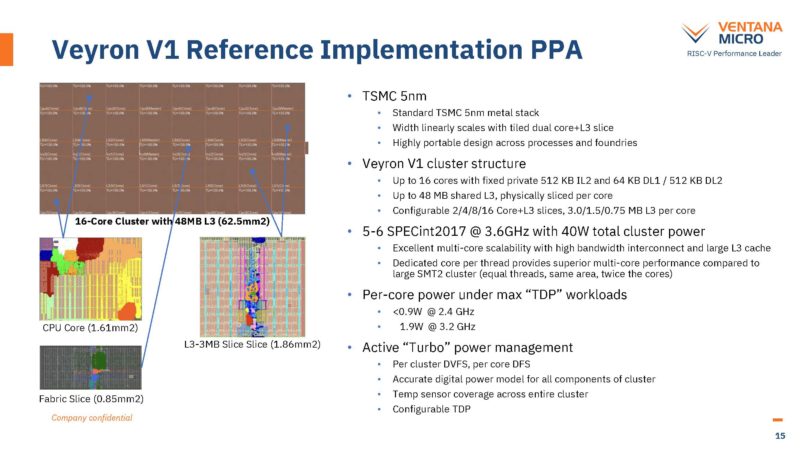

Ventana also has a reference Veyron V1 implementation that can be used for TSMC 5nm.

Final Words

RISC-V is the technology to watch in the x86 alternative space. Arm is already big, but as it works to revamp its business, RISC-V has an opportunity to disrupt by leveraging a lot of the work that Arm did. In 2016, when we reviewed the Cavium ThunderX, Arm server CPUs were very rough. Since then, there has been a lot of work to move from a single x86 architecture codebase and infrastructure to a multiple-architecture world for x86 and Arm. A lot of that work is being leveraged by RISC-V to increase its market velocity. It also seems like Ventana's I/O die design leverages lessons learned from AMD, an approach that has proven successful.

Hopefully, we get hands-on time with some real Veyron V1 hardware soon.

“Back to the Future” – Perhaps choice (a colorful palette of server CPUs beyond the somewhat monochromatic X86_64 world) is good for the industry.

In my longish (1978 – 2021) IT career, the 1980s was my heaviest coding decade…In assembler and C/asm on a wide range of server level CPU designs (Intel 8086/286/386, Zilog Z8000, Motorola 680x0, Mips, HP RISC, IBM RISC, a bit of Vax, …) did I toil.

That was all before the Linux software environment made moving to a new CPU architecture a bit less challenging.

I don’t have a flux capacitor nor a DeLorean, so I can’t foresee the future, but in my experience CPU choice made for a more vibrant industry.

(Full disclosure: The bulk of my income over those decades in IT was derived by something X86[_64] or Sun powered).

Performance doesn’t look too good compared to Altra Max either (which is similar to EPYC 7763), so per-core performance is only slightly better than the 4-way Neoverse N1. And the virtual L1 caches look risky – even if fully handled in hardware, it will cause overheads whenever page table mappings change (which happens a lot on big servers).