The Tyan Thunder HX GA88-B5631 is truly an interesting machine. It is a 1U, single socket design that supports up to four PCIe 3.0 x16 devices. Most commonly this is going to be four GPUs, FPGAs, or MIC accelerators. Although we have recently looked at 8x GPU and 10x GPU 4U designs, the 1U 4x GPU design is significant. If you have enough power and cooling, that is 160 GPUs per 42U rack (assuming 2U for switches). It also means one can fit 16 to 20 GPUs in the 4-5U of rack space that a single standard GPU server occupies.
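The rack density figure above is simple arithmetic, and a minimal sketch makes it easy to rerun for other rack sizes. The function and parameter names here are our own illustration, not anything from Tyan.

```python
def gpus_per_rack(rack_units, reserved_units, server_units, gpus_per_server):
    """Count the GPUs that fit in a rack after reserving space for switches."""
    usable_units = rack_units - reserved_units
    servers = usable_units // server_units  # whole servers only
    return servers * gpus_per_server


# Figures from the text: 42U rack, 2U for switches, 1U servers with 4 GPUs each.
print(gpus_per_rack(42, 2, 1, 4))  # 160
```

This only counts physical space. Whether a rack can actually power and cool that many GPUs is a separate question.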

Beyond the pure number of GPUs, this server is significant for another reason. It is a major advancement in architecture over the previous generation. In this generation, a single Intel Xeon Scalable CPU can power the machine instead of two Xeon CPUs.

There are several implications of this architectural shift. First, one gets six memory channels on a single NUMA node instead of eight channels split across two NUMA nodes. Second, traffic no longer traverses a socket-to-socket link, which avoids the bandwidth and latency penalty of crossing sockets. Third, one can use lower-end CPUs with a single socket design because one does not need faster QPI/UPI links. With one lower-end CPU, you can save power and save on system costs.

Test Configuration

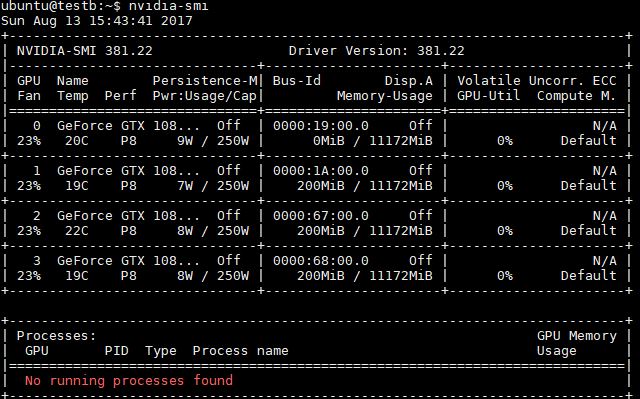

We built a configuration that we think represents a solid template for deep learning deployments:

- System: Tyan Thunder HX GA88-B5631

- CPU: 1x Intel Xeon Silver 4114

- GPUs: 4x NVIDIA GTX 1080 Ti 11GB Founders Edition

- RAM: 6x 16GB DDR4-2400 ECC RDIMMs (Micron)

- SSDs: 2x Intel DC S3710 400GB

- NIC: Mellanox ConnectX-3 VPI

NVIDIA does not like its GTX cards to be used in servers. At the same time, the practice is wildly popular. The fact that CUDA applications work well with GTX series cards is one of the major reasons NVIDIA can maintain its leadership position in the AI and deep learning spaces.

With the configuration out of the way, let us look at the server.

Tyan Thunder HX GA88-B5631 Overview

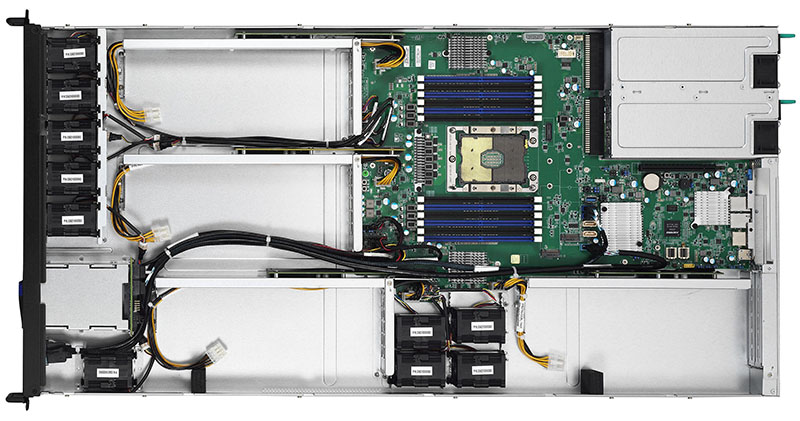

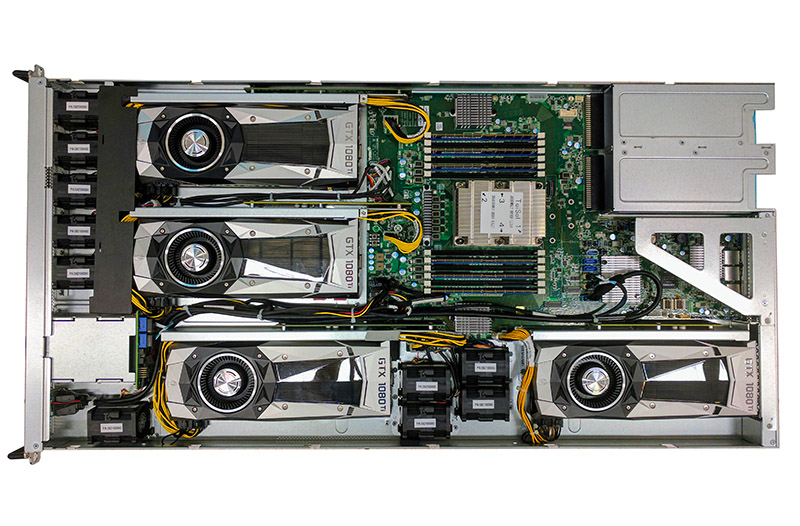

Here is the top view of the Tyan Thunder HX GA88-B5631. One can see a custom motherboard, four full height and width card slots, and a multitude of fans.

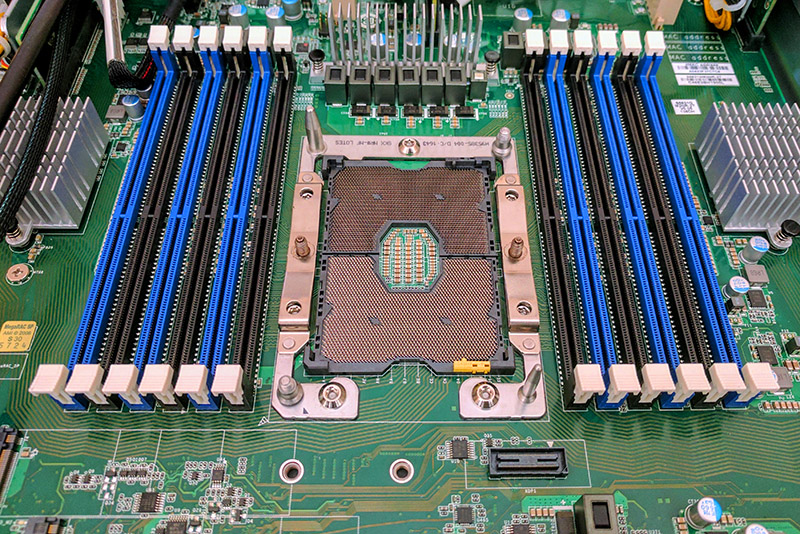

With this generation of Intel Xeon Scalable processors, one can utilize up to DDR4-2666 memory. Further, one gets six memory channels per socket with up to two DIMMs per channel. The Tyan GA88-B5631 takes full advantage of this architecture with twelve DIMM slots.
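To put the memory layout in numbers, here is a rough sketch of the theoretical peak bandwidth and the DIMM slot count. It assumes the standard 64-bit (8 byte) DDR4 data bus per channel. The helper names are ours, and real-world throughput will be lower than this theoretical figure.

```python
BYTES_PER_TRANSFER = 8  # a DDR4 channel has a 64-bit data bus


def peak_bandwidth_gb_s(channels, mega_transfers_per_s):
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    return channels * mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER / 1e9


def dimm_slots(channels, dimms_per_channel):
    """Total DIMM slots for a socket."""
    return channels * dimms_per_channel


print(dimm_slots(6, 2))                        # 12 slots
print(round(peak_bandwidth_gb_s(6, 2666), 1))  # platform maximum, DDR4-2666
print(round(peak_bandwidth_gb_s(6, 2400), 1))  # our DDR4-2400 test DIMMs
```

Populating all six channels, as our 6x 16GB test configuration does, is what lets a single socket approach these figures.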

Current documentation says that the system is designed for up to 165W TDP CPUs, so that covers all but the niche SKUs like the 205W TDP Intel Xeon Platinum 8180 we tested.

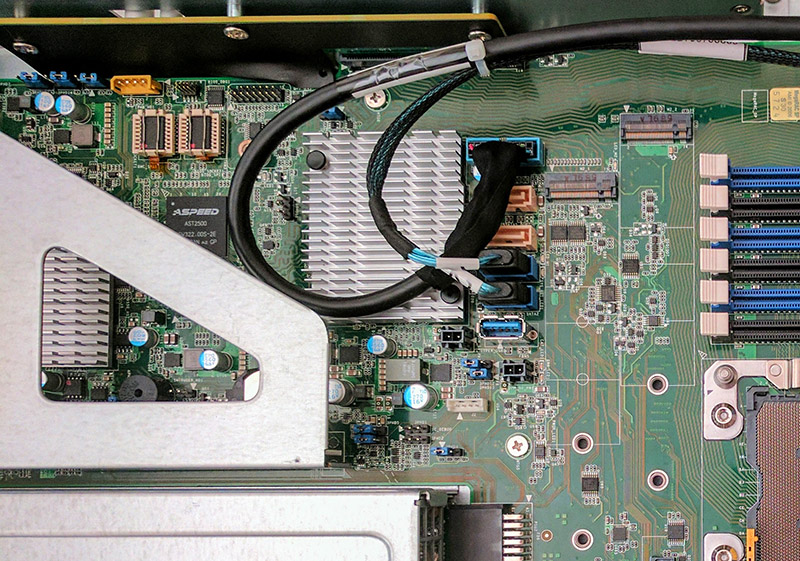

The Tyan S5631 motherboard powering the system has spaces for two internal M.2 storage drives. M.2 has become a popular storage form factor in servers with this generation. We like that the board can support the larger 22110 form factor, which is often required for drives with capacitors onboard.

Alongside those M.2 slots are a USB 3.0 front panel connector, four 7-pin SATA ports, and a USB 3.0 Type-A internal header. With two hot swap bays in the front of the chassis and additional room for two SATA DOMs, this configuration makes sense. There is also a PCIe x16 riser that sits over the motherboard, allowing for add-on cards such as a 100Gbps fabric adapter.

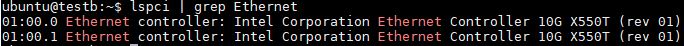

Along with that networking, the system has an out-of-band management port as well as two 10Gbase-T ports via an Intel X550T Ethernet Controller that is connected to the PCH. Because the onboard networking hangs off the PCH, the CPU PCIe lanes are left for the GPUs and the PCIe x16 fabric slot.

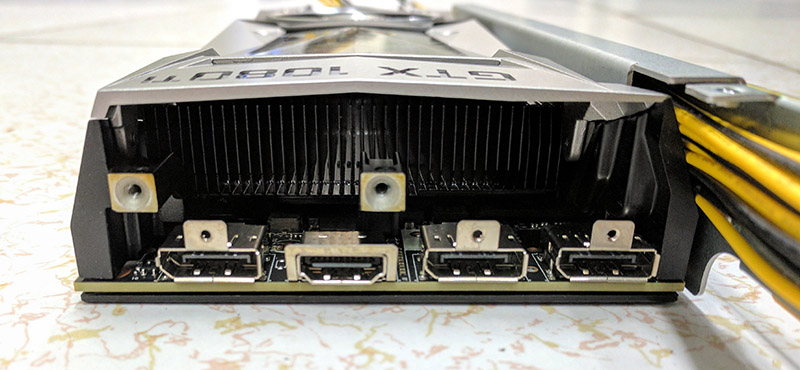

The real story here revolves around the four GPUs. Each GPU is set into a special PCIe x16 riser:

For our NVIDIA GTX 1080 Ti Founders Edition cards, this meant removing the stock PCIe bracket. That requires removing seven screws per GPU.

Tyan has different brackets for NVIDIA GRID/Tesla cards, GTX series cards, AMD cards, Intel cards and just about whatever you may want to put in the system.

This is a picture of our initial fit test and boot before we added the Mellanox card and PSUs:

One of the most important aspects to see here is that all four GTX cards are aligned with front to back airflow. This is not a major concern with passively cooled NVIDIA GRID or Tesla cards for example, but it is a concern for actively cooled cards. We have seen other solutions on the market which orient GTX cards improperly so this is a great job by the Tyan engineering team.

One item we did want to note was that the Tyan Thunder HX GA88-B5631 is deep. At 34.5″ it is the longest server we can fit in one of our test racks in our Sunnyvale, California lab.

Please note in the above that we kept the protective blue covering on our test system.

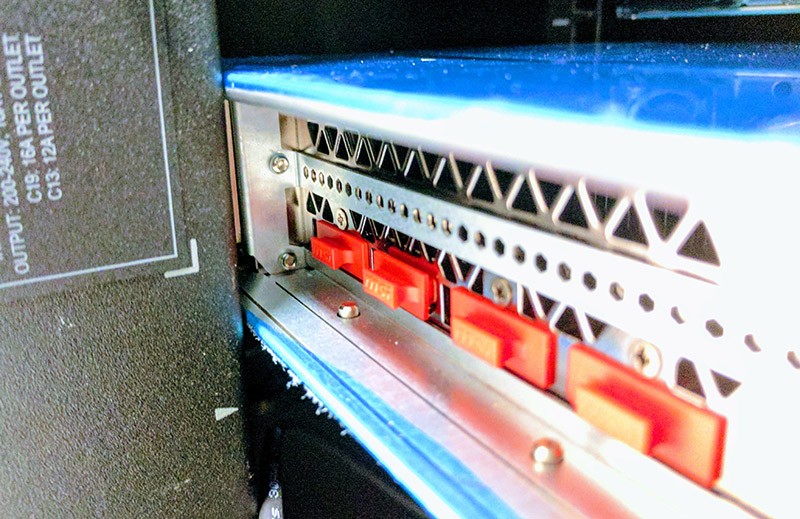

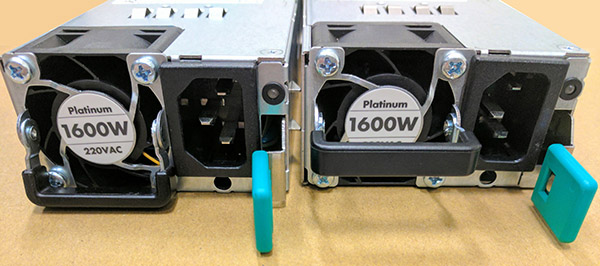

The system is powered by two 1600W 80Plus Platinum Delta power supplies. The 1600W figure is only for input power above 200V. On our 208V lab power, this provided redundancy with our configuration. On lower voltage power, for example 120V, both PSUs would need to be operating to keep the system up under full load.
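The redundancy reasoning can be sketched as a simple check: after one PSU fails, can the remaining supply carry the load? The 1392W load is the maximum we observed in the power consumption section. The 1000W low-voltage rating below is a hypothetical placeholder for illustration only, so check the PSU label for the real figure.

```python
def survives_psu_failure(load_w, psu_rating_w, psu_count=2):
    """Return True if the remaining PSUs can carry the load after one fails."""
    return (psu_count - 1) * psu_rating_w >= load_w


MAX_OBSERVED_W = 1392       # maximum draw we measured on 208V power
HIGH_LINE_RATING_W = 1600   # rating for input power above 200V (from the text)
LOW_LINE_RATING_W = 1000    # HYPOTHETICAL derated figure for 120V input

print(survives_psu_failure(MAX_OBSERVED_W, HIGH_LINE_RATING_W))  # True
print(survives_psu_failure(MAX_OBSERVED_W, LOW_LINE_RATING_W))   # False
```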

Total installation time (box to racked) was around 55 minutes. This would have been much shorter if we were not using GTX GPUs and instead were using our GRID M40s, for example.

Multi-GPU Topology, Peer-to-Peer Bandwidth, and Latency

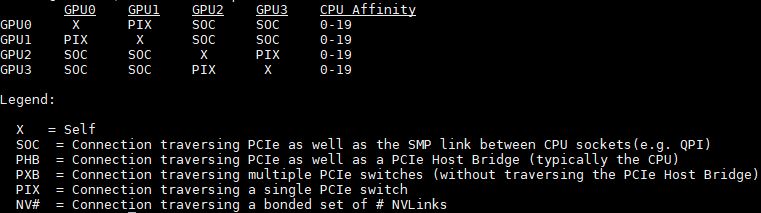

Following up on our single or dual root peer-to-peer bandwidth and latency piece, we wanted to provide similar numbers for this configuration. First, here is the topology:

As you can see, the Tyan GA88-B5631 has two PLX PEX8747 PCIe switches that connect the four GPUs.

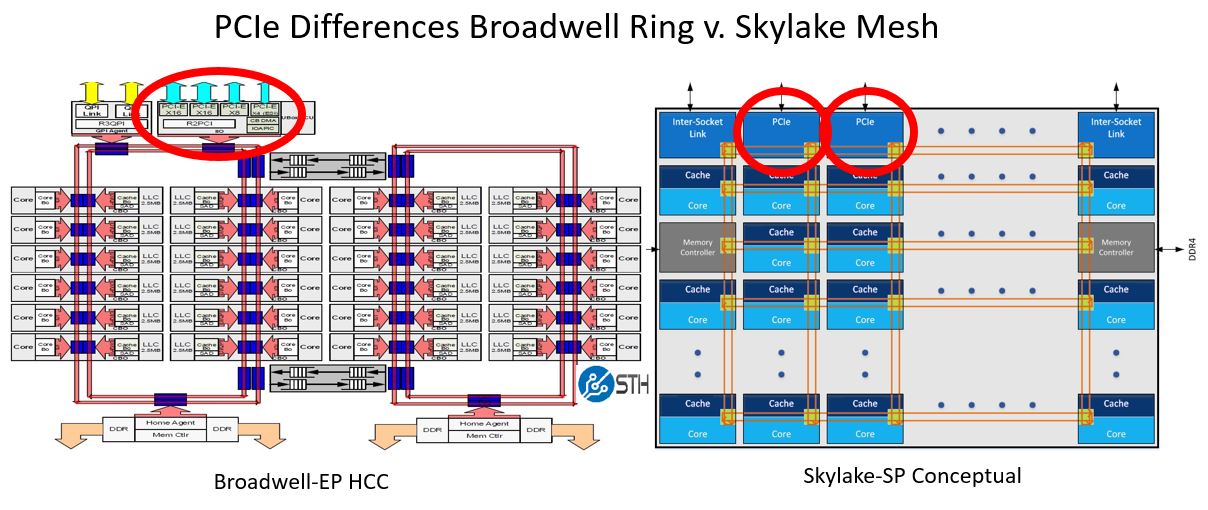

Each PCIe switch is connected to a different PCIe root. This is very similar to what we outlined in this diagram on the new Intel Xeon Scalable “Skylake” mesh architecture.

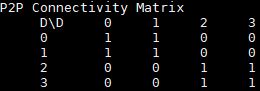

The current impact of this is that P2P connectivity is only available between the two GPUs that share a switch. Tyan did say they were working with NVIDIA to see if they can enable this across all four GPUs.
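As a small illustration of what this topology means for software, the sketch below models the two switches and lists which GPU pairs can use P2P. The GPU index to switch mapping is an assumption on our part. On a live system, `nvidia-smi topo -m` prints the actual connectivity matrix.

```python
from itertools import combinations

# ASSUMED numbering: GPUs 0 and 1 share one PCIe switch, GPUs 2 and 3 the other.
GPU_TO_SWITCH = {0: "switch_a", 1: "switch_a", 2: "switch_b", 3: "switch_b"}


def p2p_available(gpu_a, gpu_b):
    """P2P works only between two distinct GPUs behind the same switch."""
    return gpu_a != gpu_b and GPU_TO_SWITCH[gpu_a] == GPU_TO_SWITCH[gpu_b]


def p2p_pairs():
    """List every GPU pair that can communicate peer-to-peer."""
    gpus = sorted(GPU_TO_SWITCH)
    return [(a, b) for a, b in combinations(gpus, 2) if p2p_available(a, b)]


print(p2p_pairs())  # [(0, 1), (2, 3)]
```

Of the six possible GPU pairs, only two have P2P in this model. Traffic between the other four pairs has to go through the CPU.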

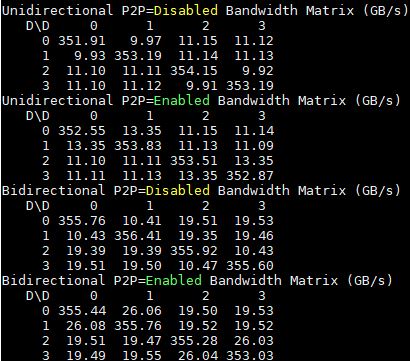

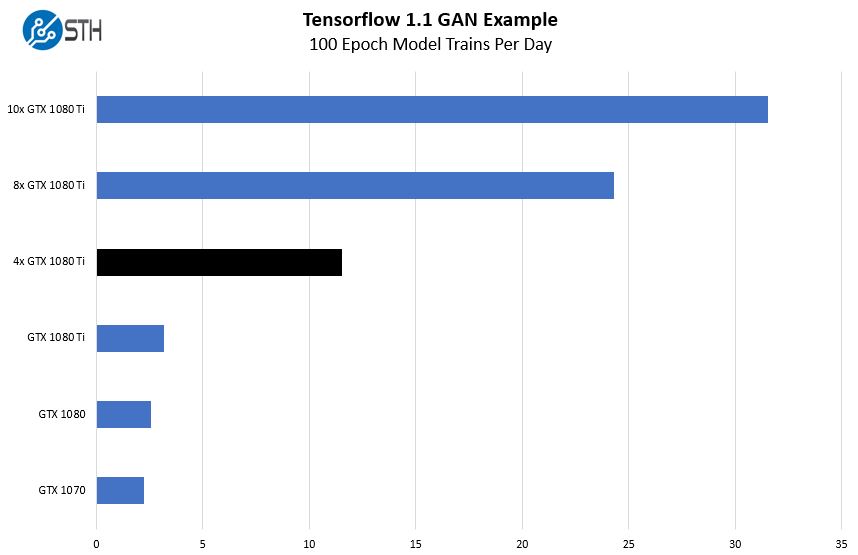

Here are the uni- and bi-directional bandwidth figures with P2P enabled and P2P disabled:

A quick call-out here is that the unidirectional P2P bandwidth is significantly higher than we saw with socket-to-socket dual root in the Intel Xeon E5 V4 generation.

Here are the P2P latency figures:

Overall, one can see the design is about what we would expect.

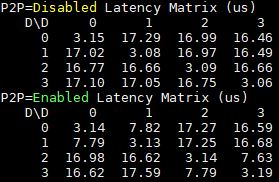

Example Performance STH Tensorflow GAN

In order to translate the bandwidth figures into tangible performance, we wanted to put this system in perspective against other deep learning builds we have done. We took our sample Tensorflow Generative Adversarial Network (GAN) image training test case and ran it on single cards, then stepped up to the 4x GPU system. We expressed our results in terms of training cycles per day.

As you can see, the performance is about what we would expect from a four GPU system. On this chart the 8x and 10x GPU systems utilized 4.5U each. If you are space limited rather than power limited, the 1U Tyan Thunder HX GA88-B5631 can achieve excellent performance per rack unit.
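To make the space argument concrete, here is a quick sketch comparing GPU density per rack unit for the three system sizes mentioned. It counts GPUs only and is not a substitute for the training throughput numbers in the chart. The dictionary labels are ours.

```python
def gpus_per_rack_unit(gpus, rack_units):
    """GPU density expressed as GPUs per rack unit."""
    return gpus / rack_units


# (GPU count, rack units) for each system size discussed in the text.
systems = {
    "1U 4x GPU": (4, 1.0),
    "4.5U 8x GPU": (8, 4.5),
    "4.5U 10x GPU": (10, 4.5),
}

for name, (gpus, units) in systems.items():
    print(f"{name}: {gpus_per_rack_unit(gpus, units):.2f} GPUs per U")
```

By this measure the 1U system has roughly twice the GPU density of the 8x and 10x GPU systems.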

Tyan IPMI Management

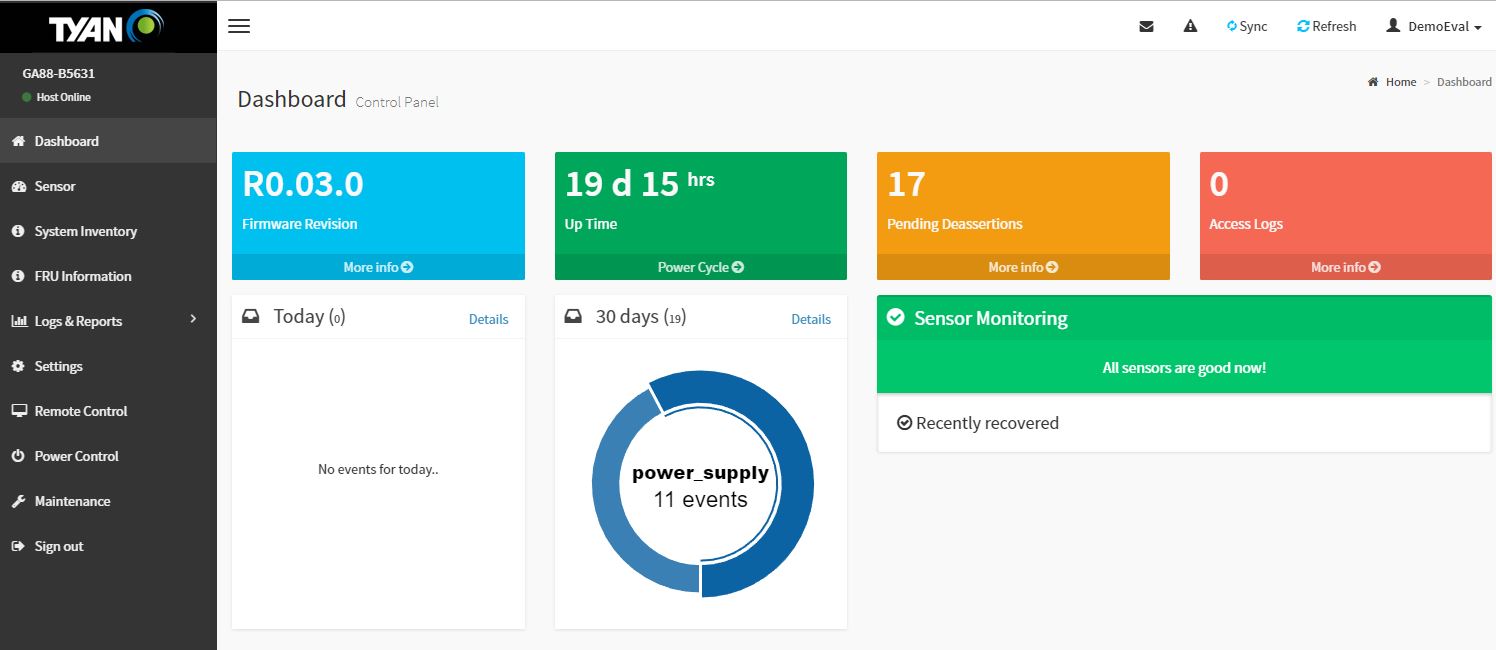

The last time we looked at Tyan IPMI was many years ago. Today, we are greeted by a thoroughly modern design:

A look at the dashboard shows something that looks distinctly modern:

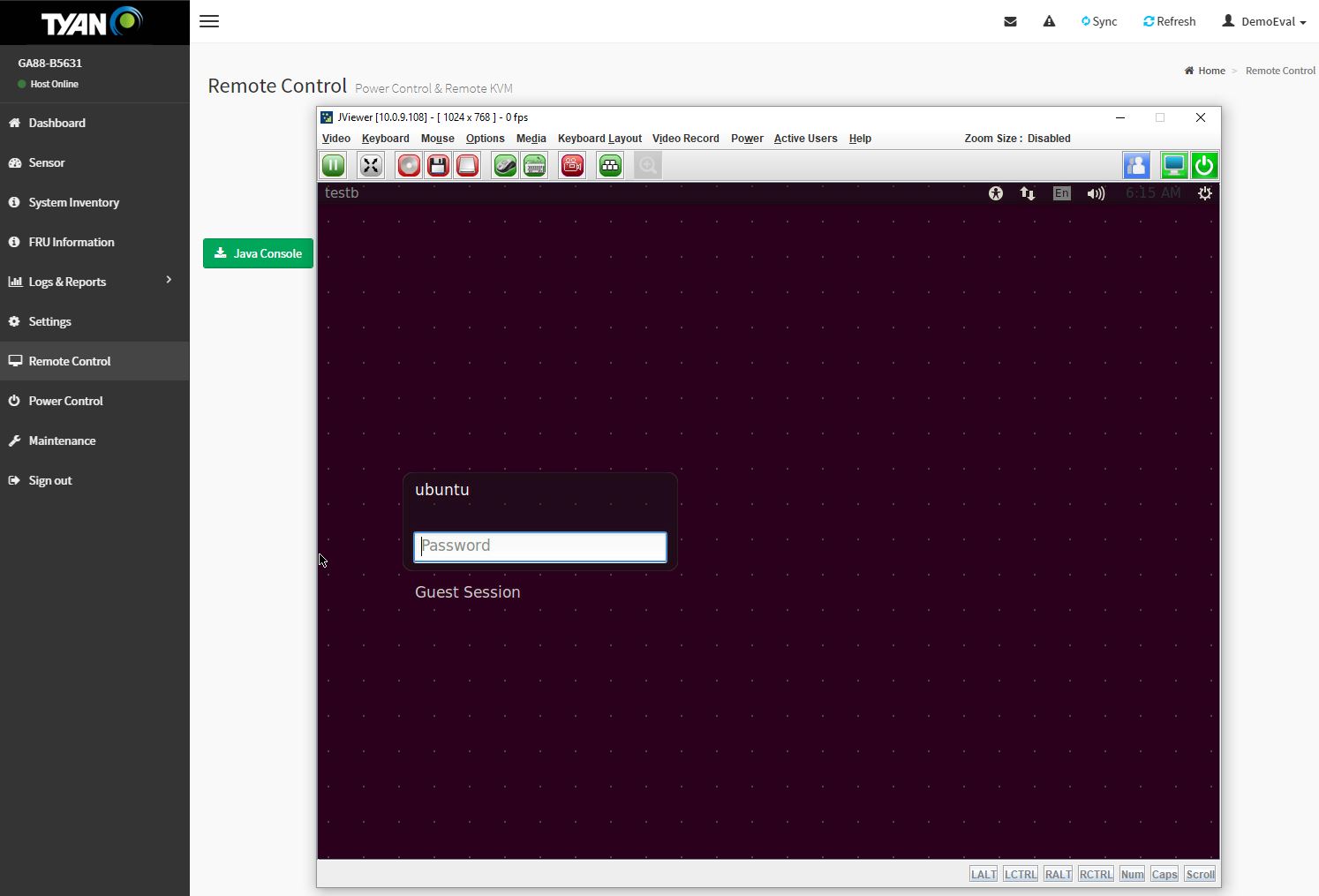

Like many servers today, the Tyan management solution includes iKVM functionality. Currently, the solution employs Java. We do hope to see an HTML5 version in the future. On the other hand, Tyan does not charge for an additional license for iKVM access to the OS console, as traditional vendors such as HPE, Dell EMC, and Lenovo do.

For most organizations that will use such a server, the remote management capabilities work well. Tyan also supports Redfish APIs for next-generation server management.
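For readers new to Redfish, here is a minimal sketch of reading the standard systems collection with only the Python standard library. The `/redfish/v1/Systems` path and the `Members` / `@odata.id` layout come from the Redfish specification. The BMC address, credentials, and the sample system ID are placeholders, and we have not verified this against Tyan's implementation.

```python
import base64
import json
import ssl
import urllib.request


def member_paths(collection):
    """Extract the resource paths from a Redfish collection document."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def fetch_json(base_url, path, username, password, verify_tls=True):
    """GET a Redfish resource using HTTP Basic authentication."""
    request = urllib.request.Request(base_url.rstrip("/") + path)
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    context = ssl.create_default_context()
    if not verify_tls:  # many BMCs ship with self-signed certificates
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


# Offline example using a made-up collection document (system ID is hypothetical).
sample = {"Members": [{"@odata.id": "/redfish/v1/Systems/Self"}]}
print(member_paths(sample))  # ['/redfish/v1/Systems/Self']
```

Against a real BMC, one would call something like `fetch_json("https://<bmc-address>", "/redfish/v1/Systems", user, password)` and pass the result to `member_paths`.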

Power Consumption

We wanted to provide some sense of how much power the system utilized as measured by our APC PDUs on 208V data center power:

- No GPUs Idle: 102W

- Tensorflow: 1154W

- Maximum observed: 1392W

There was still room for this configuration to use more power. We utilized the default power limits for our testing and did not bump the NVIDIA GTX 1080 Ti cards to a 50W higher power limit (300W). Instead, we used the stock 250W power limit.
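As a rough estimate of that headroom, the sketch below adds the extra 50W per card to our maximum observed figure. It assumes every GPU would draw its full raised limit at the same moment and that nothing else in the system changes, so treat the result as a back-of-the-envelope figure rather than a measurement.

```python
def power_with_raised_limits(observed_max_w, gpu_count, stock_limit_w, raised_limit_w):
    """Estimate system draw if each GPU used its full raised power limit."""
    extra_per_gpu_w = raised_limit_w - stock_limit_w
    return observed_max_w + gpu_count * extra_per_gpu_w


# 1392W observed maximum, four GPUs, 250W stock limit, 300W raised limit.
print(power_with_raised_limits(1392, 4, 250, 300))  # 1592
```

Under these assumptions the system would sit just below the 1600W rating of a single PSU on 200V and above input power.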

Final Words

If one thinks back to similar 1U 4x GPU designs from 2015 that required two CPUs, this is a major leap forward. While that 2015 system may have required two CPUs around the $1500 price point each to ensure full inter-socket bandwidth, the Tyan Thunder HX GA88-B5631 can utilize a single $500 or $700 CPU that also uses a fraction of the power.

Beyond this, the design shows that dual root using two different PCIe roots on the new Intel Xeon Scalable family mesh interconnect works well. We saw better performance than we saw previously in socket-to-socket Xeon E5 designs.

One final note to consider here is power. For some, the prospect of using 160 GPUs and 40 CPUs in a rack may sound tantalizing. From a practical standpoint, without using the cards to their full 300W power limit, we were still hitting nearly 1.4kW per U. That means 40U will consume about 56kW excluding networking. Not all data centers can deliver power and cooling for racks of this density. Still, if you are adding GPU compute into your infrastructure, the new Tyan Thunder HX GA88-B5631 achieves an awesome level of density while reducing CPU costs.
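The rack power figure follows directly from our measurements. A minimal sketch, using our 1392W maximum observed draw and the rounded 1.4kW per U figure (the function name is ours):

```python
def rack_power_kw(servers, watts_per_server):
    """Total power for a rack of identical servers, in kilowatts."""
    return servers * watts_per_server / 1000


print(rack_power_kw(40, 1392))  # maximum we observed per server
print(rack_power_kw(40, 1400))  # rounded 1.4kW per U figure
```

Both land at roughly 56kW for 40 servers, before networking and before raising the GPU power limits.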

Very nice, powerful little system with a lot of GPU power.

Would love to see a GROMACS benchmark on this system with an optimal XEON.

Ethereum mining? :D

Wow, Tyan is up and coming!

60 kW per rack is not uncommon at all. Sure, it will cost you but it is widely available.

where can I buy this?