Tyan Thunder CX GC68A-B7126 Internal Overview

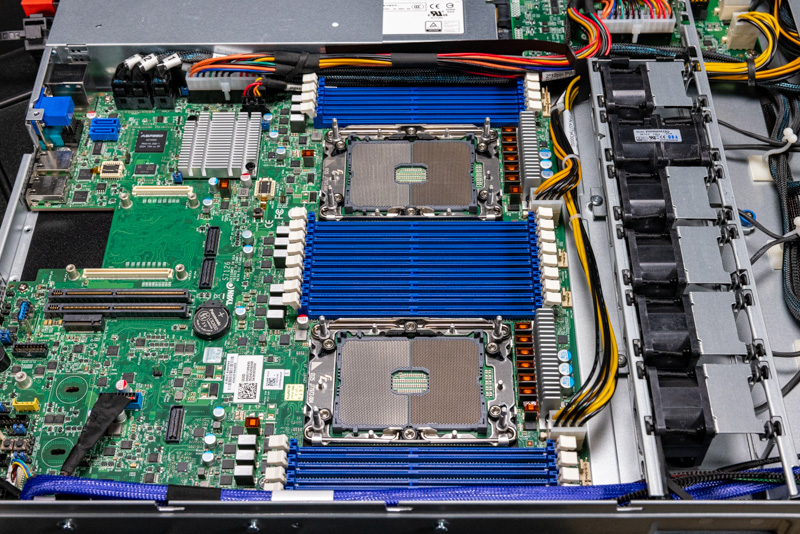

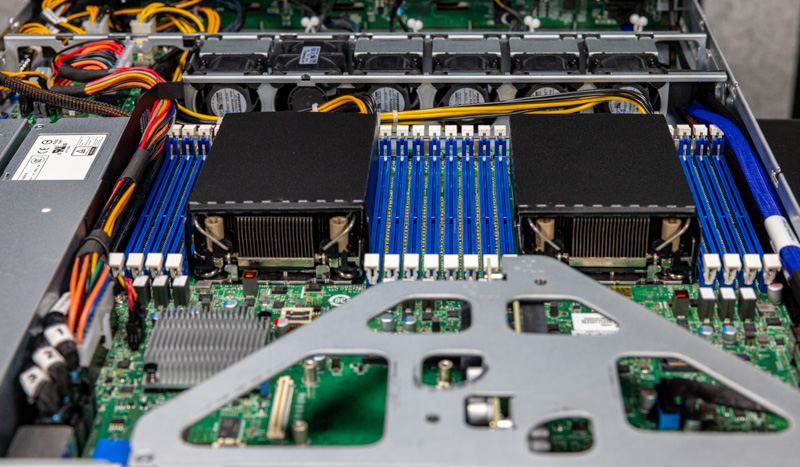

Inside the server, we get a fairly standard layout with drives in the front (to the right of the photo below), then fans, CPUs, and memory, followed by I/O. There is a lot going on here, so we are going to generally work from right to left, oriented by the photo below. We have already looked at the power supplies and power distribution board at the top of the photo.

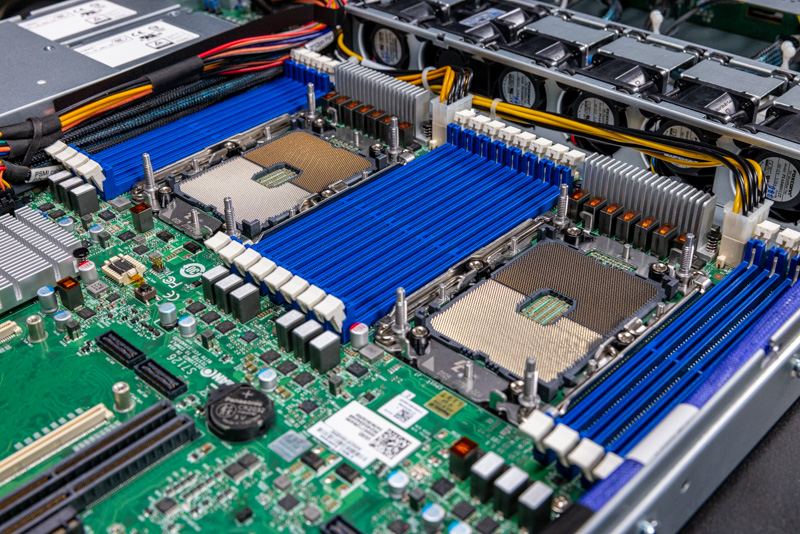

First, one will notice that the entire platform is based on the EATX Tyan S7126GM2NRE (or S7126) motherboard. Many of the server’s design decisions follow from this motherboard choice. Using an EATX motherboard trades off some expandability. What one gains, however, is the ability to use the motherboard in more platforms, which increases volumes. Higher volumes in turn mean lower costs and higher quality, since the board sees more deployment scenarios that get tested over time.

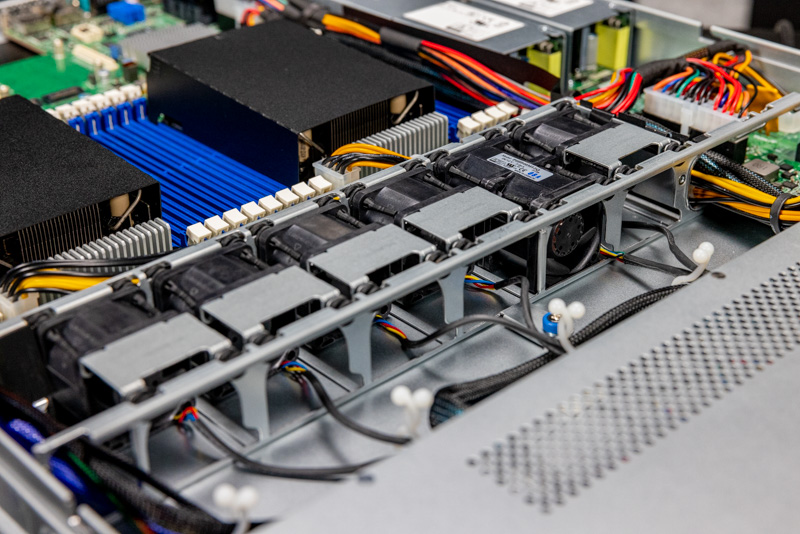

For cooling, since we have an EATX motherboard, we have a fan array in front of it. Here we have six fan slots that are not hot-swappable. In 1U systems, using rubber stand-offs and this type of wired connectivity is very common in the industry. Something that is a big cost optimization, but that we wish was different, is that one can see we have space for six redundant fan modules but we only have one installed. Tyan is using lower-cost fan modules here, which usually save on power and costs, but if a server ever needs to be serviced, then many of those cost savings just move to operating expenses. Fans are very reliable these days, but it is something to note.

Behind the fans, we have the two Intel Xeon Ice Lake CPUs, memory, and I/O.

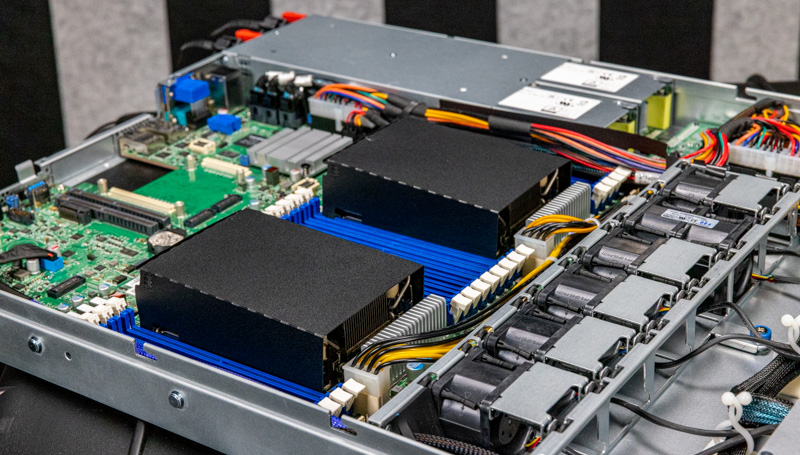

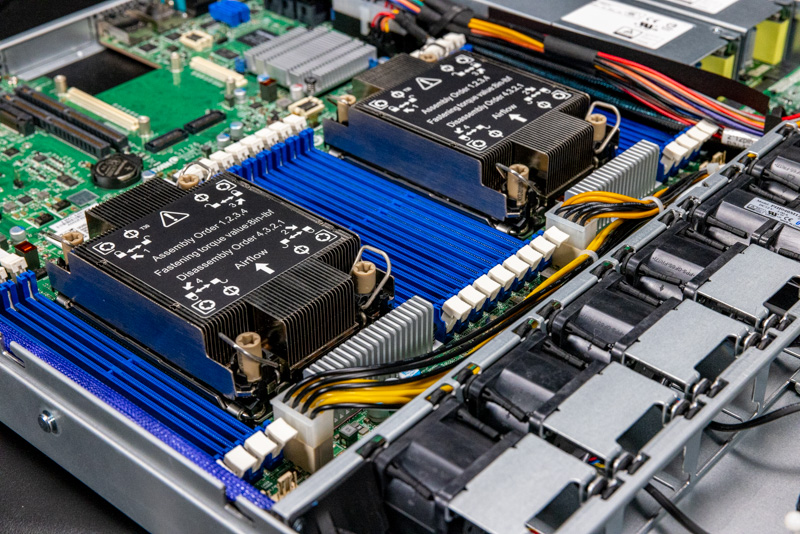

The airflow guides in this system help keep airflow over the heatsinks. These are lower-cost units and are smaller than what we usually see. Normally, these airflow guides extend to the fan partition, but the CPU power cables obstruct that path here.

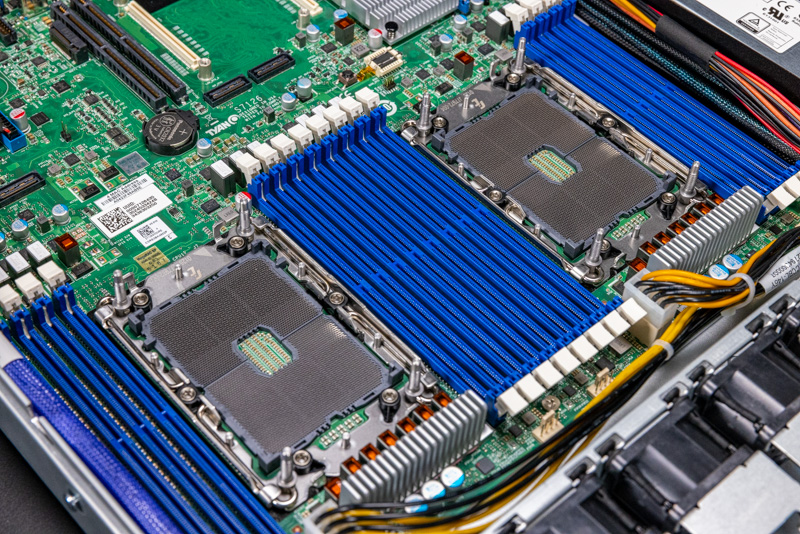

The CPU heatsinks are newer LGA4189 units. We have a guide for Installing a 3rd Generation Intel Xeon Scalable LGA4189 CPU and Cooler on STH.

The system itself only supports CPUs with up to a 205W TDP. That is more similar to previous-generation Xeons, where 205W was the maximum TDP of the line. The Ice Lake Xeon line goes up to 270W TDP, but one is unlikely to put Platinum 8380s in low-cost platforms.

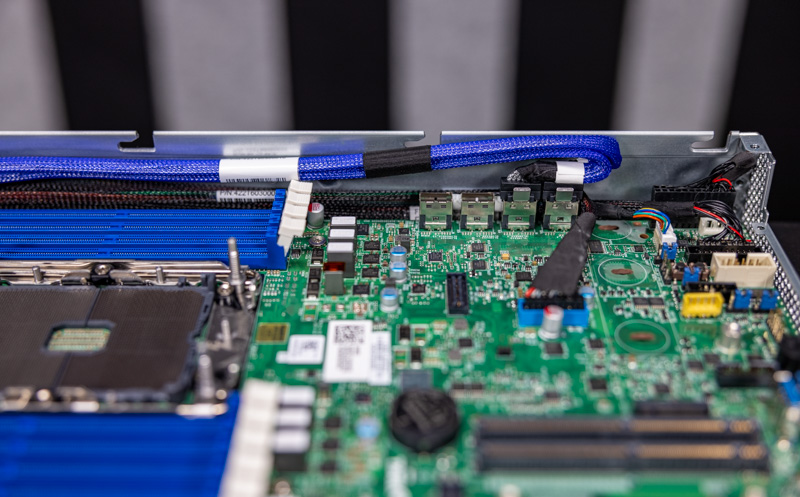

For memory, we get sixteen DDR4-3200 slots. That fills all of the memory channels, eight per CPU, with one DIMM per channel (1DPC). Ice Lake Xeons can take two DIMMs per channel (2DPC), but that requires a custom motherboard to fit 32 DIMMs in a dual-socket server, adding to costs. For the applications this server is designed for, 1DPC is common, and sometimes customers will deploy this type of server with fewer than the full set of 16 DIMMs to further lower costs.
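To make the DIMM arithmetic concrete, here is a minimal sketch. The function names are ours and purely illustrative. It assumes eight memory channels per Ice Lake socket and the standard 8 bytes per transfer for a DDR4 channel, and the bandwidth figure is a theoretical peak, not a measured result from this system.

```python
# Illustrative DIMM and peak-bandwidth arithmetic for a dual-socket Ice Lake server.
# Assumptions: 8 memory channels per socket, 64-bit (8-byte) DDR4 channels.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
BYTES_PER_TRANSFER = 8


def dimm_slots(dimms_per_channel: int) -> int:
    """Number of DIMM slots needed for a given DIMMs-per-channel (DPC) layout."""
    return SOCKETS * CHANNELS_PER_SOCKET * dimms_per_channel


def peak_bandwidth_gb_s(mega_transfers_per_s: int, populated_channels: int) -> float:
    """Theoretical peak memory bandwidth in GB/s across the populated channels."""
    per_channel_mb_s = mega_transfers_per_s * BYTES_PER_TRANSFER
    return per_channel_mb_s * populated_channels / 1000


slots_1dpc = dimm_slots(1)  # the layout in this server
slots_2dpc = dimm_slots(2)  # what a 2DPC motherboard would need
full_peak = peak_bandwidth_gb_s(3200, SOCKETS * CHANNELS_PER_SOCKET)
half_peak = peak_bandwidth_gb_s(3200, 8)  # e.g. only 8 of 16 DIMMs installed
```

The second bandwidth call shows the trade-off of deploying with fewer DIMMs: leaving channels empty lowers cost but also lowers the theoretical peak proportionally.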

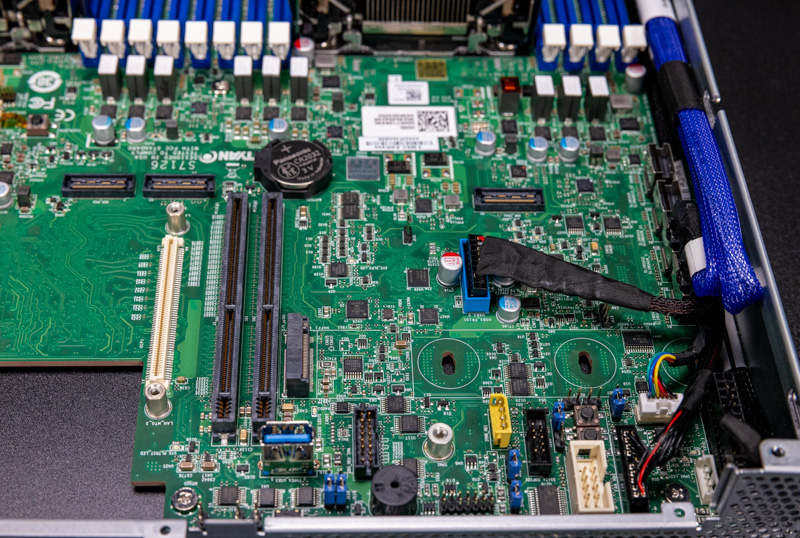

Toward the rear of the motherboard, we get the Intel C621A PCH and the ASPEED AST2500 BMC. Some vendors have switched to the ASPEED AST2600 in this generation, but this is the first generation where that is really happening, so many still use the older part that has many millions of deployments. Here we can also see the three SFF-8643 cables labeled 1, 2, and 3 that provide 12x SATA lanes to the front bays. There are also two internal SATA headers here.
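The 12x SATA figure follows from each SFF-8643 connector carrying four lanes. A small sketch of that count is below. The lane indices assigned to each cable are a hypothetical numbering for illustration only, since we did not trace which cable feeds which bays.

```python
# Illustrative lane count for the three SFF-8643 cables.
# Assumption: each SFF-8643 connector carries four lanes; the per-cable
# lane indices are a hypothetical numbering, not Tyan's documented mapping.
LANES_PER_SFF8643 = 4


def lanes_by_cable(labels):
    """Map each cable label to the (assumed) zero-based lane indices it carries."""
    return {
        label: list(range(i * LANES_PER_SFF8643, (i + 1) * LANES_PER_SFF8643))
        for i, label in enumerate(labels)
    }


cables = lanes_by_cable([1, 2, 3])
total_lanes = sum(len(lanes) for lanes in cables.values())
```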

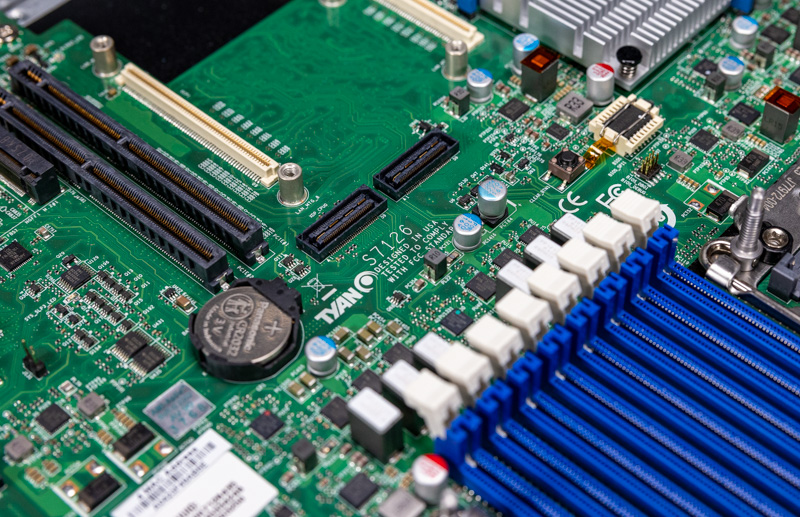

For rear expansion, we mentioned the OCP NIC 2.0 slot in our external overview. The OCP NIC 2.0 slot has a full PCIe x16 link available. OCP NIC 3.0 slots are out, but OCP NIC 2.0 cards are much less expensive.

The two middle riser slots are used as PCIe Gen4 x16 slots here, but each is actually a PCIe Gen4 x32 slot. Tyan shares this motherboard with other platforms, and the x32 slots can be exposed in 2U risers.

On the rear of the motherboard, we also have an internal USB 3 Type-A port as well as an M.2 slot. This M.2 slot is a PCIe Gen3 x2 slot that can handle up to 22110 (110mm) M.2 drives. The primary purpose of this slot is to hold a boot drive for the system, and it uses PCIe lanes from the Lewisburg Refresh C621A PCH, not the CPUs.

Of course, we must mention that there are four SlimSAS connectors that each have PCIe Gen4 x4 links. Two are used for the two 2.5″ front NVMe drive bays here.
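For a rough sense of what these link widths mean, here is a minimal sketch of theoretical per-direction PCIe bandwidth. The helper name is ours. It uses the standard 8 GT/s (Gen3) and 16 GT/s (Gen4) per-lane signaling rates with 128b/130b encoding and ignores protocol overhead, so real-world drive throughput will be lower.

```python
# Theoretical per-direction PCIe link bandwidth, before protocol overhead.
# Per-lane rates and 128b/130b encoding are the standard Gen3/Gen4 values.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate usable GB/s in one direction for a PCIe link."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


m2_boot_slot = link_gb_per_s(3, 2)   # the Gen3 x2 M.2 slot off the PCH
slimsas_link = link_gb_per_s(4, 4)   # one Gen4 x4 SlimSAS link to an NVMe bay
riser_slot = link_gb_per_s(4, 16)    # one Gen4 x16 riser slot as used here
```

By this estimate, each front NVMe bay has roughly four times the link bandwidth of the M.2 boot slot, which fits the boot-drive role of that slot.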

We have to at least give a quick nod to the fact that this may be our favorite cable color.

With the hardware overview complete, let us get to the block diagram, management, and performance.