Tyan GC70-B8033 1U Power Consumption

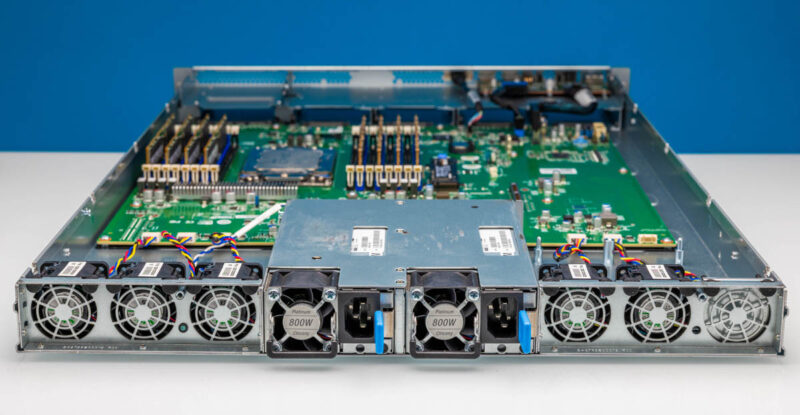

The system itself came with two 800W power supplies, but they were way more than our configuration needed.

Total system power in our configuration has yet to peak above 350W. At idle, we saw about 83W with the AMD EPYC 7C13 and closer to 85W with the AMD EPYC 7713P. That is awesome.

For those running in very low-density 120V racks (10A, 15A, or 20A), this platform is great for adding multiple compute nodes for a cluster. That is something that newer chips are challenged with.
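As a rough illustration of that rack math, here is a minimal sketch that estimates how many nodes fit on a single 120V circuit. It assumes the roughly 350W ceiling we observed as the per-node peak, and it assumes an 80% continuous-load derating on the breaker rating, which is a common planning rule of thumb and not something we measured. The function name `nodes_per_circuit` is hypothetical and only for illustration.

```python
def nodes_per_circuit(volts: float, amps: float, peak_watts: float,
                      derate: float = 0.8) -> int:
    """Return how many whole nodes fit under a derated circuit budget.

    derate=0.8 is an assumed planning margin for continuous loads,
    not a figure from the review; adjust it for your facility.
    """
    usable_watts = volts * amps * derate
    return int(usable_watts // peak_watts)


if __name__ == "__main__":
    # 350W is the observed peak ceiling for this configuration.
    for amps in (10, 15, 20):
        count = nodes_per_circuit(120, amps, 350)
        print(f"120V/{amps}A circuit: {count} node(s) at 350W peak each")
```

Under those assumptions, the 10A, 15A, and 20A circuits work out to 2, 4, and 5 nodes respectively. Budgeting against peak rather than the roughly 83-85W idle figure is the conservative choice, since it leaves headroom for every node running flat out at once.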

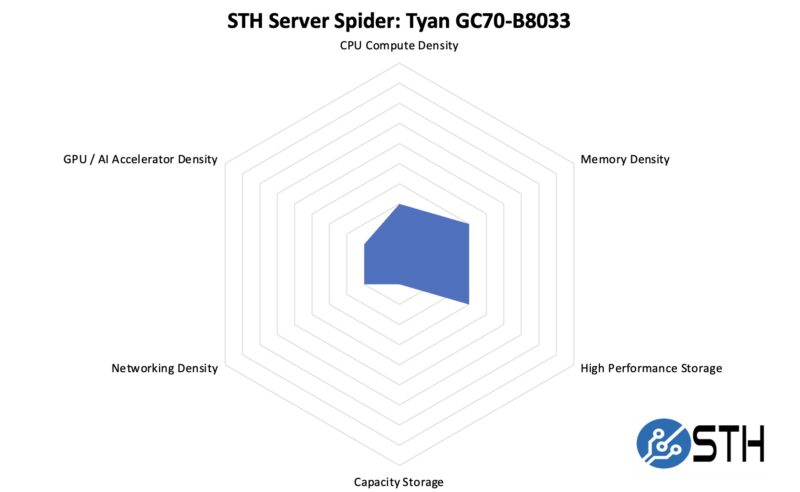

STH Server Spider Tyan GC70-B8033

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This is quite an interesting node since it does not try to be the densest. Instead, it is a lower-cost node designed for a simple hosting application. To be completely fair here, if you are coming from a 2017-2021-era 1st or 2nd Gen Intel Xeon Scalable system or anything older, then this is going to be faster and likely lower power, all with the advantage of being newer. The catch is that expandability is minimal.

Final Words

At this point, you might be wondering why we are reviewing this node. Originally, the review came about because we purchased these nodes for an ultra-low price of under $2300 new with the AMD EPYC 7C13. Here is the Newegg listing (Affiliate link.)

We had been planning to do a review and publish it as a “great deal,” but then we purchased the two servers and realized they were the only two in stock. We had a bunch of DDR4-3200 64GB DIMMs, so this seemed like an awesome opportunity until the servers arrived and we realized that we could not present them to the STH community as a deal that was still available. Instead, these are just going in as test nodes in a cluster.

With all that said, this is a fairly awesome system with a very simple design and execution. It is also a bit strange since it is designed for power via either AC or a DC busbar, meaning we do not get rear I/O. Instead, we get an immensely easy-to-service design without even cables running the length of the motherboard or a storage backplane. It may seem silly, but we really like these systems.

There’ll be a market for cheap Milan + NVMe storage. This with 8-10 NVMe, 1 PCIe NIC, 1 OCP NIC, and 2 M.2.

Milan with cheap DDR4, cheaper PCIe 4.0, is better than newer. Not all DC SSDs are even Gen 5.0 yet, so why pay for a more expensive mobo and cables for storage?

Maybe I’m missing something but the rear fans seem to be in the way of any external ports on the PCI-e devices.

Would make for a great affordable SME all-flash HCI node:

2x m.2 mirrored boot SSDs

4x NVMe for all-flash storage

dual/quad 25 or dual 100GbE OCP NIC

lots of cores and sufficient RAM for VMs

maybe an Intel QuickAssist add-in-card in the PCIe riser. (no outputs required)

or with the Intel Flex 140/170 GPUs for a VDI node

@michaelp:

The rear fans are there to provide forced airflow through the add-in PCIe card. This server is not compatible with consumer cards that have video outputs. It’s for cards that have just passive flow-through cooling.

What bothers me about this server is the lack of BIOS updates. The version on Tyan’s site is over a year old, which means it has missed many critical security updates, some of which can only be applied through firmware updates and not through runtime-loadable microcode.

When I go to the Tyan site and search for S8030, I get this very nice if venerable board: https://www.tyan.com/Motherboards_S8030_S8030GM2NE.

Google, same.

This is a circa 2021 board that seems to be out of production.

That is the S8030 not the S8033

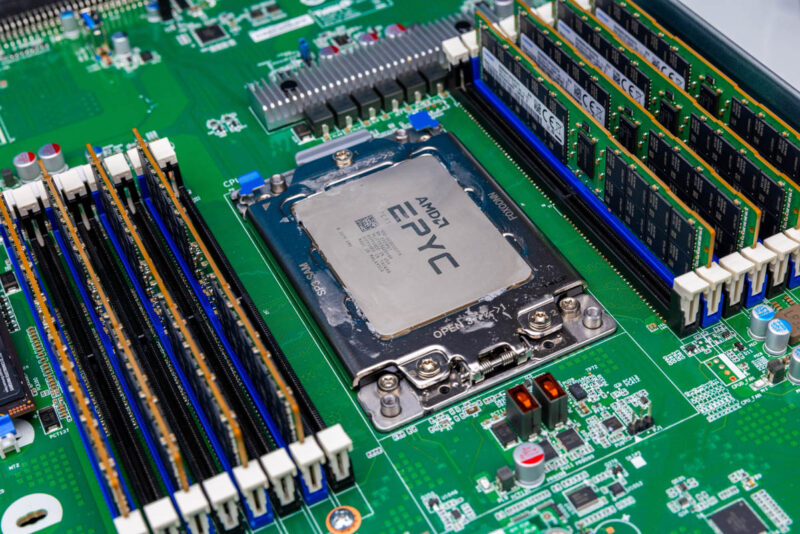

Neat seeing this thing out in the wild. This was a custom ODM project for Twitter. It looks very specialized to a very specific application because it was. This was designed as a frontend web server for Twitter and has absolutely nothing else added in. No backplanes because their frontend servers would never have more than four drives. Front I/O so it could support a 48Vdc busbar. But instead of having a 48Vdc power plane, it used CRPS edge connectors on the motherboard so it could still support CRPS power supplies for datacenters which were not getting 19″ busbar racks. Absolutely the cheapest design possible for the exact configuration required by the project. Nothing extra or unnecessary to add cost for a project which would have shipped around 100k-200k servers across a few vendors.

This was available from a few ODMs which dealt with Twitter at the time. The project had just about entered mass production stage. Then Musk bought Twitter and fired the entire server team. Then he stopped all payments for servers. Twitter’s ODMs collectively were stuck with tens of millions of dollars of dead inventory – custom servers, so custom that nobody else wanted them. Already built, ready to ship, suddenly without a buyer.

There’s loads of these sitting around a few ODMs’ warehouses collecting dust. Probably about to be dumped on the market for cheap before the Milan CPUs become too obsolete.

Tyan GC70-B8033

Tyan GC70-B8033: how is this server? What projects has it been used for before?