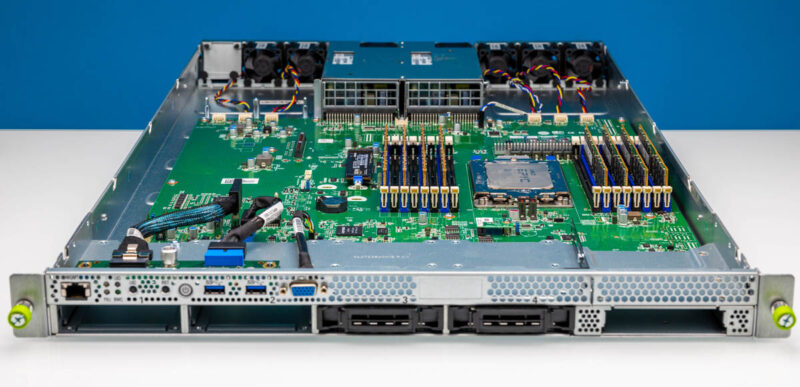

Tyan GC70-B8033 1U Internal Hardware Overview

Here is a quick overview of the system again to help orient you as we go through the system from front to back.

The motherboard inside is the Tyan S8033.

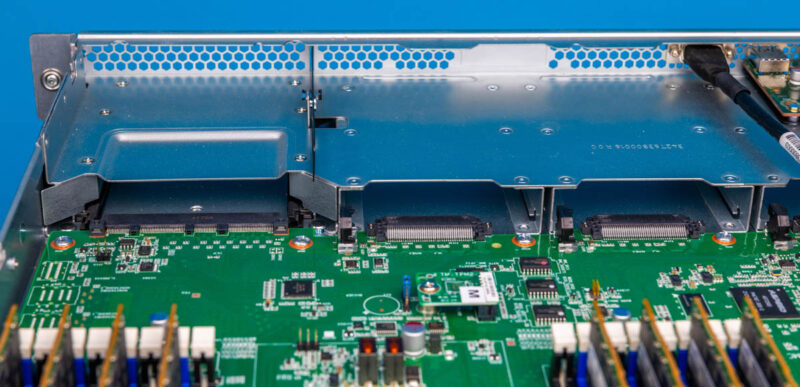

One of the most distinctive features here is that the front OCP NIC 3.0 slot and NVMe drive bays plug directly into the motherboard, skipping cabled backplanes.

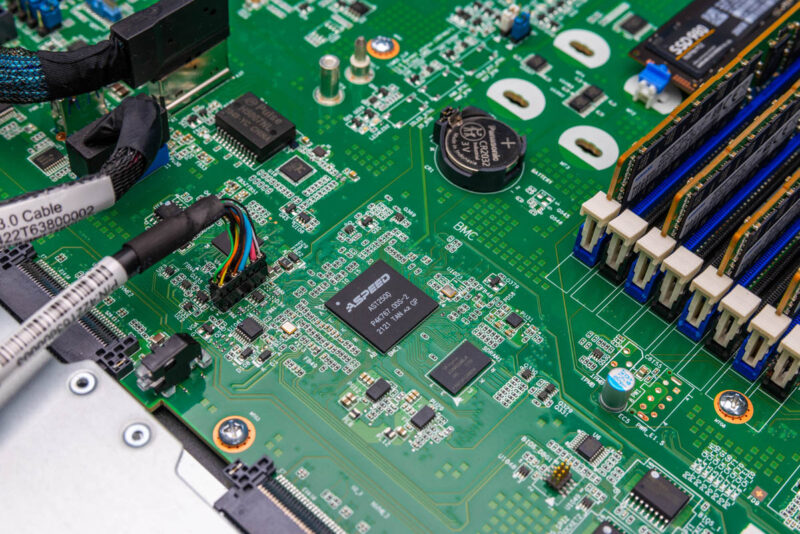

The motherboard uses the ASPEED AST2500 BMC.

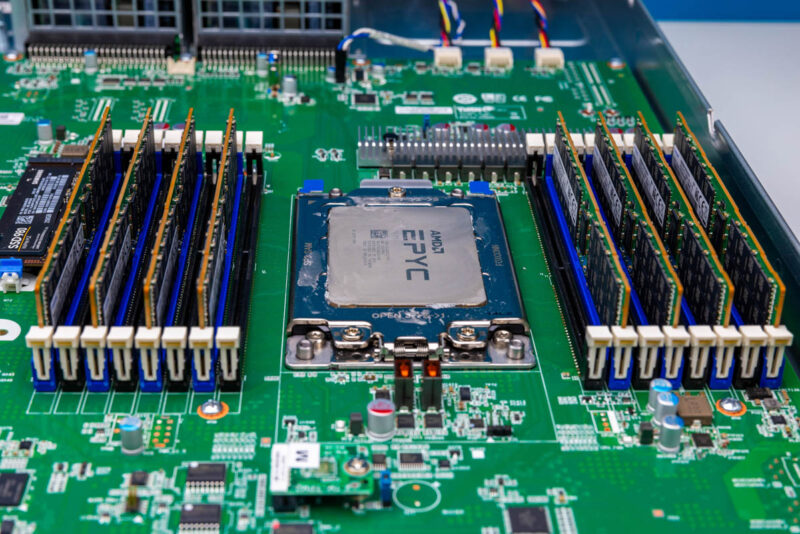

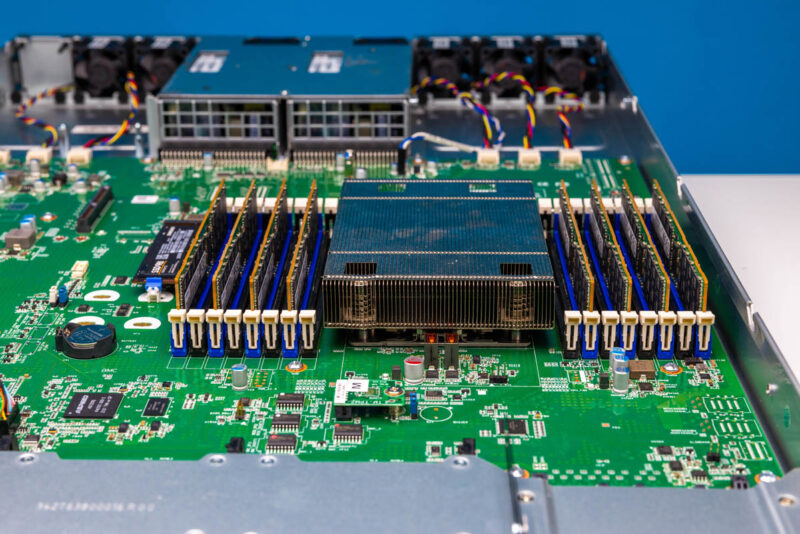

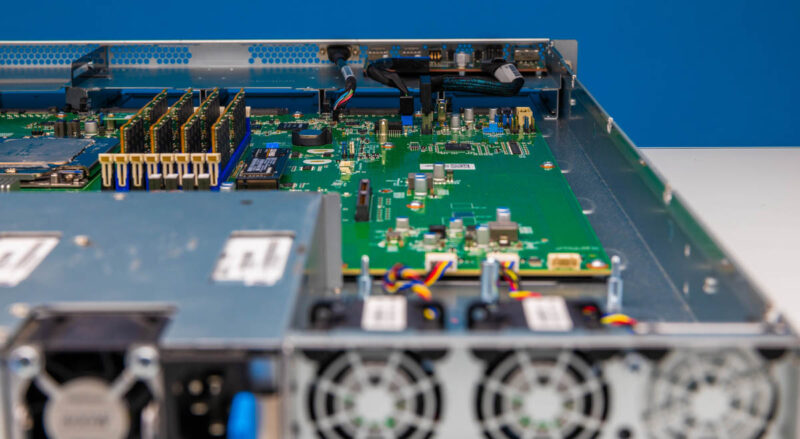

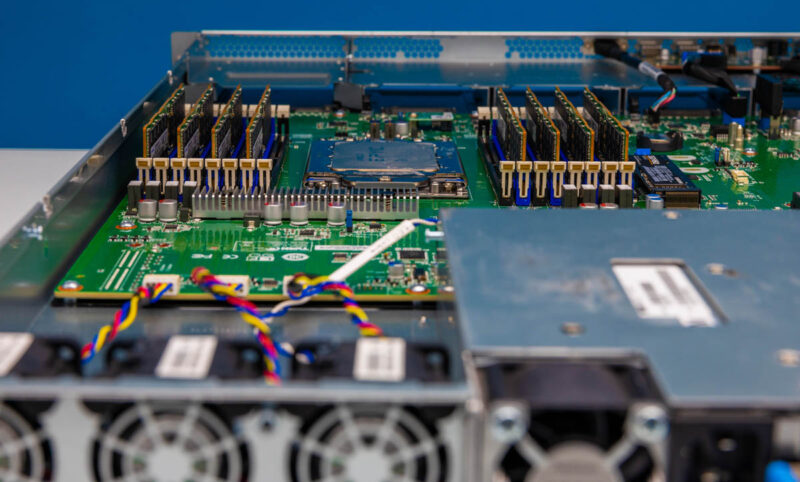

This is an AMD EPYC 7003 “Milan” platform. Our system came with the AMD EPYC 7C13 and a full set of 16 DIMM slots, since the CPU has eight memory channels and the board supports two DIMMs per channel (2DPC). While this is not the newest generation, it is a lower-cost option for something like a web server. We added 8x 64GB DDR4-3200 DIMMs for a neat 64-core/128-thread and 512GB memory configuration.
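For readers who want to sanity-check the memory numbers, here is a minimal sketch of the arithmetic. The `MemoryConfig` class and its field names are made up for illustration. The bandwidth figure is a theoretical peak that assumes one DIMM per channel, a 64-bit data bus per DDR4 channel, and DIMMs actually running at their rated DDR4-3200 speed. It is not a measured result from this system.

```python
from dataclasses import dataclass

# Platform figures taken from the text: 8 memory channels, 2 DIMMs per channel.
MEMORY_CHANNELS = 8
DIMMS_PER_CHANNEL = 2
# Assumption: each DDR4 channel moves 8 bytes (64 data bits) per transfer.
BYTES_PER_TRANSFER = 8


@dataclass
class MemoryConfig:
    """Hypothetical helper describing a DIMM population for this board."""
    dimm_count: int
    dimm_capacity_gb: int
    transfer_rate_mts: int  # e.g. 3200 for DDR4-3200

    @property
    def total_slots(self) -> int:
        return MEMORY_CHANNELS * DIMMS_PER_CHANNEL

    @property
    def capacity_gb(self) -> int:
        return self.dimm_count * self.dimm_capacity_gb

    @property
    def populated_channels(self) -> int:
        # Assumption: DIMMs are spread one per channel before doubling up.
        return min(self.dimm_count, MEMORY_CHANNELS)

    @property
    def peak_bandwidth_gbs(self) -> float:
        # Theoretical peak in GB/s: channels * MT/s * bytes per transfer / 1000.
        return (self.populated_channels * self.transfer_rate_mts
                * BYTES_PER_TRANSFER / 1000)


review_config = MemoryConfig(dimm_count=8, dimm_capacity_gb=64,
                             transfer_rate_mts=3200)
print(review_config.total_slots, "slots,",
      review_config.capacity_gb, "GB,",
      review_config.peak_bandwidth_gbs, "GB/s theoretical peak")
```

With eight DIMMs, every channel is populated while half of the 16 slots stay open for a later capacity upgrade.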

Atop the SP3 socket sits a 1U heatsink.

There is then a shroud that helps pull air through the channel to the side of the PSUs with three fans. The shroud is hard plastic and very easy to place.

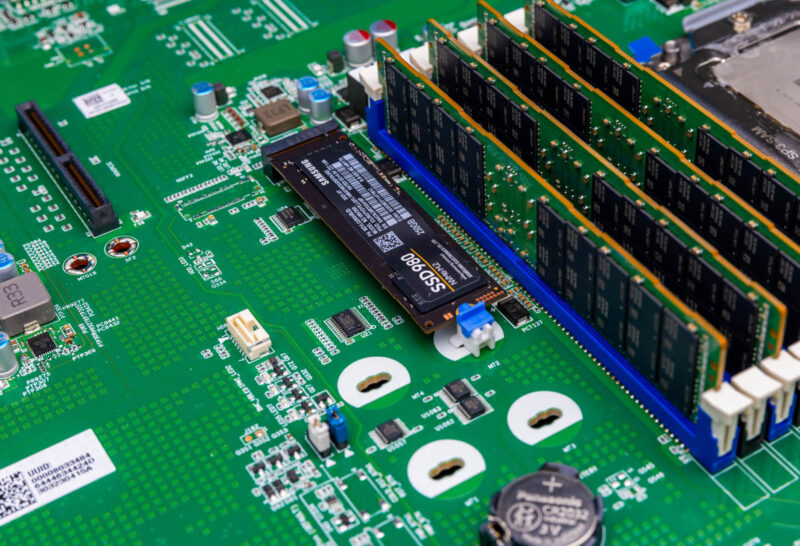

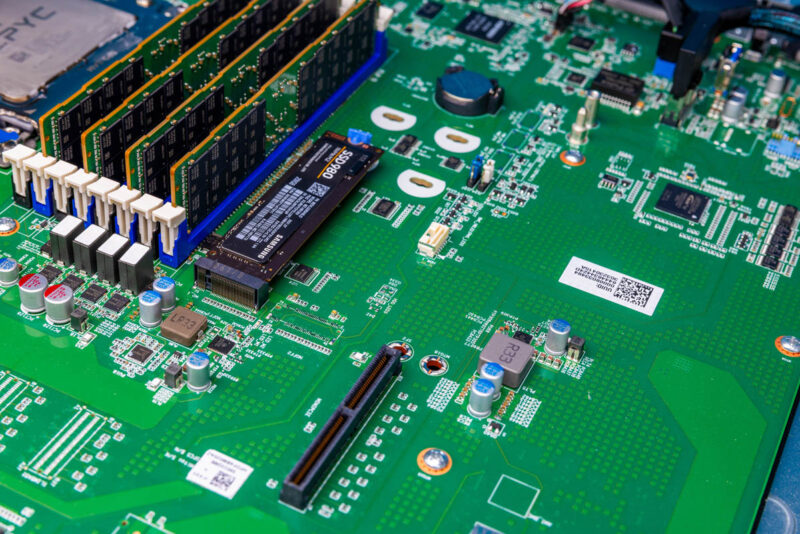

Next to the CPU and memory is the internal M.2 storage. This server has a single M.2 slot with a tool-less retention mechanism that can be placed at either the 80mm or 110mm position. That means it can support M.2 2280 drives like this Samsung or longer M.2 22110 drives.
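As a quick aside on the naming, an M.2 size code is the card width in millimeters followed by its length, so 2280 is 22mm x 80mm and 22110 is 22mm x 110mm. The sketch below decodes a size code and checks it against the two retention positions mentioned above. The function names are hypothetical, and only the 80mm and 110mm positions described here are modeled.

```python
from typing import Tuple

# Retention positions described for this slot, in millimeters.
SUPPORTED_LENGTHS_MM = (80, 110)
# Width of the M.2 cards discussed here (the "22" in 2280 and 22110).
SLOT_WIDTH_MM = 22


def parse_m2_size(code: str) -> Tuple[int, int]:
    """Split a size code such as '2280' or '22110' into (width_mm, length_mm)."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"unrecognized M.2 size code: {code!r}")
    # The first two digits are the width; the remaining digits are the length.
    return int(code[:2]), int(code[2:])


def fits_slot(code: str) -> bool:
    """True if the drive matches the slot width and a retention position."""
    width_mm, length_mm = parse_m2_size(code)
    return width_mm == SLOT_WIDTH_MM and length_mm in SUPPORTED_LENGTHS_MM


for size in ("2280", "22110", "2242"):
    print(size, parse_m2_size(size), fits_slot(size))
```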

One will also likely notice that there are pads and stand-off cutouts for a second M.2 slot that are unpopulated in our system.

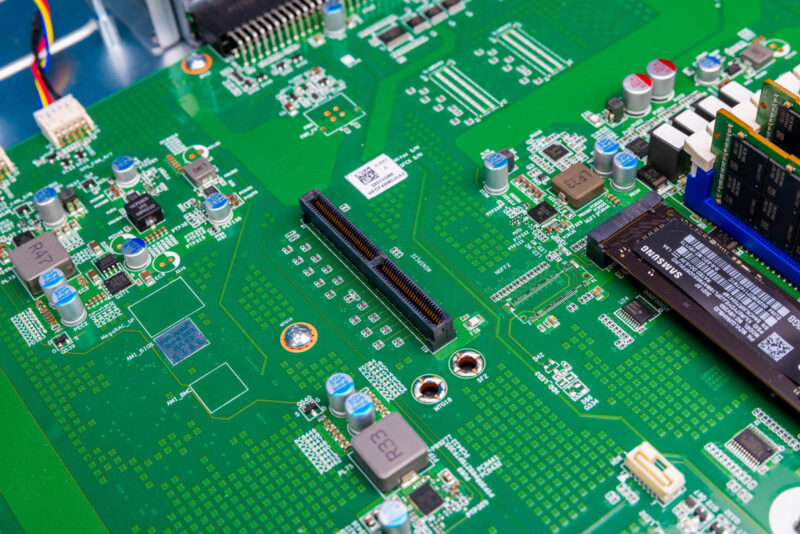

The left rear of the motherboard has a seemingly random slot. This slot is for an optional PCIe riser that can support a GPU like an NVIDIA A10. We do not have the riser, but that is what this is for.

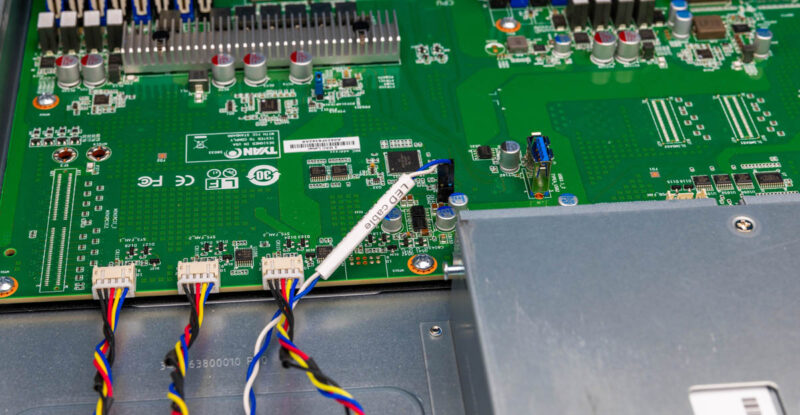

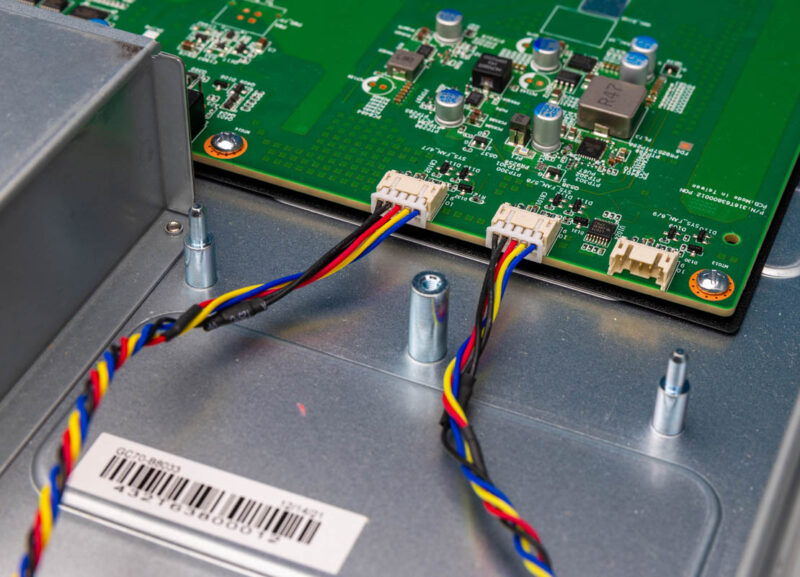

In the rear of the motherboard, we get edge fan connectors and an internal USB 3 Type-A port.

On the other edge of the motherboard, we get more fan headers with one unoccupied because we do not have the 6th fan module populated. The metal stand-offs are there for the PCIe riser.

At this point, you will probably have noticed another neat feature of this platform. While there is plenty of space to route cables between the front and the rear of the chassis, there are none running through the sides.

That makes this server unique compared to many that we review. It also makes service on this server ridiculously easy.

Next, let us get to the block diagram and topology.

There’ll be a market for cheap Milan + NVMe storage. This with 8-10 NVMe, 1 PCIe NIC, 1 OCP NIC, and 2 M.2.

Milan with cheap DDR4, cheaper PCIe 4.0, is better than newer. Not all DC SSDs are even Gen 5.0 yet, so why pay for a more expensive mobo and cables for storage?

Maybe I’m missing something but the rear fans seem to be in the way of any external ports on the PCI-e devices.

Would make for a great affordable SME all-flash HCI node:

2x m.2 mirrored boot SSDs

4x NVMe for all-flash storage

dual/quad 25 or dual 100GbE OCP NIC

lots of cores and sufficient RAM for VMs

maybe an Intel QuickAssist add-in-card in the PCIe riser. (no outputs required)

or with the Intel Flex 140/170 GPUs for a VDI node

@michaelp:

The rear fans are there to provide forced airflow through the add-in PCIe card. This server is not compatible with consumer cards that have video outputs. It’s for cards that have just passive flow-through cooling.

What bothers me about this server is lack of BIOS updates. The version on Tyan’s site is over a year old which means it did not receive many critical security updates, some of which are only applicable by firmware updates and not runtime loadable microcode.

When I go to the Tyan site and search for S8030, I get this very nice, if venerable, board: https://www.tyan.com/Motherboards_S8030_S8030GM2NE.

Google, same.

This is a circa 2021 board that seems to be out of production.

That is the S8030, not the S8033.

Neat seeing this thing out in the wild. This was a custom ODM project for Twitter. It looks very specialized to a very specific application because it was. This was designed as a frontend web server for Twitter and has absolutely nothing else added in. No backplanes because their frontend servers would never have more than four drives. Front I/O so it could support a 48Vdc busbar. But instead of having a 48Vdc power plane, it used CRPS edge connectors on the motherboard so it could still support CRPS power supplies for datacenters which were not getting 19″ busbar racks. Absolutely cheapest design possible for the exact configuration required by the project. Nothing extra or unnecessary to add cost for a project which would have shipped around 100k-200k servers across a few vendors.

This was available from a few ODMs which dealt with Twitter at the time. The project had just about entered mass production stage. Then Musk bought Twitter and fired the entire server team. Then he stopped all payments for servers. Twitter’s ODMs collectively were stuck with tens of millions of dollars of dead inventory – custom servers, so custom that nobody else wanted them. Already built, ready to ship, suddenly without a buyer.

There’s loads of these sitting around a few ODMs’ warehouses collecting dust. Probably about to be dumped on the market for cheap before the Milan CPUs become too obsolete.

Tyan GC70-B8033

Tyan GC70-B8033, how is this server? What projects has it been used for before?