In our Tyan GC70-B8033 review, we are going to take a quick look at this 1U server. It is not the latest Genoa generation. Instead, it is designed to be a lower-cost 1U platform for the AMD EPYC 7003 "Milan" generation. As a single-socket system, we thought this would be an interesting one for folks to see. The actual model number of the system we are reviewing is the Tyan BS8033G70E2HR-C. There is a lot that is different here from a standard 1U server, so we thought it would be a great opportunity to show folks some of the features of a front I/O server. You may have already seen this system on STH in our AMD EPYC 7C13 review.

Tyan GC70-B8033 1U External Hardware Overview

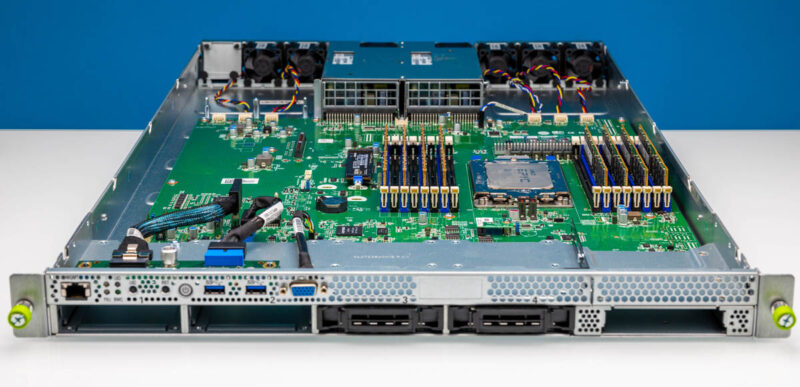

Taking a look at the front of the server, it is fairly clear that this is not your average enterprise 1U server. Instead, this is where everything serviceable, aside from the power supplies and fans, is located.

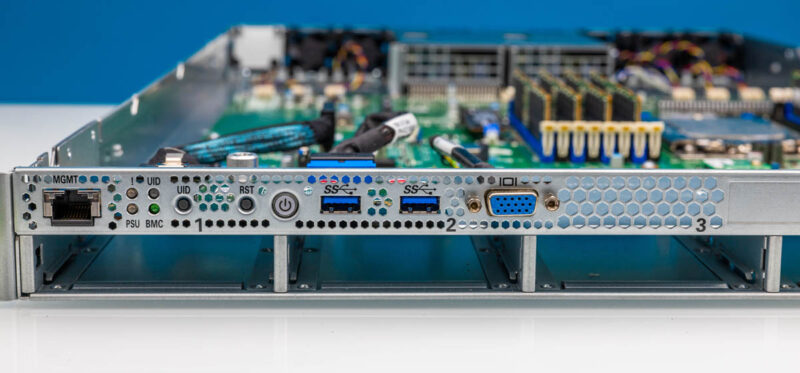

That includes more than just the power button and status LEDs. We also get the management I/O block on the front, with the out-of-band management port, two USB 3 ports, and a VGA port.

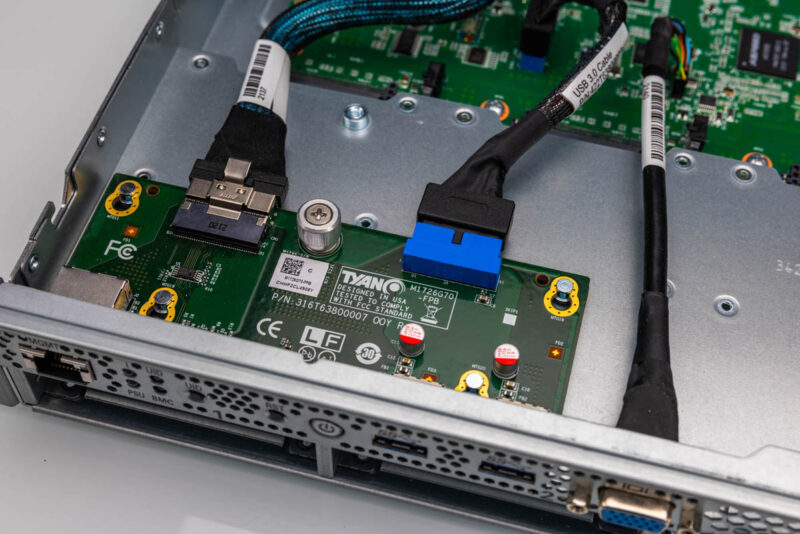

That functionality is located on a small board above the drive bays. Notice that the I/O board is held in place by slotted standoffs and a single thumbscrew for serviceability. The VGA port skips this board and is instead cabled directly to the motherboard.

The drive bays are different as well. Instead of having a cabled storage backplane, the 2.5″ drive bays plug into connectors at the edge of the motherboard. This is very different from your average server.

The drive trays we received are Tyan’s tool-less model. You open the side latch and slide the drive in.

Once you do that, the drive is locked in place. Above is an Intel Optane 1.5TB drive with the latch open. Below is the Kioxia CM5 drive with it closed.

Our system came with only two trays and two blanks, but because it uses Tyan's standard tool-less 2.5″ drive tray design, we were able to source two additional trays. Bonus points here: we were also able to get trays with different color tabs for the different drive types.

The four 2.5″ drive bays are not the only edge I/O on the motherboard. There is also an OCP NIC 3.0 slot on the front that is directly plugged into the motherboard.

Tyan is using the SFF with Pull Tab OCP NIC 3.0 form factor. This is our preferred form factor, allowing for the most accessible service. To learn more about the differences, see our OCP NIC 3.0 form factor guide.

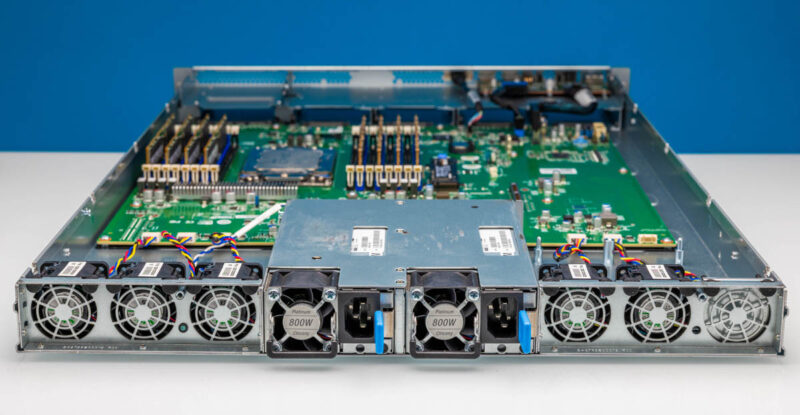

Looking at the rear of the system, one can see that there are a lot of fans.

Our system came with two 80Plus Platinum-rated 800W AC power supplies. Another option for this system is DC power via a busbar at the rear. That design is why the power supplies sit in the center and all of the I/O and other serviceable items, apart from the power supplies and fans, are at the front.

Next, let us get inside the server to see what it has in store for us.

There'll be a market for cheap Milan + NVMe storage: something like this with 8-10 NVMe drives, one PCIe NIC, one OCP NIC, and two M.2 slots.

Milan, with cheap DDR4 and cheaper PCIe 4.0, is a better deal than the newer platforms. Not all DC SSDs are even PCIe Gen 5 yet, so why pay for a more expensive motherboard and cables for storage?

Maybe I'm missing something, but the rear fans seem to be in the way of any external ports on the PCIe devices.

Would make for a great affordable SME all-flash HCI node:

- 2x M.2 mirrored boot SSDs
- 4x NVMe for all-flash storage
- dual/quad 25GbE or dual 100GbE OCP NIC
- lots of cores and sufficient RAM for VMs
- maybe an Intel QuickAssist add-in card in the PCIe riser (no outputs required)

Or with the Intel Flex 140/170 GPUs for a VDI node.

@michaelp:

The rear fans are there to provide forced airflow through the add-in PCIe card. This server is not compatible with consumer cards that have video outputs. It’s for cards that have just passive flow-through cooling.

What bothers me about this server is the lack of BIOS updates. The version on Tyan's site is over a year old, which means it has not received many critical security updates, some of which can only be applied through firmware updates and not through runtime-loadable microcode.

When I go to the Tyan site and search for S8030, I get this very nice, if venerable, board: https://www.tyan.com/Motherboards_S8030_S8030GM2NE.

Google, same.

This is a circa 2021 board that seems to be out of production.

That is the S8030, not the S8033.

Neat seeing this thing out in the wild. This was a custom ODM project for Twitter. It looks very specialized to a very specific application because it was. It was designed as a frontend web server for Twitter, with absolutely nothing else added. There are no backplanes because Twitter's frontend servers would never have more than four drives. It has front I/O so it could support a 48Vdc busbar. But instead of having a 48Vdc power plane, it used CRPS edge connectors on the motherboard so it could still take CRPS power supplies in datacenters that were not getting 19″ busbar racks. It was the absolute cheapest design possible for the exact configuration the project required, with nothing extra or unnecessary to add cost on a project that would have shipped around 100k-200k servers across a few vendors.

This was available from a few ODMs that dealt with Twitter at the time. The project had just about entered the mass production stage. Then Musk bought Twitter and fired the entire server team. Then he stopped all payments for servers. Twitter's ODMs were collectively stuck with tens of millions of dollars of dead inventory: custom servers, so custom that nobody else wanted them. Already built, ready to ship, and suddenly without a buyer.

There are loads of these sitting around in a few ODMs' warehouses collecting dust. They are probably about to be dumped on the market for cheap before the Milan CPUs become too obsolete.

Tyan GC70-B8033

Tyan GC70-B8033: how is this server? What projects has it been used for before?