Heading into the week of Flash Memory Summit 2017, we wanted to highlight a few key trends that we expect to see at this year’s show. There are going to be a ton of announcements and, let us face it, you are busy and need a summary, especially as many of the technologies announced this week will be years away from shipping in a product. Here is the preview summary.

New Form Factors

It is no secret in the industry that the popular M.2 2280 form factor, and even 22110, are not the best form factors for the data center. Vendors see them as too small to put enough NAND behind a controller. When a goal is to minimize controller costs, bigger form factors are better. U.2 has been the traditional answer, but U.2 drives take up significant space in a server.

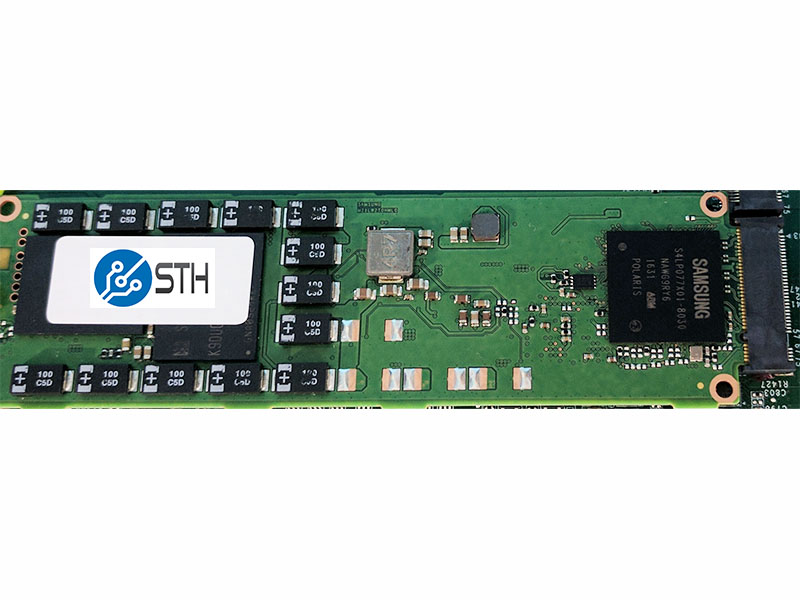

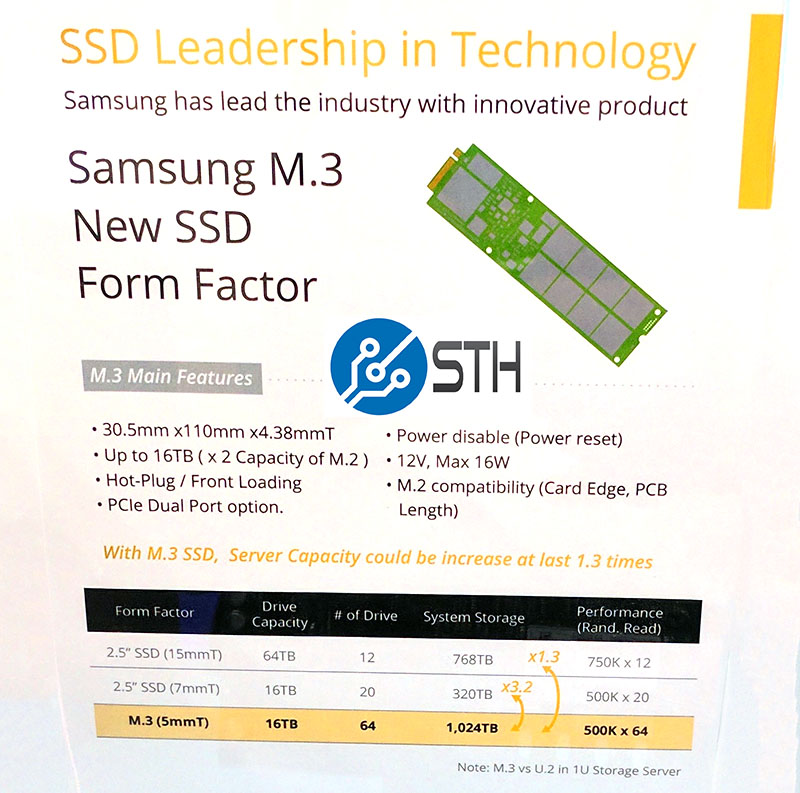

A few months ago, STH showed that Samsung is pushing an “M.3” standard, which allows for power loss protection circuitry as well as larger capacities than traditional M.2 SSDs. At that time, Samsung was already touting 16TB M.3 devices.

While that may be the Samsung view, we expect other vendors to start addressing this trend at FMS 2017.

NVMe over Fabrics

This is going to be, by far, the hottest trend at FMS 2017. Name a vendor and we expect them to be involved with at least one NVMe over Fabrics demo at the show. The reason this is hot has more to do with the networking side. As SFP28 and QSFP28 networking bring 25/50/100GbE alongside legacy 40GbE and InfiniBand/Omni-Path, the groundwork is there to start using NVMe devices over fabrics. Remember, most of today’s NVMe SSDs are PCIe 3.0 x4 devices, so networking is starting to catch up with the maximum throughput of a single drive.
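To put the x4 point in rough numbers, here is a minimal back-of-the-envelope sketch. It assumes PCIe 3.0 signaling (8 GT/s per lane with 128b/130b encoding) and compares raw line rates only, ignoring packet and protocol overhead on both the PCIe and network sides. The function and variable names are ours, chosen for illustration.

```python
# Rough line-rate comparison; ignores protocol overhead on both sides.
PCIE3_GT_PER_S = 8.0          # PCIe 3.0 raw rate per lane, in GT/s
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency

# Nominal Ethernet line rates in Gbit/s
NETWORK_LINKS_GBPS = {"25GbE": 25, "40GbE": 40, "50GbE": 50, "100GbE": 100}


def pcie3_gbps(lanes: int) -> float:
    """Approximate PCIe 3.0 bandwidth in Gbit/s for a given lane count."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes


def drives_per_link(link_gbps: float, lanes: int = 4) -> float:
    """How many NVMe drives' worth of PCIe bandwidth one link can carry."""
    return link_gbps / pcie3_gbps(lanes)


if __name__ == "__main__":
    print(f"PCIe 3.0 x4: {pcie3_gbps(4):.1f} Gbit/s")
    for name, gbps in NETWORK_LINKS_GBPS.items():
        print(f"{name}: {drives_per_link(gbps):.2f} x4 drives' worth")
```

By this estimate, a single 25GbE port falls short of one x4 drive, 40GbE just exceeds it, and a 100GbE port carries a little over three drives’ worth of bandwidth.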

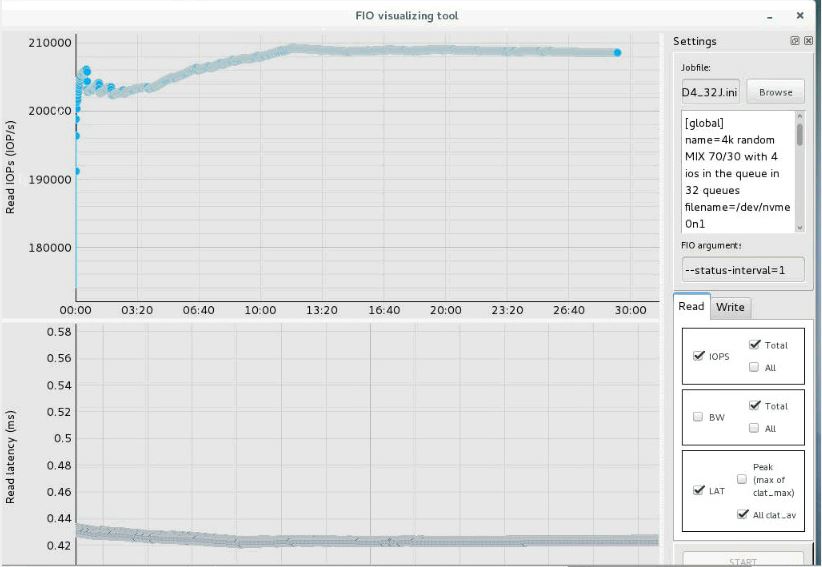

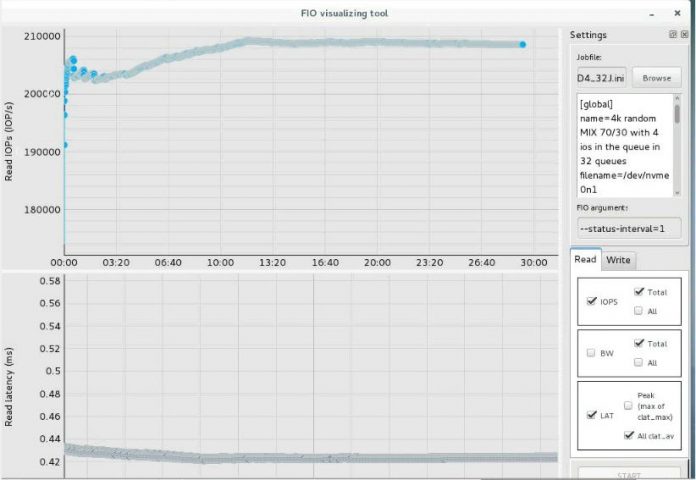

Above is an example of NVMe over Fabrics using Intel Omni-Path 100Gbps fabric and an Intel Optane PCIe SSD running 70/30 random 4K workloads over the fabric. There is surely more to tune; however, you can easily see the promise.

SATA Starts to Wane

Last year I asked several vendors when we were finally going to see the transition from SATA to PCIe. For those who are not aware, most numbers from analyst firms such as IDC still place SATA in the leadership role for data center SSDs. There are a few factors that are pushing PCIe and NVMe to the forefront this year.

First, we have just started a major platform refresh cycle. With the AMD EPYC and Intel Xeon Scalable processor launches, we have two new platforms that offer something that we have not seen since the Intel Xeon E5 V1 series: major new server re-designs.

These server re-designs mean more U.2, M.2 and emerging form factors are supported in servers. For example, Dell EMC is upping the number of NVMe drives supported in its PowerEdge 14th generation servers. As slots swap from SATA/SAS to PCIe, that will have a major impact on adoption figures.

JBOFs Get Cool!

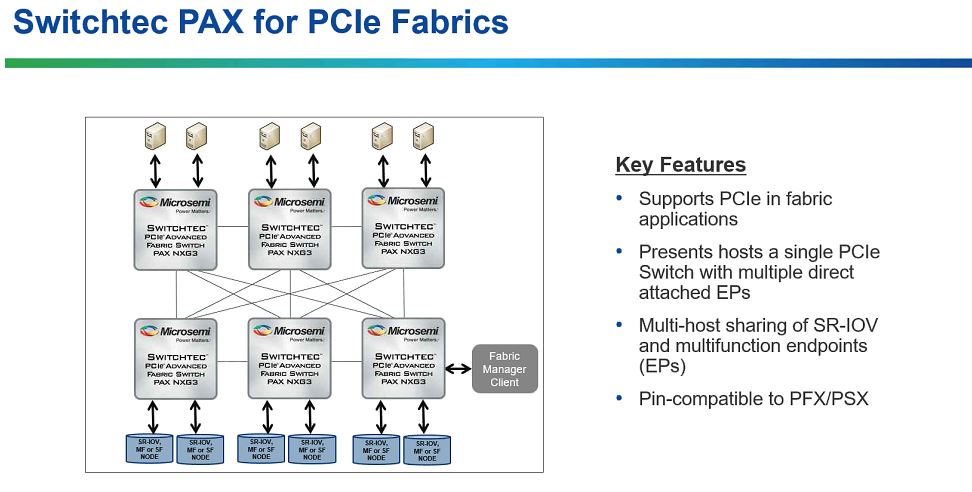

Just a bunch of flash (JBOF) is a term that many vendors do not like, but the new use case is really cool. What we are going to see at FMS 2017 is a number of JBOFs that support SR-IOV. By doing so, they can have multiple servers access the drives at any given time.

The impact of this is huge. When drives sit in a chassis, one has two options. They can be used locally, but with low utilization in terms of both performance and capacity. Alternatively, they can be part of a distributed storage system (vSAN, Ceph, GlusterFS), which adds a new layer of complexity. With the new JBOFs we are seeing, one can essentially connect a number of servers to a shared NVMe shelf. These servers can then access the shared shelf instead of using local storage.

Here is where the use case is interesting: shared chassis. If you have a 4-node 2U system or denser, you are trading storage capacity for compute density. Using JBOFs and PCIe switches, you can add more NVMe storage and get higher utilization. NVMe flash is a major cost driver, so optimizing its use is another step in composable infrastructure.

New Solid State Storage

There are going to be a number of new storage technologies at FMS with two main themes. First, there are a number of technologies aimed at increasing density and capacity. Now that TLC has been adopted in the enterprise, vendors are hopeful QLC will be adopted quickly. With 3D NAND, vendors feel they can bring to market a solution that meets the need to store data on SSDs instead of hard drives. There are a large number of workloads that are heavily read focused, and for those workloads, QLC is going to be the next step.

At the other end of the spectrum, the high-performance side is completely bound by the PCIe 3.0 x4 bus common on traditional NVMe form factors. One answer is to move to higher-bandwidth PCIe 3.0 x8 or x16 form factors for NVMe drives. The next generation is moving to PCIe 4.0 to get more bandwidth per lane. The fact that neither AMD nor Intel adopted PCIe 4.0 in their mid-2017 generation products means that PCIe 4.0 devices are going to be focused on more niche platforms. One example will be SoCs that allow for PCIe 4.0 connections and NVMe over Fabrics without a traditional x86 server.
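As a rough illustration of those two paths (wider links versus a faster generation), the sketch below estimates one-direction bandwidth from the published per-lane signaling rates: 8 GT/s for PCIe 3.0 and 16 GT/s for PCIe 4.0, both with 128b/130b encoding. It ignores packet overhead, so real drives will land somewhat lower. The helper name is ours, used only for illustration.

```python
# Per-lane raw signaling rates in GT/s; both generations use 128b/130b encoding.
RAW_GT_PER_S = {"3.0": 8.0, "4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen: str, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before packet overhead."""
    return RAW_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


# Compare today's common x4 link against the wider and faster options.
for gen, lanes in [("3.0", 4), ("3.0", 8), ("3.0", 16), ("4.0", 4)]:
    print(f"PCIe {gen} x{lanes}: {pcie_gbytes_per_s(gen, lanes):.2f} GB/s")
```

Under these assumptions, a PCIe 4.0 x4 link offers about the same bandwidth as a PCIe 3.0 x8 link, which is why a new generation is attractive for the existing x4 form factors.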

Beyond the traditional form factors, the next-generation storage in this space will be dominated by moving persistent storage closer to memory. Intel said its Optane DIMMs will be a 2018 product. Other technologies like NVDIMMs are being pushed heavily with OEMs such as Dell EMC and HPE. More exotic technologies will push these boundaries even further.

Final Words

FMS 2017 has some cool new technologies, but there are a few “key themes,” as Patrick calls them. One segment of the market is driving toward replacing the next tier of disks as primary storage. That entails lower write endurance, lower cost per byte and higher densities. We are going to see mainstream SATA SSDs surpass HDD capacities, not just futuristic halo products. That also means using NVMe in a more cost-effective manner, either through JBOFs or NVMe over Fabrics. Another segment is aggressively pursuing RAM and trying to add a high capacity/lower latency tier just below RAM. Finally, there is going to be the natural evolution of the status quo for mainstream SAS, SATA and NVMe, with the transition to NVMe taking hold as we move toward next-gen system platforms.

Great article, just a note: “A few months ago, Samsung was already touting 16GB m.3 devices.” should be 16TB

Fixed for you Cliff. Thanks!

And I think we’re now reaching a point where the limit is more in the CPU than in the storage, at least in x86. Intel tries to upsell customers who need more lanes by de-tuning its lower tier models, and AMD is as of yet incapable of putting more than 8 cores on a single die and connecting a significant number of lanes to it. PCIe 4.0 will also take a lot longer to be adopted unless Intel and AMD are willing to change platforms with the next generation.

This may be a great niche for other CPU architectures: imagine having 64 PCIe lanes and a high-speed interconnect off of one SoC.