At STH, we review servers, storage, and networking gear. That gear usually resides in data centers. Although we have several facilities we use, a common challenge is that most facilities do not allow filming or photography in them. Over the pandemic, we have been working on a solution. Luckily, we were able to get a tour of the PhoenixNAP data center in Phoenix, Arizona.

Video Version

A quick note here: to get the data center tour, PhoenixNAP sponsored both my and Joe’s travel down to Arizona. That also allowed us to have Joe film in the data center so we could get a video of our tour as well. PhoenixNAP did not get to see this article/video before it was released and allowed us to create editorially independent work. We did get special access to the data center since normally photography and filming are not allowed. Speaking of the video version, you can check this out here:

As always we suggest opening the video in a YouTube tab or window. This is a case where we were able to film a lot more than we can share photos of below.

PhoenixNAP, for those who are unaware, is not just a colocation data center. The Phoenix facility also houses the company’s in-house hosting offerings that include dedicated servers as well as its bare metal cloud offering. The company also has facilities in places such as Ashburn, Atlanta, Amsterdam, and Belgrade, Serbia, but the Phoenix location is its namesake. In the video, we show off a few of the racks for its bare metal cloud offering, but we are primarily focusing on the facility itself from a colocation angle. Colocation is a big deal as it helps STH save an enormous amount of money (relative to our budgets) each month.

That is enough background, let us check out the facility.

PhoenixNAP Data Center Getting Inside

We wanted to show the facility’s front door from this angle because this photo captured an important aspect of the site. One can see the desert landscaping. That is important for PhoenixNAP. Phoenix has an extremely low risk of natural disasters such as earthquakes, tornados, hurricanes, and so forth. While it is an extra hop to Asia compared to Los Angeles, Phoenix is a lower-cost location for a data center, carries lower natural disaster risk, and is still a relatively large US city. Getting to the site was extremely simple as it took us under 10 minutes to get from the PHX airport terminal to the data center.

In front of the main entryway, pillars are installed to prevent vehicles from driving into the security office. That is the first level of security. As with many data centers, there is both a badging system and an intercom/buzzer to the security office to get into the lobby.

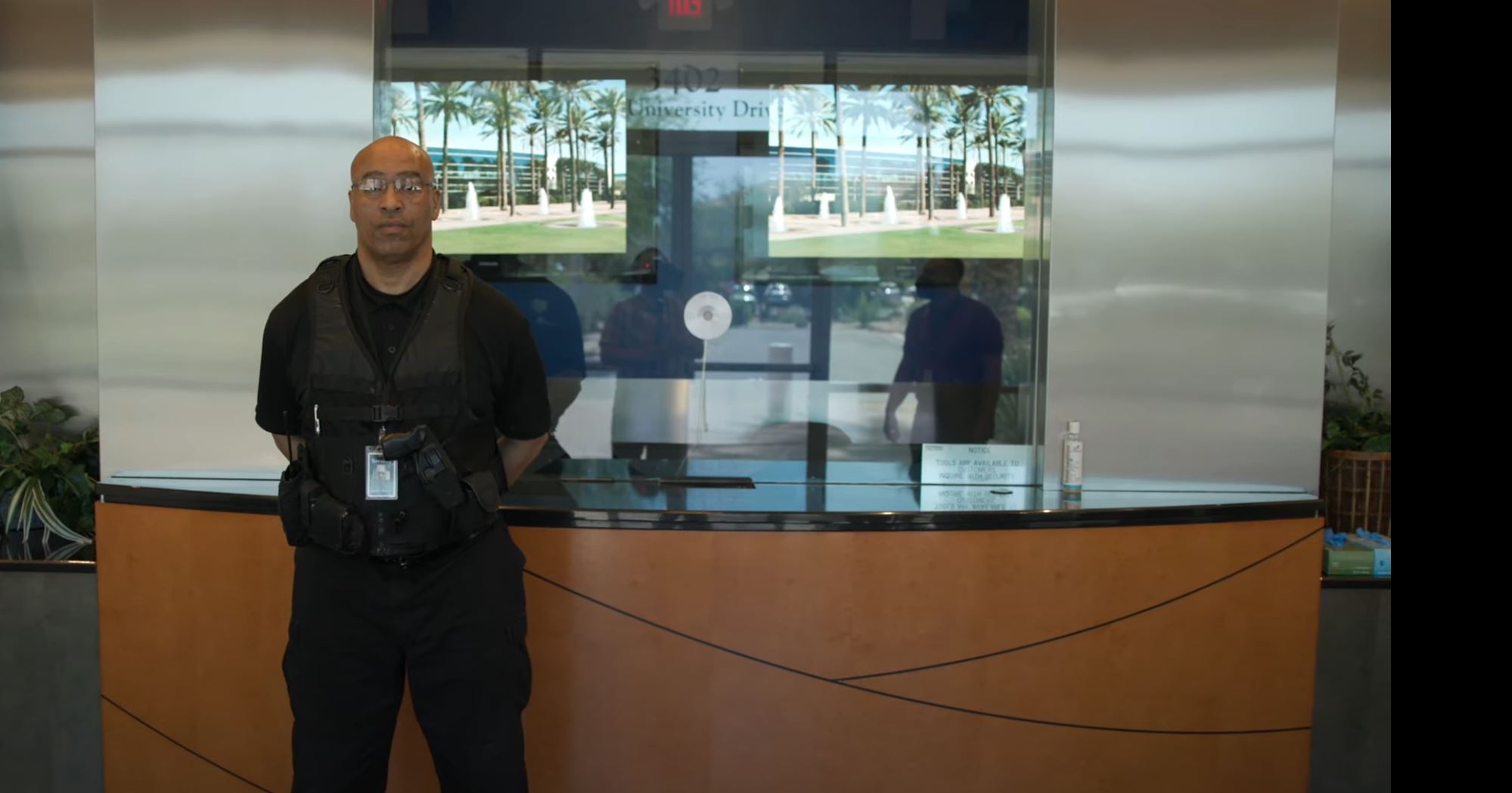

Once inside, there is a security guard who sits inside a reinforced booth with bulletproof glass. James, the security guard, came out to take a quick photo but then returned to his station while another security guard was doing rounds. One typically badges through the next set of doors. Since we were guests, we had to get guest badges and use them at the various doors we passed through.

Getting past the security booth was only the first step. This effectively only allows access to the hallways with the restrooms as well as other areas that primarily require badge-only access (e.g. the office space for PhoenixNAP employees and customers).

Here we could see the NOC room, although most folks were working remotely due to the COVID-19 pandemic. Just after we did our tour, Phoenix relaxed its mask requirements significantly for those vaccinated, but we should put into context that we toured the facility during somewhat unusual times.

Something else we wanted to point out, but are not going to show, is that the facility has a relatively large number of cubicles, offices, and conference rooms on-site, along with a very large cafeteria area. We have been in facilities with 4-6 cubes/offices for customer use and small closet-size break rooms. Here, an entire section of the building is dedicated to this. That is important since PhoenixNAP has a number of customers that deploy employees on-site for extended periods of time in addition to the normal traffic from folks working on infrastructure.

Getting to the data center floor requires going through a standard anti-tailgating “man trap”, then into a room where one authenticates with an iris scan, PIN, and badge to get into the first data center hallway.

Next, let us get into connectivity since that is a major story with this facility.

STH “hey we’re going to do something new”

Me reading: “holy moly they’ve done one of the best tours I’ve seen in their first tour.”

Fantastic article and especially video. Plenty of good practices to learn from. I’m surprised they signed off on this, if I was a customer I wouldn’t be too happy. But a fascinating look into a world that is usually off-limits.

Should have said hi! I’ve got 30 racks in pnap.

We manage ~3000 servers at many facilities. I’ll say from a customer perspective I don’t mind this level of tour. I don’t want someone inventorying our boxes but this isn’t a big deal. They’re showing racks, connectivity, hallways, cooling and power. The only other one I’d really be sensitive about is if they took video inside the office an on-site tech uses because there may be whiteboards or papers showing confidential bits. We use Supermicro and Dell servers. No big secret.

I just semi-retired from a job of 9 years supporting 100+ quants, ~5K servers in a small/medium-sized financial firm’s primary data center, in the suburbs of a major US city.

I gave tours every summer for the city-based staff…They had no idea of the amount of physical/analog gear required to deliver their nice digital world.

…The funniest part was that each quant had their own server, which they used to run jobs locally and send jobs into the main cluster…They always wanted to see THEIR server!

Always neat to see another data center and it reminds me of the time I worked in one a decade ago.

I am surprised by the lack of solar panels on the roof or other infrastructure areas outdoors. It is a bit of an investment to build out but helps in efficiency a bit. In addition it can assist when the facility is on UPS power and needs to transition to generator during the day. Generally this is seen as a win-win in most modern data centers. I wonder if there was some other reason for not retrofitting the facility with them?

The generators were mentioned briefly but I think an important point needs to be made: they are likely in an N+2 redundant configuration. Servicing them can easily take weeks, which is far too long to go without any sort of redundancy. Remember that redundancy is only good for when you have it and any loss needs to be corrected immediately before the next failure occurs. Most things can be resolved relatively quickly but generators are one that can take extended periods of time. This leads me to wonder why there are only two UPS battery banks. I would have thought there would be three or four with a similar level of redundancy as a whole and to increase the number of transfer switches (which themselves can be a point of failure) to ensure uptime. Perhaps their facility UPS is more ‘modular’ in that ‘cells’ can be replaced individually in the functional UPS without taking down the whole UPS?

While it is not explicitly stated as something data centers should have in their designs, keeping a perpetual rotation of buildout rooms has emerged in practice. This is all in the pursuit of increasing efficiency, which pays off every few generations. My former employer a decade ago had moved to enclosed hot/cold aisles to increase efficiency in the new buildout room. As client systems were updated, they went into the new room and eventually we’d have a void where there were no clients and the facilities teams would then tear the room down for the next wave of upgrades.

The security aspect is fun. Having someone, be it a guard or tech, watch over third parties working on racks is commonplace. The one thing to note is how difficult it is to get an external contractor in there (think Dell, HPE techs as part of a 4 hour SLA contract) vs. normal client staff to work on bare metal. Most colocations can do it but you have to work with them ahead of time to ensure it can happen in the time frame necessary to adhere to those contracts. (Dell/HPE etc. may not count the time it takes to get through security layers toward those SLA figures.)

There is a trend to upgrade CRAC systems to greater cooling capacity as compute density is increasing. 43 kW per rack is a good upper bound for most systems today but the extreme scenarios have 100 kW rack possibilities on the horizon. Again, circling back to the buildout room idea, perhaps it is time to migrate to water cooling at rack scale?

I probably couldn’t tell in the pictures nor in the video, but one of the wiser ideas I’ve seen done in a data center is to color code everything. Power has four dedicated colors for feeds plus a fifth color exclusively used for quick spares in emergencies, for example. All out-of-band management cables had their own dedicated colors vs. primary purposes. If a cable had an industry standard color (fiber), the tags on them were color coded on a per tenant basis for identification. Little things that go a long ways in service and maintainability.

Thank God STH did this. I was like oh that’s a security nightmare. You did a really good job showing a lot while not showing anything confidential.

What I’d like to know and see is how this compares to EU and Singapore. Are those big facilities or like a small cage in another DC? How is the network between them?

Equinix metal is getting a lot of wins.

STH is the perfect site for datacentre reviews. You’ve obviously been to others and you’re not old fogey boring like traditional datacentre sites.

Patrick,

If it’s possible, I suggest touring a SuperNap (Switch) facility if they’d allow for it.

That is one of the most amazing DCs I’ve ever been in. Their site does have some pics. It’s amazing.

Philip

In the video at 5 minutes 28 seconds you can see my network rack : )