At the ASUS booth during SC23, we saw a number of the company’s AI products. These included an NVIDIA HGX H100 system along with a PCIe GPU system. Since we are getting near the end of the year, we wanted to share them, and we have a small nugget for our STH regulars in this one as well.

Touring the AI Servers at the ASUS SC23 Booth

First, we saw the ASUS ESC N8-E11. This is ASUS’ NVIDIA HGX H100 8-GPU system for 4th and 5th Gen Intel Xeon Scalable processors. Perhaps more interesting was the ASUS ESC N8A-E12. This is a similar system, but it uses AMD EPYC 9004 series processors.

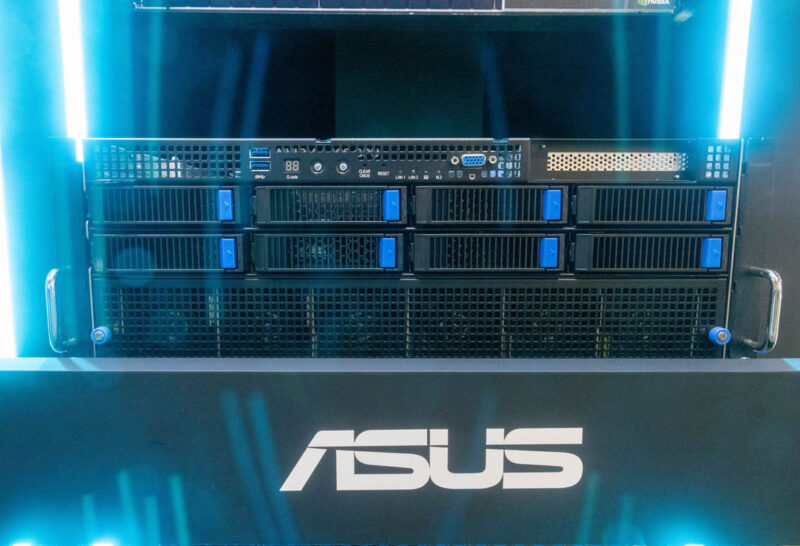

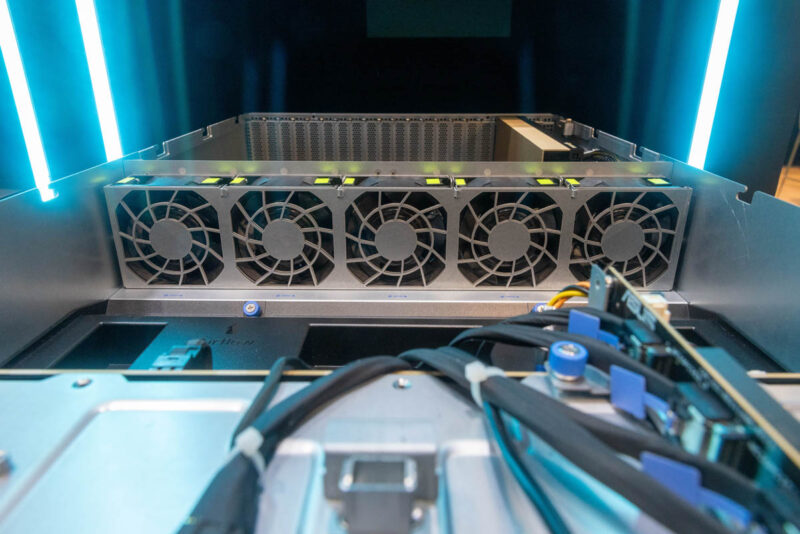

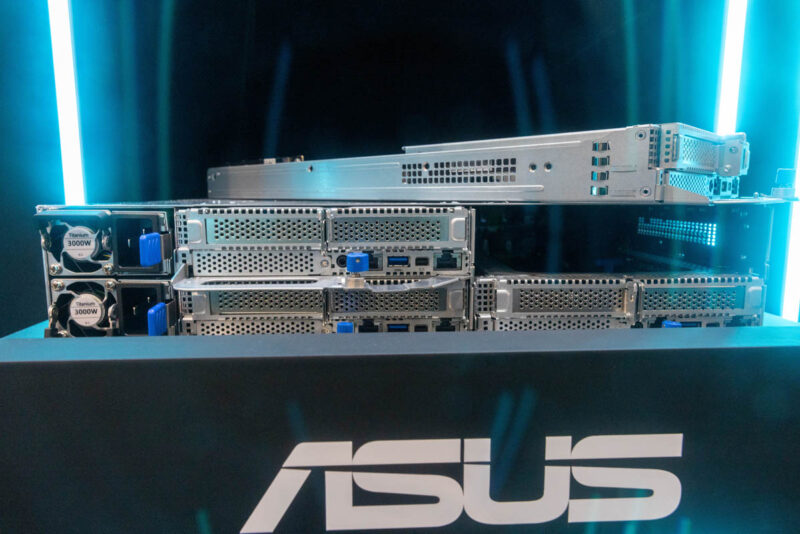

The front of the 7U system is dominated by fans and vents. Still, there is room for eight 2.5″ NVMe drives.

The front also has LAN ports, a management port, and a few fun features. There is a Q-Code LCD screen, which we have seen on several generations of ASUS systems. There are also four USB 3 ports. Many systems have only two, so this is perhaps the smallest feature, but one we thought worth pointing out.

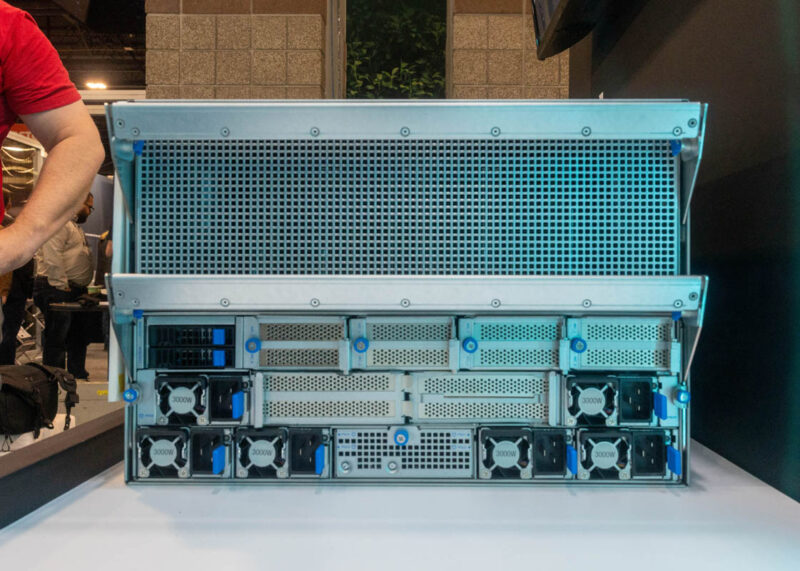

Here is the back of the system next to Patrick’s arm.

The bottom rear has a huge tray. Here we can see the two rear 2.5″ drive bays, PCIe expansion slots, and six 3kW PSUs.

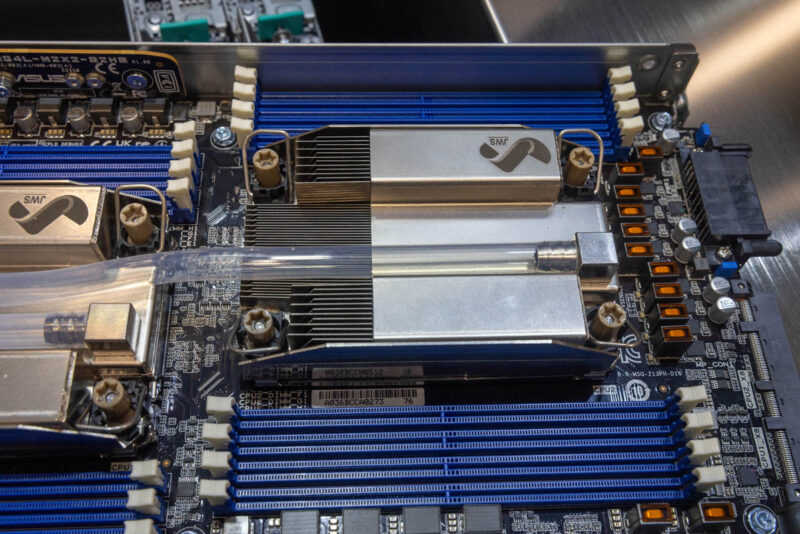

This bottom area is also where the motherboard, with the CPUs and memory, sits.

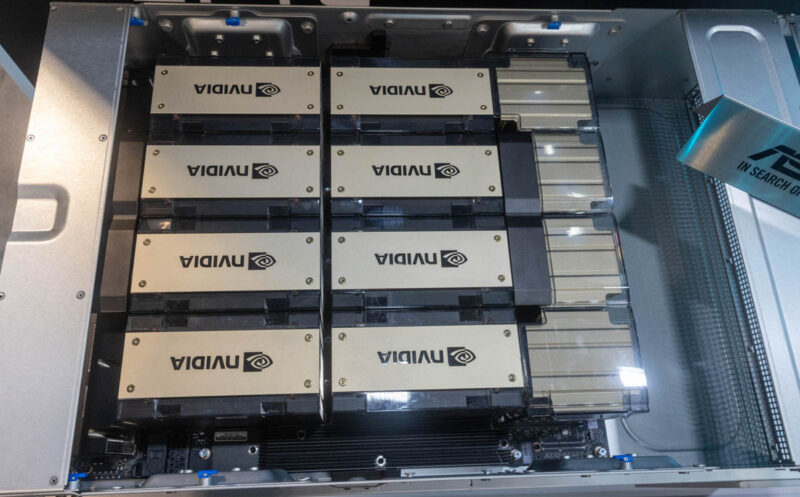

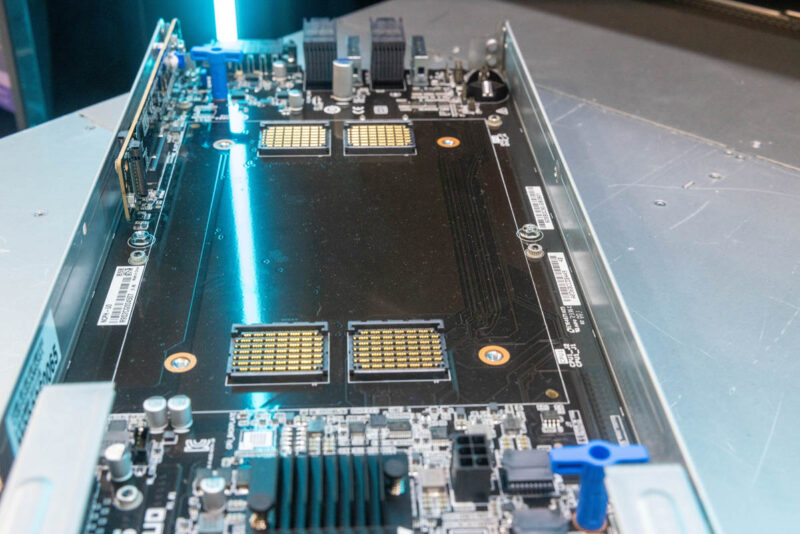

The top rear has the NVIDIA HGX H100 tray.

This is perhaps the biggest feature of these servers, since it is what is in such high demand these days.

The HGX H100 systems are always cool to see, but ASUS has more.

ASUS ESC8000-E11 PCIe GPU Server

ASUS also had one of its ESC8000-E11 PCIe GPU servers at the show.

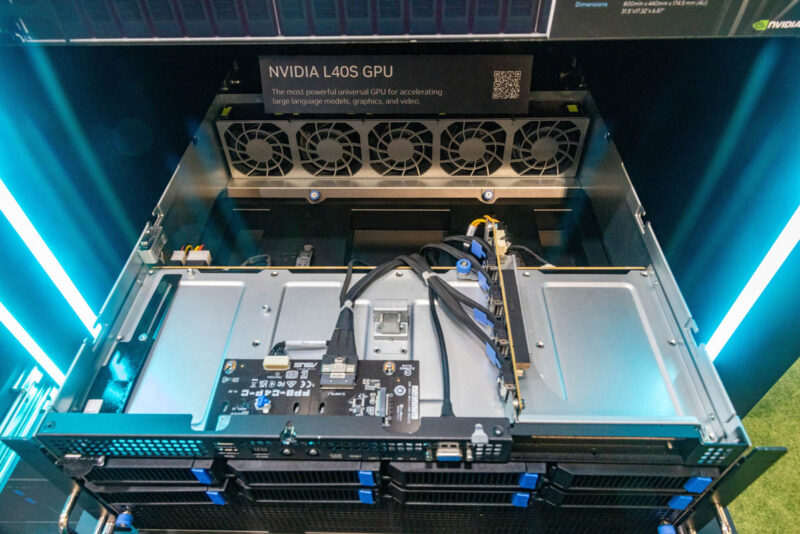

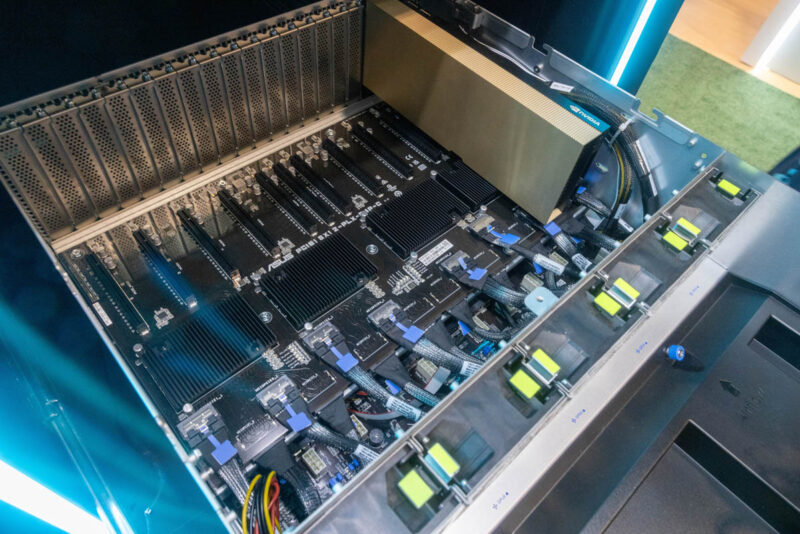

This is a fairly traditional PCIe GPU server design that is built to handle GPUs like the NVIDIA L40S and H100.

The ASUS design has midplane fans for the GPU area and front fans for the bottom CPU and memory area.

ASUS has a number of ESC8000 variants. Depending on the model, the GPU area uses direct cabled connections or PCIe Gen5 switches, and there are both Intel and AMD options, among other differences.

For many organizations, it is more cost-effective to use L40S servers than H100 servers, and availability is generally much better as well.

Liquid Cooling Coolness

Of course, ASUS had liquid cooling options. One of them was a direct-to-chip liquid cooling setup with a twist.

ASUS also had its immersion cooling tank on the show floor.

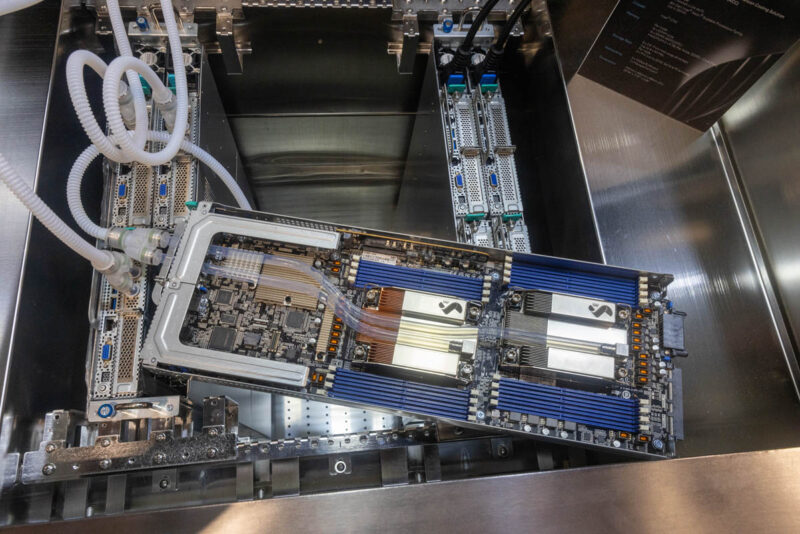

The surprise was that ASUS had a solution that combined the two: immersion fluid cools the lower-TDP components, while direct-to-chip liquid cooling handles the higher-TDP parts.

The advantage of this setup is that companies can get higher fluid flow rates over the high-TDP parts. Beyond that, there is no need to worry about fluid leaking out of the loops, since the components are already submerged in fluid. Fluid is pumped through the cold plates in only one direction because the plates are already surrounded by fluid.

ASUS RS720QN-E11-RS24U

Lastly, there was an NVIDIA Grace Superchip system. This node is designed to take a 144-core Grace Superchip module and also provide I/O for NICs and more.

The connector is for the Grace Superchip, not Grace Hopper. Grace Hopper is a 1kW part, so it would be tough to cool with air in a system like this.

That is because this is a 2U 4-node design.

With four 144-core Grace Superchip nodes, the system has a total of 576 Arm Neoverse V2 cores.

Final Words

Overall, ASUS had a lot at SC23. While the company had standard Intel Xeon and AMD EPYC servers, we thought we would focus on some of the accelerated platforms.

If you want a quick look at a few more views, here is a 1-minute short video of the ASUS displays at SC23. We found it on a great new YouTube channel.