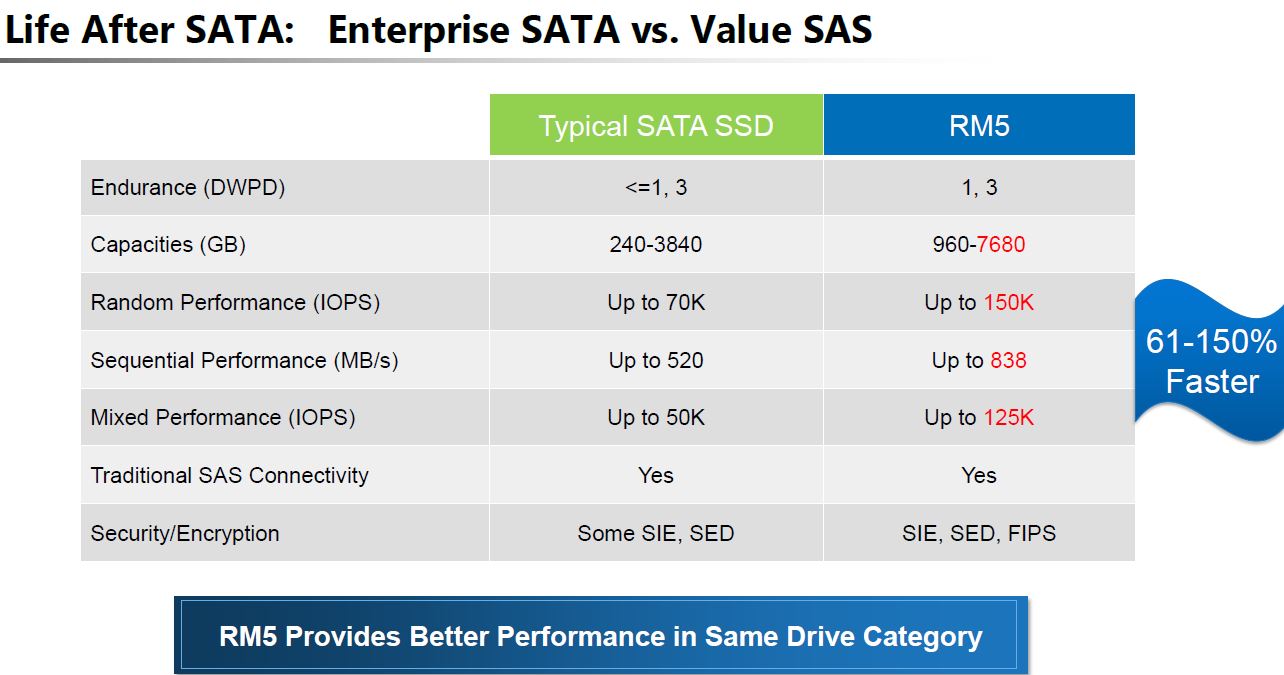

Recently we saw the announcement of the Toshiba Memory RM5 in HPE ProLiant servers. At Dell Technologies World, Dell EMC featured the Toshiba RM5 prominently in several servers, including the Dell EMC PowerEdge MX and Dell EMC PowerEdge R740xd. Toshiba has an intriguing value proposition for customers who use direct-attached storage in their compute nodes.

Toshiba Memory RM5 Value SAS in Dell EMC PowerEdge

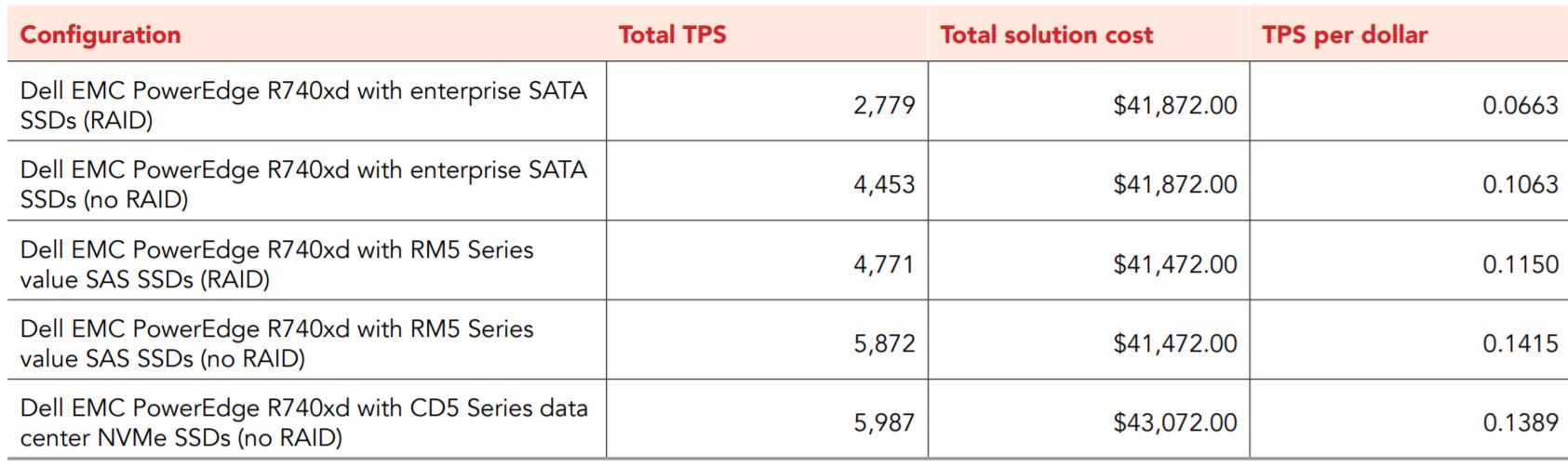

Toshiba Memory commissioned a study from Principled Technologies in which Dell EMC provided pricing for the same Dell EMC PowerEdge R740xd with various storage configurations. If you want to learn more about the server, see our Dell EMC PowerEdge R740xd Review. One interesting note is that in these configurations, the Toshiba Memory RM5 configuration was $400 less expensive than the “enterprise SATA” configuration.

We dug into the configuration specs (the source for the above table) and found that they used the Intel SSD D3-S4510, a 960GB 3D TLC NAND value drive, for enterprise SATA. We want to point out that this data comes from a PT report commissioned by Toshiba, so we would expect the chosen configurations to come out favorable to Toshiba.

We covered the value proposition of the Toshiba Memory RM5 at its launch. Essentially, Toshiba is banking on the fact that most Dell EMC servers, as well as those from other major vendors, come with dedicated SAS controllers and backplanes. Inside the server, these backplanes are single-port, which is one reason SATA drives have been popular.

With the Toshiba Memory RM5 value SAS product, Toshiba is exploiting the higher-end 12Gbps SAS3 protocol to deliver more performance and features than 6.0Gbps SATA III can offer.
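To put rough numbers on the interface gap, here is a minimal sketch that converts each link's line rate into usable bandwidth per direction. It assumes 8b/10b encoding on the wire (used by both SATA III and 12G SAS, so 10 line bits carry one data byte) and ignores frame and command overhead; the names `BITS_PER_BYTE_8B10B` and `link_mb_per_s` are our own, for illustration only.

```python
# Line bits needed to carry one data byte under 8b/10b encoding,
# which both SATA III (6 Gbps) and 12G SAS (SAS3) use on the wire.
BITS_PER_BYTE_8B10B = 10


def link_mb_per_s(line_rate_mbps: int) -> float:
    """Usable MB/s per direction, ignoring frame and command overhead."""
    return line_rate_mbps / BITS_PER_BYTE_8B10B


sata3 = link_mb_per_s(6_000)   # SATA III: 6.0 Gbps line rate
sas3 = link_mb_per_s(12_000)   # SAS3: 12 Gbps line rate

print(f"SATA III: {sata3:.0f} MB/s")     # 600 MB/s
print(f"12G SAS:  {sas3:.0f} MB/s")      # 1200 MB/s
print(f"ratio:    {sas3 / sata3:.1f}x")  # 2.0x
```

Since both interfaces share the same encoding, the ceiling simply scales with the line rate, which is why 12G SAS leaves roughly twice the headroom of SATA III before the drive itself becomes the limit.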

Final Words

The value proposition is clear here for the value SAS segment. More performance and being SAS-native at the same, or potentially lower, cost than SATA is a good message. Longer term, this segment will cede ground to NVMe. Because value SAS is not designed for high-availability systems, the segment has a natural limit as NVMe transitions to Gen4 and more NVMe lanes become available. For the next few years, single-port value SAS has a clear value proposition versus putting SATA SSDs on SAS controllers in servers.

I believe there is a lot to recommend having all serial channels “sync” with the single-lane speed of chipsets.

As this article tends to demonstrate, the 12G clock puts SAS far above the 6G ceiling where SATA is stuck.

Let’s not forget that the “S” in SATA stands for “Serial”, whereas NVMe drives use x2 or x4 PCIe links (read: several lanes in parallel, NOT a single serial channel).

As a means of conserving scarce PCIe lanes, future SATA standards, e.g. SATA-IV, should support a variable clock rate, preferably with “auto detection,” in the same way that modern SATA drives are compatible with SATA-I, SATA-II and SATA-III ports on motherboards and add-on controllers.

I realize that there are plenty of industry partners who may laugh at this suggestion; nevertheless, I remain staunch in my belief that the next SATA standard should run at 16 GT/s and use the 128b/130b “jumbo frame” encoding that is also a feature of PCIe 3.0.
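To see what that proposal would deliver, the sketch below computes the per-direction payload bandwidth of a hypothetical SATA link, treating the proposed rate as 16 GT/s with 128b/130b encoding, and compares it with today's SATA III. No such SATA standard exists; the parameters are the proposal above, and the helper name `payload_mb_per_s` is ours.

```python
def payload_mb_per_s(transfer_rate_gt_s: float, data_bits: int, coded_bits: int) -> float:
    """Usable MB/s per direction for one serial lane after line encoding."""
    line_mbit_s = transfer_rate_gt_s * 1000              # 1 GT/s = 1,000 Mbit/s on the wire
    data_mbit_s = line_mbit_s * data_bits / coded_bits   # strip the encoding overhead
    return data_mbit_s / 8                                # 8 data bits per byte


# Hypothetical "SATA-IV": 16 GT/s with 128b/130b encoding (NOT a real standard).
proposed_sata = payload_mb_per_s(16.0, 128, 130)
# Today's SATA III: 6 GT/s with 8b/10b encoding.
sata3 = payload_mb_per_s(6.0, 8, 10)

print(f"proposed: {proposed_sata:.2f} MB/s")        # 1969.23 MB/s
print(f"SATA III: {sata3:.2f} MB/s")                # 600.00 MB/s
print(f"speedup:  {proposed_sata / sata3:.2f}x")    # 3.28x
```

Those link parameters are the same as a single PCIe 4.0 lane, so under these assumptions one such SATA port would match one chipset lane, which is the “sync” idea above.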

Here are a few raw numbers that also emphasize my points above.

We were told that NVMe eliminated a “lot” of protocol overhead.

Well, let’s look at a few empirical numbers to see if that’s true.

(a) the best SATA-III SSDs now READ at ~560 MB/second:

560 / 600 = 93.3% of raw bandwidth (e.g. Samsung 860 Pro 2.5″ 512GB)

(b) the best NVMe M.2 SSDs now READ at ~3,500 MB/second:

3,500 / 3,938.46 = 88.9% of raw bandwidth (i.e. MORE overhead!)

(8,000 Mbps per lane / 8.125 bits per byte × 4 lanes = 3,938.46 MB/second)
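The arithmetic above can be reproduced in a few lines. The line rates and encodings are the standard ones for SATA III (6,000 Mbps, 8b/10b, so 10 line bits per byte) and PCIe 3.0 (8,000 Mbps per lane, 128b/130b, so 8.125 line bits per byte); the read speeds are the figures quoted above, not our own measurements, and `raw_mb_per_s` is a hypothetical helper name. “Raw” here means post-encoding link bandwidth, before any protocol overhead.

```python
def raw_mb_per_s(lane_mbps: float, bits_per_byte: float, lanes: int = 1) -> float:
    """Post-encoding bandwidth: line rate / line bits per byte, times lane count."""
    return lane_mbps / bits_per_byte * lanes


# SATA III: 6,000 Mbps, 8b/10b -> 10 line bits per byte, 1 lane.
sata_raw = raw_mb_per_s(6_000, 10)
# PCIe 3.0 x4: 8,000 Mbps per lane, 128b/130b -> 8.125 line bits per byte, 4 lanes.
nvme_raw = raw_mb_per_s(8_000, 8.125, lanes=4)

# Best-case sequential read figures quoted in the comment.
sata_eff = 560 / sata_raw
nvme_eff = 3_500 / nvme_raw

print(f"SATA III: 560 / {sata_raw:.2f} = {sata_eff:.1%}")   # 93.3%
print(f"NVMe x4: 3500 / {nvme_raw:.2f} = {nvme_eff:.1%}")   # 88.9%
```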

What happens to SATA performance if we switch to the 128b/130b “jumbo frame” and leave all else unchanged?

6,000 megabits per second / 8.125 bits per byte = 738 MB/second

738 / 600 = 1.23, i.e. a 23% increase

12G SAS would benefit in the same way from switching to the 128b/130b “jumbo frame”.
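As a hypothetical what-if, the sketch below re-encodes today's SATA III and 12G SAS line rates with 128b/130b instead of 8b/10b, holding the line rate fixed as the comment does. Neither interface actually uses 128b/130b; the constants and loop are purely illustrative.

```python
# Line bits per data byte for each encoding.
BITS_8B10B = 10.0        # 8b/10b: 10 line bits carry 8 data bits
BITS_128B130B = 8.125    # 128b/130b: 8 * 130 / 128 line bits per data byte

results = {}
for name, line_mbps in (("SATA III", 6_000), ("12G SAS", 12_000)):
    today = line_mbps / BITS_8B10B       # current 8b/10b ceiling
    jumbo = line_mbps / BITS_128B130B    # same line rate, 128b/130b encoding
    gain = jumbo / today - 1
    results[name] = (today, jumbo, gain)
    print(f"{name}: {today:.0f} -> {jumbo:.0f} MB/s (+{gain:.1%})")
# SATA III: 600 -> 738 MB/s (+23.1%)
# 12G SAS: 1200 -> 1477 MB/s (+23.1%)
```

The gain is identical for both because it depends only on the ratio of the two encodings (10 / 8.125 ≈ 1.23), not on the line rate.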

We should drop both SAS and SATA in favour of PCIe 3.0 x4 NVMe. SAS is a half measure for SSDs, particularly in light of the upcoming AMD EPYC “Rome” PCIe 4.0 processors: break down 64 lanes of that PCIe 4.0 bandwidth into PCIe 3.0 and use it for 32 NVMe drives. It seems obvious.
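The 32-drive figure is a bandwidth budget rather than a lane count: lanes do not convert from Gen4 to Gen3 on their own (a Gen3 drive attached directly to a Gen4 port simply runs that link at Gen3 speed), so realizing it assumes something like a PCIe switch with Gen4 uplinks and Gen3 x4 downstream ports. The sketch below checks the arithmetic under that assumption; `pcie_lane_mb_per_s` is a hypothetical helper.

```python
def pcie_lane_mb_per_s(gt_per_s: float) -> float:
    """Per-direction MB/s for one PCIe 3.0/4.0 lane (128b/130b -> 8.125 line bits per byte)."""
    return gt_per_s * 1000 / 8.125


PCIE3_GT_S = 8.0
PCIE4_GT_S = 16.0

host_mb_s = 64 * pcie_lane_mb_per_s(PCIE4_GT_S)   # 64 Gen4 lanes from the CPU
drive_mb_s = 4 * pcie_lane_mb_per_s(PCIE3_GT_S)   # one Gen3 x4 NVMe drive

# Whole drives whose full bandwidth fits in the Gen4 uplink budget
# (assumes a switch fans the Gen4 bandwidth out to Gen3 x4 ports).
drives = int(host_mb_s // drive_mb_s)
print(f"host: {host_mb_s:,.0f} MB/s, per drive: {drive_mb_s:,.0f} MB/s, drives: {drives}")
# host: 126,031 MB/s, per drive: 3,938 MB/s, drives: 32
```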