Today, the Top500 June 2019 edition was released. Twice per year, a new Top500.org list comes out, essentially showing the best publicly discussed Linpack clusters. We take these lists and focus on a specific segment: the new systems. You can revisit our previous edition, published around SC18 last year, at Top500 November 2018 Our New Systems Analysis and What Must Stop. As the title hints, there is a trend in the industry, especially championed by Lenovo, to run Linpack on portions of hyper-scale web hosting clusters and call them supercomputers. Primarily using this technique, Lenovo added another 47 systems, or half of the 94 new systems on the list.

Top500 New System CPU Architecture Trends

In this section, we look at CPU architecture trends among the new systems entering the Top500 and the CPUs they use.

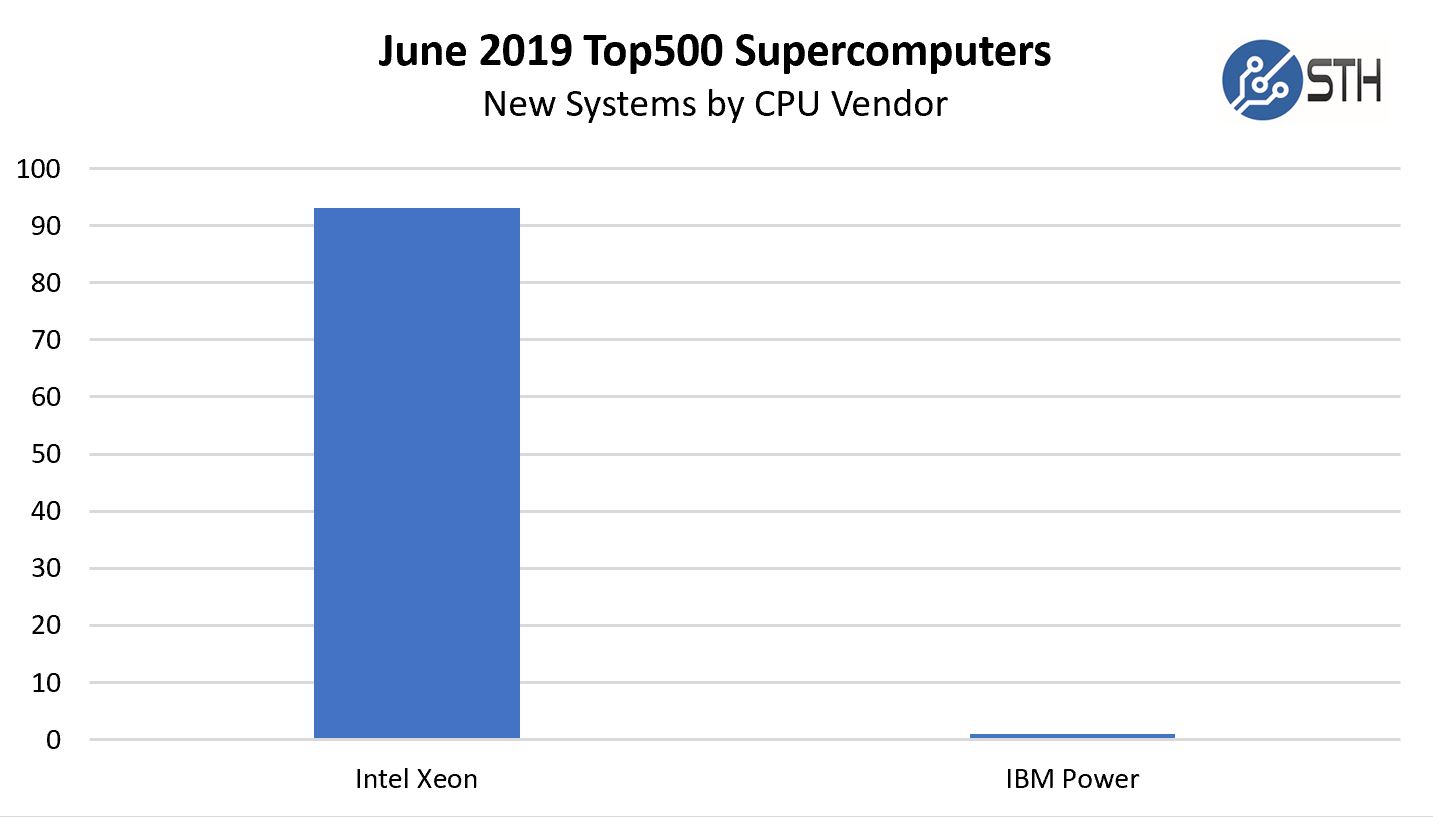

AMD and Arm did not see a new system added at ISC 2019. IBM Power saw one system. Intel dominated the new systems list.

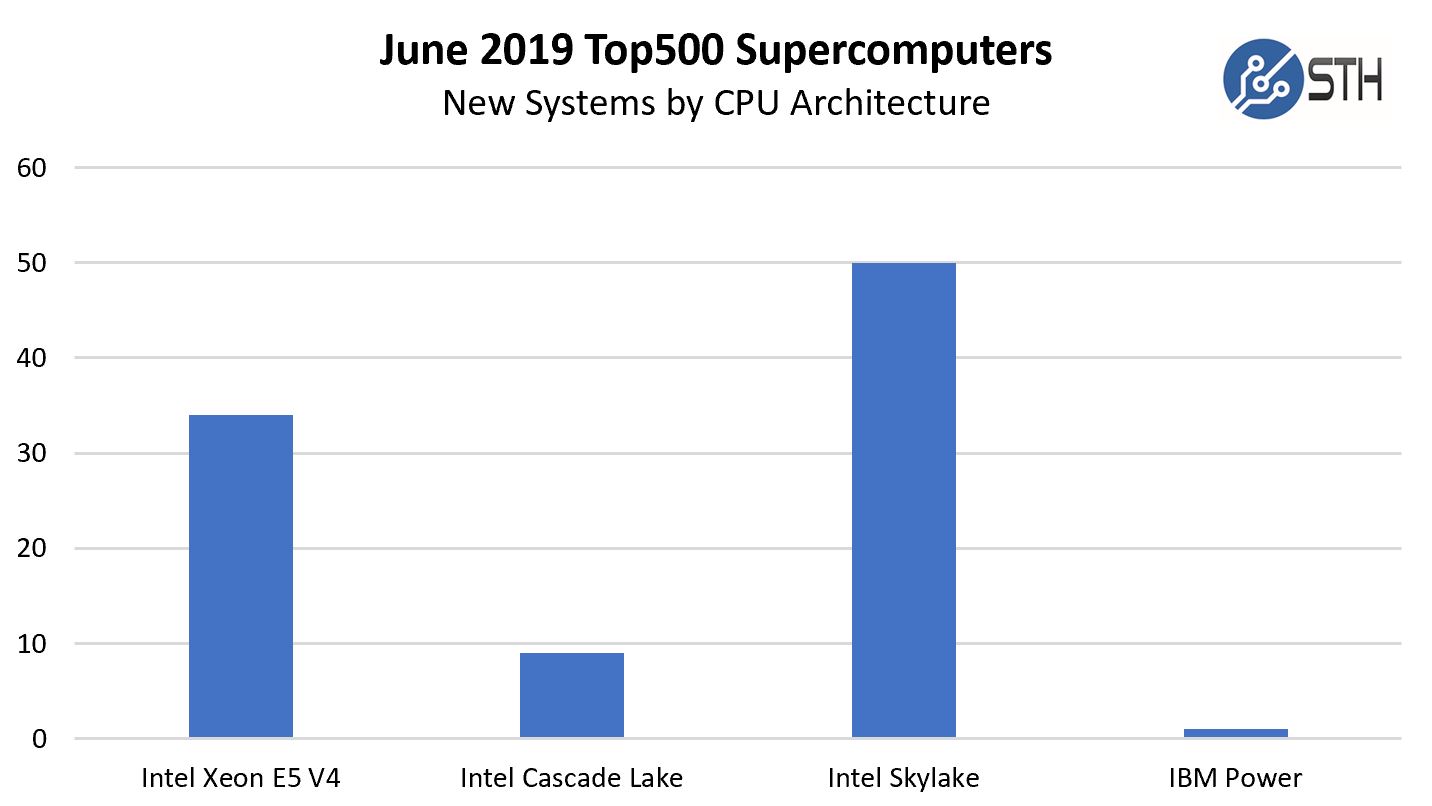

Here is a breakdown based on CPU generation:

As you can see, over a third of the list is still Intel Xeon E5-2600 V4 systems, while about 63% of the list is Intel Xeon Scalable families, with IBM Power taking a single system. Frankly, the fact that a CPU launched in Q1 2016, now one to two generations old, is still deployed in over a third of the new systems is noteworthy in itself. We will discuss that later in the interconnect section, along with how Lenovo is stuffing the list for marketing purposes.

Intel Xeon Scalable (Skylake-SP) launched in July 2017, about two years ago. You can see STH’s coverage at Intel Xeon Scalable Processor Family (Skylake-SP) Launch Coverage Central. We now also have the newer 2nd Gen Intel Xeon Scalable family (Cascade Lake), which launched along with Cascade Lake-AP, the Platinum 9200 series targeted at this segment.

From the AMD side, we know that AMD EPYC has a big contract for the 1.5 Exaflop Frontier Supercomputer, but we are still in the AMD EPYC “Naples” generation, with “Rome” coming next quarter. While we saw a single Hygon Dhyana system in November 2018, no new system based on that AMD EPYC-derived chip appeared on this list.

The European collective is basing its exascale project on the Arm architecture. Arm is currently a European company (albeit owned by a Japanese company) based in a country trying to leave the European Union. Japan is pushing Arm processors for its exascale system designs as well.

This may be the last list we see with this level of CPU vendor homogeneity.

CPU Cores Per Socket

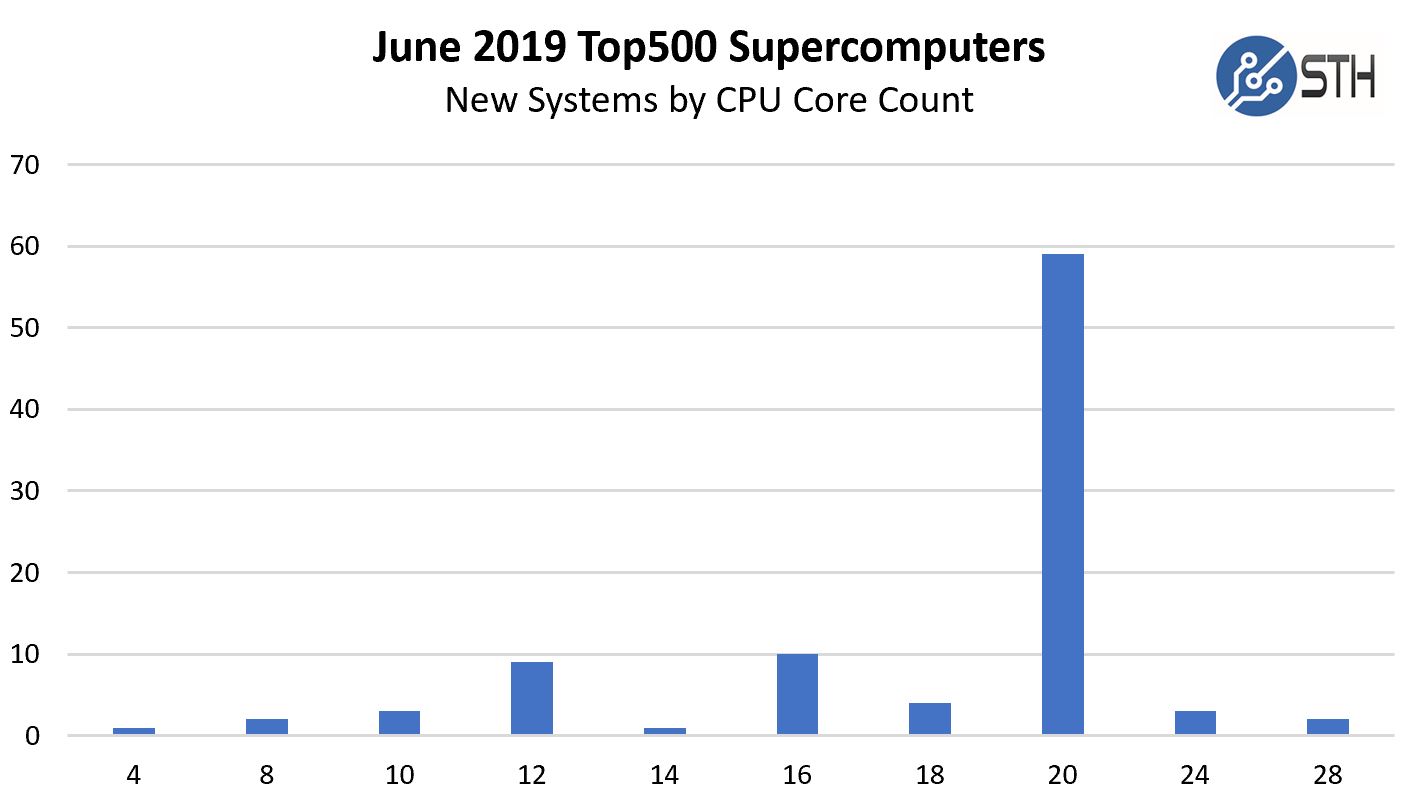

Here is an intriguing chart, looking at the new systems and the number of cores they have per socket.

20-core CPUs were also common on the November 2018 list. It seems this is the current sweet spot for price/performance in the segment.

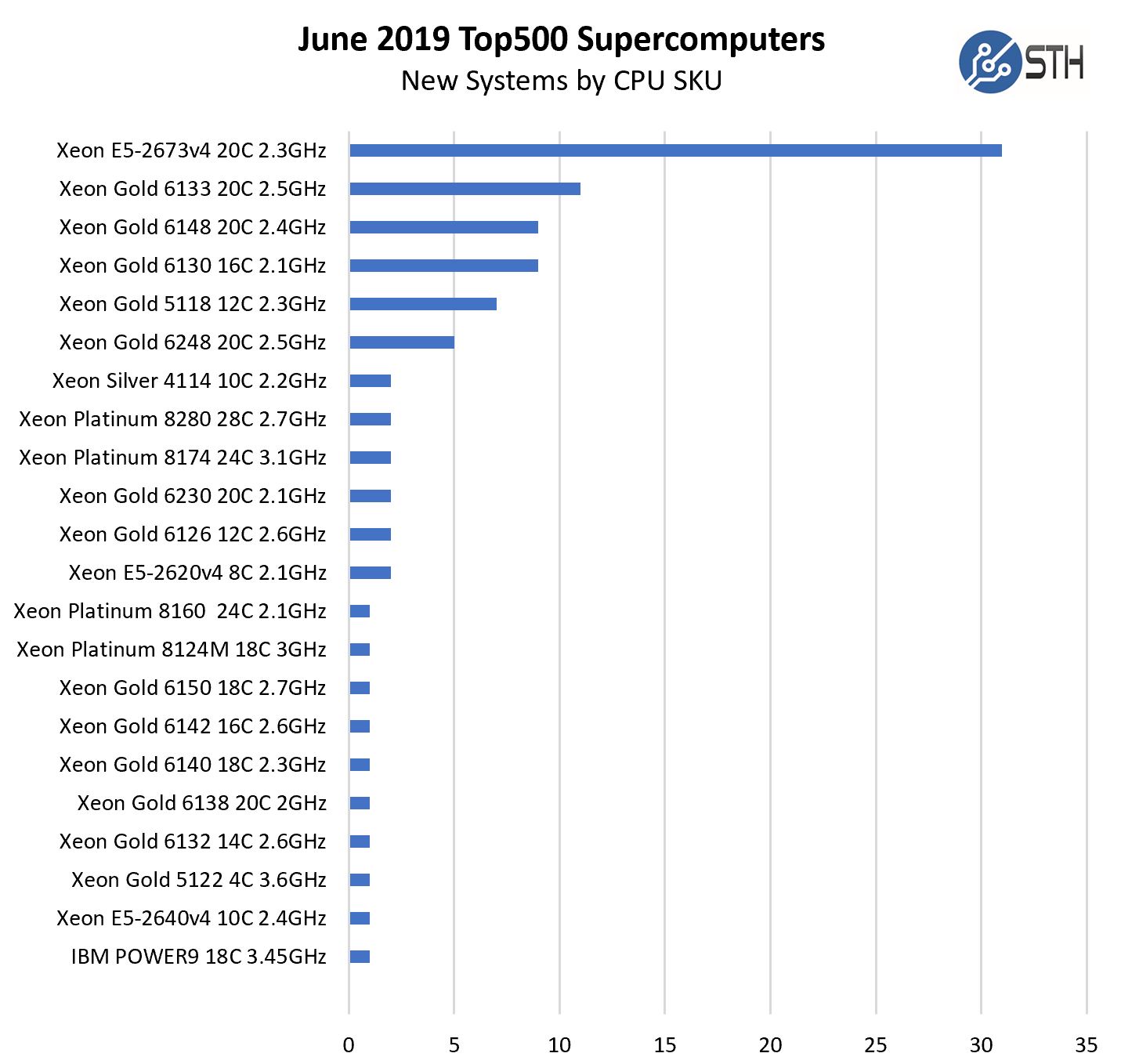

If you want to see the list of CPUs, here are the new systems broken down by the CPU they use:

You may be wondering why the top CPU is a 2016-era Intel Xeon E5-2673 V4. This is a figure driven by Lenovo’s marketing campaign to run Linpack on almost anything.

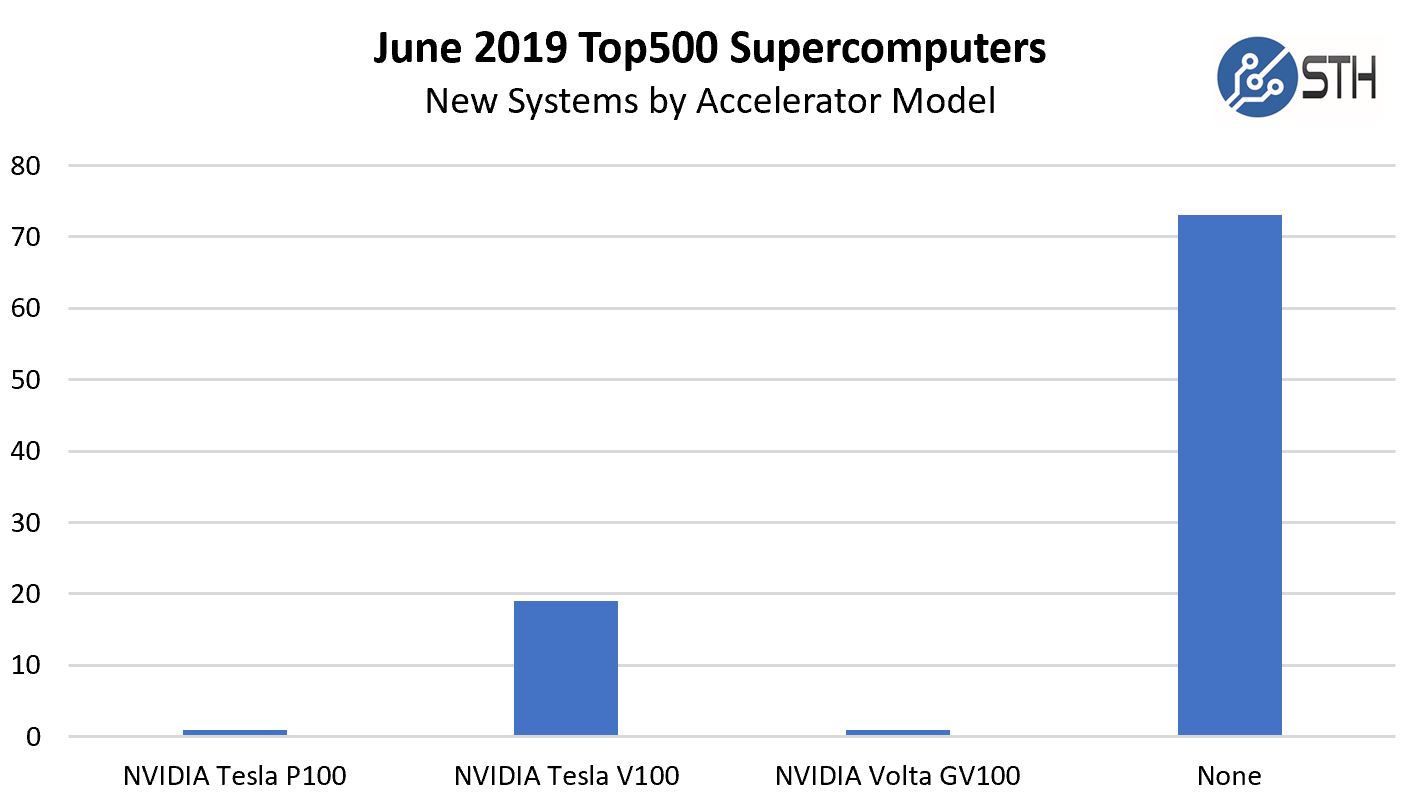

Accelerators or Just NVIDIA?

Unlike in the November 2018 list, NVIDIA is the only accelerator vendor for the new systems. Here is a breakdown:

That percentage is also skewed by benchmarking web hosting systems; however, NVIDIA owns the accelerated new systems on this list.

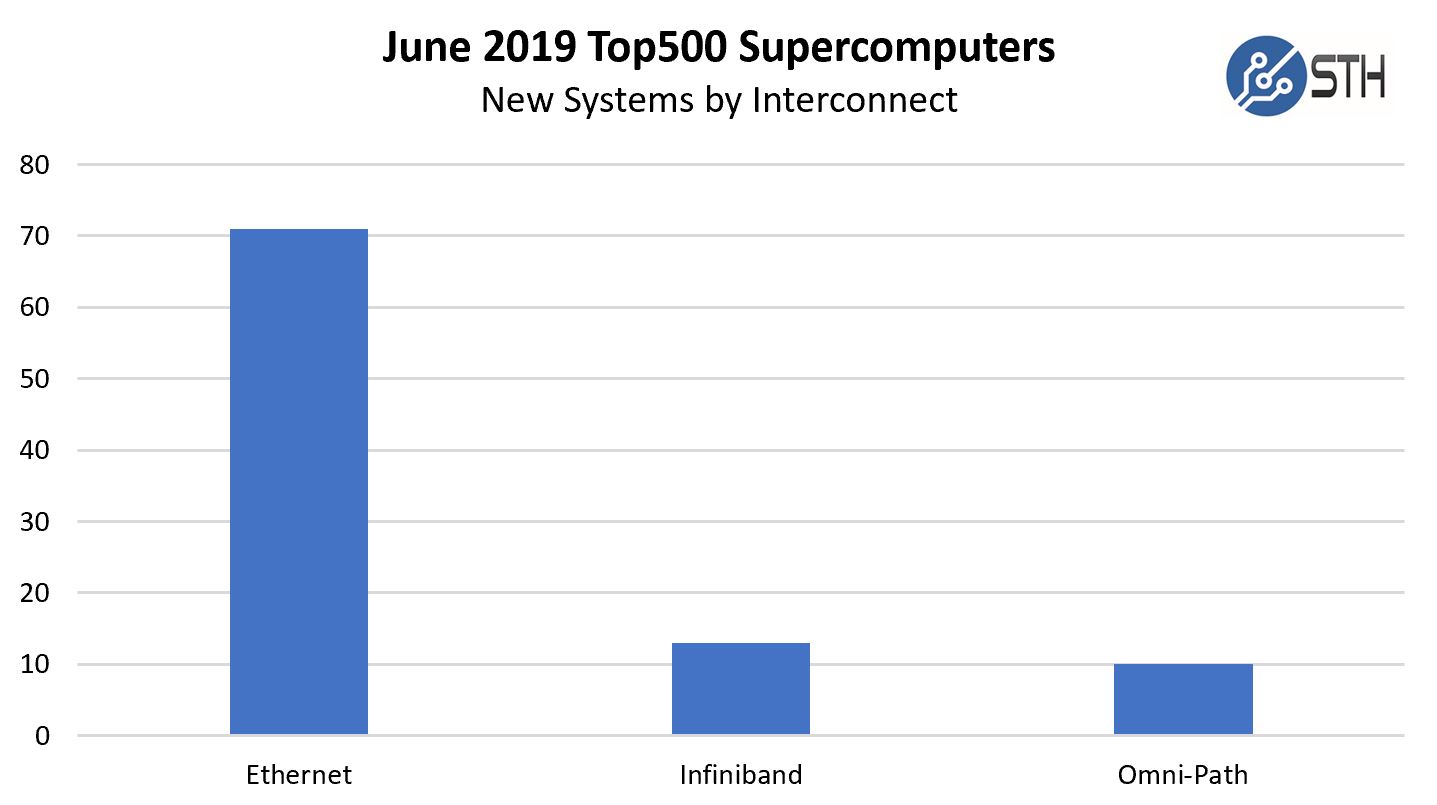

Fabric and Networking Trends

One may think that custom interconnects, InfiniBand, and Omni-Path are the top choices for the Top500 list’s new systems. Instead, we see 71 of the 94 new systems, over 75%, using Ethernet. This is up from around 70% in November 2018.

Putting Ethernet aside for a moment, Intel Omni-Path saw 10 new systems on the list. We recently showed Inside a Supermicro Intel Omni-Path 48x 100Gbps Switch. Thirteen of the new systems used InfiniBand. Still, we see no 200Gbps OPA generation on the list, as Intel has decided to mothball the technology.
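As a quick sanity check on the interconnect numbers, the sketch below computes each fabric’s share using only the counts quoted in this section (71 Ethernet, 13 InfiniBand, 10 Omni-Path, out of 94 new systems). The names `NEW_SYSTEMS`, `INTERCONNECTS`, and `interconnect_shares` are hypothetical, chosen for illustration.

```python
# Interconnect counts for the 94 new systems, as quoted in the text.
NEW_SYSTEMS = 94
INTERCONNECTS = {"Ethernet": 71, "InfiniBand": 13, "Omni-Path": 10}


def interconnect_shares(counts, total):
    """Return each interconnect's share of `total` as a percentage."""
    return {name: 100.0 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    # Systems not covered by the three fabrics above (custom, other).
    unaccounted = NEW_SYSTEMS - sum(INTERCONNECTS.values())
    for name, pct in interconnect_shares(INTERCONNECTS, NEW_SYSTEMS).items():
        print(f"{name:<11} {pct:5.1f}%")
    print(f"Other/custom: {unaccounted}")
```

Ethernet works out to roughly 75.5%, InfiniBand to 13.8%, and Omni-Path to 10.6%. Because the three counts sum to 94, they also imply, if they are complete, that none of the new systems used a custom interconnect.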

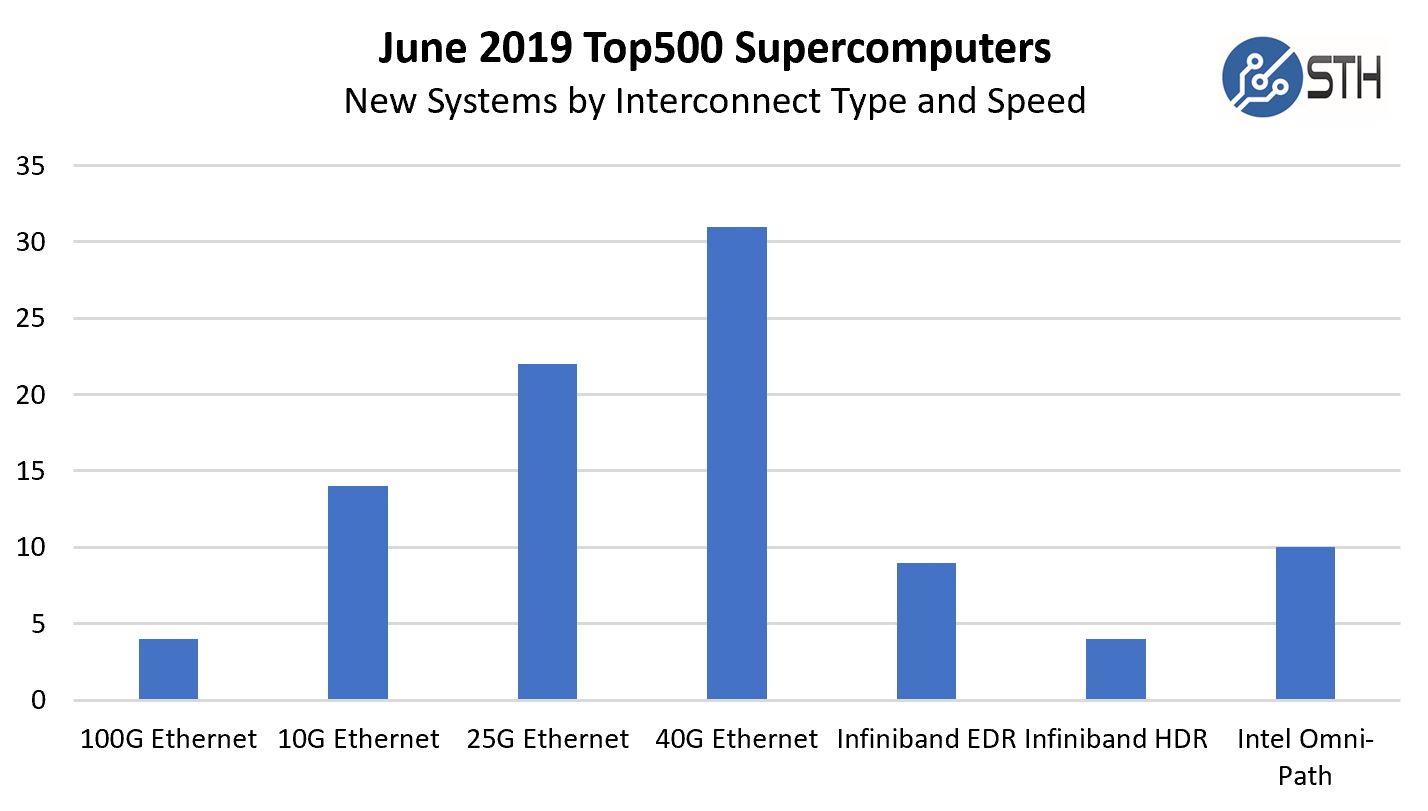

Drilling down, here is what the breakdown looks like.

100GbE is interesting. There is a lot of work on using Ethernet infrastructure at 100GbE and beyond for HPC applications, as with Cray’s Shasta and its Slingshot interconnect. Taking InfiniBand, Omni-Path, and 100GbE away, we see a number of 10GbE, 25GbE, and 40GbE interconnects.

A few notes, starting with the 25GbE installations. The AWS entry is an EC2 C5 cluster in the us-east-1a availability zone with Intel Xeon Platinum 8124M CPUs. This perhaps makes sense, given someone may want an on-demand EC2 cluster to crunch numbers.

The two Inspur systems on the list are different from the Lenovo and Sugon systems. While these are 25GbE systems, they also have NVIDIA Tesla V100 GPUs. These seem to be HPC systems at service providers that run Ethernet and use 25GbE for lower latency. If you subscribe to the idea that current clusters can be composable and handle both AI and HPC duties, then this makes sense.

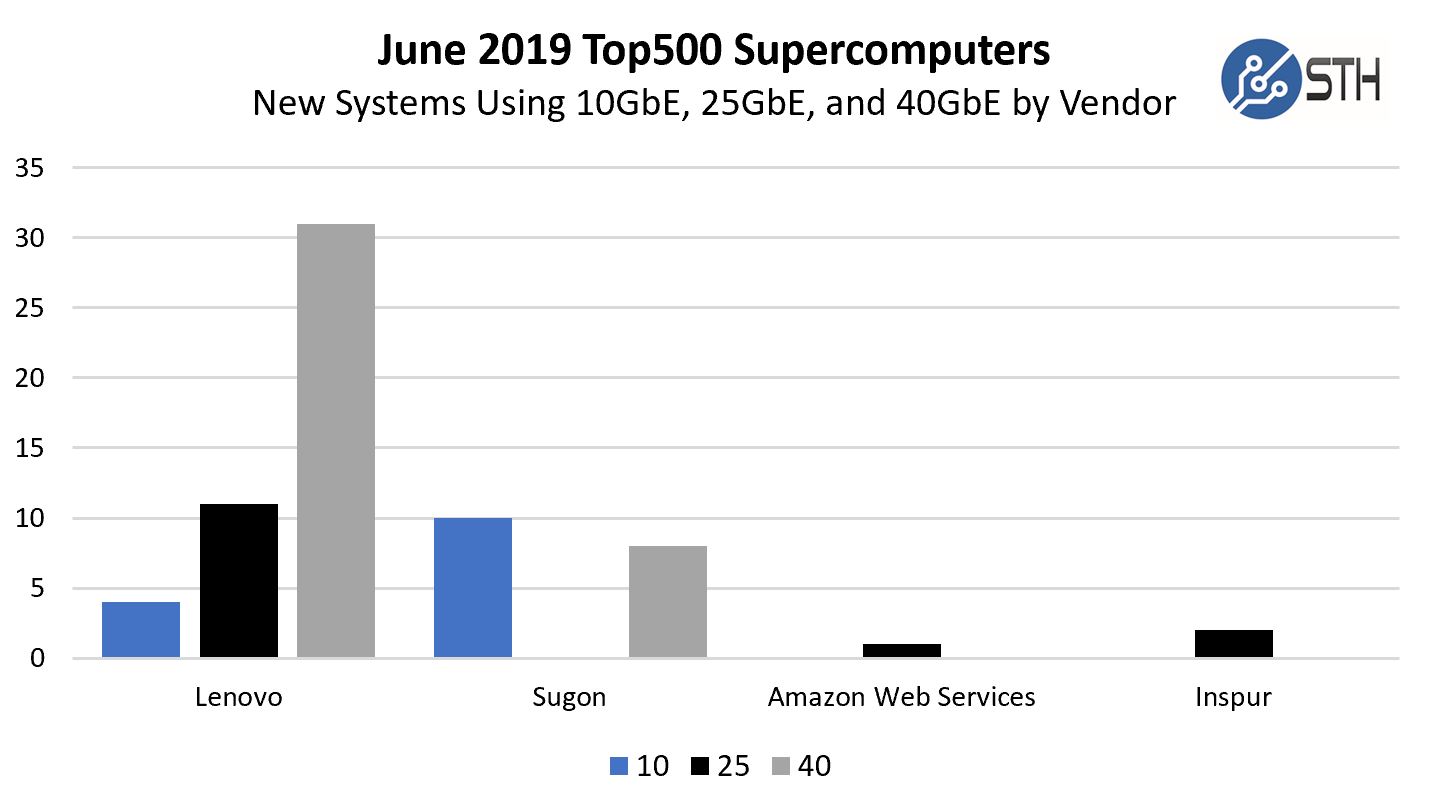

Then we get to Lenovo and Sugon. Most of these systems are not accelerated platforms, save for three 10GbE systems using Tesla P100/V100 GPUs. These three are interesting because they pair expensive GPUs and Xeon Gold 6148/6138 CPUs with lower-end commodity 10GbE networking.

The remainder of the Lenovo and Sugon systems look more like web hosting platforms. Consider this: all 31 of the 40GbE systems are made by Lenovo and use Intel Xeon E5-2673 v4 CPUs from 2016.

Let us just call this what it is: list stuffing. Lenovo engages in a systematic exercise to garner headlines by running Linpack on systems that are meant for web hosting. The Top500 ranking is determined by a benchmark that runs acceptably on systems that do not necessarily run HPC, or even AI, workloads well. This is a definition problem. However, consider this: every potential customer that Lenovo pitches for traditional HPC systems knows that this is a systematic business practice, not just accepted but encouraged by the company.

Final Words

The Top500 list saw a sharp contraction in the number of new systems being added: 153 new systems in November 2018 versus 94 on this list. Those numbers were buoyed by certain companies adding non-HPC systems to the list.
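To put “sharp contraction” in numbers, here is a small sketch of the percentage change between the two new-system counts quoted above (153 and 94). The `percent_change` helper and the constant names are hypothetical, for illustration only.

```python
def percent_change(old, new):
    """Relative change from `old` to `new`, in percent (negative = decline)."""
    if old == 0:
        raise ValueError("old count must be non-zero")
    return (new - old) / old * 100.0


# New-system counts quoted in the text.
NOV_2018_NEW = 153
JUN_2019_NEW = 94

if __name__ == "__main__":
    drop = NOV_2018_NEW - JUN_2019_NEW
    pct = percent_change(NOV_2018_NEW, JUN_2019_NEW)
    print(f"{drop} fewer new systems ({pct:.1f}%)")
```

That is 59 fewer new systems, a decline of roughly 38.6% from one list to the next.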

Frankly, this is devaluing the Top500. Undoubtedly, it is a great marketing tool and one we are trained to look at. The top systems on this list are pushing boundaries that will lead to scientific advancement. Like most things in life, it is a list with great power, but there is a key way it can be distorted. As is, the Top500 list perhaps should be reframed from the Top500 Supercomputer list to the “Top500 clusters that someone has run Linpack on.”

If the HPC community truly sees this practice as an issue, there are two ways to proceed. First, one can define a new benchmark. Second, one can take this business practice into account when looking at HPC vendors.

Looking beyond this practice, we know the future is bright and big. We know the 1.5 Exaflop Frontier Supercomputer is coming. In the meantime, we wait for the major new announcements from the exascale era to coalesce into actual systems. Hopefully, the November 2019 list will bring larger systems.

As is, the Top500 list perhaps should be reframed from the Top500 Supercomputer list to the “Top500 clusters that someone has run Linpack on.”

Amen, a new list is needed with rules to get these meaningless clusters off.

It would also be nice if they ran Linpack again with mitigations for Spectre/Meltdown/Foreshadow and Zombieland.

Lol great idea. Let’s do mitigations for Foreshadow so nobody can look from one VM into another VM on a machine with Hyper-Threading. Oh wait. These aren’t virtualized and usually have HT turned off. Ha! That was a funny one, Misha.

Honestly, this analysis was kind of confusing and seemed wrong until I finally understood that you are talking about the difference between the Top500 November 2018 and June 2019 lists.

Honestly, I don’t find it as interesting as the top 10 on the list at the time: 3 POWER9 “boxes”, a lot of Xeon E5 and Skylake, and, most interesting of all, the SW26010, a Chinese many-core CPU ticking along at a modest 1.45GHz.

@Jared Hunt

Spectre/Meltdown/Foreshadow and Zombieland.

I thought this article was about supercomputers; thanks for pointing out that it’s about VMs running on servers.

The inevitable march of technology. Old standards, once high, become easily reachable. Lenovo are being buzzkills, but the compute is being created. Just because it’s not confined to running lab work doesn’t mean it doesn’t exist, right? I would love to see AWS run a small, scalable percentage of their total systems through Linpack just to show how massive they really are…

Micah I think that’s the AWS C5 cluster? What about Google?!?

Misha Engel, I think he’s saying you’re talking about Foreshadow. The mitigation for Foreshadow on Intel is turning off HT so you avoid the case where one VM can get into the space of another VM when they’re both on an HT core. He’s pointing out that in supercomputers, Foreshadow mitigation is therefore irrelevant =D

@Sam Davis.

I was not talking about Foreshadow, I was talking about mitigations against Spectre/Meltdown/Foreshadow and Zombieland.

“If the HPC community truly sees this practice as an issue … define a new benchmark …”

HPCG is the benchmark the community defined, isn’t it?

Top500.org now publishes three lists:

– top500 (linpack),

– HPCG (conjugate gradient),

– green500 (linpack energy efficiency).

HPCG is a complementary benchmark that puts more stress on inter-node communications.

http://hpcg-benchmark.org/

(One might complain that they haven’t collected 100 results yet. Do less competitive systems omit themselves? More press attention could encourage HPC users and buyers to ask for results, and HPC owners to submit results.)
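For readers wondering what separates HPCG from Linpack, here is a minimal, unpreconditioned conjugate gradient sketch in pure Python. It is not the HPCG reference code: HPCG runs a multigrid-preconditioned CG on a 27-point 3D stencil, while this sketch assumes a tiny 1D tridiagonal (Laplacian) matrix, and the helper names (`laplacian_1d_matvec`, `dot`, `conjugate_gradient`) are hypothetical. What it does show is the shape of each iteration: one sparse matrix-vector product, two dot products, and a few vector updates, all of which do little arithmetic per byte of memory touched. On a cluster, every dot product also becomes a global reduction across nodes. Linpack, by contrast, factors a dense matrix and spends most of its time in compute-bound, matrix-multiply-like updates.

```python
import math


def laplacian_1d_matvec(x):
    """y = A x for the n-by-n tridiagonal matrix with 2 on the diagonal and
    -1 on the off-diagonals (symmetric positive definite). Applied as a
    stencil instead of storing the matrix, similar to sparse benchmark kernels."""
    n = len(x)
    y = [0.0] * n
    for i in range(n):
        s = 2.0 * x[i]
        if i > 0:
            s -= x[i - 1]
        if i < n - 1:
            s -= x[i + 1]
        y[i] = s
    return y


def dot(a, b):
    # On a distributed system, each dot product like this needs a global reduction.
    return sum(ai * bi for ai, bi in zip(a, b))


def conjugate_gradient(b, matvec=laplacian_1d_matvec, tol=1e-10, max_iter=None):
    """Unpreconditioned CG for A x = b with A symmetric positive definite.
    Returns (x, iterations). Stops once ||r|| <= tol * ||b||."""
    n = len(b)
    if max_iter is None:
        max_iter = 10 * n
    x = [0.0] * n
    r = list(b)            # residual r = b - A x, with initial guess x = 0
    p = list(r)            # first search direction
    rs_old = dot(r, r)
    threshold = tol * math.sqrt(dot(b, b))
    for k in range(max_iter):
        if math.sqrt(rs_old) <= threshold:
            return x, k
        ap = matvec(p)                       # one sparse mat-vec per iteration
        alpha = rs_old / dot(p, ap)          # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]  # next A-conjugate direction
        rs_old = rs_new
    return x, max_iter


if __name__ == "__main__":
    b = [1.0] * 50
    x, iters = conjugate_gradient(b)
    print(f"converged in {iters} iterations, x[0] = {x[0]:.6f}")
```

The point of the sketch is the ratio of memory traffic to arithmetic, not speed: a system tuned only to post a big dense Linpack number can look far less impressive on this kind of kernel.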