At SC24, the Microsoft Azure HPC team had its newest instance hardware for the HBv5 on display. The newly announced instance type uses the chip formerly known as the AMD MI300C, which combines Zen 4 cores with HBM, making something much larger than the Intel Xeon MAX 9480.

This is the Microsoft Azure HBv5 and AMD MI300C

Here is the AMD EPYC chip.

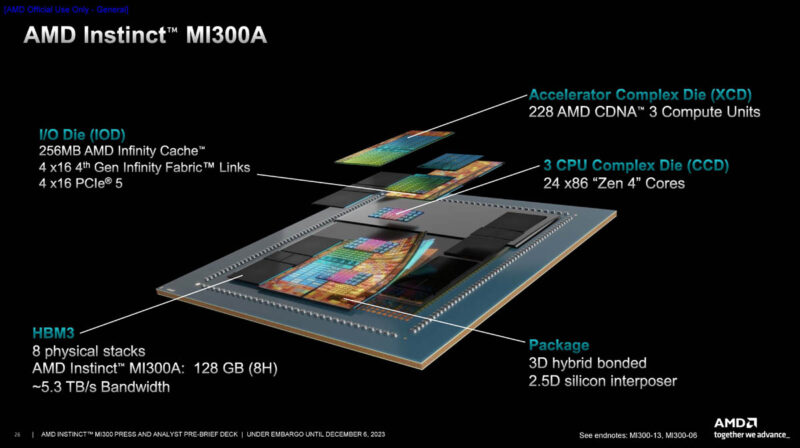

To understand what is going on here with the MI300C, it is worth looking at the AMD Instinct MI300A. With the MI300A, the XCD, or GPU accelerator die, at one of the four sites was replaced by 24 cores' worth of Zen 4 CCDs. With the MI300C, imagine if instead all four sites had 24 Zen 4 cores. Many stories came out yesterday calling this an 88-core part. It is actually a 96-core part, with eight cores reserved for overhead in Azure. HBv5 is a virtualized instance, so it is common for some cores to be reserved. Aside from exposing 88 of the 96 cores in the top-end VM, Azure also turns off SMT to help its clients achieve maximum HPC performance.

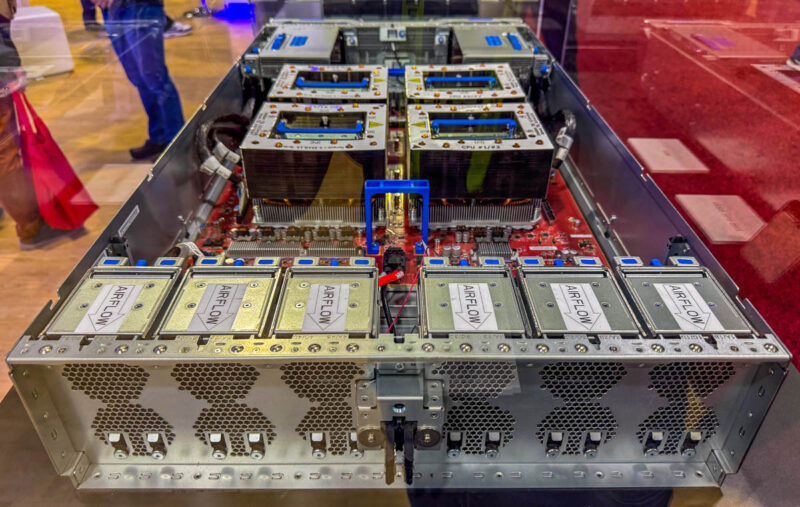

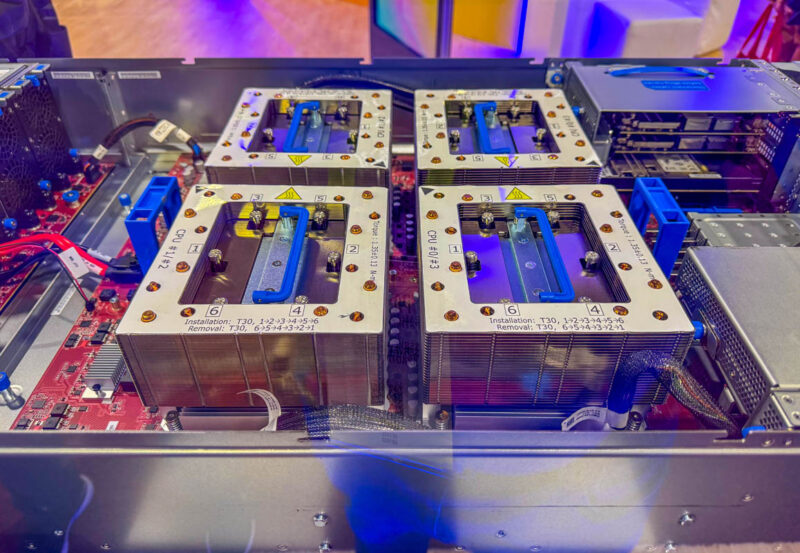

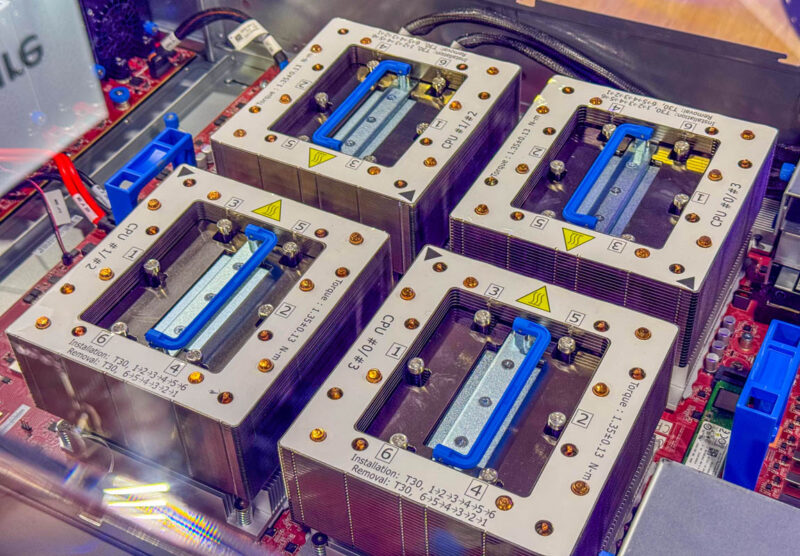

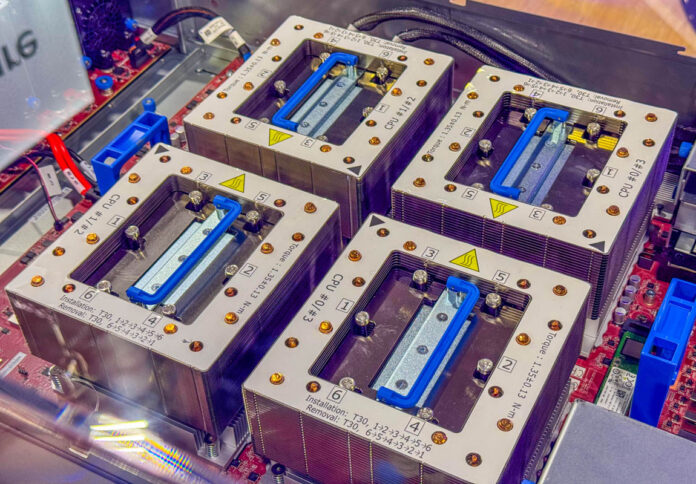

In terms of the actual box, we can see the fans and power bus bar tap at the rear of the chassis, but then some massive heatsinks in the middle.

In pictures, these look less impressive, but in person they are massive heatsinks. Microsoft is using a 4-socket design, very similar to how one might see an AMD MI300A server.

Around these four accelerators we can see an M.2 SSD as well as various NICs in the system. Notably, we do not see DIMM slots.

Each of the MI300C accelerators has a 200Gbps NVIDIA Quantum-X NDR InfiniBand link. We can also see a 2nd Gen Azure Boost NIC for storage under the right InfiniBand cards and then the management card below those. In the center we have what appears to be eight E1.S storage slots for the 14TB of local storage.
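To put the networking in perspective, here is a quick back-of-the-envelope sketch of the aggregate InfiniBand bandwidth for the box. It assumes exactly one 200Gbps link per socket across the four sockets, and it uses raw line rate, ignoring encoding and protocol overhead. The variable names are only illustrative.

```python
# Rough aggregate InfiniBand bandwidth for the 4-socket node.
# Assumptions: one 200Gbps link per socket, raw line rate only
# (no encoding or protocol overhead accounted for).
SOCKETS = 4
GBPS_PER_LINK = 200

aggregate_gbps = SOCKETS * GBPS_PER_LINK      # gigabits per second
aggregate_gbytes_per_s = aggregate_gbps / 8   # gigabytes per second

print(f"Aggregate: {aggregate_gbps} Gbps (~{aggregate_gbytes_per_s:.0f} GB/s raw)")
```

Delivered throughput will be lower than this raw figure, so treat it as an upper bound.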

Running in VMs, Microsoft gives customers access to up to 352 of the 384 cores and up to 400-450GB of the HBM3 memory. Microsoft also says that the Infinity Fabric links here have twice the bandwidth compared to its previous generations.
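The core counts line up with the per-socket figures: four sockets at 96 cores each, with 88 per socket exposed to the VM. A minimal sketch of that arithmetic is below. The helper name is hypothetical, and the even per-socket split of the 400-450GB HBM3 figure is an assumption for illustration, not something Microsoft has stated.

```python
# Back-of-the-envelope check of the HBv5 node figures quoted in the article.
SOCKETS = 4
PHYSICAL_CORES_PER_SOCKET = 96
VM_CORES_PER_SOCKET = 88


def node_core_summary(sockets, physical_per_socket, vm_per_socket):
    """Return total, VM-visible, and reserved core counts for the node."""
    physical = sockets * physical_per_socket
    exposed = sockets * vm_per_socket
    return {
        "physical": physical,
        "exposed": exposed,
        "reserved": physical - exposed,
        "exposed_fraction": exposed / physical,
    }


summary = node_core_summary(SOCKETS, PHYSICAL_CORES_PER_SOCKET, VM_CORES_PER_SOCKET)
print(summary)

# Assumption: the 400-450GB of VM-visible HBM3 is spread evenly over the sockets.
hbm_per_socket_gb = (400 / SOCKETS, 450 / SOCKETS)
print(f"HBM3 per socket: {hbm_per_socket_gb[0]:.1f} to {hbm_per_socket_gb[1]:.1f} GB")
```

Under these numbers, 32 cores across the node are held back for the host, which is about 8% of the total.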

Final Words

I do not think the MI300C is going to be a mass-market part. Instead, this is one that AMD made specifically for Microsoft, and that really shows the power of hyperscale HPC. Other vendors have various configurations of the same chips for HPC clusters. Microsoft has a very bespoke design for its customers that we did not see elsewhere. For AMD, it is easier to qualify a chip for one or a handful of large customer systems than it is to make a mass-market part and work with many OEMs. It also shows the power of AMD’s chiplet architecture, scaling between the all-GPU MI300X/MI325X, the all-CPU MI300C, and the mixed MI300A APU powering El Capitan and other systems. It is always cool to see systems like these on the floor. Thank you to the Microsoft Azure team for bringing cool boxes.

This is very neat. AMD should make a version available to the mass market. Even as a halo product for geeks with too much money. Sometimes you just gotta take the crown and flaunt it.