At OCP Summit 2024, we saw the new Meta FBNIC. Meta (and Facebook) really led the industry towards multi-host adapters, and this is the newest one. Instead of one high-speed NIC per system, this is one NIC for up to four systems with up to 400Gbps of combined bandwidth.

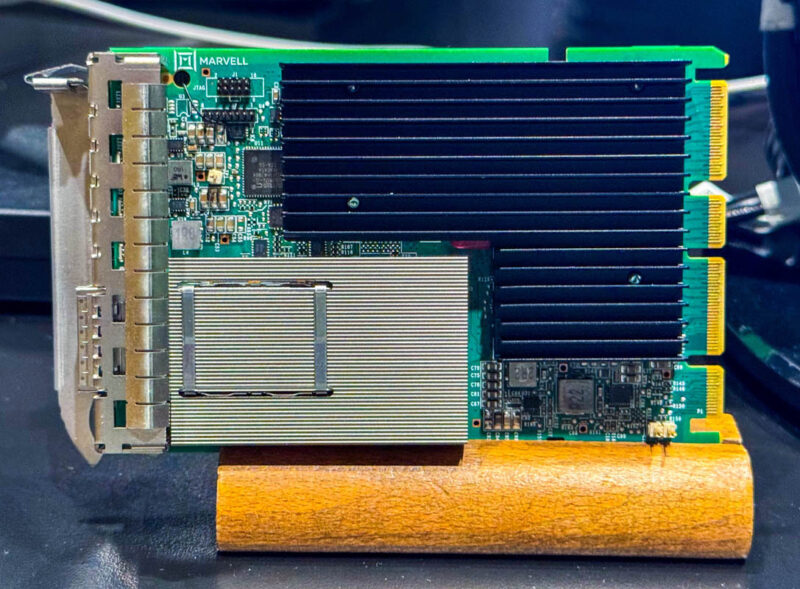

Meta FBNIC 4x 100G Multi-Host Adapter by Marvell

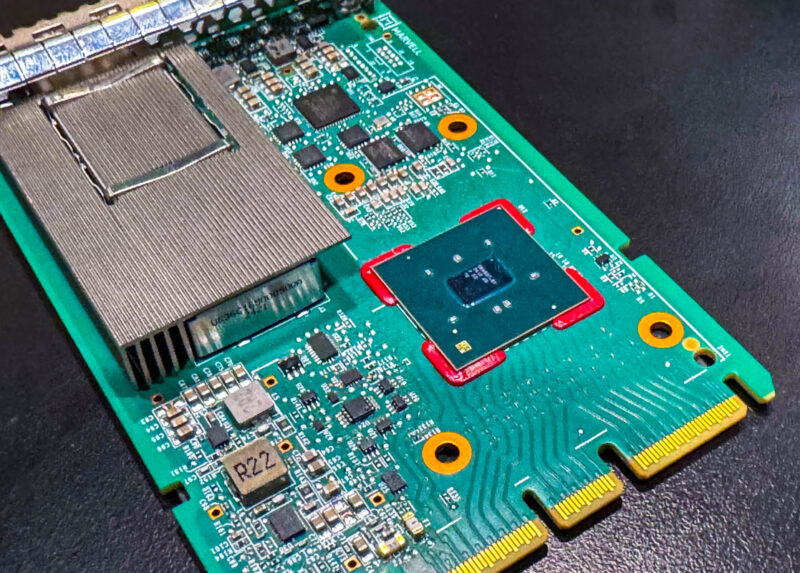

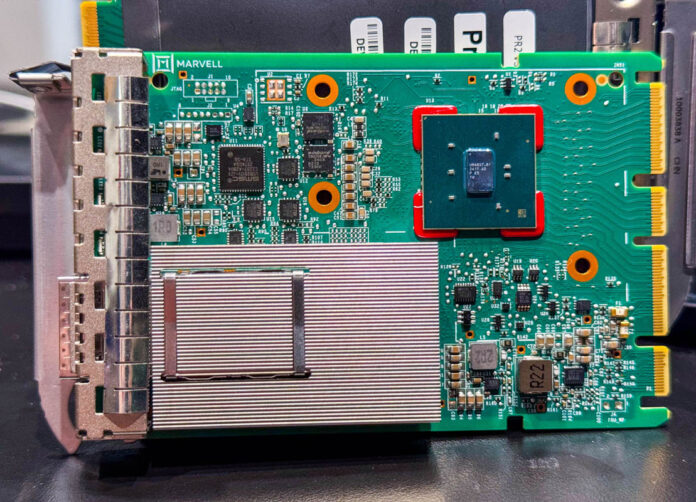

The card is labeled Marvell because it is a co-developed NIC built around Meta’s ASIC.

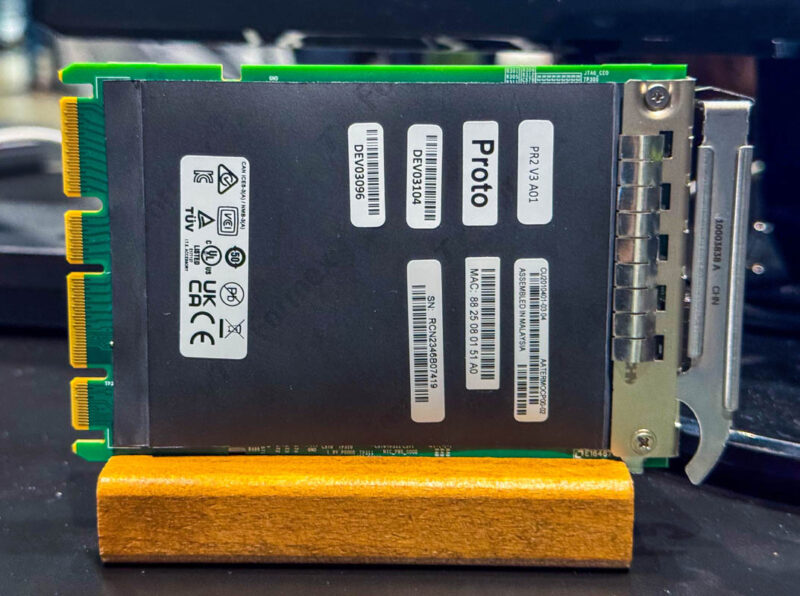

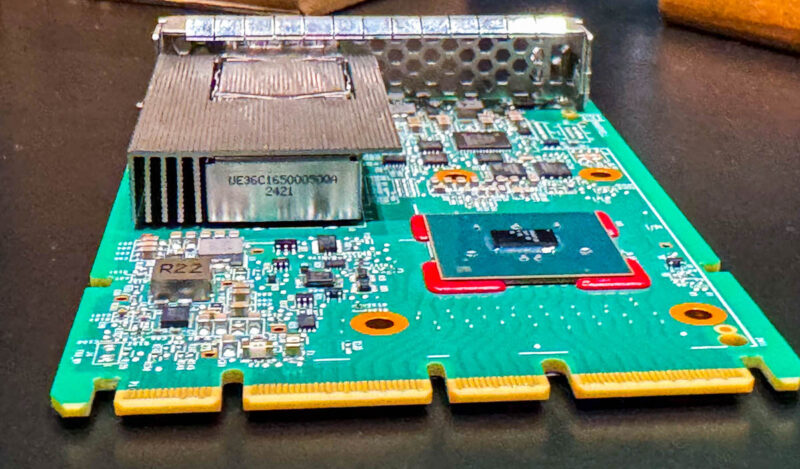

As one might imagine, it is also an OCP NIC 3.0 design. The form factor is an SFF with Ejector Latch, which we have seen many times before. Using that form factor allows the NIC to be removed from the front of the chassis, aiding serviceability.

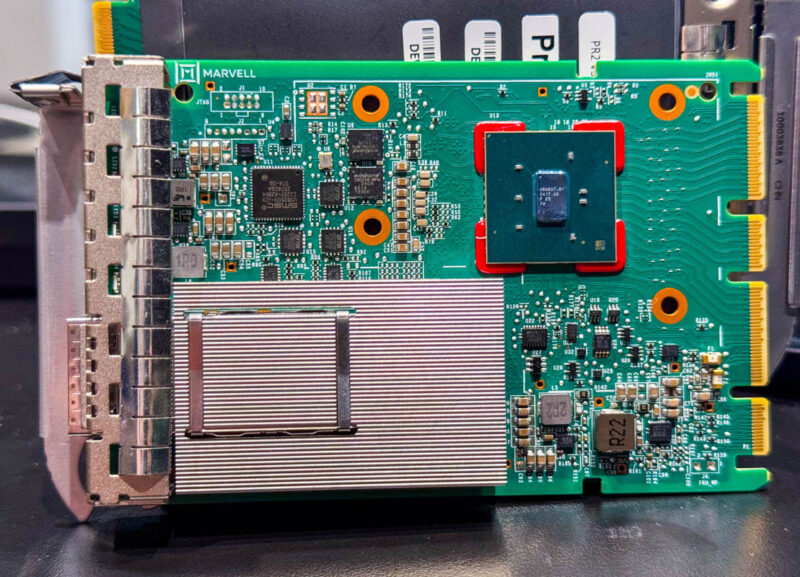

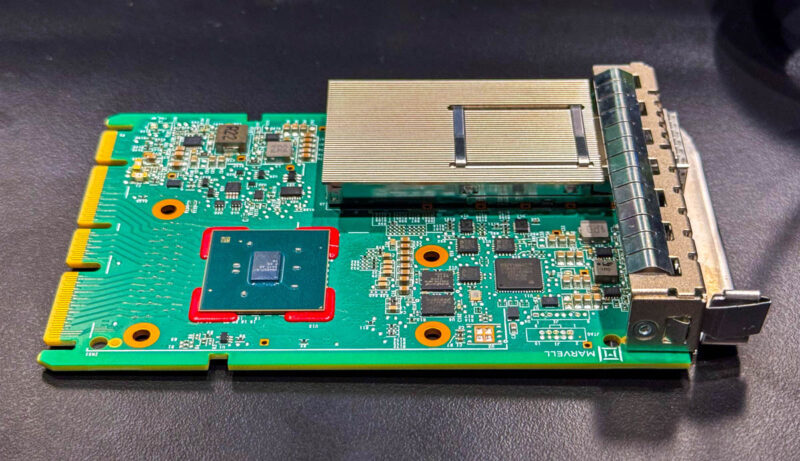

Here is the NIC without the heatsink. The network ASIC is likely smaller than many folks might imagine for one that is pushing 4x 100GbE connections on one end and up to 4x PCIe Gen5 x4 connections on the other.

A large heatsink on the optical cage is there to improve cooling. Bigger heatsinks here usually mean that Meta has more tolerance for airflow and ambient temperatures.

That chip is tiny!

Here is another look at the OCP NIC 3.0 x16 connector.

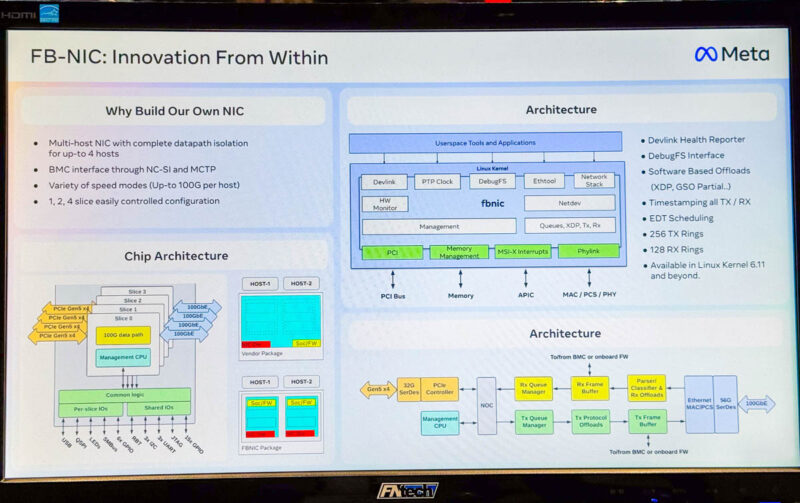

The first design point under “Why Build Our Own NIC” is telling: this is designed to be a multi-host adapter for four hosts. That means Meta needs to tie in not just four PCIe Gen5 x4 connections (each supporting 100G per host) but also connections to the BMCs for each server. It is really cool that Meta shows off the architecture and that the driver is available starting in Linux Kernel 6.11.
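For a rough sense of why a PCIe Gen5 x4 link per host pairs well with a 100G port, here is a back-of-the-envelope sketch. It assumes the standard PCIe Gen5 figures of 32 GT/s per lane with 128b/130b encoding, and it ignores packet-level protocol overhead, so real-world throughput will be somewhat lower. The constant and function names are our own for illustration, not anything from Meta or Marvell.

```python
# Rough link-budget arithmetic for a 4-host adapter (illustrative only).
# Assumptions: PCIe Gen5 signals at 32 GT/s per lane with 128b/130b encoding;
# TLP/DLLP protocol overhead is ignored, so usable throughput is lower.

GEN5_GT_PER_LANE = 32.0          # gigatransfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding

HOSTS = 4
LANES_PER_HOST = 4
ETHERNET_GBPS_PER_HOST = 100


def pcie_gen5_gbps(lanes: int) -> float:
    """Raw per-direction link bandwidth in Gbps for a Gen5 link of `lanes` lanes."""
    return GEN5_GT_PER_LANE * ENCODING_EFFICIENCY * lanes


per_host_gbps = pcie_gen5_gbps(LANES_PER_HOST)
headroom_gbps = per_host_gbps - ETHERNET_GBPS_PER_HOST
total_lanes = HOSTS * LANES_PER_HOST
total_ethernet_gbps = HOSTS * ETHERNET_GBPS_PER_HOST

print(f"PCIe Gen5 x{LANES_PER_HOST} per host: {per_host_gbps:.1f} Gbps raw")
print(f"Headroom over {ETHERNET_GBPS_PER_HOST}G Ethernet: {headroom_gbps:.1f} Gbps")
print(f"Total PCIe lanes across {HOSTS} hosts: {total_lanes}")
print(f"Combined Ethernet bandwidth: {total_ethernet_gbps} Gbps")
```

Under those assumptions, each x4 link has roughly 126 Gbps of raw bandwidth, leaving some margin above 100G, and the four x4 links add up to the 16 lanes of the OCP NIC 3.0 x16 connector.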

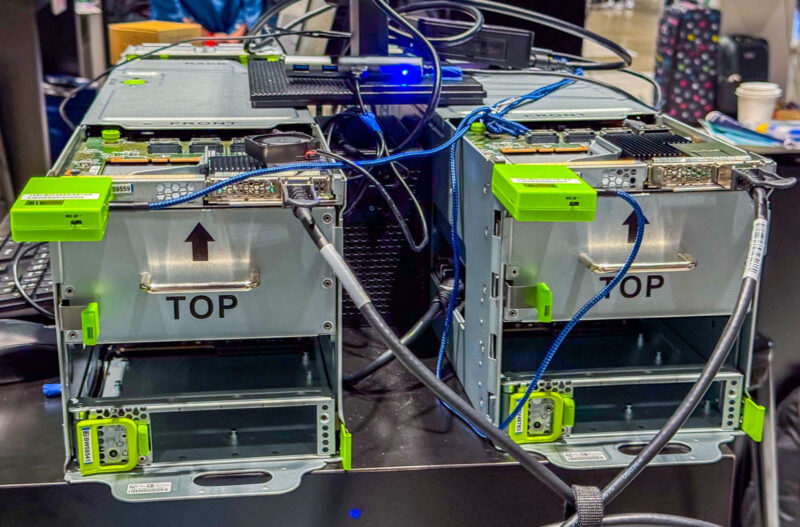

Meta also had a nice demo of the NICs working on the show floor.

You can also tell that they were on expensive show floor power setting up this demo.

Final Words

Marvell, in a big win for them, makes the adapter. Meta said this is the first of its network adapter ASICs. Meta is a big enough consumer of NICs that it can make its own. Years ago, the halls were lined with vendors trying to sell Meta and Microsoft NICs at OCP Summit. This is a significant shift in the industry.

Hopefully folks like seeing some of the hardware that gets deployed at massive scale.

To add a bit of historical perspective on this card’s performance: A quarter century ago I was installing a lot of “QFE” (“Quad Fast Ethernet”) network cards in Sun servers: 4 ports of “Fast” Ethernet, as in 100-Mbit.

Moore’s Law certainly had a profound effect on my IT career.

I’ve read this presentation a few times and I don’t get what new things this NIC brings to the table?

@Carl: This adapter goes the other way around as far as I can tell… You installed quad-ports in single servers and this thing does quad servers with a single NIC.

@PaCM: What this adapter brings is the ability to increase compute density with high-bandwidth NICs while maintaining a lower cable count. This solution uses QSFP-DD connectors to combine up to 4 separate 100G datapaths (1 for each server) in a single NIC that is put into a multi-server chassis.