It is Wednesday, but it feels like it has been a full week already. This week, I gave an in-person sales training on AI and general purpose servers, attended the Marvell Analyst Day, and filmed two videos that should be going live in January 2025. Along the way, a chart from Server Core Counts Going Supernova by Q1 2025 came up a few times, and it might be one of the most important aids to understanding the AI buildout’s impact on the industry.

Why the Chart is Powerful

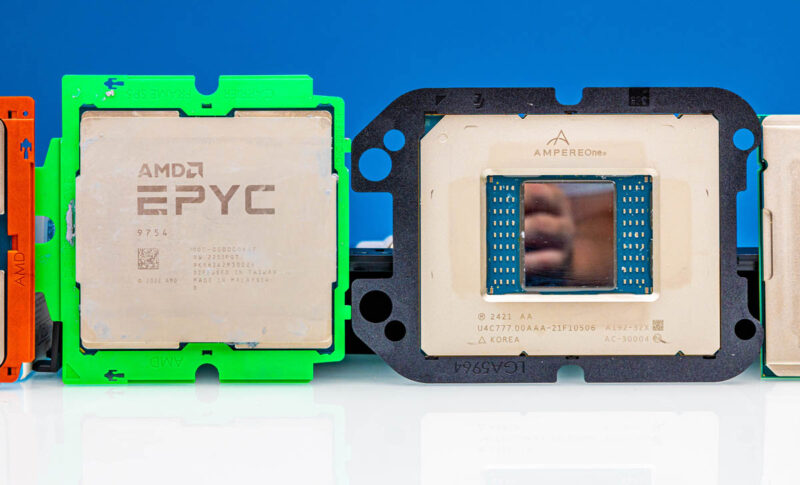

Here is the chart that I used this week, just to level set:

That chart shows the massive growth from 2010 to 2025 in top SKU core counts for AMD and Intel CPUs, as well as Arm CPUs from Ampere and NVIDIA. The AMD Pc line covers the Zen 4c and Zen 5c parts, since those are cache-reduced cores rather than “E-cores”. For Intel, we have the light blue E-core line. We are keeping the prediction that we will see 288 core Sierra Forest-AP parts on there. From what we are hearing, they are available, albeit as special order parts. We have not confirmed whether they will become full retail parts or remain special order, but I would not be surprised if they stayed special order given what I am hearing.

The reason this chart is so powerful is simply what it shows. The growth rate on increasing server CPU core counts is clearly accelerating at a rate that we simply have not seen. In addition to that, the number of vendors participating and the architecture choices are continuing to proliferate.

At the same time, the feedback we keep hearing from folks in the industry is that it is hard to sell general purpose compute servers, but it is very easy to sell AI servers. At Marvell’s analyst day, there was a discussion on hyperscale buildout expenditures increasing by something along the lines of $100B this year. That is a tremendous amount even if it includes non-silicon components. Better put, folks should be wondering why general purpose compute servers are hard to sell when server vendors are making considerably better products (and they are), and there is more competition than there has been in well over a decade.

The key insight from this chart is simple. CPUs are scaling through a mix of performance per clock and the number of cores packed into larger and higher power packages. GPUs and AI accelerators are scaling faster in many ways, especially once one includes the capabilities added through lower precision. Historically large generational gains in CPUs are largely being met with decisions to extend the life of the current compute fleet. Customers are inspired to join the AI race, yet they are more tepid about joining a new CPU and server refresh race.

Final Words

The AI world is focused on large cluster-level gains on an annual basis through software upgrades, new hardware, faster networking, and simply deploying bigger clusters. That seems to be on a path of well over 2x annual gains for the next several years. The networking folks are working on a cadence of doubling capacity every 18 months or so. On the storage side, looking at the primary purpose of an SSD, which is to store data, we saw 30.72TB SSDs last year, 61.44TB SSDs this year, 122.88TB SSDs in Q1 2025, and the 245.76TB generation is set to follow soon after. Even the storage folks are on a 2x growth per year path at this point.
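To put those cadences on the same footing, here is a minimal sketch that converts a doubling period into an implied per-year multiplier and checks the step between the SSD capacities quoted above. The function name `annual_growth_factor` is just for illustration, and the math assumes smooth compounding, which real product launches do not follow.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Per-year multiplier implied by doubling once every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


# SSD capacities quoted in the text, in TB, one generation per entry.
ssd_tb = [30.72, 61.44, 122.88, 245.76]

# Ratio between each generation and the one before it.
ssd_ratios = [later / earlier for earlier, later in zip(ssd_tb, ssd_tb[1:])]

if __name__ == "__main__":
    for months in (12, 18, 24):
        factor = annual_growth_factor(months)
        print(f"doubling every {months} months is about {factor:.2f}x per year")
    print("SSD generation-to-generation ratios:", ssd_ratios)
```

Doubling every 18 months works out to roughly 1.59x per year, so it is a meaningfully slower pace than the 2x per year path, even though both get described as "doubling".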

Perhaps the big question in the data center now is whether anything shy of doubling CPU performance, and perhaps connectivity, every 18 months is going to be enough. AI accelerators, networking, and storage have all aligned on that path already. The fact that we are seeing historical greatness from the CPU vendors during a time of vast data center spending makes one wonder if more radical approaches are needed to redirect more of that spend.

We often hear server CPU vendors say they are listening to their customers’ needs. One has to wonder if the real need is to accelerate at a much higher pace.

I am sure folks will have thoughts on this, and that is the point.

The sad part is the slow rate of increase, even if recently accelerated, in RAM bandwidth and usable size at 1DPC. Hopefully that too can get on a ~24-month doubling trajectory for non-HBM. Or is HBM the de facto main memory going forward, with classic memory as a cache layer before local storage, before remote storage?

I must have missed something. How did you connect CPU cores to AI workloads and AI expenditure?

CPU cores seem really disconnected from GPUs / AI accelerators…

> The growth rate on increasing server CPU core counts is clearly accelerating at a rate that we simply have not seen.

This is pretty unsubstantiated by that plot. At least I would expect a log plot to back such claims up, as from this linear plot one can merely see that growth is superlinear.

Growth rate implies something like a percentage increase in core count each year. As the exponential growth lines indicate, the plot simply seems to show a more or less constant growth rate over the last 15 years.

Maarten, they’ve got the growth rate in the lines. That looks like it’s the trend line feature in Excel. You can see the trend line is increasing much faster in the 2020s.
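One way to settle the question raised in this thread is to fit the slope of log(cores) against year over two separate windows and compare the implied annual growth rates. The sketch below does that on purely synthetic series. These are placeholder numbers generated in the code, not the data behind the chart, and the helper names are hypothetical.

```python
import math


def log_slope(points):
    """Least-squares slope of ln(value) against year for (year, value) pairs."""
    n = len(points)
    xs = [year for year, _ in points]
    ys = [math.log(value) for _, value in points]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def annual_growth(points):
    """Average yearly percentage growth implied by the log-linear fit."""
    return math.exp(log_slope(points)) - 1


def synthetic_series(start_year, start_value, yearly_factors):
    """Build a made-up series by compounding the given yearly factors."""
    points = [(start_year, float(start_value))]
    for offset, factor in enumerate(yearly_factors, start=1):
        points.append((start_year + offset, points[-1][1] * factor))
    return points


# SYNTHETIC placeholder data, not the chart's values.
constant = synthetic_series(2010, 8, [1.25] * 15)                  # steady 25% per year
accelerating = synthetic_series(2010, 8, [1.15] * 8 + [1.45] * 7)  # rate steps up later

if __name__ == "__main__":
    for name, series in (("constant", constant), ("accelerating", accelerating)):
        early, late = series[:8], series[8:]
        print(f"{name}: early {annual_growth(early):.1%}/yr, late {annual_growth(late):.1%}/yr")
```

Both synthetic series look superlinear on a linear axis, but only the second one shows a higher fitted rate in the later window. Running the same comparison on the real core counts would show whether the growth rate itself is rising or roughly constant.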