NVIDIA has a secret weapon in the fight for next-gen AI server dominance. For the higher-density NVIDIA Rubin NVL576 generation, NVIDIA plans to ditch the cable cartridge and switch to a midplane design.

The NVIDIA Rubin NVL576 Kyber Midplane is Huge

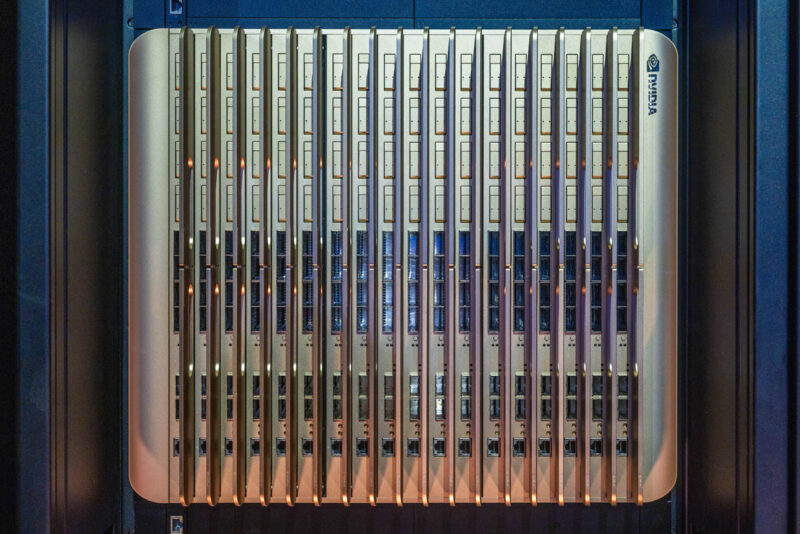

At NVIDIA GTC 2025, we saw the next-gen NVIDIA Rubin NVL576 generation rack, codenamed “Kyber.” If you have seen in our coverage, the Kyber rack moves fans and power supplies (currently) out of the rack to increase the compute density. Here is a shot of the compute blade chassis. You will notice there are 18 compute blades just in this chassis.

The rear has the NVLink switches.

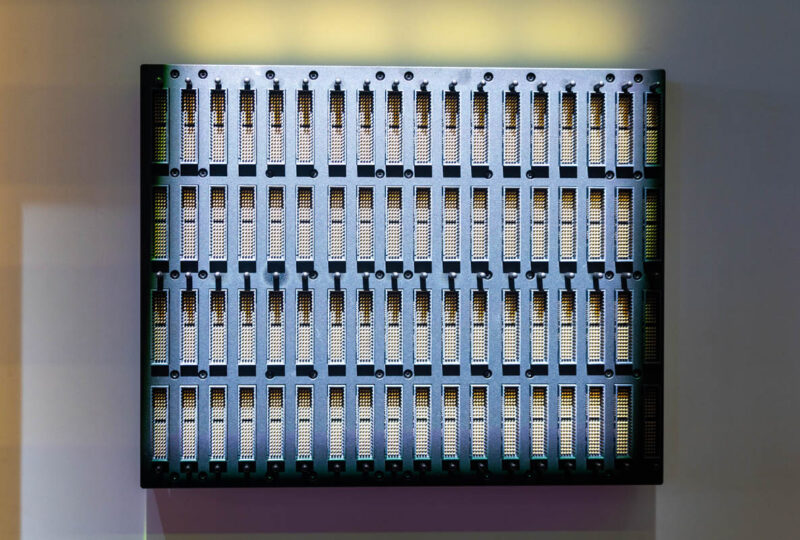

Connecting these internally, NVIDIA showed a new midplane design. There are 18 columns and four rows of connectors.

There are a number of notable items here, but one huge one is that by liquid cooling the entire rack, NVIDIA is able to build this midplane without consideration for airflow. Midplanes are not new, they have been used in equipment like blade servers for many years. Those midplanes usually have to sacrifice density for cutouts for airflow.

The other big impact is that it removes the cable cartridge that connects the NVLink switches and compute blades in the NVL72.

This is a big change to the architecture coming in the next two years.

Final Words

The “so what?” is important here. Increasing the reach of the copper interconnect domain means that NVIDIA saves a lot of power and increases reliability over optics. The new backplane makes it so even more silicon can be connected in the same rack via copper interconnects. Part of even making that work is changing the power and cooling setup. This is the kind of systems design that is going to be required as we get into the next generations of AI clusters.

First thing I thought when I saw that was.. It looks from CPU sockets from afar. So I guess that’s where the market is heading. Everything that was a system becomes an integrated unit.

I have air cooled systems with similar density of boards. The trade off there is that the airflow follows a S style path where density has to be sacrificed above and below the compute blades to account for this. I’ve seen a few clever techniques to counter this or use the volume of space more efficiently like putting the power supplies in line with the front air intake but behind the midplane board. Similarly the near the top where the primary airflow moves toward exhaust as the front panel IO board/service boards. They’re out there but not very common as that type of density need has traditionally been rare.

There doesn’t seem to be any midplane to midplane board connections. For this design that doesn’t surprise me given its already massive size. However, I have seen other systems do this to further increase overall system density and reduce cost. The trade off is generally there is an extra communication hop between top and bottom midplanes or a potential bandwidth bottleneck there. Cooling also takes up space between the mid planes as well, reducing overall density slightly.

One note worthy thing would be if there are any signal repeaters behind the alignment shroud or on the rear of the PCB. That is simply a lot of IO that needs to move decently long for copper. I know of the compute modules themselves there is a signal booster right before the backplane connector but I would fathom there still needs to be some on the midplane.

There’s a point where floor space in the datacenter isn’t the commodity we are trying to save though.

Power density is likely the biggest constraint once you get aircooling out of the way (facility water cooling).

So many datacenters will barely go above 40kw per rack, and these guys are shooting for custom build 120kw+. That custom design work drives costs too.

It’s cool to see it, but I wonder at the value proposition is of pushing density this far. Probably required for the networking latencies or something, but costs of fiber v copper compared to power per rack; forcing a redesign of the fundamental power delivery in the datacenter might not be the win it’s being touted as. Just a thought. The pictures of the xAI datacenter show an oddly haphazard power delivery system to the racks. At some physical floor space isn’t the commodity people think it is.

This, while very cool, is not an exactly new thing. Mainframes and minicomputers have had this sort of thing for decades. Midplane and backplane boards are not a new thing by any means. This is just Nvidia’s own take and adoption of a proven design language that was perfected by IBM, Control Data and DEC over many years of mainframe and minicomputer system design practice.

@MDF, this isn’t about saving floorspace, it’s about increasing the number of GPUs in a single NVLink domain. Physical closeness makes the problem easier.

So how do the NVLink switches connect to the GPU sleds? More connectors on the opposite side.for the switches?