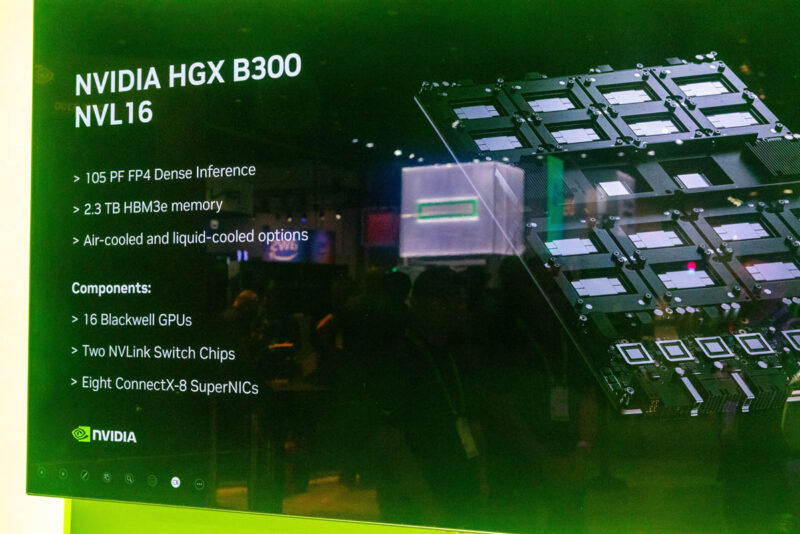

NVIDIA is making a massive change to its HGX B300 platform. The easy part first: it is now called the NVIDIA HGX B300 NVL16, because NVIDIA now counts the number of compute dies connected via NVLink instead of the number of GPU packages. Beyond that, it gets more exciting, and server manufacturers are trying to figure out how to accommodate the new subsystem.

The NVIDIA HGX B300 NVL16 is Massively Different

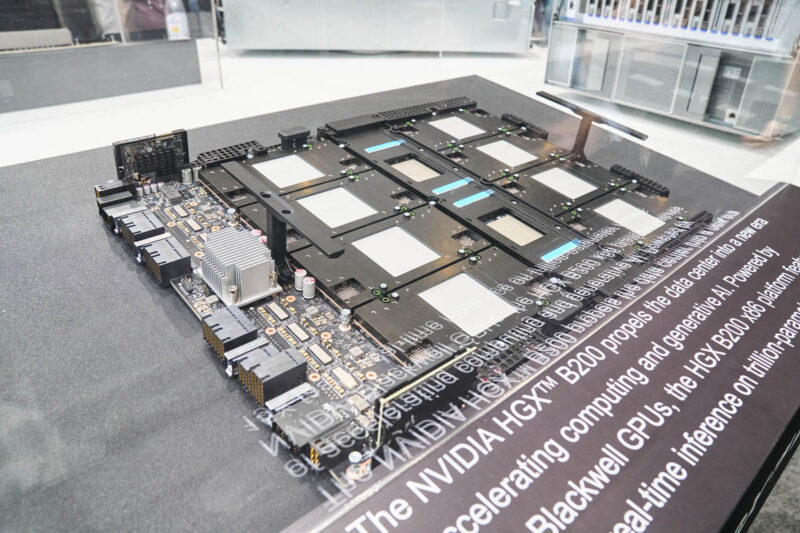

Some time ago we posted the NVIDIA DGX versus NVIDIA HGX What is the Difference? article. Until the HGX B200, things largely remained the same. That is now changing with the NVIDIA HGX B300 NVL16. Onboard we get up to 2.3TB of HBM3e memory. We can see the eight dual-die Blackwell GPU packages, for sixteen compute dies in total, in the image below, but there is a lot more going on here.
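To make the naming and memory figures concrete, here is a quick back-of-the-envelope sketch. It takes the eight GPU packages with two Blackwell dies each described in this article, plus the quoted "up to 2.3TB" of HBM3e. The even split across packages and the decimal TB-to-GB conversion are assumptions for illustration, and the function names are hypothetical, not anything from NVIDIA.

```python
# Figures come from the article text; the even split and decimal units are assumptions.
GPU_PACKAGES = 8        # eight GPU packages on the baseboard
DIES_PER_PACKAGE = 2    # each package carries two Blackwell compute dies
TOTAL_HBM3E_TB = 2.3    # "up to 2.3TB" as quoted (a rounded figure)


def nvl_count(packages: int, dies_per_package: int) -> int:
    """Compute dies connected via NVLink, which is what the NVL suffix now counts."""
    return packages * dies_per_package


def hbm_per_unit_gb(total_tb: float, units: int) -> float:
    """Approximate HBM per unit in GB, assuming 1 TB = 1000 GB and an even split."""
    return total_tb * 1000 / units


dies = nvl_count(GPU_PACKAGES, DIES_PER_PACKAGE)
print(f"Platform suffix: NVL{dies}")
print(f"Approx. HBM3e per package: {hbm_per_unit_gb(TOTAL_HBM3E_TB, GPU_PACKAGES):.2f} GB")
print(f"Approx. HBM3e per die: {hbm_per_unit_gb(TOTAL_HBM3E_TB, dies):.2f} GB")
```

Because 2.3TB is itself a rounded number, the per-package and per-die results are only approximate.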

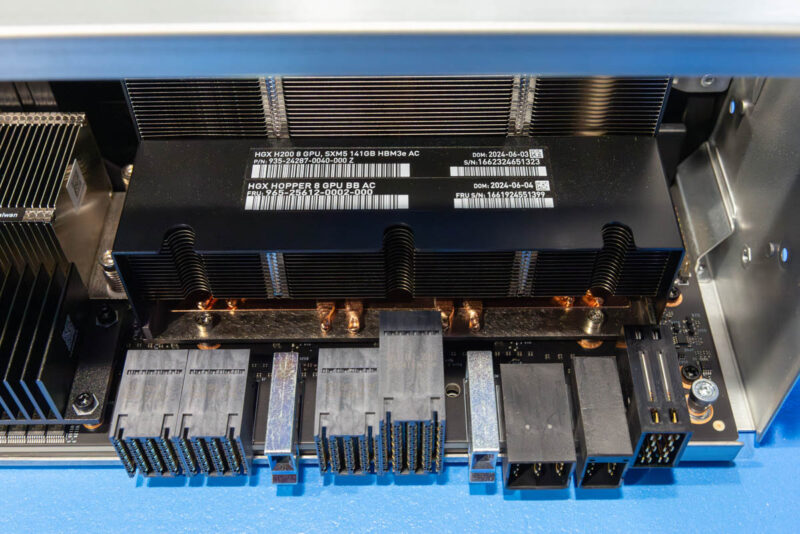

Last year we saw the NVIDIA NVLink Switch Chips Change to the HGX B200. Here you can see the two NVLink switch chips in the middle of the eight GPU packages. This is similar to what we see in the image above of the HGX B300 and when we look at the assemblies.

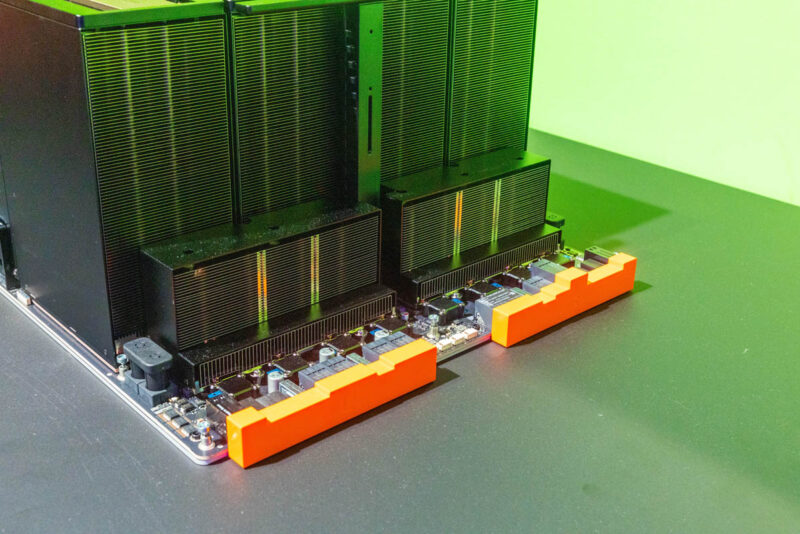

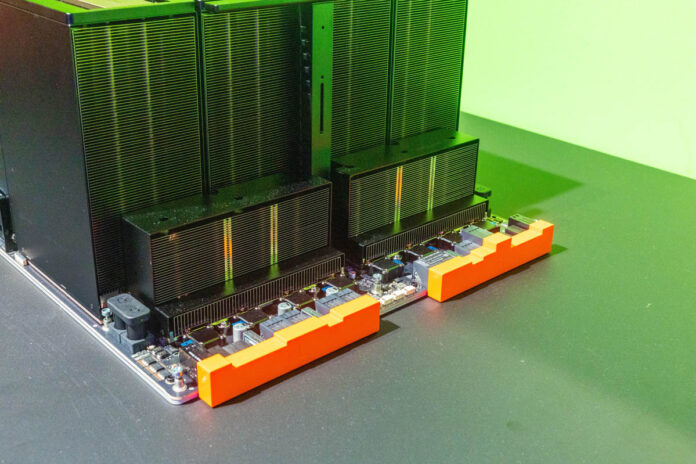

We can see the eight large air-cooling heatsink towers, each servicing two Blackwell GPUs, and in the middle we have the section for the NVLink switches. At first, this may look like a very straightforward swap.

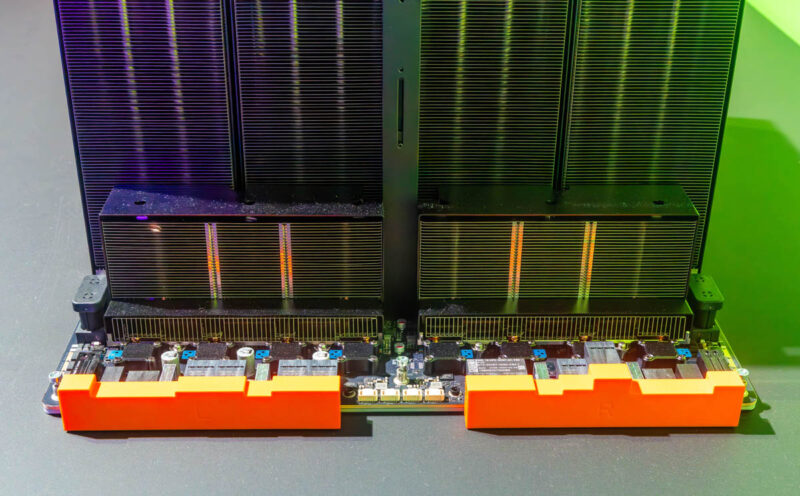

On the end, we can see the OCP UBB-style connectors with big orange covers. The high-density connectors that AMD, NVIDIA, and others use as part of the OCP UBB spec are very brittle, so it is important to keep covers on them when they are not in use.

Between these orange-covered connectors and the large GPU heatsinks, we have smaller heatsinks. Instead of cooling PCIe retimers, these now cool NVIDIA ConnectX-8 NICs.

The connectors for the NICs sit between the UBB connectors and the eight NVIDIA ConnectX-8 NICs that are on the UBB. Here is a similar view of the HGX H200, where those eight top-facing connectors are not present.

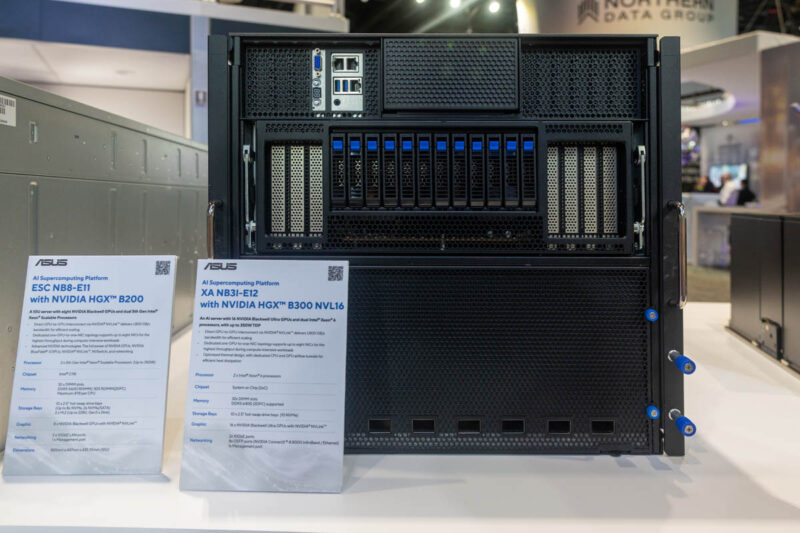

NVIDIA is able to use the built-in PCIe switch in the ConnectX-8 to provide functionality that previously required other chips. It may not seem like a big deal, but for the industry this is shaking things up. For example, in our ASUS AI Pod NVIDIA GB300 NVL72 at NVIDIA GTC 2025 tour, we briefly showed the ASUS XA NB3I-E12, where you can see the eight network connections on the removable HGX tray along the bottom of the system.

Different vendors have different tray designs. Often the side of the tray that leads to the external chassis face is opposite the ConnectX-8 NICs, so cabling needs to bridge the gap. Other vendors are still trying to figure out how they will deal with the change.

Final Words

This may seem like a small change at first, but it is a big move for NVIDIA. In some ways, it simplifies system design. At the same time, if you are using either the NVIDIA HGX B300 platform or the NVIDIA GB200/GB300 platforms, you will end up using NVIDIA for your east-west GPU networking given the new design integration. The north-south networks are still a bit more up for grabs, but this is a big move by NVIDIA in the HGX B300 generation. NVIDIA is putting more GPU silicon on the platform, but it is also taking away the networking choice.

In the Substack, we go into why this is going to be rough for Broadcom, Astera Labs, and more as the system design changes.
