With 20 Arm cores connected over C2C to a Blackwell-generation GPU, 128GB of LPDDR5X memory, and 200GbE NVIDIA ConnectX-7 networking, the NVIDIA DGX Spark is an exciting machine. At $3999 it is far from cheap, but we expect folks to build some awesome clusters with it.

The NVIDIA DGX Spark is a Tiny 128GB AI Mini PC Made for Scale-Out Clustering

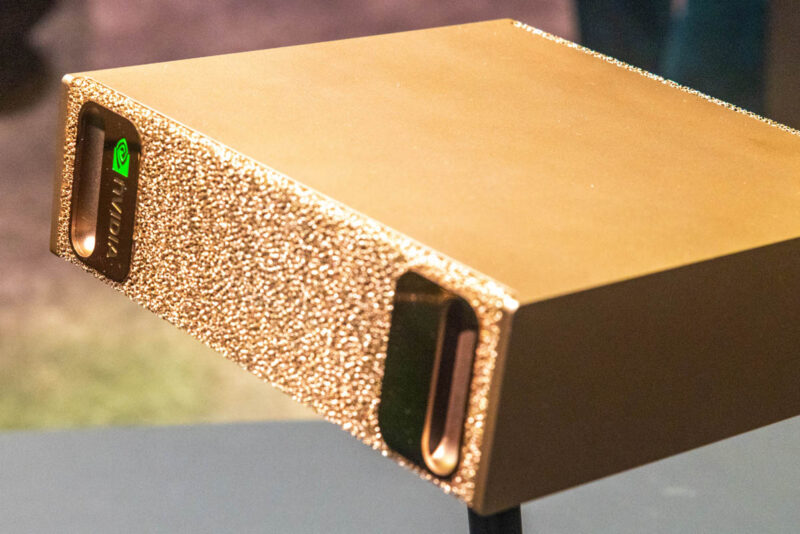

The NVIDIA DGX Spark is tiny, easily fitting into the palm of your hand, with flashy styling reminiscent of the NVIDIA DGX-1. What is inside is going to be a game-changer for those serious about local and portable AI development. Instead of a large, power-hungry rack server, this is a 170W device.

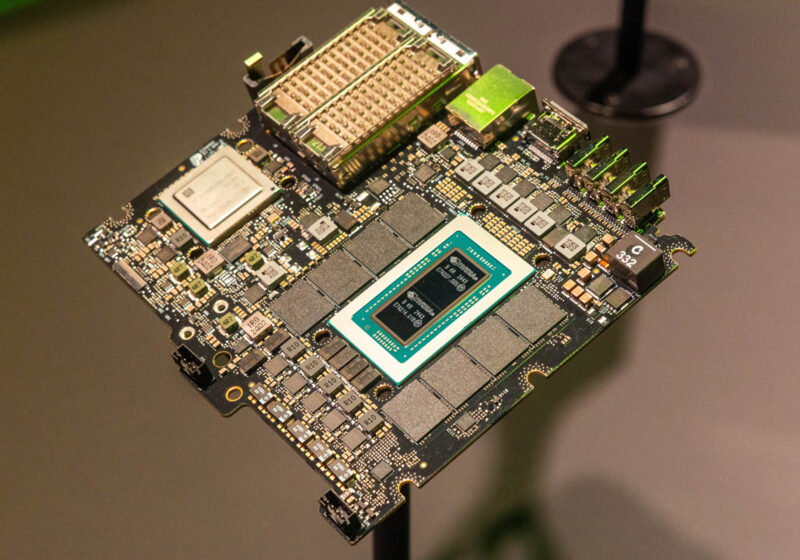

Inside, the Arm-based CPU offers 10 Cortex-X925 and 10 Cortex-A725 cores, for 20 cores total. Unlike the GB300 data center GPU, the GB10 has display outputs. The package is flanked by 128GB of LPDDR5X shared memory (rated at 273GB/s).

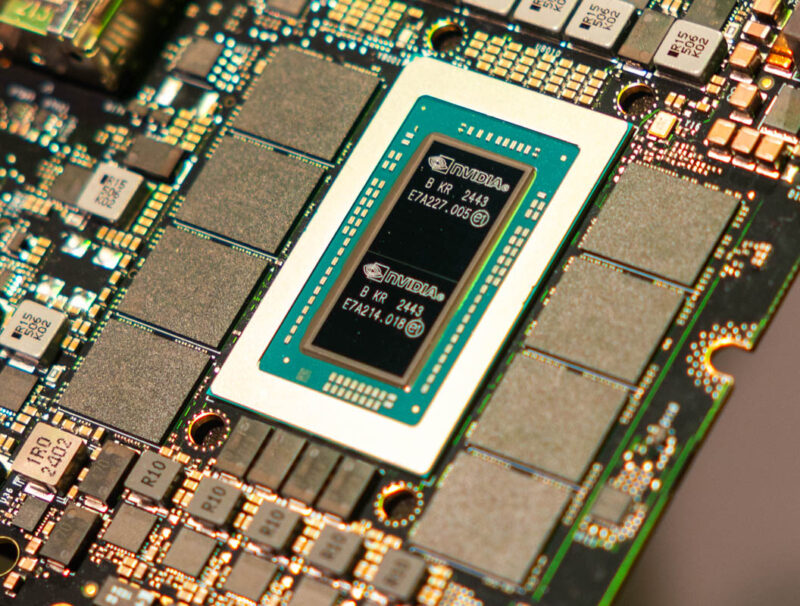

NVIDIA and MediaTek worked together on the GB10. The NVIDIA GB10 combines an Arm CPU and an NVIDIA Blackwell GPU in a single package, linked by NVIDIA's C2C interconnect. You can see the two-die package in the close-up.

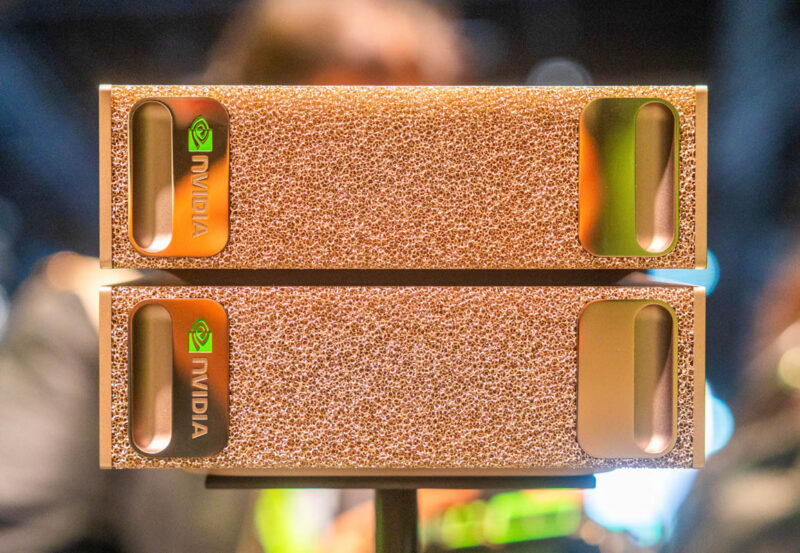

Speaking of two, NVIDIA is not just selling and supporting these as single AI mini PCs. A two-unit cluster will also be a sold and supported configuration.

On the back we have four USB4 40Gbps ports, an HDMI port, a 10GbE port, and the dual-port NVIDIA ConnectX-7 NIC that we were told supports 200GbE clustering with a second unit.

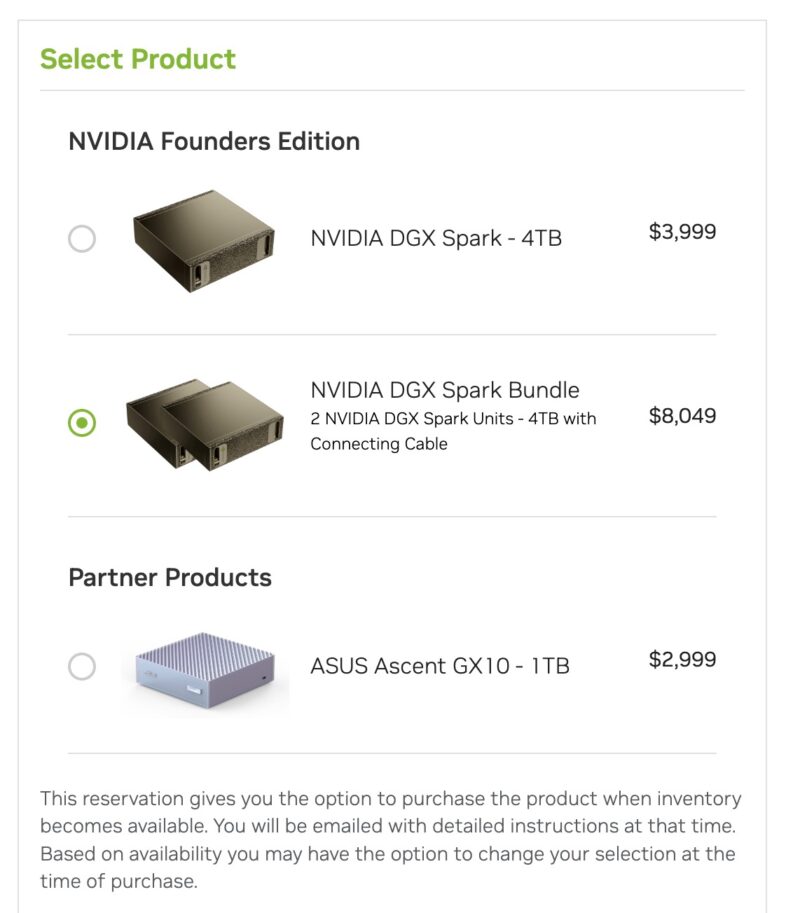

Indeed, on the pre-order page, the NVIDIA DGX Spark 4TB is listed at $3999, or $1000 more than the ASUS Ascent GX10, which shares the same motherboard but has only 1TB of local storage. There is also an NVIDIA DGX Spark Bundle option with two of these units and a QSFP cable for clustering.

We asked about the ability to connect more than two. NVIDIA said that it is initially focused on bringing out 2x GB10 cluster configurations using the 200GbE RDMA networking. There is nothing really stopping folks from scaling out further, other than that it is not an initially supported NVIDIA configuration. NVIDIA will ship these with NVIDIA DGX OS, the same OS that comes on DGX systems. That is an Ubuntu Linux base with many of the NVIDIA drivers and goodies baked in, so tools like NCCL for scale-out work out of the box.

Final Words

In a package coming in at just over 1.1L and 1.2kg, it is hard not to be excited about one of these, and even harder not to be excited about clustering these small systems. The addition of real high-speed networking means these could easily be paired with network storage, perhaps making the 1TB versions a better value.

Somewhat surprisingly, even with NVIDIA showing off huge systems, this might be one of my favorite product announcements of the entire show, especially since NVIDIA is showing off and taking pre-order reservations for the clustered versions. Apple may have better memory bandwidth on the M3 Ultra, but this is in another league of scale-out networking compared to 10GbE or a direct Thunderbolt network. Just the ability to cluster two units with a single cable makes these very interesting. The big question is whether NVIDIA will try to scale these out even further (or whether users will beat them to it and do it on their own). We are hoping to see these ship this summer.

How are these powered? There’s no info on the NVIDIA page. The pictures of the ASUS version show one USB power port. How much power do they consume?

@Y0s – lack of detail from an NVIDIA web page? color me shocked

@Y0s

I see no power port myself, but it’s 170W …so I’m thinking this is USB powered. Laptop USB power ports are now up over 200W so I’m thinking that’s what is going on.

This is correct. USB Type-C power input. I think it makes a bit more sense with the ASUS labeling.

There's a small nuclear fusion power reactor inside powering this wonderful device

Just kidding

Great stuff

Thanks Patrick

I want to see a comparison between this and a similarly priced M3 Ultra Mac Studio. I heard this can run a 200B model, but I've also heard it was going to be $1,000 less, while a fully specced M3 Ultra can run a 600B model, or something to that effect.

looks like this summer will be summer of clusters with the mac studio already out and these devices on the way!

ok, I Reserved a DGX Bundle . . . this should be interesting :)

Late to the game, but the photos give me no idea how those 170W are going to be dissipated without melting the thing.