Key Lessons Learned

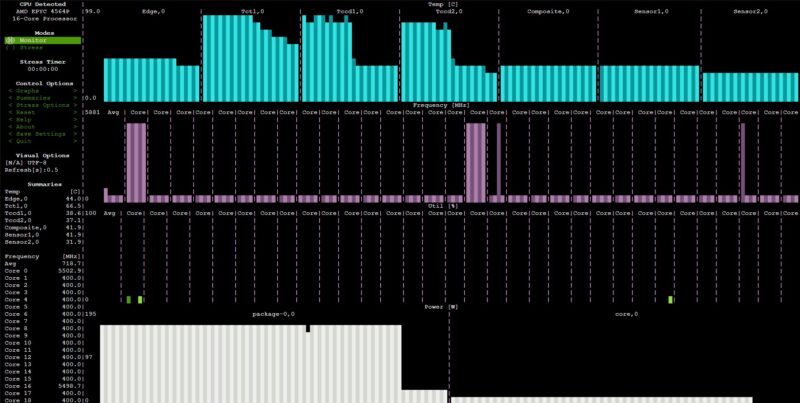

One cool thing about these chips is just how wide their frequency range is. Here is a great example, with some cores parked at 400MHz while others run at 5.4GHz. That shows how aggressive power management is on these platforms.

The 65W AMD EPYC 4224P can keep a server at around 120-125W, which suits the 1U, 1A at 120V deployments common in the very low-density racks these servers are often installed in. At the other end, the AMD EPYC 4565P can draw 170-185W at the CPU package alone, pushing a server well over 240W under load. There is quite a range here.
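To make the power budget concrete, here is a minimal sketch that converts server wall power into current draw at 120V. It assumes watts ≈ volts × amps (a power factor of about 1), which is a simplification; the helper names `amps_required` and `fits_budget` are hypothetical, and the wattages are the rounded figures from the paragraph above.

```python
# Sketch: current drawn by a server at a given line voltage, and whether it
# fits a per-server allocation such as "1A at 120V".
# Assumption: watts ~= volts * amps (power factor ~= 1). Real PSUs have a
# power factor below 1, so actual current draw will be somewhat higher.

def amps_required(server_watts: float, volts: float = 120.0) -> float:
    """Approximate current (A) drawn by a server consuming `server_watts`."""
    return server_watts / volts


def fits_budget(server_watts: float, amps: float = 1.0, volts: float = 120.0) -> bool:
    """True if the server stays within an `amps` allocation at `volts`."""
    return amps_required(server_watts, volts) <= amps


# Rounded wall-power figures from the text (illustrative only).
examples = {
    "EPYC 4224P server (low end)": 120.0,
    "EPYC 4224P server (high end)": 125.0,
    "EPYC 4565P server under load (240W+)": 240.0,
}

for label, watts in examples.items():
    print(f"{label}: {watts:.0f} W -> {amps_required(watts):.2f} A at 120V, "
          f"fits 1A: {fits_budget(watts)}")
```

Under these assumptions the 4224P systems sit right around the nominal 1A line, while a 4565P system under load needs at least 2A at 120V.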

Really, the big question now is how Intel responds to this. Years ago, I wrote in a Xeon E article that if AMD ever made an EPYC Ryzen, it would put Intel in a tough spot. That is exactly what is happening now. Armed with only up to 8 P-cores, Intel’s socket simply runs out of steam. Adding E-cores to the Xeon E series would mean folks would buy the CPUs, try installing VMware ESXi on the server, and get a PSOD (purple screen of death) due to the P+E core mix. The funny part is that I was talking to someone on the Xeon E team several years ago who had read what I wrote about the EPYC Ryzen, and their response at the time was something along the lines of “I am just hoping they see the market as too small, because that assessment is right.” Now that day is here.

Final Words

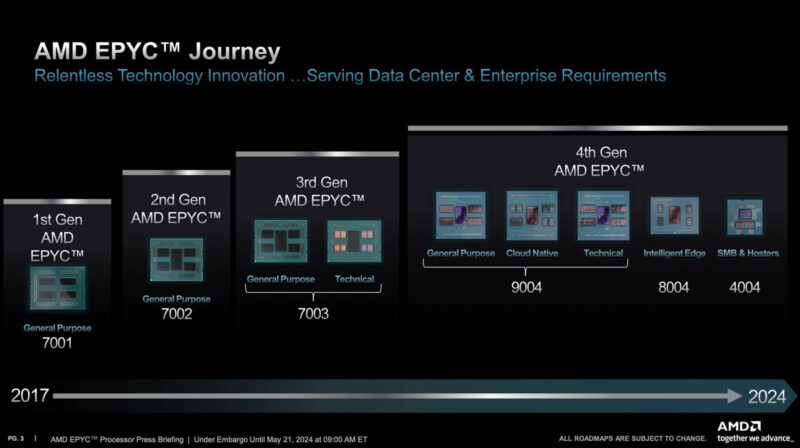

AMD now has a somewhat crazy portfolio of SKUs. That is saying a lot, since the last time it launched a mainstream server CPU was over 18 months ago with AMD EPYC Genoa. Intel has launched two mainstream generations in that time and will be moving onto a new platform before we get a Genoa refresh. Part of the reason is that EPYC has gone from a single CPU offering in 2020 to five different flavors in 2024 across three different sockets. This is a big reason AMD is gaining market share in servers: it is taking common components and scaling them to different market segments.

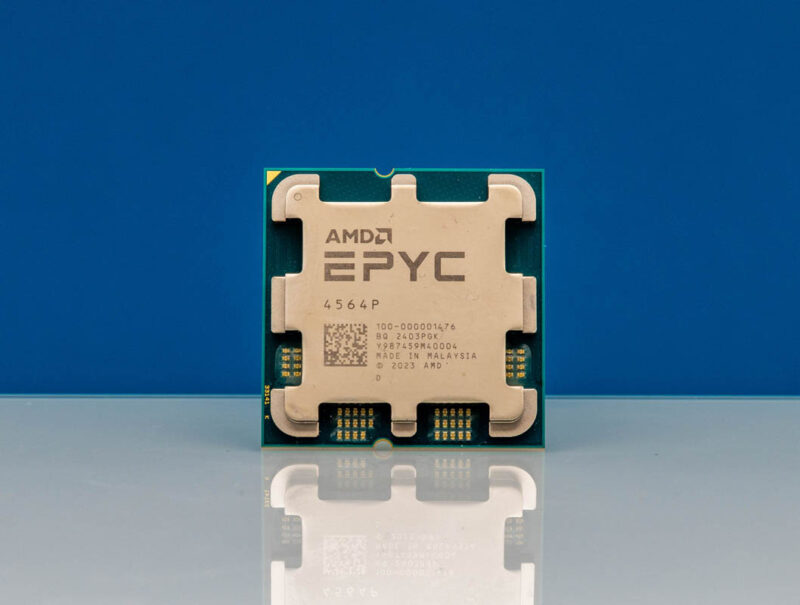

There will be a lot of folks who wonder why we need this part, since AMD did not restrict ECC on Ryzen. Putting the AMD EPYC label on the part means more validation for things like high-speed NICs, officially supported ECC memory, and server OSes and applications. As much as I see both the Xeon E and EPYC 4004 series as redundant with their desktop counterparts, I also understand why they exist. It is the same reason the entry-level server parts trail their desktop counterparts chronologically.

Overall, the AMD EPYC 4004 straight wallops Intel Xeon E on a performance per socket basis, while offering lower cost per core, and different options including 3D V-cache models. This is the day of reckoning we have been waiting for in the entry server market for more than half a decade. Intel needs to re-think its strategy for entry Xeons now.

I was looking for a CPU like this for quite some time to replace an old system at work. The 16-core one will probably be the CPU we’ll end up using.

Now we just need 64GB ECC UDIMMs at a reasonable price. Preferably with a 4x64GB kit option from Crucial/Micron.

Fantastic. As Brian S mentions, we just need 64GB ECC UDIMMs and this will be perfect to replace my aging ZFS server: 256GB of RAM without having to move to large, power-hungry sockets.

I was hoping they would use 3D V-Cache on both chiplets so we could avoid heterogeneous performance issues, but alas, we get the same cache setup as desktop Ryzen.

As noted in the article, this makes the absence of an 8-core 3D V-Cache 4364PX model all the more glaring.

Maximum supported memory is 192GB, as indicated in the first AMD slide. So unless this is an artificial limit like on some Intel processors, this is the max.

I have been doing essentially this for a while with my colo, using a Ryzen 7900 with 128GB of ECC memory in an ASRock Rack 1U. It’s pretty much unbeatable for performance at the price, it runs at around 0.5A at 240V so efficiency is excellent, and you get superb single-core performance with turbo going over 5GHz.

Never could understand why AMD didn’t embrace the segment earlier – because Intel have no answer with their current ranges.

Looks like these CPUs will be sold at Newegg for consumer retail, hopefully not an OEM only launch…

The real cost is how much the server motherboards will cost (the only difference between the consumer and server boards in this case will be IPMI). I’m guessing it will be a couple hundred dollars for the cheapest server motherboards. So if these server boards still cost an arm and a leg, it might be worthwhile for homelab users to get a consumer AM5 motherboard that is known to work with ECC and just forgo IPMI.

I don’t see the appeal of 3D V-Cache in a server processor at this point. I’d rather take a more efficient CPU instead. I would love to see newer motherboards with integrated 10G, SAS and/or U.2 ports that would be useful for home use.

@TLN I agree. AM4 had Ryzen PRO APUs, which came in 35W and 65W versions and are basically the closest thing to this EPYC launch (ECC memory works, OEM variant). No 35W launch kinda sucks, but I bet there is some BIOS setting to limit power usage.

I don’t understand the point of these tiny Xeons and EPYCs. Each one is thousands of dollars and their performance is laughable. I get that they can be scaled, but still, they’re absolutely horrible. Is there something else I’m missing?

@Sussy, what you’re missing is in the article, and it’s that they are NOT thousands of dollars.

“AMD’s pricing is also very strong. Its 65W TDP SKUs are priced between $36-41 per core”

$40 x 4 cores…you do the math
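For anyone who wants to do the math, here is a small sketch that turns the quoted $36-41 per-core band into an estimated price range for a given core count. It assumes the per-core band quoted for the 65W SKUs applies to the part in question; `price_range` is a hypothetical helper, and actual list prices vary by SKU.

```python
# Sketch: estimated price range for a SKU from a per-core price band.
# Assumption: the $36-41 per-core band quoted for AMD's 65W SKUs applies to
# the core count in question; actual list prices vary by SKU.

LOW_PER_CORE = 36.0   # USD per core, low end of the quoted range
HIGH_PER_CORE = 41.0  # USD per core, high end of the quoted range


def price_range(cores: int,
                low: float = LOW_PER_CORE,
                high: float = HIGH_PER_CORE) -> tuple[float, float]:
    """Return the (low, high) estimated price in USD for a `cores`-core part."""
    return cores * low, cores * high


low, high = price_range(4)
print(f"4 cores: ${low:.0f}-${high:.0f}")  # 4 cores: $144-$164
```

The "$40 x 4 cores" figure ($160) lands inside that range, which is nowhere near thousands of dollars.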

I wonder how much it would cost Intel to bring at least a bit of the networking features they use on Xeon Ds and their server/appliance-oriented Atoms to Xeon E (e.g., at least 1x 10GbE and enough QAT punch to handle it).

It wouldn’t change the fact that the competition as a compute part is not pretty for them; but if tighter integration saves money compared with a motherboard NIC or a discrete card, that could probably make the system-level price look more attractive, using IP blocks they already have.

The other thing I’d be curious about is the viability of using the hardware they already have for AMT to do IPMI/Redfish instead. I’m not sure whether Aspeed’s pricing is just too aggressive, or whether too many large customers are either really skittish about proprietary BMCs or very enthusiastic about their own proprietary BMCs. It wouldn’t change the world, but it could shave a little board space and BoM, which certainly wouldn’t hurt when people are making platform-level comparisons.

@Joeri, I doubt it’s a technical limitation. Rather, the biggest DDR5 UDIMMs are 48GB at the moment. Once 64GB modules are available, the maximum will be 256GB. But AMD have not been able to validate this yet, and for a server platform that matters.
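The capacity figures follow from slot count times module size. A minimal sketch, assuming an AM5-style dual-channel DDR5 layout with two DIMMs per channel (four slots, as on typical boards); `max_capacity_gb` is a hypothetical helper:

```python
# Sketch: maximum memory capacity for a platform with a fixed number of
# channels and DIMM slots. Assumption: AM5-style dual-channel DDR5 with two
# DIMMs per channel (four slots), as on typical boards.

CHANNELS = 2
DIMMS_PER_CHANNEL = 2


def max_capacity_gb(dimm_gb: int,
                    channels: int = CHANNELS,
                    dimms_per_channel: int = DIMMS_PER_CHANNEL) -> int:
    """Total capacity in GB when every slot holds a `dimm_gb` module."""
    return channels * dimms_per_channel * dimm_gb


for size in (48, 64):
    print(f"4 x {size}GB -> {max_capacity_gb(size)}GB")
# 4 x 48GB -> 192GB
# 4 x 64GB -> 256GB
```

This only covers physical capacity; whether a given board and CPU are validated for 64GB modules is a separate question.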

If this leads to AM5 mobos with dual x8 PCIe slots (or x8/x4/x4) becoming more common and reasonably priced, then bring it on. If it just means more mobos with a single x16 slot (a yawning chasm of bandwidth), then it is stupid. I do think servers need a bit more flexibility than a typical gamer rig.

@emerth: it will be interesting to see what layouts formally endorsing Ryzen as a server CPU leads to, especially with the increase in systems running higher PCIe speeds doing fewer slots and more cabled risers.

Server use cases are definitely much less likely than gamer ones to be “your GPU, an m.2 or two; that’s basically it”; but 16 core/192GB size servers are presumably going to mostly be 1Us, so it wouldn’t be a huge surprise if there are fewer ‘ATX/mATX but with 2×8 or x8, 2×4’ and more ‘2×16(mechanical), one cabled one right-angle riser’ or ‘single riser with a mechanical x8 on one side and two single-width mechanical x16s on the other’ just because these motherboards are less likely than the Ryzen ones to be slated for 4Us or pedestal servers.

There will probably be a nonzero number of more desktop-style motherboards, since this will be used as a low-end ‘workstation’ part by people who want desktops but are more paranoid about validation, and that’s still typically tower-case stuff. But boards aimed specifically at low-cost physical servers will likely be heavy on risers (whether cabled or right-angle) just because of the chassis they are intended to pair with.

@fff, risers or cabled connections are fine. Breaking out the PCIe lanes to allow more devices to be attached is the important thing.

Any NVMe RAID support?

I don’t think PCIe 5.0 is going to do me any good without a storage controller that can utilize that extra bandwidth.

I can’t fan out PCIe 5.0 to twice as many 4.0 NVMe drives without a bleeding edge retimer.

If I could get a 48-port SATA HBA at PCIe 5.0 that cost less than the total of the rest of the platform, then I could understand what to do with this thing.

Looks almost ideal for my cheap designs if I could connect those dots.

In addition to Boyd’s, fuzzy’s, and my remarks, there is this: a server does not really need a chipset or a collection of 10/20/40 Gb/s USB ports. There is no need to spend eight PCIe lanes on that stuff like in a desktop board. Vendors should use them for more slots, cabled connections, or a very large number of SATA drives.

@MSQ: NVMe RAID has been supported since RAIDXpert 2.0 (2020). Supermicro explicitly mentions RAID 1 and 0 on their 4004 motherboards with 2x M.2 slots.

A couple of questions to anyone that uses these systems:

1. Where did you buy them? In Eastern Europe there are a lot of resellers for Dell/HPE, but I have not seen any for ASRock Rack.

2. What about RAID? Do you use it on such systems? If so, do you use the motherboard implementation, or maybe a software one?

What is the best platform to buy this processor on?

Glaringly missing from this article is the number of PCIe lanes. With the standard Ryzen 28 lanes, this is no competition for Intel’s Xeon W processors.

Hrmm, now if I could buy these in a small platform, similar to HPE Microserver, would be awesome. I don’t have time anymore for manual builds, but these are perfect otherwise for home custom NAS/Firewall/etc…

What’s the point if it doesn’t have more lanes?

This is an interesting lineup… And even if Intel decides to go with only P-cores, AMD can easily overwhelm them with Zen 4c or Zen 5c cores, up to 32 cores…

Can anyone suggest the best website to buy this processor online?