Tenstorrent showed off more of its Blackhole silicon at Hot Chips 2024. The company is doing something different in AI hardware and has a lot of investment as well as actual design wins, which makes it interesting to see what it is building.

Please note that we are doing these live at Hot Chips 2024 this week, so please excuse typos.

Tenstorrent Blackhole and Metalium For Standalone AI Processing

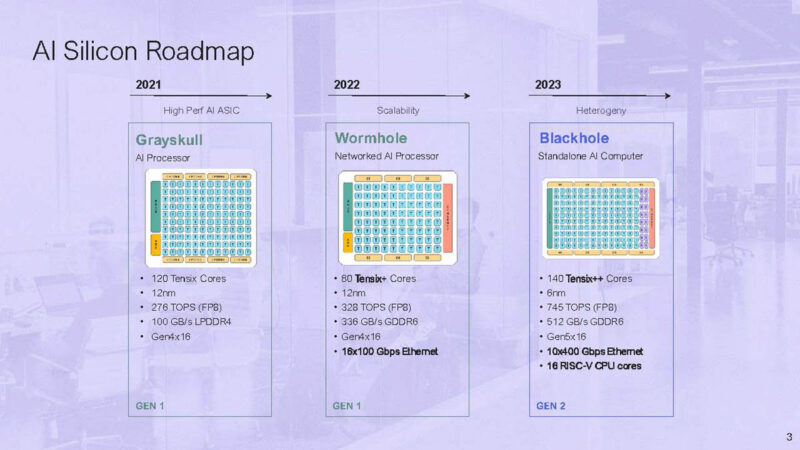

Here is the Tenstorrent AI silicon roadmap. Blackhole is the 2023-and-later chip, and it is a big update over the previous-generation Grayskull and Wormhole.

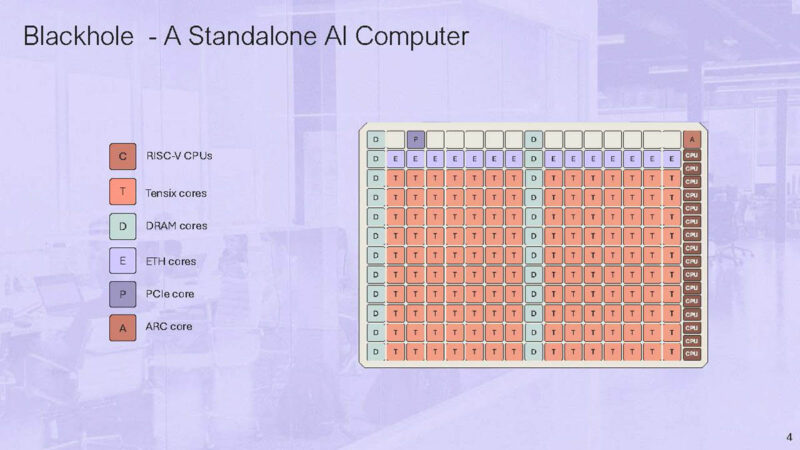

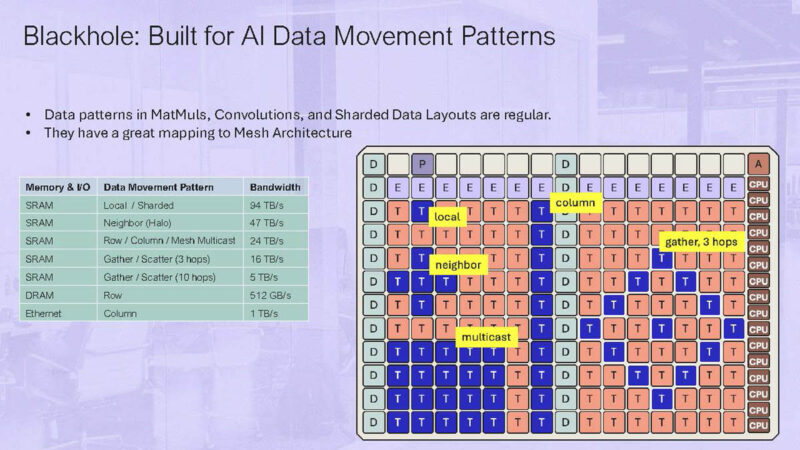

Blackhole is a standalone AI computer based on Ethernet.

The sixteen RISC-V cores are in four clusters of four. The Tensix cores are in the middle with Ethernet on top.

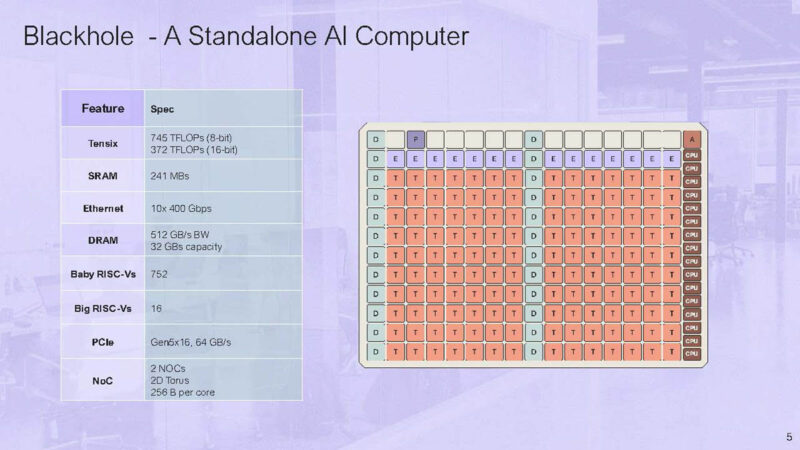

The chip has 10x 400Gbps Ethernet and 512GB/s of bandwidth.
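To put the Ethernet figure in the same units as the 512GB/s number, here is a quick back-of-the-envelope conversion. It is only arithmetic on the quoted port count and line rate, and it assumes raw line rate with no protocol overhead; the function name is ours.

```python
# Figures quoted in the talk: 10 Ethernet ports at 400 Gbps each.
PORTS = 10
GBPS_PER_PORT = 400


def aggregate_gbytes_per_s(ports: int, gbps_per_port: int) -> float:
    """Raw aggregate line rate in gigabytes per second (8 bits per byte)."""
    return ports * gbps_per_port / 8


total_gbps = PORTS * GBPS_PER_PORT
total_gbytes = aggregate_gbytes_per_s(PORTS, GBPS_PER_PORT)
print(f"{total_gbps} Gbps aggregate, about {total_gbytes:.0f} GB/s before overhead")
```

Under that assumption, the aggregate Ethernet line rate lands in the same ballpark as the 512GB/s figure.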

The sixteen big RISC-V cores can run Linux. The other 752 RISC-V cores are called “baby” cores. They are programmable using C kernels, but they do not run Linux.

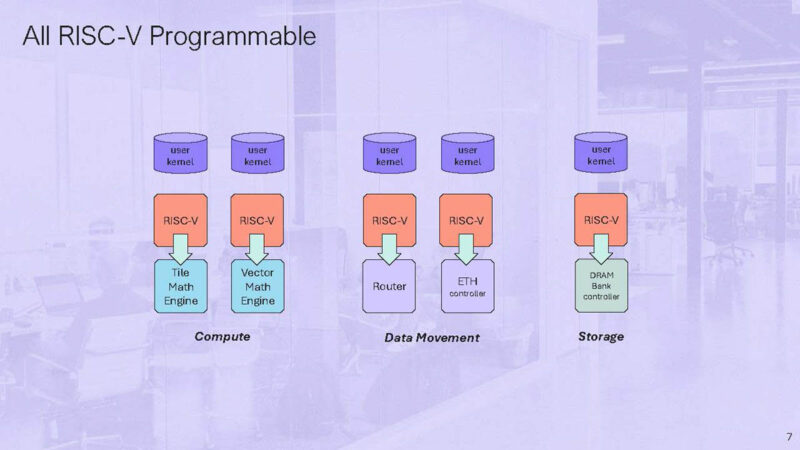

The baby RISC-V cores are programmable and are used for compute, data movement, and storage.

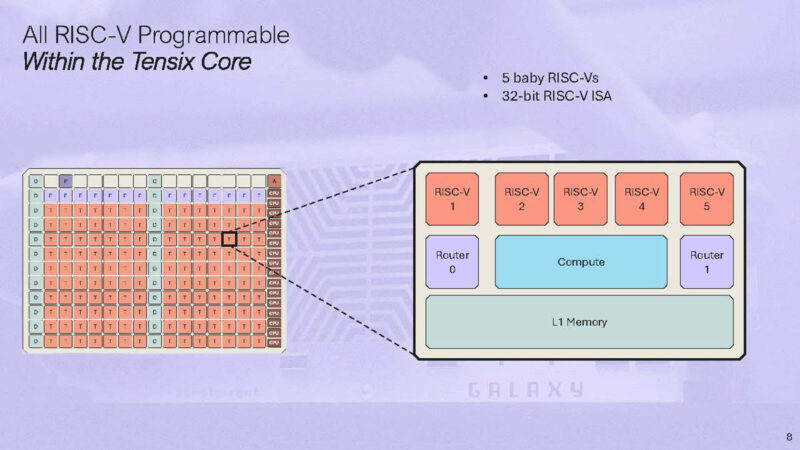

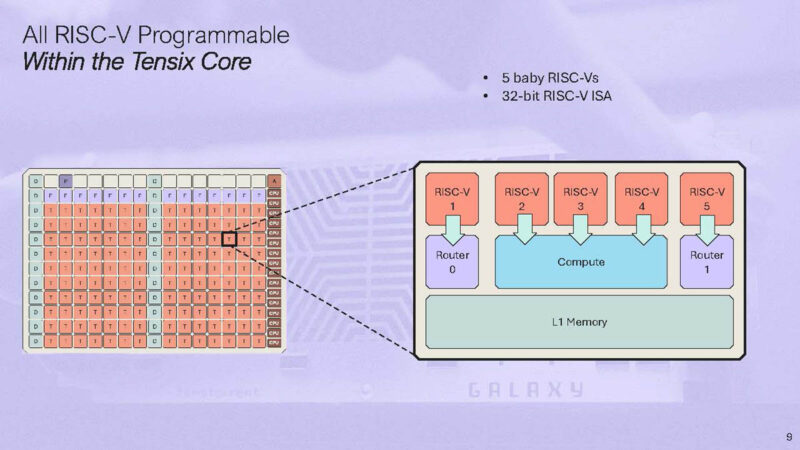

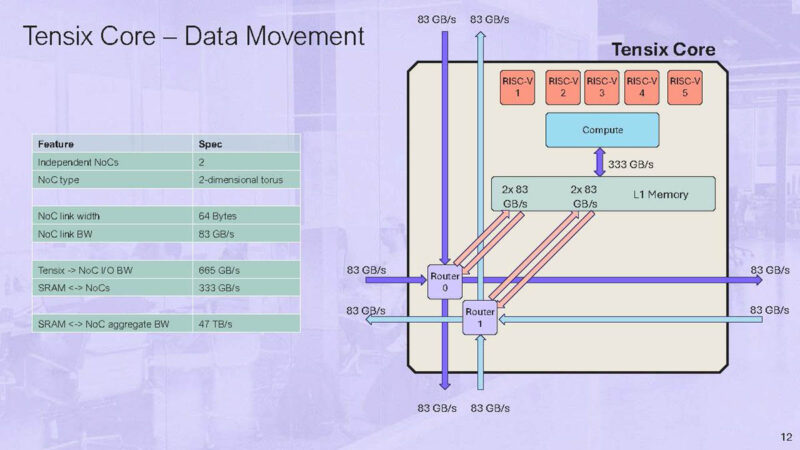

This is a look at the Tensix core with its five baby RISC-V cores.

There are also two routers that connect to the NOC.

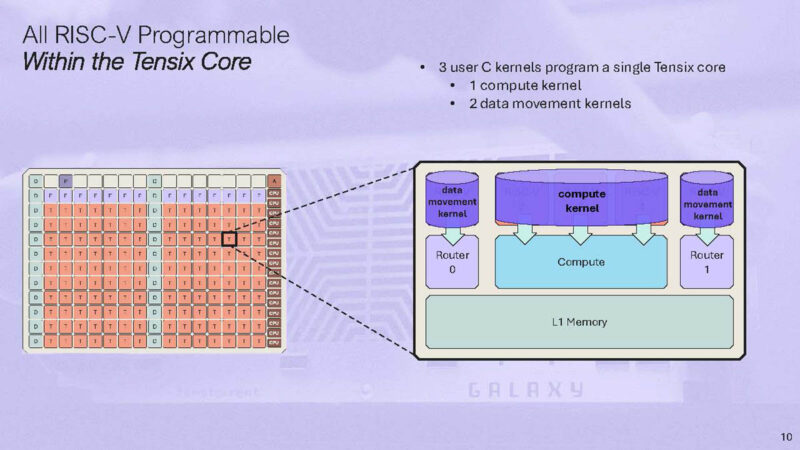

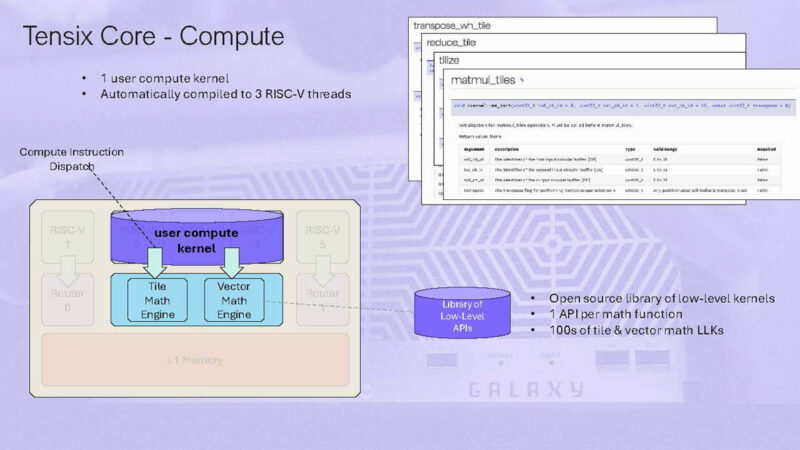

The user can write a compute kernel and two data movement kernels on each Tensix core.

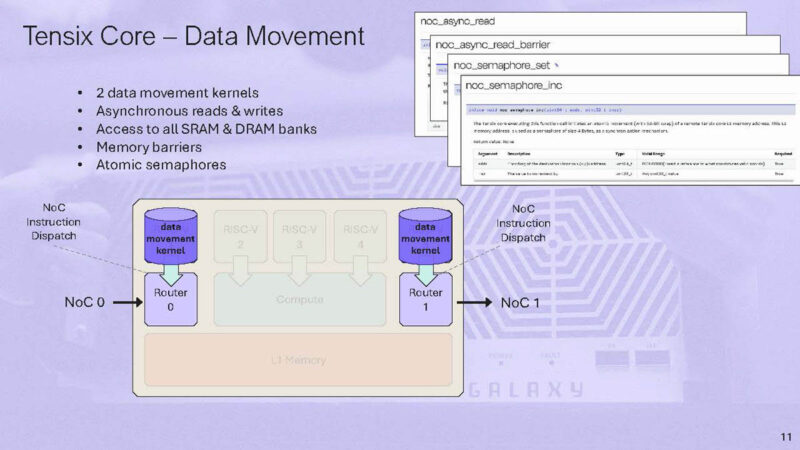

Here is a bit more on the data movement kernels.

Zooming in on the routing, the NOC is statically scheduled. The routers move data up and to the left or down and to the right.
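As a rough way to picture two routers that each move in only one pair of directions, here is a small sketch that counts hops on a grid. The grid size, the wraparound at the edges, and the "pick the shorter path" rule are all our assumptions for illustration; the talk only gave the directions.

```python
# Hypothetical grid size, chosen only for illustration.
ROWS, COLS = 4, 4


def hops_up_left(src, dst, rows=ROWS, cols=COLS):
    """Hops for a router that can only move up (row - 1) and left (col - 1).

    Assumes the grid wraps around at the edges.
    """
    (src_row, src_col), (dst_row, dst_col) = src, dst
    return (src_row - dst_row) % rows + (src_col - dst_col) % cols


def hops_down_right(src, dst, rows=ROWS, cols=COLS):
    """Hops for a router that can only move down (row + 1) and right (col + 1)."""
    (src_row, src_col), (dst_row, dst_col) = src, dst
    return (dst_row - src_row) % rows + (dst_col - src_col) % cols


def pick_router(src, dst):
    """Return the direction pair with fewer hops, plus the hop count."""
    up_left = hops_up_left(src, dst)
    down_right = hops_down_right(src, dst)
    if up_left < down_right:
        return ("up/left", up_left)
    return ("down/right", down_right)


print(pick_router((0, 0), (1, 1)))
print(pick_router((1, 1), (0, 0)))
```

In this toy model, having two routers pointed in opposite directions means a nearby destination is a short trip in either direction instead of a long trip around the grid.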

The cores can be used to do simple or complex operations depending on what is required.

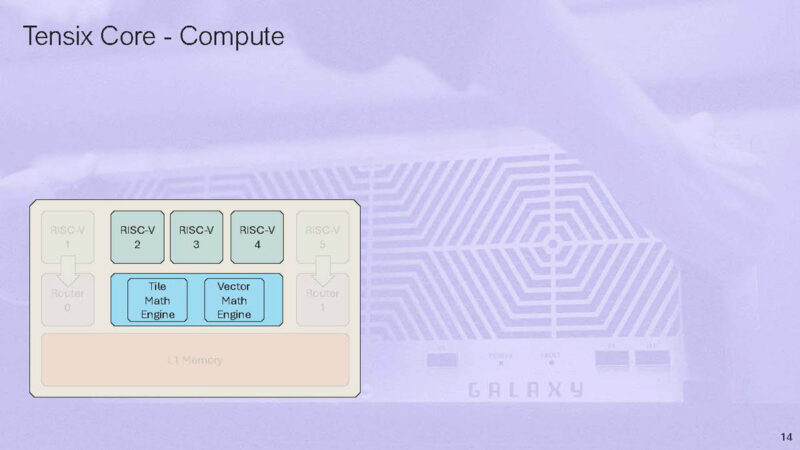

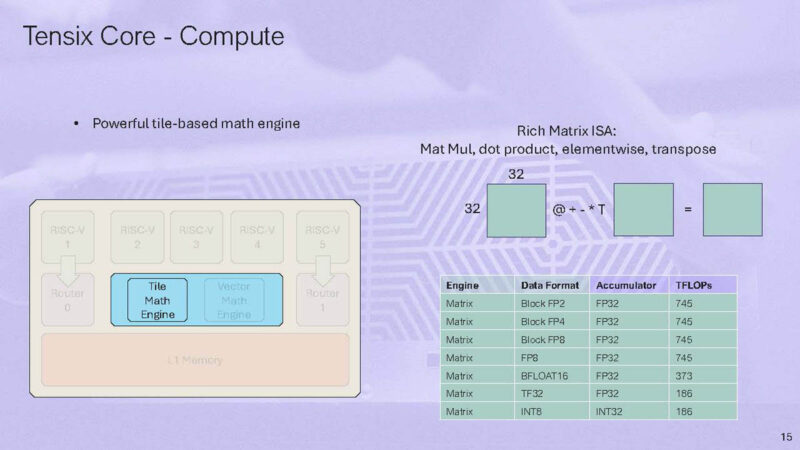

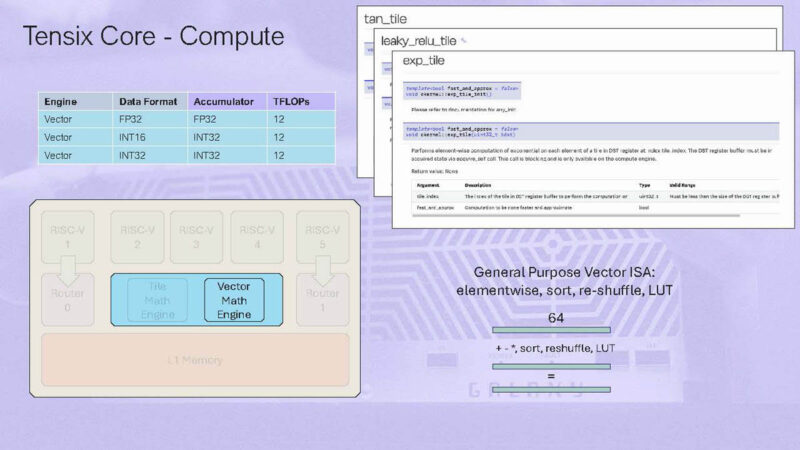

On to the compute engine: there is a tile math engine and a vector math engine.

The tile engine operates on a 32×32 tile.
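To make the 32×32 tile idea concrete, here is a minimal sketch of cutting an arbitrary matrix into 32×32 tiles in plain Python. The zero padding for ragged edges and the function names are our assumptions; this is not how TT-Metalium lays out data, just a picture of what "operating on tiles" implies for the software feeding the engine.

```python
TILE = 32  # tile edge length from the talk


def tile_grid(rows, cols, tile=TILE):
    """Number of tiles along each dimension, rounding up."""
    return (-(-rows // tile), -(-cols // tile))


def split_into_tiles(matrix, tile=TILE, pad=0.0):
    """Return {(tile_row, tile_col): tile x tile block}, padding ragged edges.

    Padding with zeros is an assumption made for this sketch.
    """
    rows, cols = len(matrix), len(matrix[0])
    tiles_down, tiles_across = tile_grid(rows, cols, tile)
    tiles = {}
    for i in range(tiles_down):
        for j in range(tiles_across):
            block = [[pad] * tile for _ in range(tile)]
            for r in range(tile):
                for c in range(tile):
                    rr, cc = i * tile + r, j * tile + c
                    if rr < rows and cc < cols:
                        block[r][c] = matrix[rr][cc]
            tiles[(i, j)] = block
    return tiles


# A 40 x 70 matrix needs a 2 x 3 grid of tiles.
matrix = [[r * 100 + c for c in range(70)] for r in range(40)]
tiles = split_into_tiles(matrix)
print(tile_grid(40, 70), len(tiles))
```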

Here is more on the vector math engine:

One user compute kernel is automatically compiled to 3 RISC-V threads.

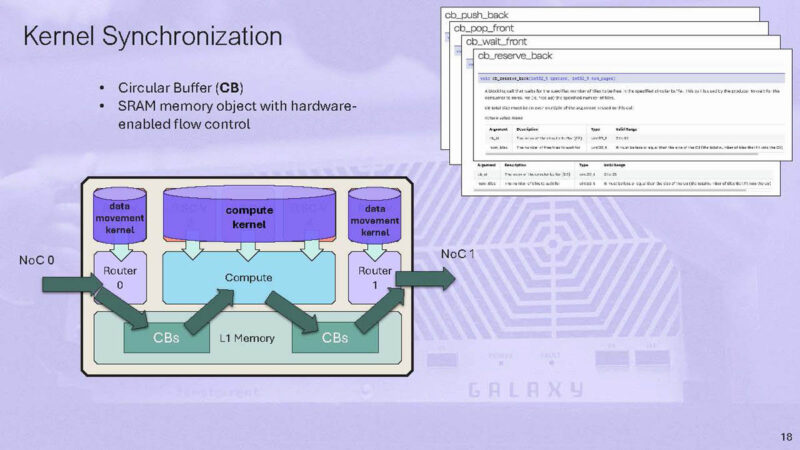

Here is the kernel synchronization. There is hardware-enabled flow control to help synchronize kernels.
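A software analogy may help here. The sketch below models a data movement kernel feeding a compute kernel feeding a second data movement kernel, with small bounded buffers in between so that a fast producer stalls until the consumer catches up. It uses Python threads and queues purely as a stand-in; the names, buffer depth, and squaring "compute" step are our own, and none of this is the TT-Metalium API or how the hardware implements flow control.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def reader(source, out_q):
    """Data movement in: push items, blocking when the buffer is full."""
    for item in source:
        out_q.put(item)
    out_q.put(SENTINEL)


def compute(in_q, out_q):
    """Compute: wait for input, square it, and pass it on."""
    while (item := in_q.get()) is not SENTINEL:
        out_q.put(item * item)
    out_q.put(SENTINEL)


def writer(in_q, sink):
    """Data movement out: drain results into the sink."""
    while (item := in_q.get()) is not SENTINEL:
        sink.append(item)


def run_pipeline(source, depth=2):
    """Run the three stages concurrently with bounded buffers of `depth`."""
    q_in = queue.Queue(maxsize=depth)
    q_out = queue.Queue(maxsize=depth)
    sink = []
    threads = [
        threading.Thread(target=reader, args=(source, q_in)),
        threading.Thread(target=compute, args=(q_in, q_out)),
        threading.Thread(target=writer, args=(q_out, sink)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sink


result = run_pipeline(range(5))
print(result)
```

The point of the analogy is that the blocking `put` and `get` calls do the synchronizing, so the three stages never need to know about each other's timing.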

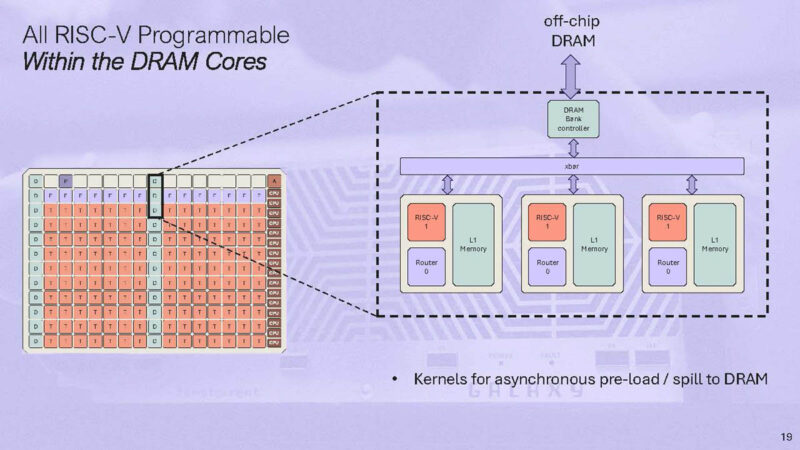

Here is how data moves to the off-chip DRAM. Overall, though, the idea is to keep data local in SRAM as much as possible and rely on the external DRAM as little as possible.

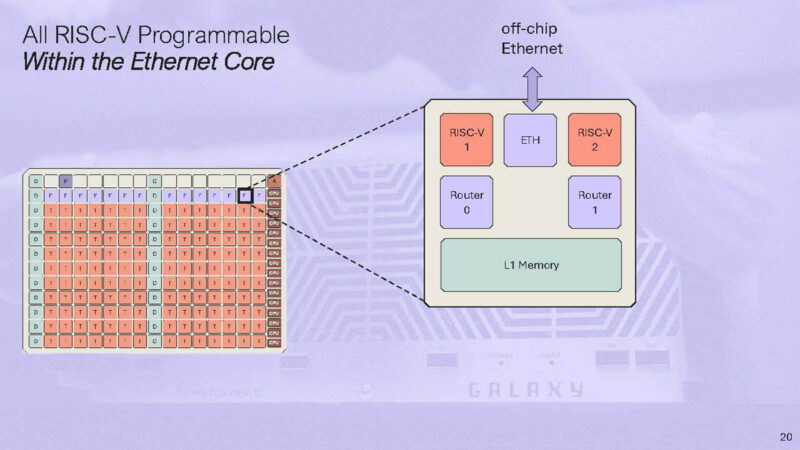

Ethernet is a big deal within the Tenstorrent architecture.

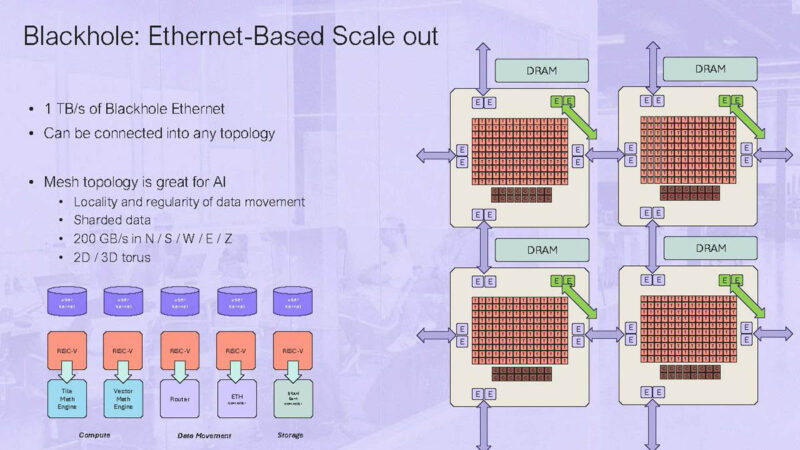

One of the key ideas is that Blackhole will use Ethernet to scale out. Ethernet has the advantage of having regular performance updates, and just about everyone uses it in the industry at some level of their architecture. This is how Tenstorrent is getting a lot of scaling without designing something like NVLink or InfiniBand.

The above shows a 2×2 mesh of Blackholes. AI has a lot of data locality, so these meshes are efficient.

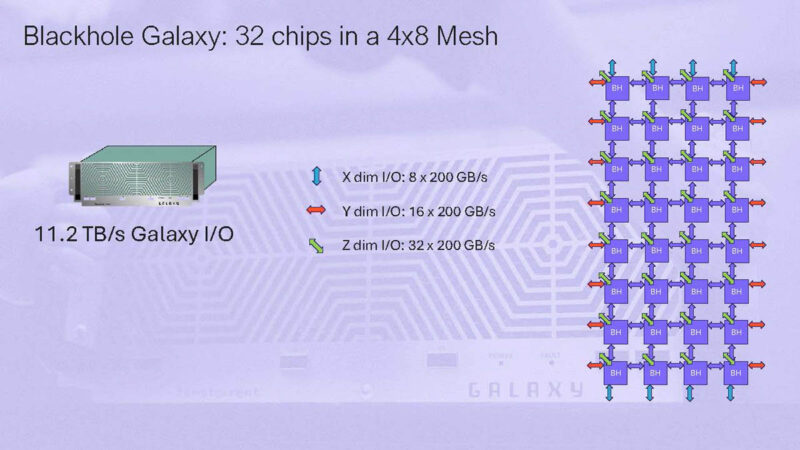

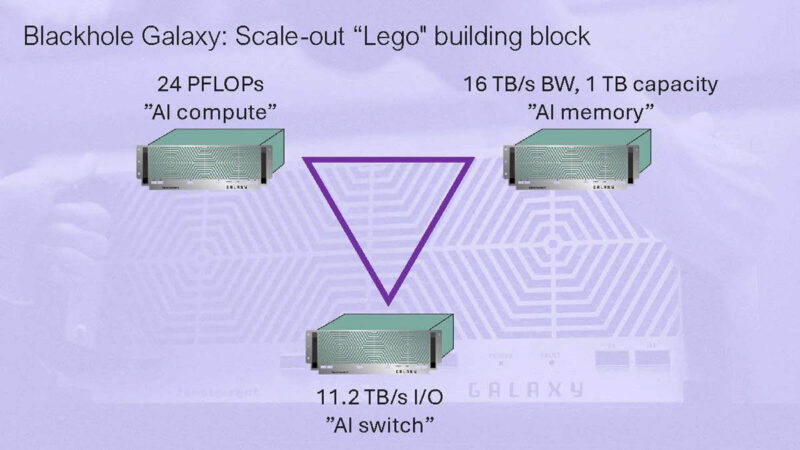

The Blackhole Galaxy will have 32 chips in a 4×8 mesh topology.
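For a feel of what a 4×8 mesh means in terms of connectivity, here is a small sketch that lists each chip's nearest neighbors and counts chip-to-chip links. It assumes a plain 2D mesh with no wraparound links and one link per neighbor pair; the actual Galaxy wiring may differ.

```python
ROWS, COLS = 4, 8  # mesh shape from the talk


def neighbors(r, c, rows=ROWS, cols=COLS):
    """Nearest-neighbor chips of (r, c) in a mesh without wraparound."""
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(x, y) for x, y in candidates if 0 <= x < rows and 0 <= y < cols]


def mesh_links(rows=ROWS, cols=COLS):
    """Count neighbor pairs: horizontal links plus vertical links."""
    return rows * (cols - 1) + cols * (rows - 1)


chips = ROWS * COLS
links = mesh_links()
print(f"{chips} chips, {links} neighbor links")
print("corner chip neighbors:", neighbors(0, 0))
print("interior chip neighbors:", neighbors(1, 3))
```

Corner chips end up with two neighbors and interior chips with four in this model.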

The idea is that one can scale out by adding more boxes to the network.

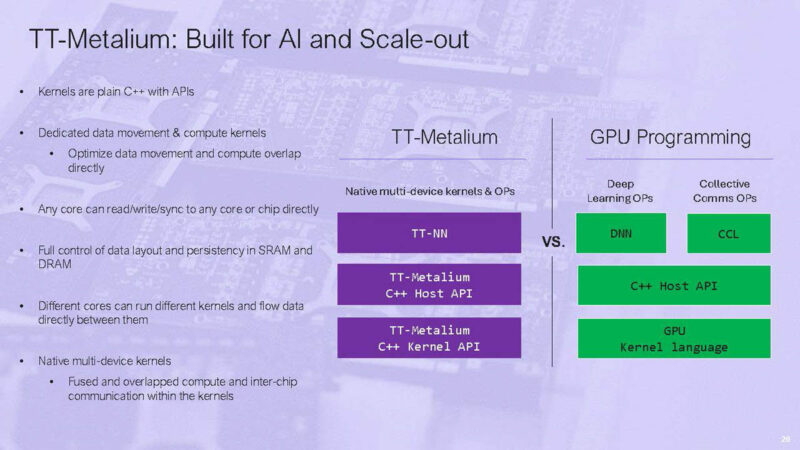

TT-Metalium is part of the company’s low-level programming model to turn hardware into something useful for running AI.

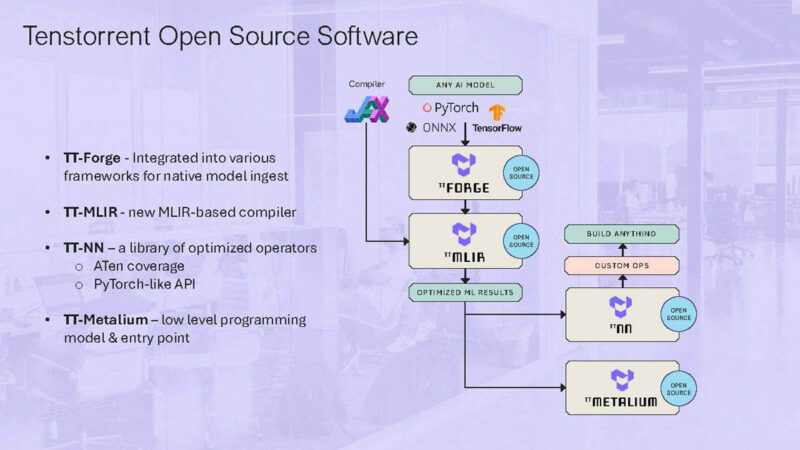

Here is a bit on Tenstorrent open source software.

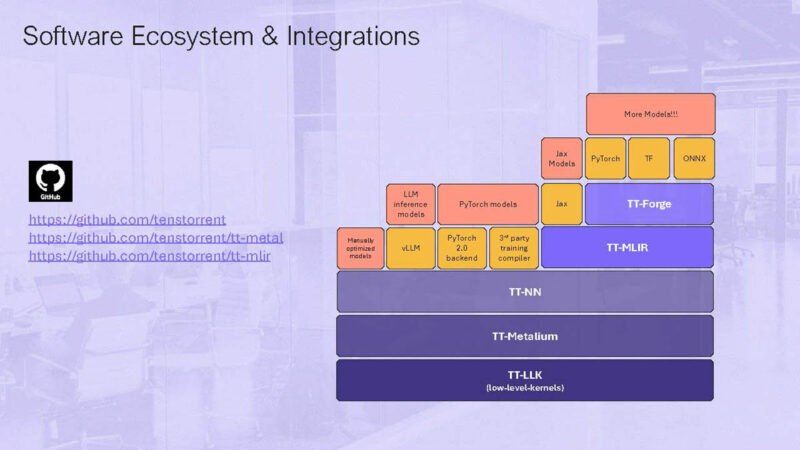

Here is another one on the integrations.

All cool stuff here.

Final Words

If you want to try out Tenstorrent hardware, we just covered Tenstorrent Wormhole Developer Kits Launched. It seems that Blackhole is not as simple to buy, but the systems that these go in are much larger.

Between RISC-V and the use of Ethernet, Tenstorrent is going the way of open systems for AI acceleration, which is cool to see. If you want to know why we were so excited about the Inside a Marvell Teralynx 10 51.2T 64-port 800GbE Switch piece and video, this is one reason 51.2T Ethernet is going to be a big deal in the industry: AI chips like Blackhole are using high-end Ethernet to scale out.