As the first motherboard we have reviewed for the Intel Xeon Scalable Processor generation, the Supermicro X11SPH-nCTF certainly feels like a next-generation platform. We are going to review the motherboard as the foundation of a storage server because it has so many storage-related features, such as an onboard Broadcom SAS3 controller. This is also our first platform using the Intel C622 PCH, which you can read more about in our Burgeoning Intel Xeon SP Lewisburg PCH Options Overview piece. The key difference between the Intel C622 and the lower-end C621 is the inclusion of 10GbE networking, which adds to the appeal of this platform over previous generations. Let us take a look at the board.

Test Configuration

Here is our basic test configuration for this motherboard:

- Motherboard: Supermicro X11SPH-nCTF

- CPU: Intel Xeon Silver 4114

- RAM: 6x 16GB DDR4-2400 RDIMMs (Micron)

- SSD: Intel DC S3710 400GB

- SATADOM: Supermicro 32GB SATADOM

We will note quickly that this motherboard has now taken the following Intel Xeon CPUs in our labs:

- Gold 6132

- Silver 4114

- Silver 4112

- Silver 4108

- Bronze 3106

- Bronze 3104

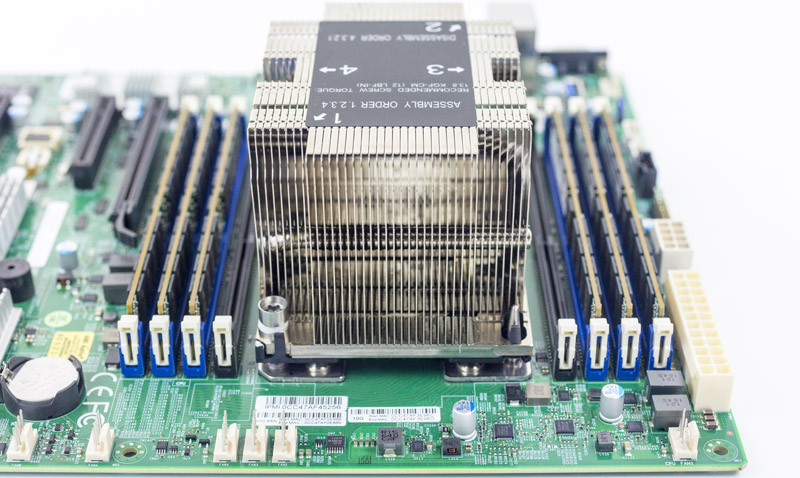

The impressive part is that the heat sink and socket mechanicals have held up well through over 20 CPU installations, which is more than we expect the vast majority of deployments to ever experience. The motherboard is listed as being able to handle 205W TDP CPUs. However, our Intel Xeon Platinum 8180s are being used elsewhere, so we have not had the opportunity to try them yet.

The Intel Xeon Bronze 3104 enabled all of the system’s storage and networking controllers along with the various expansion slots and ports. As you are preparing to configure a storage system, we do recommend looking slightly higher in the stack to at least the Xeon Silver 4108.

Supermicro X11SPH-nCTF Overview

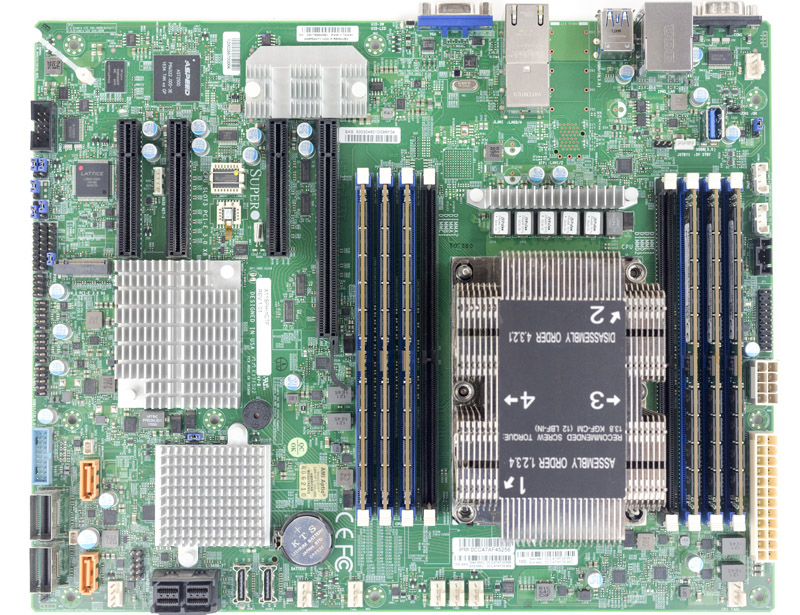

Before delving into the details, the Supermicro X11SPH-nCTF is particularly interesting as an ATX motherboard (12″ x 9.6″). While motherboard sizing is normally less of a concern in servers, the standard form factor means that the X11SPH-nCTF can fit in a wide variety of chassis, potentially including short-depth, tower, and standard rack mount cases.

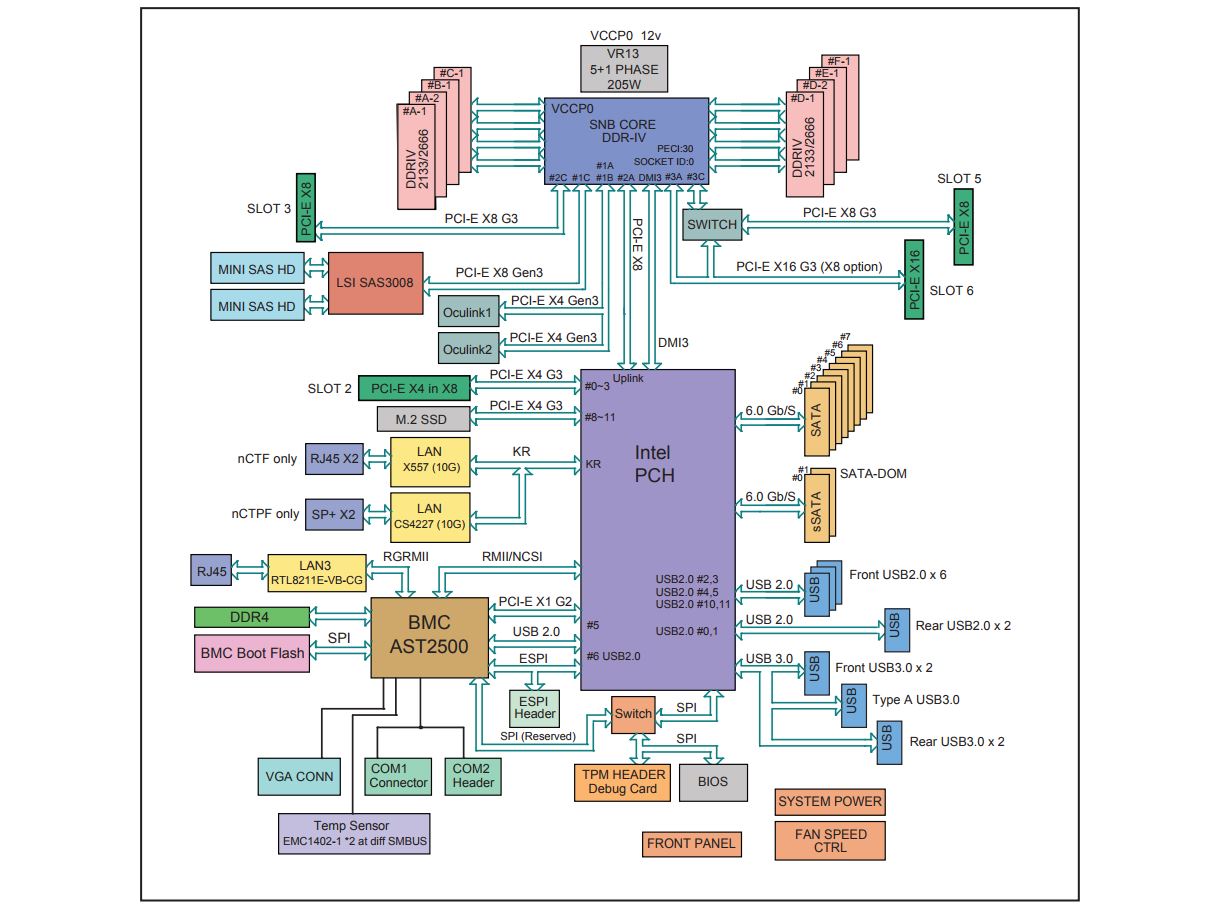

With the new series of CPUs, it is important to look at how the PCH is connected. While the Intel PCH has a standard DMI3 connection, designers like Supermicro can allocate additional PCIe lanes to the PCH. That is what the company did with the X11SPH-nCTF: there is an additional PCIe x8 link to the PCH. This is important because it provides more bandwidth for the ten SATA ports, the 10GbE networking, and the two PCIe slots (PCIe x4 and M.2) that are connected to the PCH.

Running every slot and every device at full speed would still leave the PCH uplink as a bottleneck, but it is very unlikely that your boot drives, both 10GbE links, all eight data SATA devices, the M.2 SSD, and a PCIe x4 device will all be running at 100% at the same time. Had Supermicro used only a DMI3 link to the PCH, it would have been a cause for concern. The additional PCIe 3.0 x8 link alleviates this. On balance, this is an appropriate design choice.
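To put rough numbers on that argument, here is a back-of-the-envelope sketch using theoretical line rates per direction. It rests on assumptions that are not from our testing: DMI3 is treated as equivalent to a PCIe 3.0 x4 link, the M.2 slot is assumed to be x4, and USB and other PCH traffic is ignored. The function names are purely illustrative.

```python
# Back-of-the-envelope PCH bandwidth budget (theoretical, per direction).
# Assumptions: DMI3 ~= PCIe 3.0 x4, the M.2 slot is x4, USB traffic is ignored.

PCIE3_LANE_GBS = 8 * 128 / 130 / 8   # 8 GT/s with 128b/130b encoding, in GB/s
SATA3_PORT_GBS = 6 * 8 / 10 / 8      # 6 Gbps with 8b/10b encoding, in GB/s
TEN_GBE_PORT_GBS = 10 / 8            # 10 Gbps, in GB/s


def uplink_gbs(extra_x8: bool) -> float:
    """Bandwidth between CPU and PCH, with or without the extra x8 link."""
    lanes = 4 + (8 if extra_x8 else 0)
    return lanes * PCIE3_LANE_GBS


def downstream_gbs() -> float:
    """Worst case: every PCH-attached device saturated at once."""
    sata = 10 * SATA3_PORT_GBS          # ten PCH SATA ports
    nics = 2 * TEN_GBE_PORT_GBS         # two 10Gbase-T ports
    pcie_x4_slot = 4 * PCIE3_LANE_GBS   # the x4 electrical slot
    m2_slot = 4 * PCIE3_LANE_GBS        # M.2 slot, assumed x4
    return sata + nics + pcie_x4_slot + m2_slot


if __name__ == "__main__":
    demand = downstream_gbs()
    for label, extra in (("DMI3 only", False), ("DMI3 + PCIe 3.0 x8", True)):
        up = uplink_gbs(extra)
        print(f"{label}: uplink {up:.1f} GB/s, peak demand {demand:.1f} GB/s, "
              f"oversubscription {demand / up:.1f}:1")
```

Under these assumptions the worst-case demand still exceeds the combined uplink, but the oversubscription ratio drops to roughly a third of what a DMI3-only design would see.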

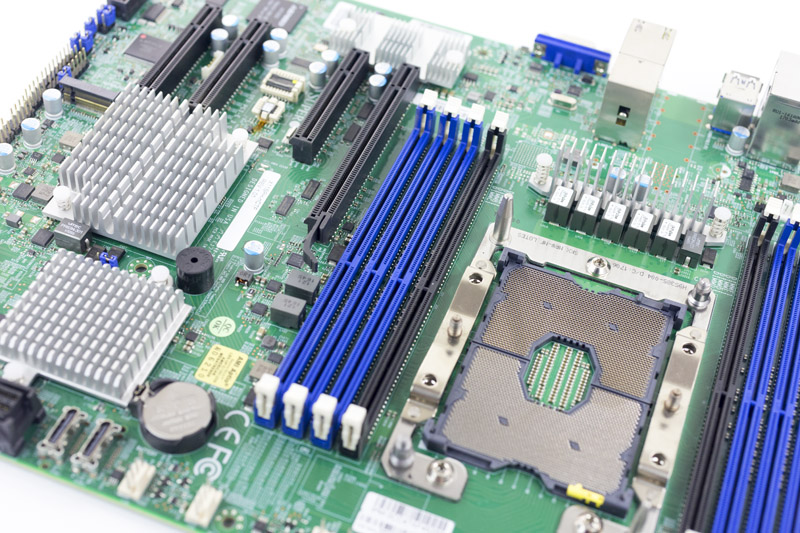

The CPU socket is flanked by eight DDR4 DIMM slots, four on each side. In our Intel Xeon Scalable launch coverage, we showed that the new “Skylake-SP” generation of CPUs can take six channels of memory and up to two DIMMs per channel. The black DIMM slots are additional DDR4 DIMM slots that make the total RAM capacity equal to that of previous-generation configurations with two DIMMs in each of four channels (eight DIMMs in total).
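For a sense of what six channels mean in practice, the sketch below computes capacity and theoretical peak memory bandwidth for our 6x 16GB DDR4-2400 test configuration. It assumes the standard 64-bit (8-byte) DDR4 data bus per channel and ignores real-world efficiency, so treat the figures as upper bounds rather than measurements. The four-channel comparison at the same speed is hypothetical.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (one 64-bit bus per channel)."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


def capacity_gb(dimms: int, gb_per_dimm: int) -> int:
    """Total installed capacity in GB."""
    return dimms * gb_per_dimm


if __name__ == "__main__":
    # Test configuration from this review: one 16GB DDR4-2400 RDIMM per channel.
    print("Capacity:", capacity_gb(6, 16), "GB")
    print("Six channels:", peak_bandwidth_gbs(6, 2400), "GB/s peak")
    # Hypothetical four-channel platform at the same DIMM speed, for comparison.
    print("Four channels:", peak_bandwidth_gbs(4, 2400), "GB/s peak")
```

Populating the two black slots adds capacity but not channels, so the peak bandwidth figure does not change.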

The LGA3647 socket has held up extremely well in our testing. After a dozen installs we now think the new socket is easier, faster, and less risky to service than previous generations.

With six DDR4 DIMMs installed and a 2U heatsink, the front to back airflow should work easily in most cases.

The Supermicro X11SPH-nCTF is a special platform as it offers much more than a bare minimum set of features. It is a storage-focused platform and we therefore see a multitude of features designed to excel in that role. One great example is the onboard Broadcom SAS 3008 controller, which is a SAS3 design from the company’s LSI/Avago acquisitions. This allows the motherboard to have eight SAS3 12Gbps channels and connect to more advanced topologies such as SAS expanders and external disk shelves.

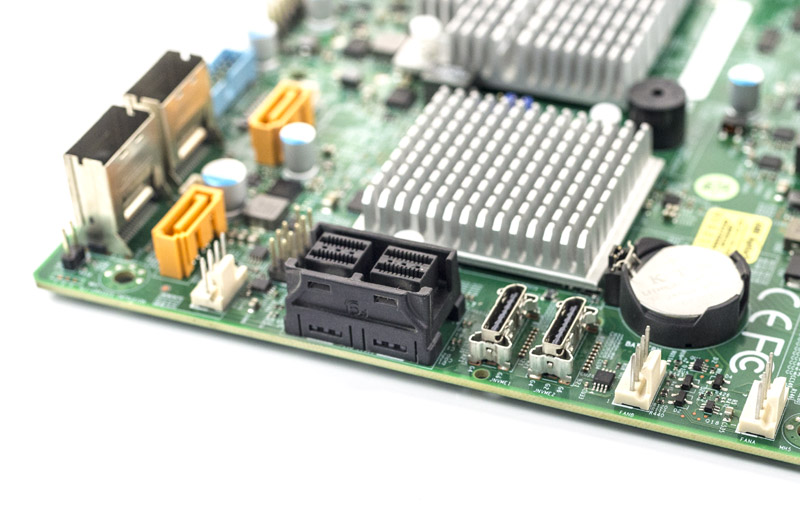

Next to the SFF-8643 SAS3 ports there are two connectors that many of our readers may be less familiar with. Those are OCuLink connectors, which provide connectivity to NVMe SSDs and backplanes. In the near future, we will review a platform that takes advantage of these connectors.

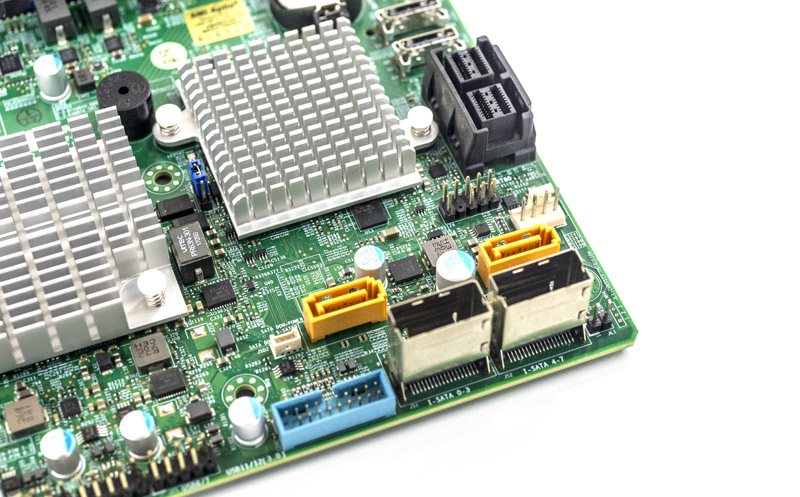

Moving to the PCH-enabled storage, there are two gold/orange 7-pin SATA ports. Each can support a SATADOM module without requiring an external cable. We use SATADOMs heavily in our hosting and lab infrastructure as boot disks, and the SATA-powered modules are significantly easier to work with.

There are two SFF-8087 connectors, each carrying four SATA III 6.0Gbps ports, for eight ports between the two. Add the two standard SATA ports and one sees ten PCH SATA devices in total. For those looking to build a lower-cost storage server, counting the eight SAS 3008 ports (which also accept SATA drives) means there are a total of eighteen SATA 6.0Gbps-capable ports available to the platform, eight of which are SAS3 12Gbps ports capable of using expanders. That is a lot of storage connectivity.
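Since the port math trips people up, here is a small tally of where the eighteen ports come from. The connector counts follow the description above, with one assumption: the eight SAS3 lanes are taken to be split across two SFF-8643 connectors at four lanes each. The `PortGroup` type and its field names are made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    connector: str
    connectors: int
    ports_each: int
    controller: str
    sas3: bool  # True if the ports are SAS3 12Gbps capable

    @property
    def ports(self) -> int:
        return self.connectors * self.ports_each


PORT_GROUPS = [
    # Assumption: two SFF-8643 connectors, four SAS3 lanes each.
    PortGroup("SFF-8643", 2, 4, "Broadcom SAS 3008", True),
    PortGroup("SFF-8087", 2, 4, "Intel C622 PCH", False),
    PortGroup("7-pin SATA", 2, 1, "Intel C622 PCH", False),
]


def count_ports(groups, sas3=None) -> int:
    """Count ports, optionally filtered by SAS3 capability."""
    return sum(g.ports for g in groups if sas3 is None or g.sas3 == sas3)


if __name__ == "__main__":
    for g in PORT_GROUPS:
        print(f"{g.connectors}x {g.connector} via {g.controller}: {g.ports} ports")
    print("PCH SATA ports:", count_ports(PORT_GROUPS, sas3=False))
    print("SAS3 ports (also accept SATA drives):", count_ports(PORT_GROUPS, sas3=True))
    print("Total SATA-capable ports:", count_ports(PORT_GROUPS))
```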

Next to the SFF-8087 connectors is a front panel USB 3.0 header. This is an industry standard connector. Beyond this, Supermicro also adds a USB 3.0 Type A header for an internal USB drive. While we prefer the SATA DOM boot drive, some organizations like using USB drives with an embedded OS.

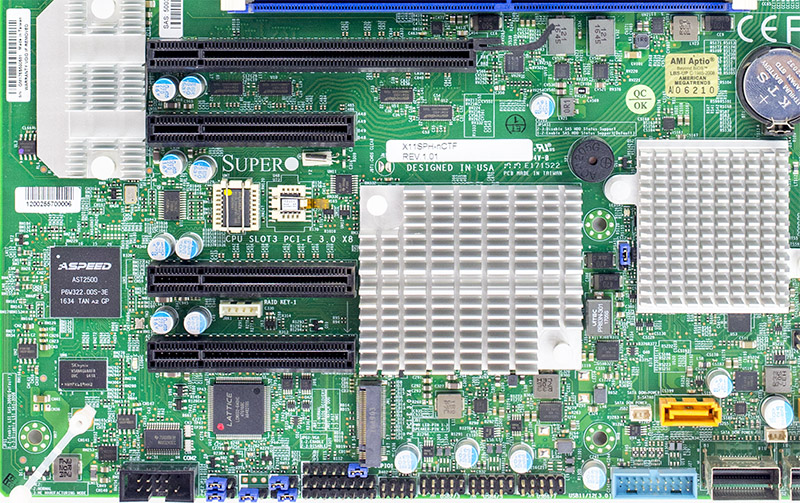

In terms of PCIe expansion, the Supermicro X11SPH-nCTF has a surprising amount. There are four PCIe slots along with one M.2 PCIe slot for an NVMe drive. A pair of slots, one PCIe 3.0 x16 physical and one PCIe 3.0 x8 physical, can be used in either x16/x0 or x8/x8 mode. Outside of GPUs and 100GbE networking cards, PCIe x16 cards are less common, so we like the option to use both slots as x8. There is also a PCIe 3.0 x8 slot in an x8 connector. The third x8 connector is a PCIe 3.0 x4 electrical slot that connects via the PCH.

We appreciate that these new platforms support M.2 NVMe devices out of the box. In previous generations of Supermicro motherboards, M.2 support was achieved via an add-in card on many platforms. Now we have that capability built in, further enhancing storage capabilities.

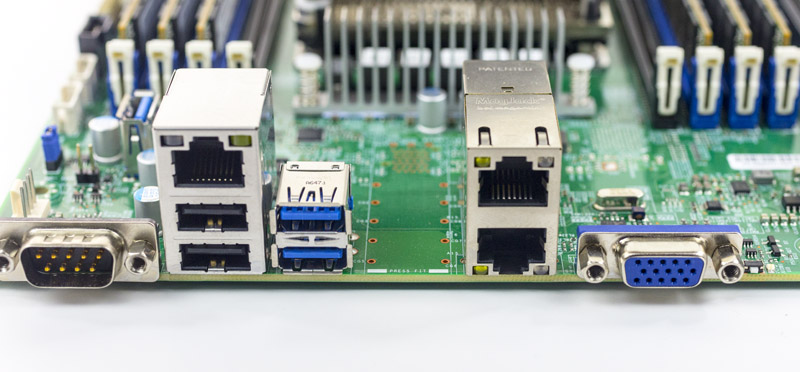

One can also see a new ASPEED AST2500 BMC. This is the latest generation BMC that powers the out of band management interface. The out of band management feature has its own dedicated NIC that sits between the serial port/ USB 3.0 blocks and atop the USB 2.0 block.

The two stacked RJ-45 ports are 10Gbase-T ports provided by the Intel C622 PCH, and they use the Intel i40e driver.
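If you want to confirm which driver a port is bound to on a Linux install, one approach is to read the `driver` symlink that sysfs exposes for each network interface. The sketch below assumes a standard Linux sysfs layout; the helper name `nic_driver` is made up for this example, and interface names will differ between systems.

```python
import os
from typing import Optional


def nic_driver(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[str]:
    """Return the kernel driver bound to iface, or None if it cannot be read."""
    link = os.path.join(sysfs_root, iface, "device", "driver")
    try:
        # The symlink target ends with the driver name, e.g. ".../drivers/i40e".
        return os.path.basename(os.readlink(link))
    except OSError:
        # Virtual interfaces (lo, bridges) have no device/driver entry.
        return None


if __name__ == "__main__":
    root = "/sys/class/net"
    if os.path.isdir(root):
        for name in sorted(os.listdir(root)):
            print(f"{name}: {nic_driver(name) or 'no driver entry'}")
```

On this board, the two onboard 10Gbase-T interfaces should report `i40e`.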

Rounding out the I/O is a legacy VGA port to connect KVM carts in the data center.

Supermicro Management

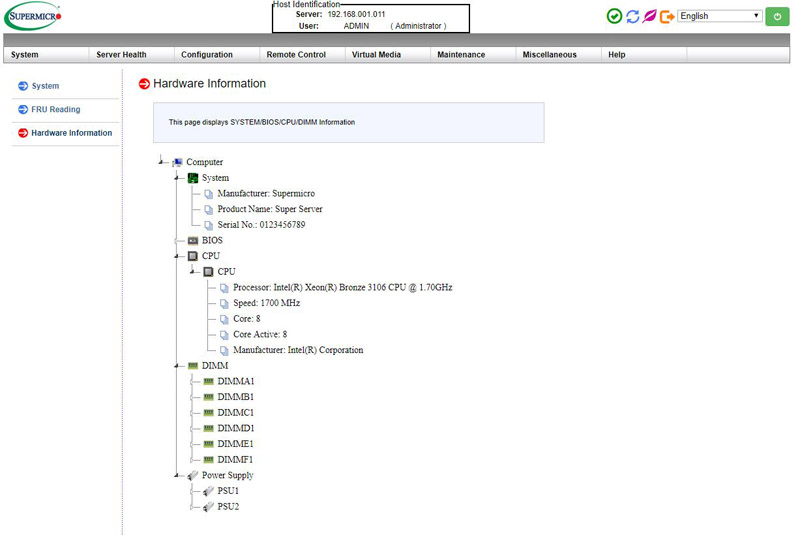

These days, out of band management is a standard feature on servers. Supermicro offers an industry standard solution for traditional management, including a WebGUI. The company is also supporting the Redfish management standard.
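As a rough illustration of what Redfish support looks like from a script, here is a minimal sketch that reads a Redfish resource over HTTPS and summarizes a system entry. The `/redfish/v1/Systems` collection, `Members`, and the `Manufacturer`, `Model`, `PowerState`, and `Status` properties come from the Redfish standard. Which of them a given BMC firmware populates is an assumption, and the helper names and sample payloads are made up for this example.

```python
import base64
import json
import ssl
import urllib.request


def fetch_json(base_url, path, user, password, verify_tls=True):
    """GET a Redfish resource with HTTP basic auth and decode the JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        base_url.rstrip("/") + path,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # BMCs often ship with self-signed certificates; only skip
        # verification on a trusted management network.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


def member_paths(collection):
    """Extract resource paths from a Redfish collection payload."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


def summarize_system(system):
    """Pick a few standard ComputerSystem properties, tolerating gaps."""
    return {
        "manufacturer": system.get("Manufacturer"),
        "model": system.get("Model"),
        "power_state": system.get("PowerState"),
        "health": system.get("Status", {}).get("Health"),
    }


if __name__ == "__main__":
    # Offline demo with made-up payloads shaped like Redfish responses.
    sample_collection = {"Members": [{"@odata.id": "/redfish/v1/Systems/1"}]}
    sample_system = {"Model": "X11SPH-nCTF", "PowerState": "On",
                     "Status": {"Health": "OK"}}
    print(member_paths(sample_collection))
    print(summarize_system(sample_system))
```

Against a live BMC, one would call `fetch_json` with the BMC address and credentials for `/redfish/v1/Systems`, then fetch each path returned by `member_paths`.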

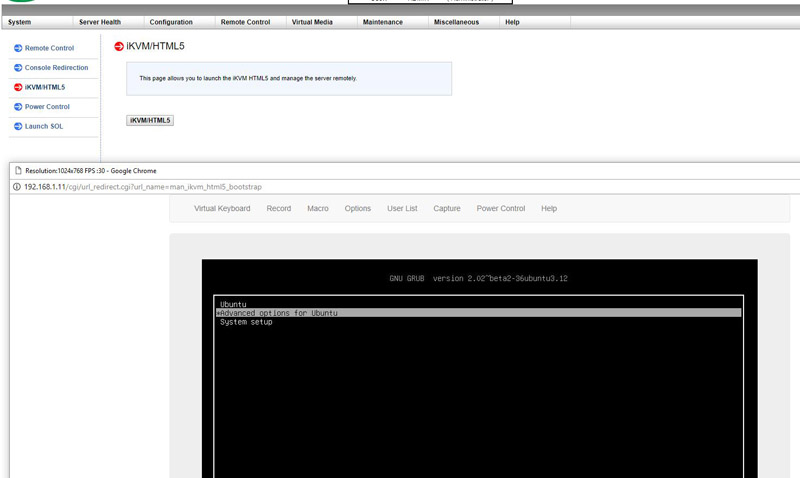

The latest generation of Supermicro IPMI includes an HTML5 iKVM. One no longer needs to use a Java console to get remote KVM access to a server.

Currently, Supermicro allows users to utilize Serial-over-LAN, Java, or HTML5 consoles from before a system is turned on all the way into the OS. That is an extremely popular feature, and other vendors such as HPE, Dell EMC, and Lenovo charge for an additional license upgrade to get this capability (among others at their higher license levels). One can also perform BIOS updates using the Web GUI, but that feature does require a relatively low-cost license (around $20 street price). That is a feature we wish Supermicro would include with their systems across product lines.

At STH, we do all of our testing in remote data centers. Having the ability to remote console into the machines means we do not need to make trips to the data center to service the lab even if BIOS changes or manual OS installs are required.

Power Consumption

In terms of power consumption, we measured using our APC PDUs on 208V data center power at 17.1 °C and 70% relative humidity. We are using the Intel Xeon Silver 4114 for these power tests:

- Power off BMC only: 6.1W

- OS Idle: 63.2W

- GROMACS AVX-512 Load: 110.3W

These numbers are great. The Intel Xeon Silver 4114 is an 85W TDP CPU (note that TDP does not equal power consumption), and AVX-512 will stress the CPU considerably. The system idle figure is about 9W lower than similar configurations with SAS 3008 controllers and two active 10Gbase-T links (via an add-in X550). When comparing this to previous-generation systems, one must remember that this system includes two SSDs as well as six DDR4-2400 DIMMs.
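For readers budgeting power, the sketch below turns the three measurements above into a load delta and annual energy figures. It assumes the system sits at a single power level around the clock for a 365-day year, which real workloads will not do, so treat the results as bounds rather than predictions.

```python
HOURS_PER_YEAR = 24 * 365

# Measured wall power from this review (Xeon Silver 4114, 208V).
MEASURED_WATTS = {
    "bmc_only": 6.1,
    "os_idle": 63.2,
    "gromacs_avx512": 110.3,
}


def annual_kwh(watts: float) -> float:
    """Energy used in a year if the system held this power level 24x7."""
    return watts * HOURS_PER_YEAR / 1000


def load_delta_watts(measurements: dict) -> float:
    """Extra power drawn under AVX-512 load compared with OS idle."""
    return round(measurements["gromacs_avx512"] - measurements["os_idle"], 1)


if __name__ == "__main__":
    print("Load minus idle:", load_delta_watts(MEASURED_WATTS), "W")
    for state, watts in MEASURED_WATTS.items():
        print(f"{state}: {annual_kwh(watts):.1f} kWh/year at a constant {watts} W")
```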

Conclusion

We first saw the Supermicro X11SPH-nCTF because one of our readers shared their excitement about the platform. Upon reviewing the unit, and running it through almost two dozen CPU configurations, we can say it has held up extremely well. Many of our readers are using SFP+ DACs or optics for their 10GbE networking. There is a closely related variant of this platform called the Supermicro X11SPH-nCTPF (note the P), which trades the RJ-45 ports for SFP+ cages. We also asked about OCuLink backplanes and will be publishing a review with this platform in a system that uses the two OCuLink ports to connect U.2 NVMe drives.

Current street prices are hovering just over $500 USD. Combine this with an Intel Xeon Bronze 3104 and for $750 you have a 10Gbase-T enabled, SAS3-capable server platform. When you look at the cost of adding a Broadcom SAS 3008 controller and 10Gbase-T networking to a platform, it is considerably less expensive to get those features built in.

If you are looking for a single socket 10Gbase-T storage server, this is going to be a solid platform.

I’d rather have the SFP+ but I’ve been obsessing over this motherboard since I saw it on their site.

Nice board, could you also have a look at the GigaByte MZ31-AR0 http://b2b.gigabyte.com/Server-Motherboard/MZ31-AR0-rev-10#sp It’s E-ATX, so a bit bigger but it has 2 10 Gb/s SFP+ (Broadcom® BCM 57810S) and with a cheap EPYC 7251 or 7351p it looks as a promising board for storage servers or basically for almost everything (with an EPYC7551P in it).

@Misha – we are going to look at the MZ31-AR0 when it is available/ released.

@ Patrick – Thanks for that, really looking forward to it.

I want to use it as a DIY workstation for video editing and rendering and this board comes the closest to my needs (there must be others who make similar boards but I haven’t found them).

Would love to see a workstation board with 112 PCIe lanes 5×16 + 4×16(8) or 6×16 + 2×16(8).

Surprised that this platform actually is a viable storage platform, looks like a good option.

However, i am in need of updating my NAS, waiting for the storage oriented Xeon-D products, cant believe how long we have had to wait for something that could replace something like the c2550d4i but on the Xeon-D platform. Not to hijack this article but surely the Xeon-D platforms should be rolling in right about now? They said summer 2017 and have not heard about any delays but the products are still not here. Have you heard anything new? Do you have them in your lab? When will the NDA be lifted? ;)

Hi Jesper – no comment on the Xeon D. I do agree that this board plus a Xeon Bronze or Silver 4108 trades off higher power consumption and a larger footprint for significantly more expandability.

On new Xeon D, Intel announced QAT and 4x 10Gb Xeon D parts earlier this year and the products are on ARK. I see those more as feature upgrades to the platform targeting specific workloads. Intel has not announced a successor.

Thanks for the answer Patrick. Seems like I am misremembering, I thought they were going to update the Xeon-D platform this year with Skylake or even Kaby Lake cores, but looking at ARK, all 2017 releases are still Broadwell, kind of disappointing. I will see how viable the C3000 platform will be before deciding though.

This article makes the choice even harder, 750USD is not a big investment, i was ready to put down that on a Xeon-D motherboard anyway and after i would be done with it as a storage server i could get a used silver or even gold CPU 2-3 years down the line for not that much money and repurpose the board for compute.

The 10GbE NIC i am using in my storage server now cost me over 500USD back then and today you just add 250 more and you get a feature packed motherboard with an entry level Bronze CPU, thats a bargain in comparison. I still don’t like the Bronze/Silver/Gold/Platinum differentiation tactics, “artificial” PCI limitations, memory limitations and other feature pairing to the different Xeon metals, especially when Epyc has no or very small differentiation between SKU:s, but still, this article made a good case and its very tempting to pull the trigger ;)

We also just published Intel Xeon Bronze 3106 benchmarks and CPUs like the 3104 and 4108 were in most of the charts.

PCIe lanes are not differentiated. Memory is differentiated only by DDR4 speed (2133, 2400 or 2666) and capacity (M SKUs for up to 1.5TB/ socket at a hefty premium).

Legit question: Who actually uses 10Gbe? Every datacenter I’ve ever been in has used 1Gb for ILO/IPMI/Management, and 10Gb SFP+, if not bonded SFP+ for any sort of data (in Storage-centric applications). I have NEVER seen a 10GbE connection in any datacenter I’ve ever been in. Is this geared more towards home users/labs that have Cat6 in the walls?

Evan – I have heard hosting companies that like 10Gbase-T, for example, so they can deploy one 1GbE and one 10GbE connection with a single NIC. Also, they had an easy upgrade path from 1GbE to 10GbE.

@ Hans Vokler

Glad to hear that you like this product. As for SFP+, Supermicro has another SKU called X11SPH-nCTPF, which has the same features and also provides SFP+ for 10G.

Wonder how native AVX-512 would affect ZFS Z2/Z3 parity calculations vs power consumption.

I’m confused about one thing. You mentioned “there are a total of eighteen SATA 6.0gbps ports available to the platform”, but I only see two SFF-8087 connectors and 2 SATA connectors. If each SFF-8087 can connect to 4 SATA, how does that add up to 18?

Hi JS – if you are using SATA drives you can use the SFF-8643 ports as well for 8 more.

Ah, ok, cool. Yes, I would be using SATA drives. One other question: do you know if it’s possible to replace the fan on the heatsink with something quieter (Noctua or whatever)?

Sorry, I just realized the board you reviewed had a passive heat sink. But I’m curious if you know anything about the SNK-P0068APS4 active heat sink (or any other compatible heat sinks) and whether they could be adapted to use a quieter fan (since SuperMicro fans are typically not very quiet)?

JS people have done this in our forums.

Thanks, Patrick! I had searched for SNK-P0068APS4 and didn’t get any hits, but searching for LGA3647 gives a bunch of matches in the forums.

Does this motherboard support xeon phi?

Patrick, for this motherboard, if I only buy two DIMMs to start with, then (ignoring the performance disadvantages) can I use those two DIMMs to test all 6 “blue” DIMM slots? The manual for the motherboard has a very specific order for installing DIMMs, but I’d like if possible to test all “blue” DIMM slots now (I recognize I probably couldn’t test the “black” slots). Just curious if you ever tried installing fewer than all blue slots but not just in the first pair of slots specified by the manual? I’d rather avoid buying 6 smaller DIMMs just to test the motherboard, but it would be a bummer to not test all slots and find out a year or two from now that it has a defect when I want to add more DIMMs.

Usually, we tell folks to follow the recommended installation order. Not doing so sometimes creates issues on platforms across vendors.

OK, thanks. So there is no way to pre-test all DIMM slots without having as many DIMMs as slots?

I cannot give you a 100% that it will work. Running with 2 DIMMs should work following the motherboard/ servers installation guide.

Thanks, Patrick. I guess I’ll either have to buy six/eight 8GB sticks, or get two 16GB like I planned and take my chances on the other slots. Probably the latter given how expensive DIMMs are currently.