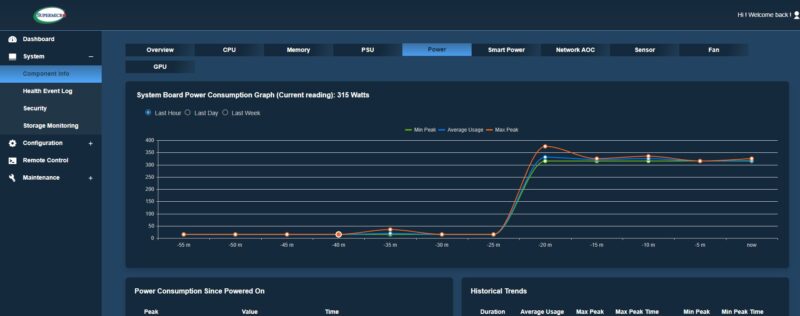

Power Consumption

In our Intel Xeon 6700E review we went into power figures using this system.

Starting with the base BMC power consumption on this Supermicro platform, with the server off but the NICs connected, keyboard plugged in, and so forth, we are in the 6-12W range.

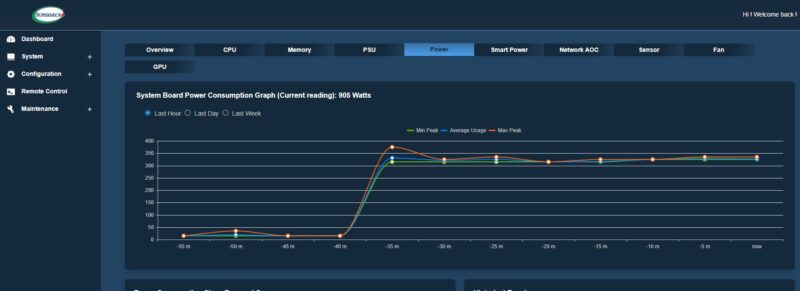

Turning the system on, at idle, we are at around 315W. That is higher than some would expect, but it is almost exactly what we would have expected to see from an all E-core CPU. These cores are not throttling down to oblivion. Instead, the min to max power states on E-core implementations we have seen tend to be relatively close. When we fire the system up, we hit a peak of 905W via the BMC's power supply readings.

A couple of quick notes. First, we are losing a small amount here due to using redundant power supplies, so single power supply use would be under 900W. Second, this is not the typical lean configuration that we use for power consumption testing. Instead, we have 32x 64GB DDR5 DIMMs for a total of 2TB of memory. We had two 100GbE ports up, and even a quad-port Intel X710-T4 10Gbase-T NIC linked because we needed an RJ45 connection, given we only had time to test this in the studio, not the data center lab.

Shedding half of the DIMMs (~5W each) and peripherals, we got this under 800W and there was probably room to go down from there. That is quite good with two 330W TDP CPUs.

With the Xeon 6766E, we managed to get the power consumption in the full 2TB configuration to around 725W. Dropping 80W TDP per CPU gives us 160W total towards that drop, and cooling accounts for a few watts as well. That is absolutely a crazy figure. 288 cores, 2TB of DDR5 memory, 100GbE and 10Gbase-T networking, and only 725W? Going to 1DPC and only 1TB of memory, we were sub 650W maximum, or around 2.25W / core, with 100GbE networking lit up.
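For those who want to check the math, here is a minimal sketch of the arithmetic behind these figures. It only uses the numbers quoted above. The constant and function names are ours for illustration, and "sub 650W" is treated as a 650W upper bound, so the per-core result is a ceiling rather than a measurement.

```python
# Peak wall power figures quoted in the text (watts, from BMC PSU readings).
PEAK_330W_CPUS_2TB = 905   # two 330W TDP CPUs, 32 DIMMs
PEAK_6766E_2TB = 725       # two Xeon 6766E CPUs, 32 DIMMs
PEAK_6766E_1TB = 650       # "sub 650W" at 1DPC, used here as an upper bound

CORES = 288                # 2 sockets x 144 cores
SOCKETS = 2
TDP_DROP_PER_CPU = 80      # watts, as stated in the text
DIMM_WATTS = 5             # approximate per-DIMM figure from the text


def watts_per_core(total_watts, cores=CORES):
    """Whole-system watts divided by physical core count."""
    return total_watts / cores


# How much of the 905W -> 725W drop does the lower TDP explain?
measured_drop = PEAK_330W_CPUS_2TB - PEAK_6766E_2TB
tdp_drop = SOCKETS * TDP_DROP_PER_CPU
remainder = measured_drop - tdp_drop  # cooling and everything else

# Rough saving from removing half of the 32 DIMMs.
dimm_savings = 16 * DIMM_WATTS

print(f"Measured drop: {measured_drop}W, TDP accounts for {tdp_drop}W, "
      f"remainder {remainder}W")
print(f"Removing 16 DIMMs saves roughly {dimm_savings}W")
for label, watts in [("330W CPUs, 2TB", PEAK_330W_CPUS_2TB),
                     ("6766E, 2TB", PEAK_6766E_2TB),
                     ("6766E, 1TB", PEAK_6766E_1TB)]:
    print(f"{label}: {watts_per_core(watts):.2f} W/core")
```

These are whole-system watts at the wall divided by core count, so memory, NICs, fans, and power supply losses are all included in the per-core number.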

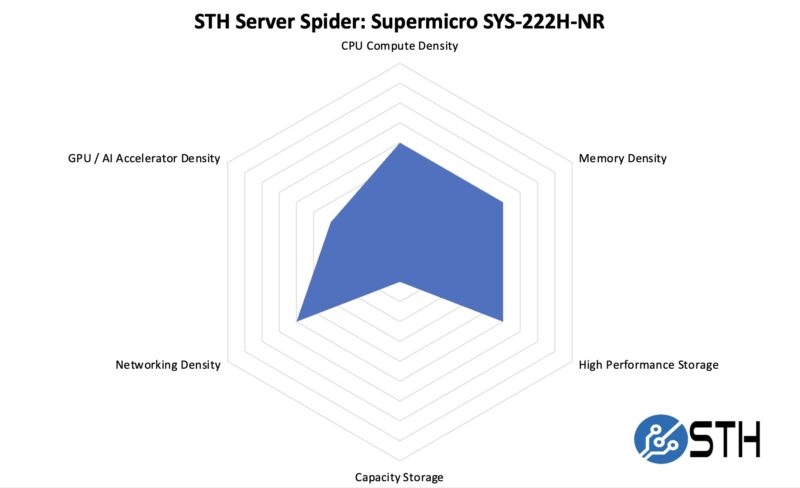

STH Server Spider: Supermicro SYS-222H-TN

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Our configuration was tilted more in the direction of using add-in cards. At the same time, with 288 physical cores, we get a lot of cores at very reasonable power consumption. In our STH Server Spider we do not look at things like watts per core, but this system performs very well there, while also having quite a lot of expandability. We only took a look at one configuration, but, just as an example, this is a platform that can handle three times as many SSDs as what we looked at. With the 122.88TB SSDs coming in 2025, this 2U server could have 288 cores and almost 3PB of storage, which is great.
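As a rough sanity check on the "almost 3PB" figure, here is a short sketch of the capacity math. The 122.88TB drive size comes from the text. The drive counts are an assumption on our part: we assume 8 SSDs in the tested configuration, which makes "three times as many" a 24-bay build. Treat both counts as hypothetical if your chassis differs.

```python
DRIVE_TB = 122.88                # per-SSD capacity quoted in the text
TESTED_DRIVES = 8                # assumption: SSD count in the tested config
MAX_DRIVES = 3 * TESTED_DRIVES   # "three times as many SSDs"

total_tb = MAX_DRIVES * DRIVE_TB
total_pb = total_tb / 1000       # decimal petabytes, as drive vendors count

print(f"{MAX_DRIVES} x {DRIVE_TB}TB = {total_tb:.2f}TB (~{total_pb:.2f}PB)")
```

Under those assumptions the total lands just under 3PB of raw capacity, before any RAID, erasure coding, or filesystem overhead.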

Final Words

This was a fun system to go into since we could show it both at the Xeon 6 launch and with a nice little upgrade in the DapuStor H5100. Having the higher capacity was great, but having a high-capacity SSD that could improve the application performance of the 288-core system was neat as well. The Supermicro Hyper platforms, like this one, generally have a lot more configuration options, but Supermicro also focuses on making the designs very serviceable. That is not a small accomplishment.

For those deploying Sierra Forest, Supermicro has a very versatile 2U dual socket server in the SYS-222H-TN. The best part may be the power efficiency. That Intel Xeon 6766E configuration allows you to fit a lot of physical cores and sticks of DDR5 into a low power envelope.

Honestly dreaming of a few single-CPU variants of these as VM clusters. 144 cores + 1TB of RAM x3 clustered together would take care of most of the virtual hosting needs I could think of for a lot of small clients.

Adding in a few hundred TB of fast PCIe5.0 SSD storage on each node federated as part of the cluster, and you have a very robust solution that doesn’t have a lot of bottlenecks.

Hopefully we get to review a 1P Sierra Forest as I agree that is a killer platform.

@James

I’ve been deploying setups like that with 3x 1U servers, a few SSDs in each for Ceph on dedicated 2x25G NICs (you can connect them to each other without a switch). With Proxmox as the platform.