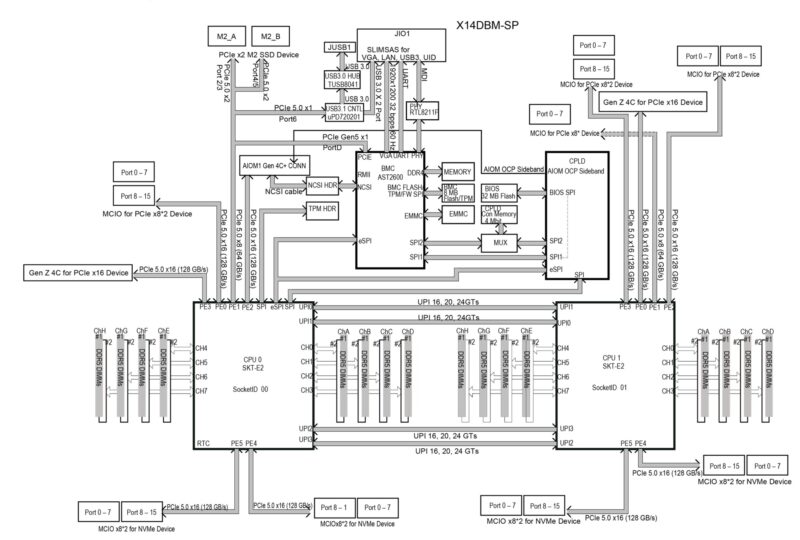

Supermicro SYS-222H-TN Block Diagram

Here is the block diagram for the X14DBM-SP motherboard.

Something very notable here is that there is no longer a PCH in the design. As a result, all of the I/O goes into the CPUs, and the BMC and CPLD handle some of the low-speed I/O. That is a big change with Xeon 6, and by not requiring a PCH, it brings the platform more in line with offerings from AMD and Ampere.

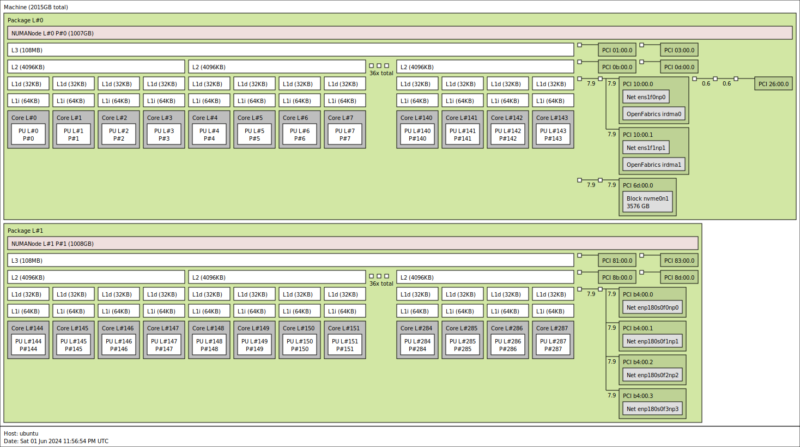

Here is a quick look at the system topology.

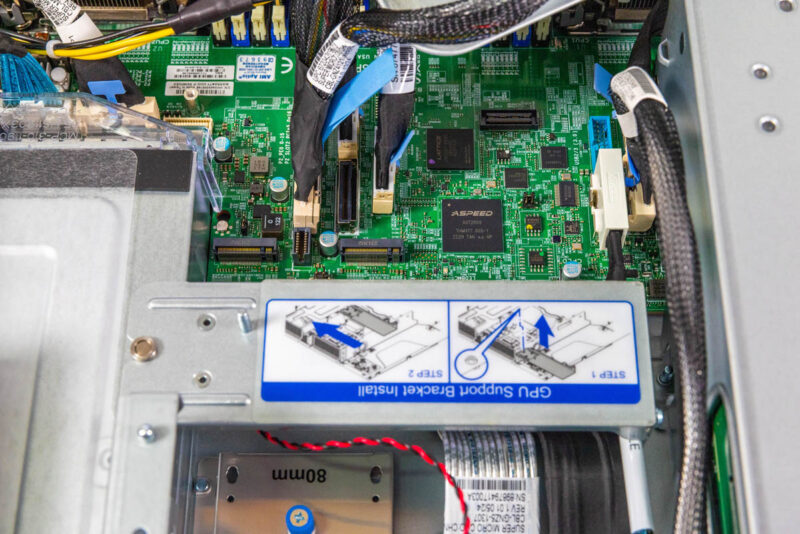

Supermicro SYS-222H-TN Management

The server uses an ASPEED AST2600 BMC for its out-of-band IPMI management functions.

In the interest of brevity, we will just say that the Supermicro IPMI/Redfish web management interface is what we would expect from a Supermicro server at this point.

Of course, there are features like the HTML5 iKVM, as we would expect, along with a randomized password. You can learn more about why this is required, and why the old ADMIN/ADMIN credentials will not work, in Why Your Favorite Default Passwords Are Changing.

Next, let us talk about performance.

Supermicro SYS-222H-TN Performance

Since we used this server for our initial Intel Xeon 6 6700E Sierra Forest Shatters Xeon Expectations piece, we are going to reuse the performance summary that we already posted. The big difference is that we wanted to re-test with the DapuStor SSD to see if it makes an impact, since storage was a factor we found when doing our AMD EPYC 9005 Turin piece. It turns out that huge multi-core CPUs can actually run into storage bottlenecks where we had never seen them before.

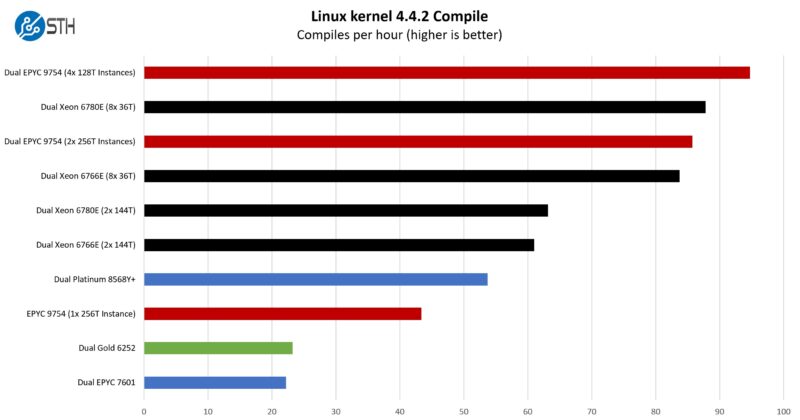

Python Linux 4.4.2 Kernel Compile Benchmark

This has been one of the most requested benchmarks for STH over the past few years. The task is simple: we take a standard configuration file and the Linux 4.4.2 kernel from kernel.org, then build the standard auto-generated configuration utilizing every thread in the system. We express results in terms of compiles per hour to make them easier to read.
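To make the "compiles per hour" metric concrete, here is a minimal sketch of the arithmetic, assuming only wall-clock times per build are known. The helper name `compiles_per_hour` is hypothetical; STH's harness is not published, so this shows how such a figure can be derived, not how STH computes it.

```python
"""Convert kernel-compile wall times into a compiles-per-hour figure.

Hypothetical helper: it only illustrates the metric, not how the timings
were collected in STH's own test harness.
"""

SECONDS_PER_HOUR = 3600.0


def compiles_per_hour(instance_times_s: list[float]) -> float:
    """Aggregate throughput when one or more build instances run concurrently.

    Each instance finishes one build in its own wall time t, so it contributes
    3600 / t builds per hour; concurrent instances add up (steady-state view).
    """
    if not instance_times_s:
        raise ValueError("need at least one timing")
    if any(t <= 0 for t in instance_times_s):
        raise ValueError("wall times must be positive")
    return sum(SECONDS_PER_HOUR / t for t in instance_times_s)


if __name__ == "__main__":
    # Illustrative timings only, not measured results.
    print(compiles_per_hour([60.0]))       # one instance, 60 s per build -> 60.0
    print(compiles_per_hour([120.0] * 8))  # eight instances, 120 s each -> 240.0
```

Summing per-instance rates is what lets multi-instance runs be compared directly with a single full-system build on the same scale.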

This is a really cool result. It is one of the first tests that we started breaking up into multiple instances. The reason is that there are a few single-threaded points in the build that stall large processors, dropping utilization below 1%. Moving to 4x 36-thread instances per CPU, or 8x 36-thread instances per system, we saw a massive jump in performance.
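The instance layout described above (two 144-core CPUs, four 36-thread instances each) can be sketched as a list of CPU ranges. This is a hedged illustration: it assumes logical CPUs are numbered contiguously per socket (socket 0 = CPUs 0-143, socket 1 = CPUs 144-287), which varies by platform, and we do not know whether or how STH pinned its instances. The function `instance_cpu_lists` is hypothetical.

```python
"""Sketch: split a 2-socket, 144-core-per-socket system into 36-core instances.

Assumption: contiguous CPU numbering per socket; verify with `lscpu` on a
real system. Sierra Forest E-cores have no SMT, so one core = one thread.
"""


def instance_cpu_lists(sockets: int, cores_per_socket: int,
                       instances_per_socket: int) -> list[str]:
    """Return one CPU range string per instance, e.g. "0-35"."""
    if cores_per_socket % instances_per_socket:
        raise ValueError("cores must divide evenly between instances")
    width = cores_per_socket // instances_per_socket
    lists = []
    for s in range(sockets):
        base = s * cores_per_socket  # first CPU number on this socket
        for i in range(instances_per_socket):
            first = base + i * width
            # Range format of the kind accepted by `taskset -c`.
            lists.append(f"{first}-{first + width - 1}")
    return lists


if __name__ == "__main__":
    for cpus in instance_cpu_lists(2, 144, 4):
        print(cpus)
```

Keeping each instance within one socket avoids splitting a build across the inter-socket link, which is one reason a per-CPU split is a natural starting point.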

When we say that benchmarking a single application across an entire cloud-native processor feels a bit wrong, this is a great example of why. If you are lift-and-shifting workloads from lower core count servers, then this is closer to how they would run on cloud-native processors. It is strange, but they perform better when work is distributed, since single-threaded moments no longer drop utilization below 1%.
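A toy Amdahl's-law model shows why several smaller instances can beat one large one. This is a sketch under stated assumptions: the 5% serial fraction is a made-up value, the parallel part is assumed to scale perfectly, and memory, cache, and I/O contention between instances are ignored. It shows the shape of the effect, not STH's measured results.

```python
"""Toy Amdahl's-law model: one big instance vs. several smaller ones."""


def run_time(threads: int, serial_fraction: float) -> float:
    """Normalized wall time of one job on `threads` threads (1.0 = one thread)."""
    return serial_fraction + (1.0 - serial_fraction) / threads


def throughput(total_threads: int, instances: int, serial_fraction: float) -> float:
    """Jobs completed per unit time with threads split evenly across instances."""
    per_instance = total_threads // instances
    return instances / run_time(per_instance, serial_fraction)


if __name__ == "__main__":
    s = 0.05  # hypothetical serial portion (e.g. single-threaded link steps)
    one_big = throughput(288, 1, s)
    eight_small = throughput(288, 8, s)
    print(f"1 x 288 threads: {one_big:.1f}")
    print(f"8 x 36 threads:  {eight_small:.1f}")
```

With these assumed numbers, eight 36-thread instances deliver roughly 5.6x the throughput of one 288-thread instance, because each serial stretch now idles only 36 threads instead of the whole system.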

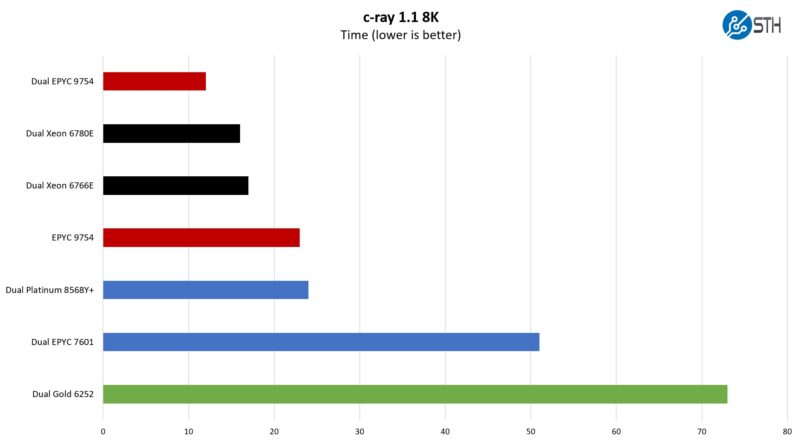

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular for showing differences in processors under multi-threaded workloads. Here are the 8K results:

This is another test that we are going to have to split into multiple instances. Scaling with these larger CPUs is starting to get wonky.

SPEC CPU2017 Results

SPEC CPU2017 is perhaps the most widely known and used benchmark in server RFPs. Since Supermicro actually submitted a result for this server, we are just going to point to its 1360 score for the dual Xeon 6780E chips in this server.

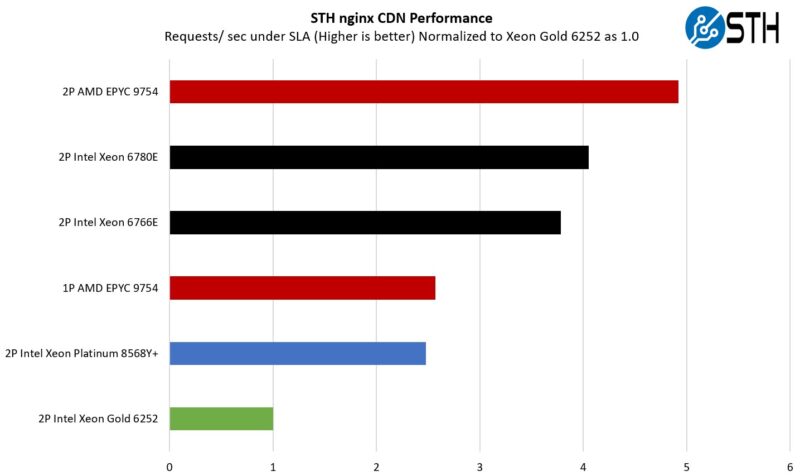

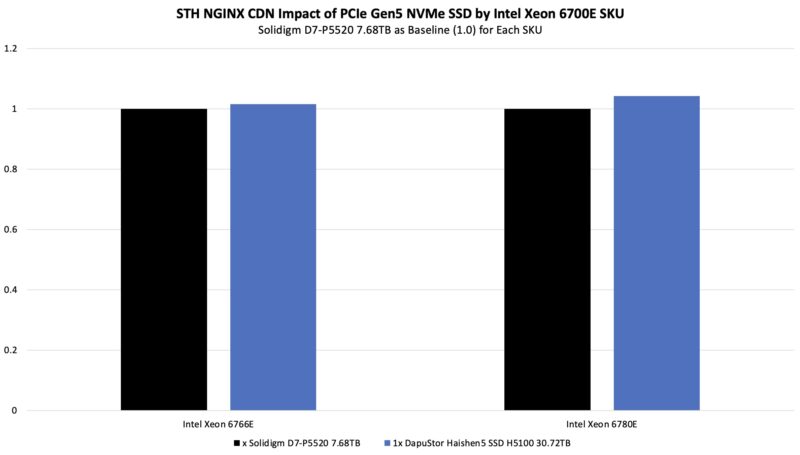

STH nginx CDN Performance

On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with DRAM caching disabled, to show what performance looks like when fetching data from disks. This requires low-latency nginx operation plus an additional step of low-latency I/O access, which makes it interesting at a server level. Here is a quick look at the distribution:

Here, we are seeing a familiar pattern, though there are a few items to note. The Intel CPUs are doing really well. We are not, however, using Intel QAT offload for OpenSSL and nginx. If you do not want to use acceleration, then this is a good result. If you are willing to use accelerators, then this is not ideal, since there are silicon accelerators going unused. It is like not using a GPU or NPU for AI acceleration.

See Intel Xeon D-2700 Onboard QuickAssist QAT Acceleration Deep-Dive if you want to see how much performance QAT can add (albeit it would only affect a portion of this test).

Even without QuickAssist and looking at cores only, Intel is doing really well. Imagine a 288-core part.

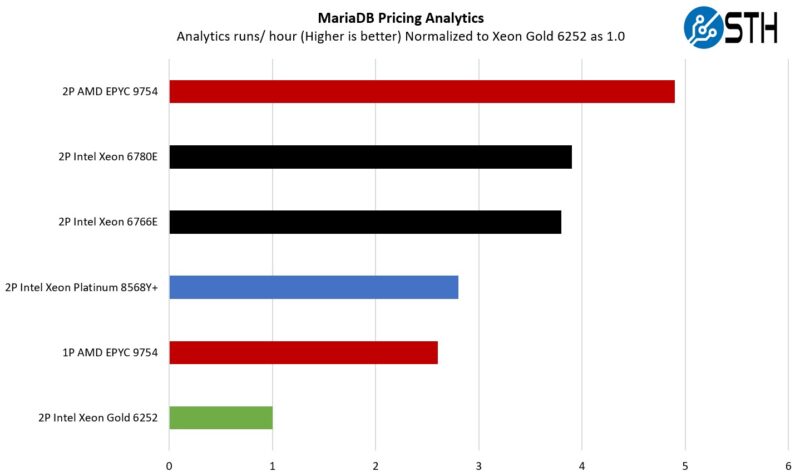

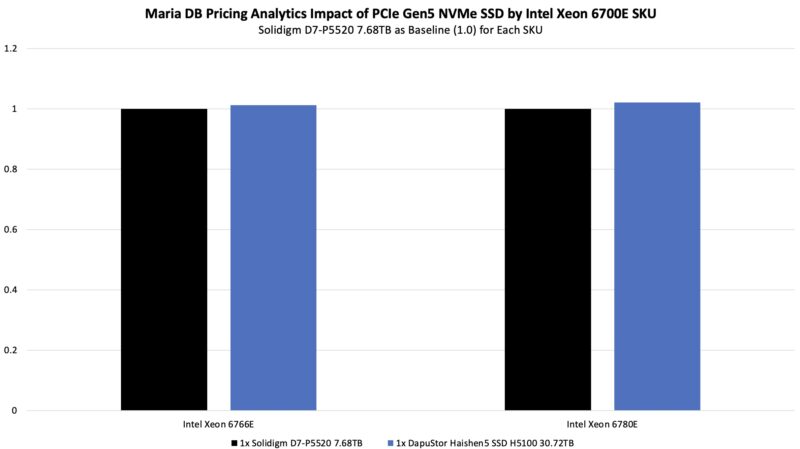

MariaDB Pricing Analytics

This is a very interesting one for me personally. The origin of this test is a workload that runs deal management pricing analytics on a set of data that has been anonymized from a major data center OEM. The application looks for pricing trends across product lines, regions, and channels to generate good deal/bad deal guidance based on market trends, which informs real-time BOM configurations. If this seems very specific, the big difference between this and something deployed at a major vendor is the data we are using. This is the kind of application that has moved to AI inference methodologies, but it is a great real-world example of something a business may run in the cloud.

Here, SMT helps, but the gain per thread is a lower percentage. AMD still has a considerable lead with Bergamo, winning with 256 Zen 4c cores versus Intel's 288, but the E-cores are holding their own.

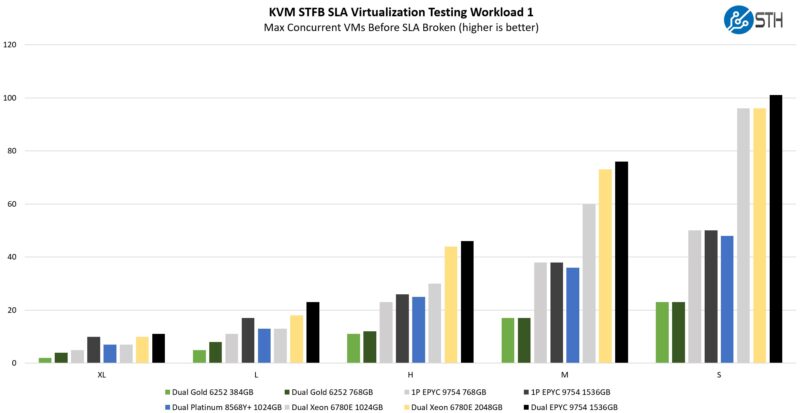

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application being tested is closed source. This is a KVM virtualization-based workload where our client tests how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. This is very akin to VMware VMmark in terms of what it is doing, just using KVM to be more general.
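One plausible way to find "how many VMs can be online while still meeting the SLA" is a binary search over VM counts. This is a hedged sketch, not the customer's method: their harness is closed source, and `meets_sla` is a hypothetical stand-in for "boot n worker VMs, run the work, and check every VM finished within the SLA." The only assumption is monotonicity: if n VMs pass, any smaller count passes too.

```python
"""Sketch of a max-VMs-under-SLA search using a hypothetical predicate."""
from typing import Callable


def max_vms_under_sla(meets_sla: Callable[[int], bool], upper_bound: int) -> int:
    """Largest n in [0, upper_bound] with meets_sla(n) True (0 if none pass)."""
    lo, hi = 0, upper_bound  # invariant: lo passes (0 VMs trivially does)
    while lo < hi:
        mid = (lo + hi + 1) // 2  # round up so the loop always makes progress
        if meets_sla(mid):
            lo = mid  # mid VMs met the SLA; search higher
        else:
            hi = mid - 1  # mid VMs missed the SLA; search lower
    return lo


if __name__ == "__main__":
    # Stand-in predicate for illustration only.
    print(max_vms_under_sla(lambda n: n <= 137, 512))
```

Because each probe is a full test run, a logarithmic number of runs matters when a single run takes as long as this workload does.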

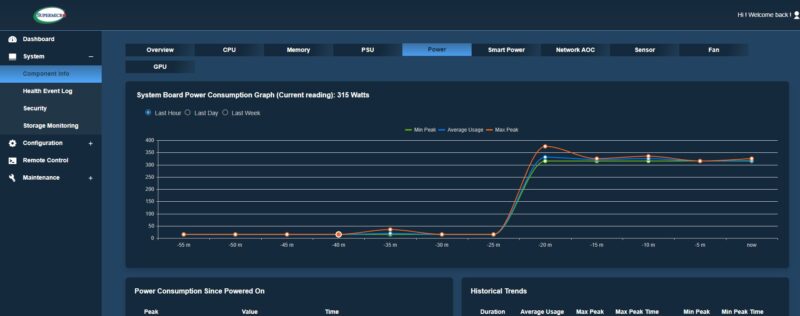

These are really great figures for Sierra Forest. Bergamo is still winning, and in some of the larger VM sizes, simply having 512 threads instead of 288 in a dual-socket server helps a lot. Memory sizing also has some impact on the larger tests. Still, Sierra Forest is doing really well, and it is doing so at a lower power of 330W. We are using the Intel Xeon 6780E here rather than the Xeon 6766E simply because this test takes too long to run, but our sense is that some of the results would be indistinguishable when they are memory- and core-bound. Our guess is that the Xeon 6766E would be the big winner on a performance-per-watt basis.

Overall, this is not AMD EPYC Bergamo-level consolidation. On the other hand, there is a big benefit over 5th Gen Intel Xeon, both in performance per watt and in raw performance.

DapuStor Haishen5 SSD H5100 30.72TB Impact

For this review, we wanted to see if adding a higher-speed PCIe Gen5 NVMe SSD would impact our application performance results. Since it is the newest SSD in our lab, we tried the 30.72TB DapuStor Haishen5 SSD H5100 that the company sent in. It is also a 1 DWPD drive, so it has a ton of write endurance at over 10PB/year.
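The "over 10PB/year" figure follows directly from the capacity and DWPD rating. Here is a small worked check, assuming decimal units (1 PB = 1000 TB). DWPD ratings are usually quoted over a warranty period (often five years), so the datasheet terms are worth checking.

```python
"""Back-of-the-envelope write endurance from a DWPD rating."""


def writes_per_year_pb(capacity_tb: float, dwpd: float, days: int = 365) -> float:
    # One drive write per day means writing the full capacity once every day.
    return capacity_tb * dwpd * days / 1000.0


if __name__ == "__main__":
    print(f"{writes_per_year_pb(30.72, 1.0):.2f} PB/year")  # 11.21 PB/year
```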

The drive combines huge capacity with big performance. Sequential read/write is rated at 14GB/s and 9.5GB/s respectively, with 4K random read/write at 2.8M IOPS and 380K IOPS. The key here is big and fast.
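To put the 4K IOPS ratings on the same scale as the sequential numbers, multiply by the transfer size. This sketch assumes "4K" means 4 KiB (4096-byte) transfers and reports decimal GB/s.

```python
"""Convert 4K IOPS ratings into approximate bandwidth."""

BLOCK_BYTES = 4096  # assumed 4 KiB transfer size


def iops_to_gb_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    return iops * block_bytes / 1e9  # decimal GB/s


if __name__ == "__main__":
    for label, iops in (("4K read", 2_800_000), ("4K write", 380_000)):
        print(f"{label}: {iops_to_gb_per_s(iops):.2f} GB/s")
```

At 2.8M IOPS, 4K reads move roughly 11.5GB/s, not far below the 14GB/s sequential read rating, while the 4K write figure works out to roughly 1.6GB/s.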

Starting with our nginx CDN, we see a nice uplift, especially with the higher-clocked Xeon 6780E.

Usually, lower core count and lower-clocked parts show smaller deltas in our testing, so much so that we did not really even look into this until this year’s 130+ core CPUs came in for testing.

Overall, though, the DapuStor Haishen5 H5100 is a really neat drive. It can give you a few percent more performance from your system, not just on synthetic benchmarks like fio but also with real-world applications. For some applications, like the nginx CDN, having larger 30.72TB capacities can be very nice.

Next, let us get to our power consumption.

Honestly dreaming of a few single-CPU variants of these as VM clusters. 144 cores + 1TB of RAM x3 clustered together would take care of most of the virtual hosting needs I could think of for a lot of small clients.

Add in a few hundred TB of fast PCIe 5.0 SSD storage on each node, federated as part of the cluster, and you have a very robust solution that doesn’t have a lot of bottlenecks.

Hopefully we get to review a 1P Sierra Forest as I agree that is a killer platform.

@James

I’ve been deploying setups like that with 3x 1U servers and a few SSDs in each for Ceph, on dedicated 2x25G NICs (you can connect the nodes to each other without a switch), with Proxmox as the platform.