Supermicro SYS-222H-TN Internal Hardware Overview

Here is a quick overview shot so you can get your orientation.

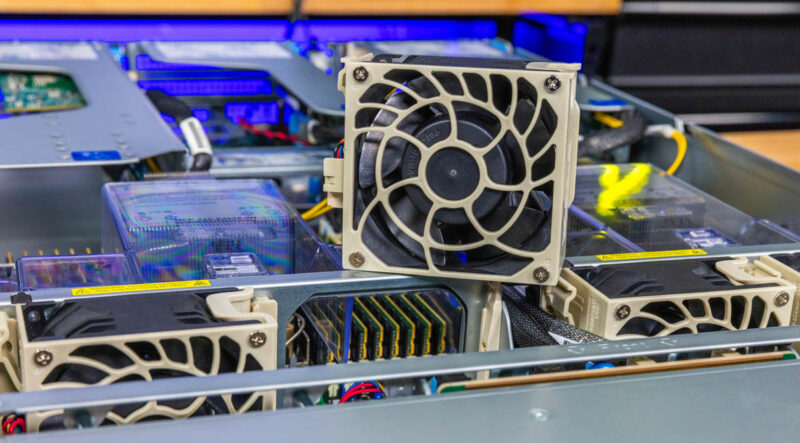

Behind the storage, we get a midplane with four fans. These provide redundant airflow paths through each CPU and to the rest of the system. The fans are hot swappable, but fans have also become extremely reliable.

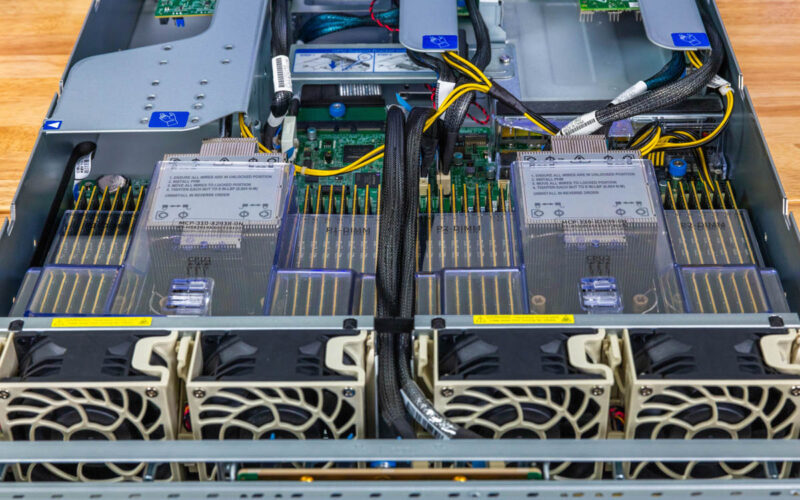

Each CPU and its memory get their own airflow guide, and then there is a center cable channel. This is a better design than something like the Dell EMC PowerEdge R750xa because replacing a DIMM does not require removing an MCIO cable.

Here is a look from the other angle.

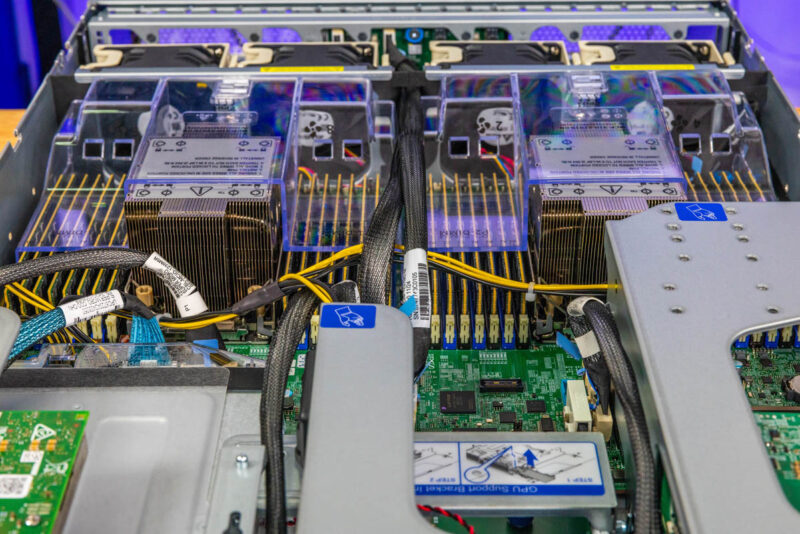

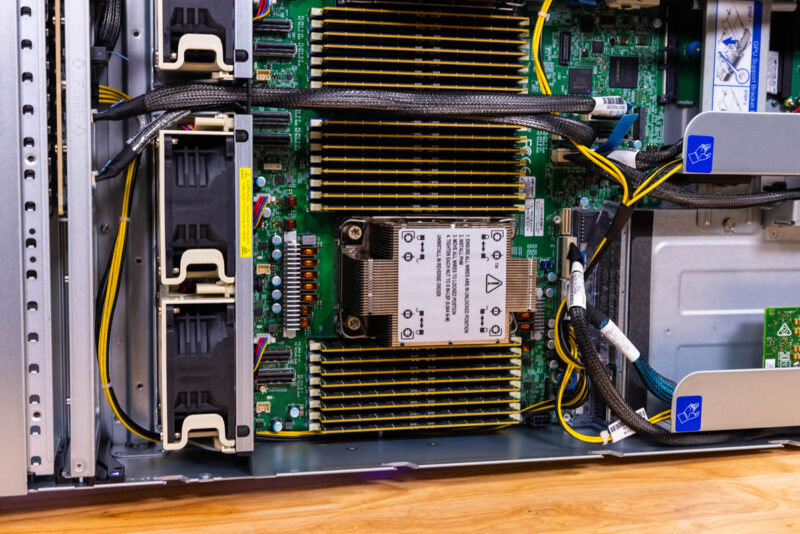

On the CPU side, we have dual Intel Socket E2 (LGA-4710) processor sockets. We tested these with both the Intel Xeon 6780E and Xeon 6766E processors, which each have 144 cores for 288 total. We have the air-cooled version since 330W in a 2U is not bad, but apparently there is a liquid-cooled option that we did not test.

Each processor has 8-channel DDR5 and supports 2DPC (two DIMMs per channel), so we get a total of 16 DIMMs per socket and 32 DIMMs total.
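For those who want to sanity-check the DIMM math, here is a minimal sketch of the arithmetic. The `MemoryTopology` class and the 64GB DIMM size are purely illustrative assumptions, not something from Supermicro's documentation or the configuration we tested.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryTopology:
    """Hypothetical helper describing the DIMM layout discussed above."""
    sockets: int = 2               # dual-socket server
    channels_per_socket: int = 8   # 8-channel DDR5 per CPU
    dimms_per_channel: int = 2     # 2DPC

    @property
    def dimms_per_socket(self) -> int:
        return self.channels_per_socket * self.dimms_per_channel

    @property
    def total_dimms(self) -> int:
        return self.sockets * self.dimms_per_socket

    def total_capacity_gb(self, dimm_size_gb: int) -> int:
        # Assumes every slot is populated with the same size DIMM.
        return self.total_dimms * dimm_size_gb


topo = MemoryTopology()
print(topo.dimms_per_socket)       # 16 DIMMs per socket
print(topo.total_dimms)            # 32 DIMMs total
print(topo.total_capacity_gb(64))  # 2048 (GB) with assumed 64GB DIMMs
```

Swapping in a different `dimm_size_gb` gives the fully populated capacity for whatever DIMMs you plan to use.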

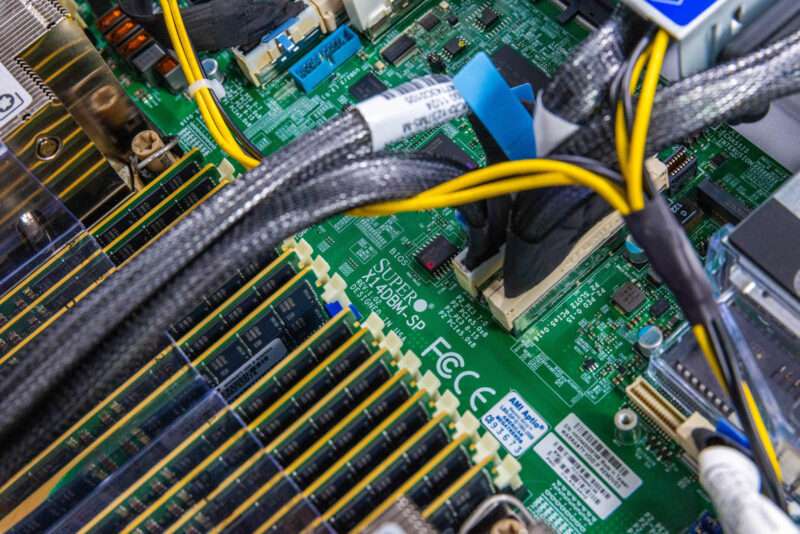

The motherboard, if you want to look it up, is the Supermicro X14DBM-SP.

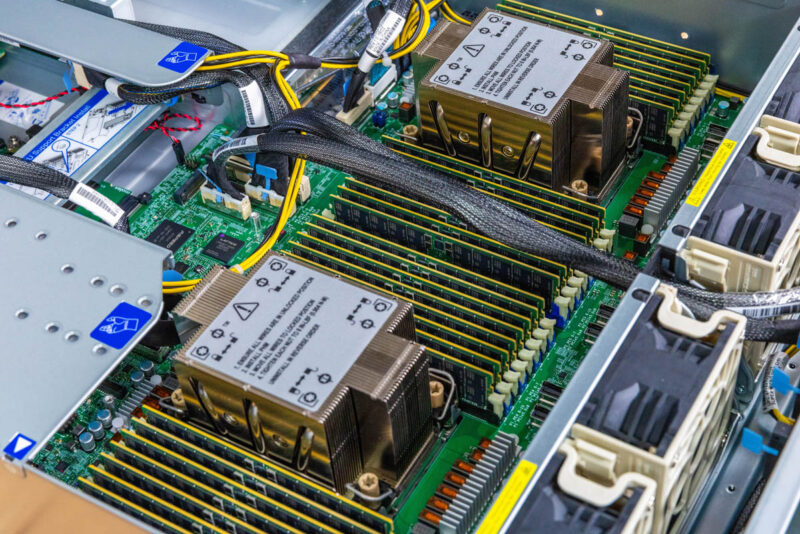

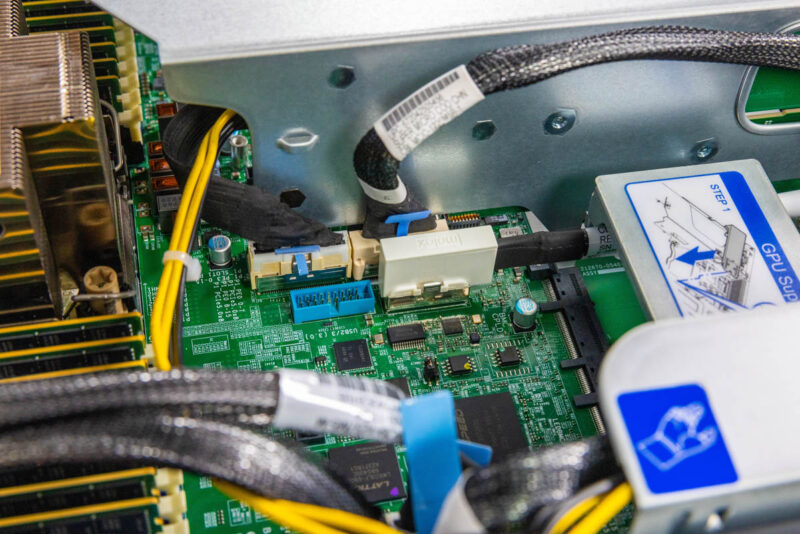

Something that we mentioned previously with the risers and storage is that MCIO cables are the primary tool to connect PCIe/storage slots in the server.

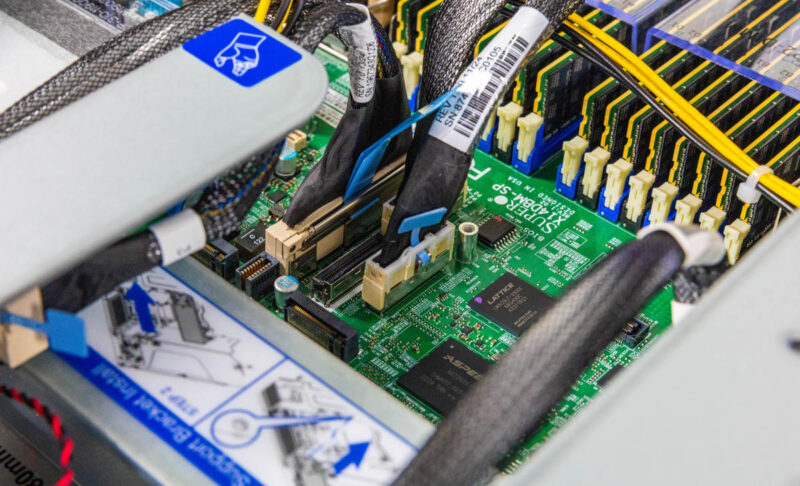

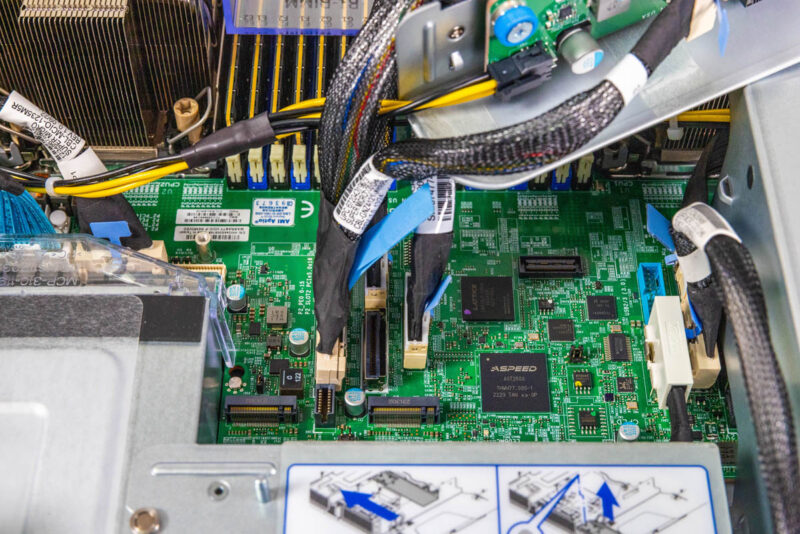

There are MCIO headers across the motherboard. Next to the center header cluster is the ASPEED AST2600 BMC.

Something that you will not see in this generation is a PCH. The Intel Platform Controller Hub has been retired with this generation, making the Xeon platform much more modern in its design.

Next to the BMC, we have more MCIO cables.

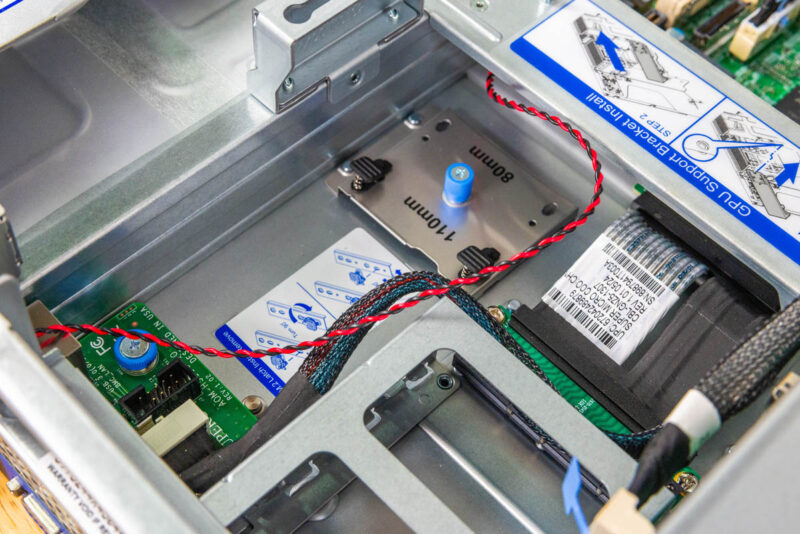

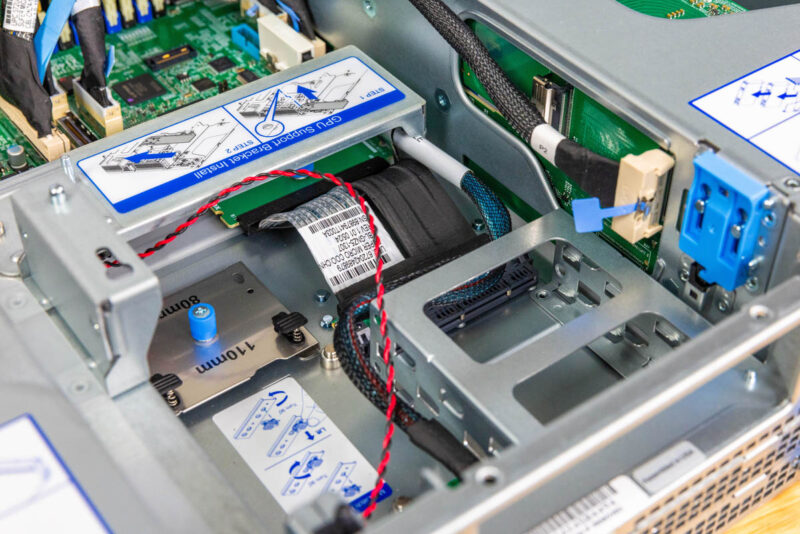

Something you can see here that is pretty neat is that the two M.2 drives are designed for tool-less service. The actual M.2 slots are on the edge of the motherboard. The drives then extend along the middle of the chassis behind the rear I/O.

Also neat is that Supermicro uses a cabled connection for the AIOM / OCP NIC 3.0 slot.

Something worth noting is that the motherboard is quite short. It only extends as far as the power supplies, or just past them. When we talk about PCIe Gen5 signaling being a challenge, this is a direct way that challenge impacts design. Signals can travel further over cables than over PCB traces.

Next, let us get to the block diagram.

Honestly dreaming of a few single-CPU variants of these as VM clusters. 144 cores + 1TB of RAM x3 clustered together would take care of most of the virtual hosting needs I could think of for a lot of small clients.

Adding in a few hundred TB of fast PCIe5.0 SSD storage on each node federated as part of the cluster, and you have a very robust solution that doesn’t have a lot of bottlenecks.

Hopefully we get to review a 1P Sierra Forest as I agree that is a killer platform.

@James

I’ve been deploying setups like that with 3x 1U servers, a few SSDs in each for Ceph on dedicated 2x25G NICs (you can connect them to each other without a switch), with Proxmox as the platform.