Supermicro SYS-221H-TNR Internal Hardware Overview

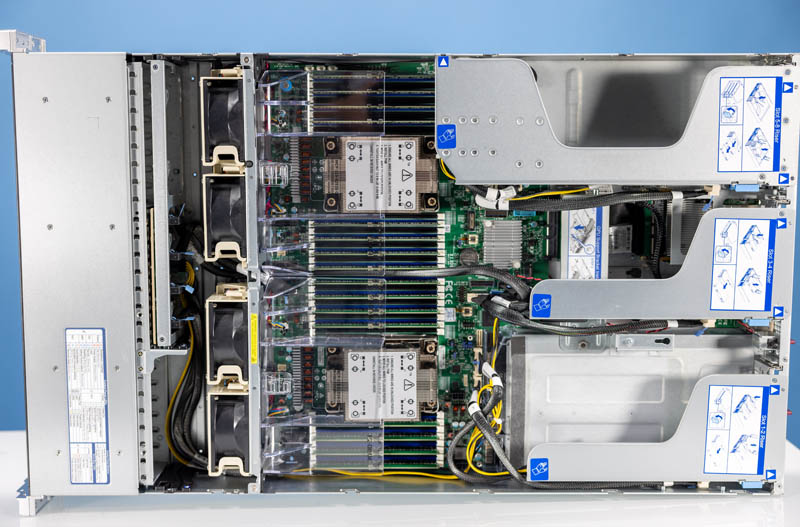

Here is a quick look at the system to help with orientation before the internal overview.

Behind the storage bays, we have an array of four fans. These are easy hot-swap units.

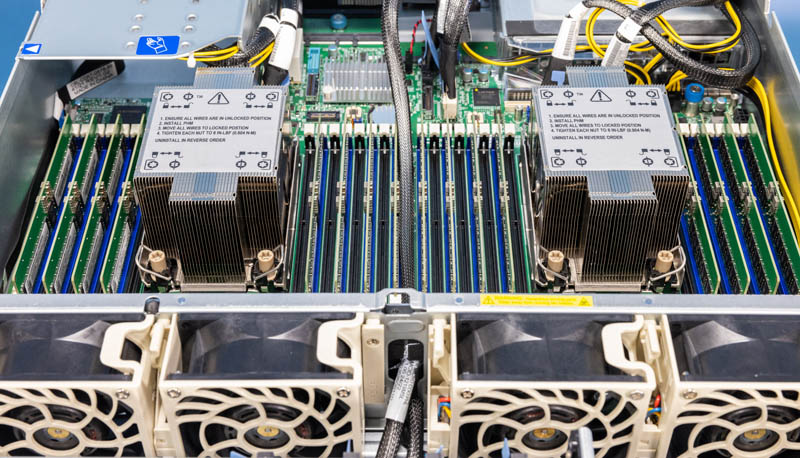

The fans push air through an airflow guide that ensures two fans are cooling each CPU for redundancy. Fans are very reliable these days, but the design still accounts for what happens if one fails.

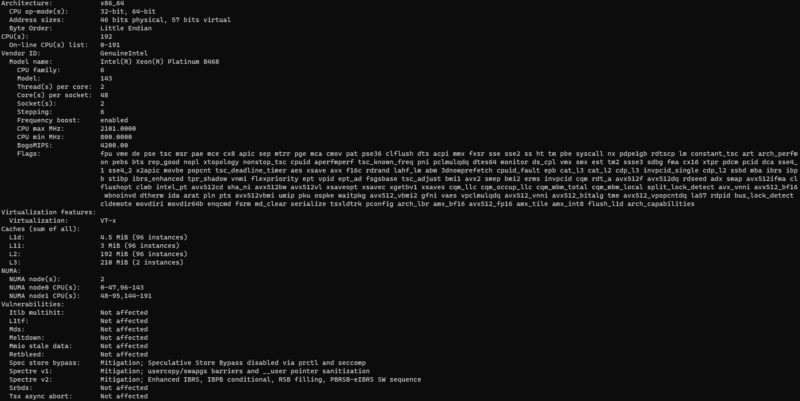

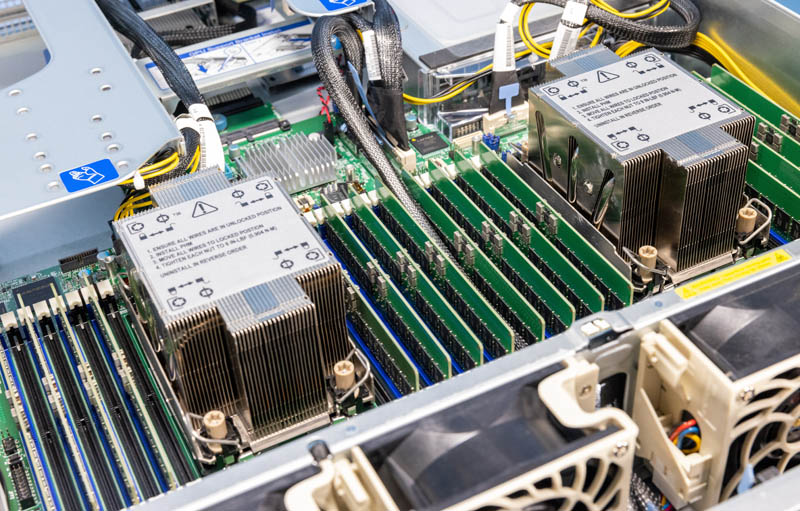

The CPUs are dual 4th Gen Intel Xeon Scalable “Sapphire Rapids” processors. These are Intel’s newest chips and this server is designed to handle high-power CPUs. There is even a liquid-cooled option that we are not trying here.

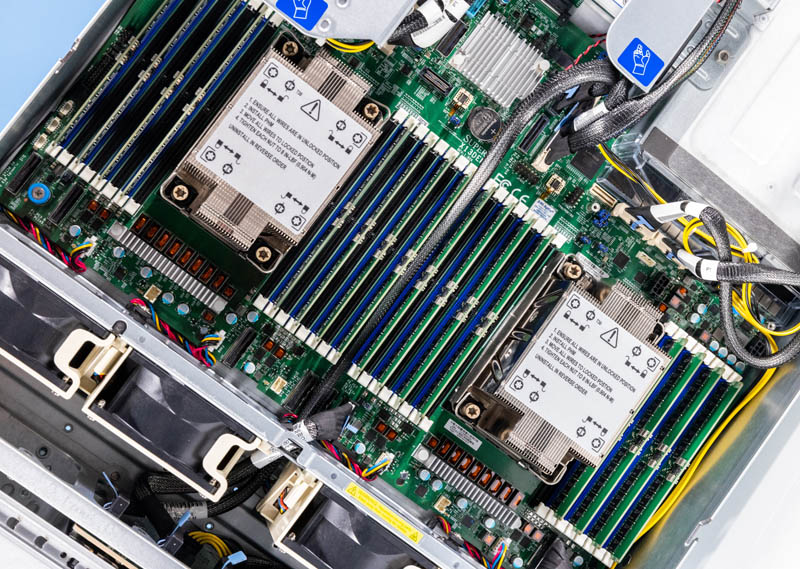

In our server, we have a pair of Intel Xeon Platinum 8468 CPUs. Each socket holds a 48-core / 96-thread CPU. While these are not the top-end SKUs, they are also 350W TDP parts, so the same power budget is spread across fewer cores. That means they are designed to offer more performance per core than the 56 or 60-core SKUs.
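To put a rough number on the per-core point, here is a minimal sketch of the power budget per core at a 350W TDP. It assumes the 56 and 60-core SKUs sit at the same 350W TDP, as the text implies, and it ignores uncore and I/O power, so treat the figures as a rough upper bound rather than a measurement. The function name `watts_per_core` is just for illustration.

```python
# Rough per-core power budget at a fixed socket TDP.
# Assumption: all three core counts share the same 350W TDP, and the
# entire TDP is divided evenly among cores (uncore power is ignored).
TDP_WATTS = 350

def watts_per_core(tdp_watts: float, cores: int) -> float:
    """Return the naive TDP share available to each core."""
    return tdp_watts / cores

core_counts = [48, 56, 60]
budget = {cores: round(watts_per_core(TDP_WATTS, cores), 2) for cores in core_counts}

for cores, watts in budget.items():
    print(f"{cores} cores: {watts} W per core")
```

By this naive measure, the 48-core part has roughly 7.3W per core to work with versus about 5.8W on a 60-core part, which is the headroom that allows for higher per-core clocks.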

Surrounding the CPUs we get eight channels of memory per CPU, with two DIMMs per channel. That means each CPU can have up to 16 DDR5 DIMMs installed and the server can handle up to 32 DDR5 DIMMs.

In our server, we have 16x 32GB Micron DDR5-4800 memory modules.
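The memory math above can be sketched quickly. This is a back-of-the-envelope calculation, not a benchmark: the class and field names are our own for illustration, and the bandwidth figure is a theoretical peak that assumes a 64-bit (8-byte) data path per DDR5 channel running at the full 4800 MT/s.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MemoryConfig:
    """Illustrative model of the memory layout described in the text."""
    sockets: int = 2
    channels_per_socket: int = 8
    dimms_per_channel: int = 2      # slots per channel (2DPC)
    installed_dimms: int = 16
    dimm_size_gb: int = 32
    transfer_rate_mts: int = 4800   # DDR5-4800
    bus_width_bytes: int = 8        # assumption: 64 data bits per channel

    def max_dimm_slots(self) -> int:
        return self.sockets * self.channels_per_socket * self.dimms_per_channel

    def installed_capacity_gb(self) -> int:
        return self.installed_dimms * self.dimm_size_gb

    def installed_dimms_per_channel(self) -> float:
        return self.installed_dimms / (self.sockets * self.channels_per_socket)

    def peak_bandwidth_gbs_per_socket(self) -> float:
        # MT/s * bytes per transfer = MB/s per channel; divide by 1000 for GB/s.
        per_channel = self.transfer_rate_mts * self.bus_width_bytes / 1000
        return per_channel * self.channels_per_socket

cfg = MemoryConfig()
print("DIMM slots:", cfg.max_dimm_slots())
print("Installed capacity (GB):", cfg.installed_capacity_gb())
print("Installed DIMMs per channel:", cfg.installed_dimms_per_channel())
print("Theoretical peak GB/s per socket:", cfg.peak_bandwidth_gbs_per_socket())
```

With 16 DIMMs across 16 channels, our configuration works out to one DIMM per channel and 512GB total, which fills every memory channel while leaving half of the slots open.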

If you want to learn more about DDR5, we have a piece on Why DDR5 is Absolutely Necessary in Modern Servers and an accompanying video:

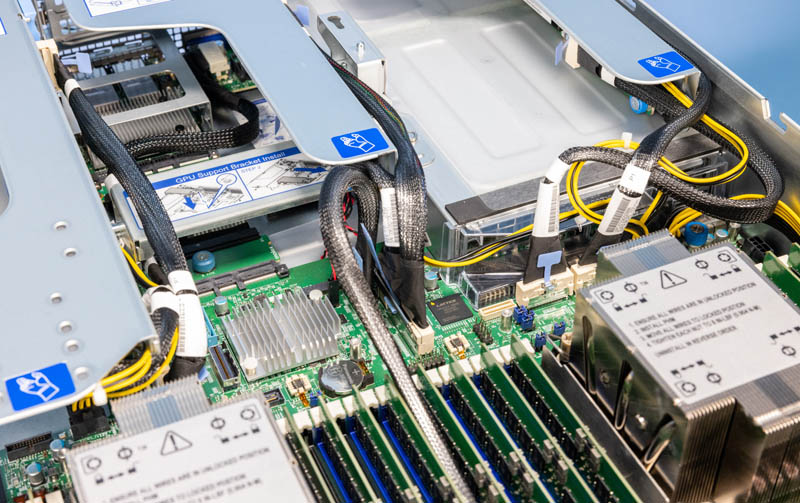

Behind the CPUs and memory, we get the I/O and PCH.

Supermicro’s motherboard in this generation takes advantage of a new design trend: shorter server motherboards. Instead of having slots for PCIe risers on the motherboard, the PCIe risers are cabled, as are the front drive bays. As a result, this area is mostly just cable connections for PCIe Gen5 cables and the Emmitsburg PCH.

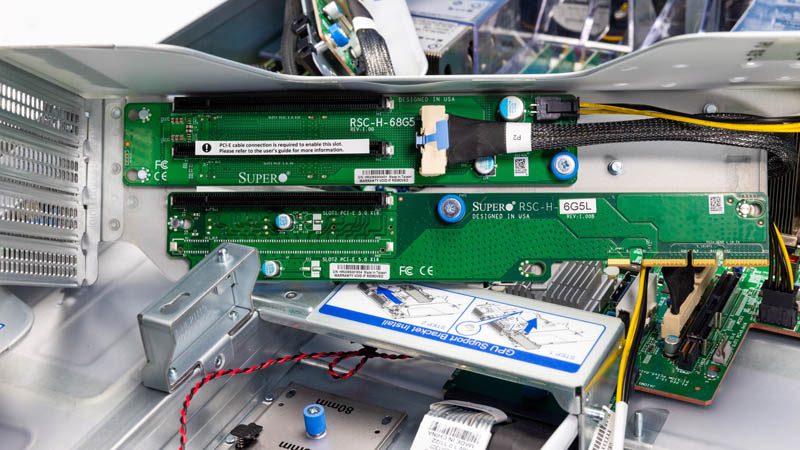

We mentioned the risers a bit in our external overview, but Supermicro has a new design that is worth noting here.

First, Supermicro’s new PCIe risers have a push tab. One pushes the blue part down, and then a pull tab pops up, allowing for easy removal of the risers. This felt like a great feature.

The right riser had the bottom dual-width slot available via a direct motherboard connection. The top part of this riser, however, is one of the new cabled risers.

That same cabled riser PCB is used in all three riser segments except the right bottom.

To give some sense of serviceability, we needed a 1GbE management NIC, so we used a Silicom PE2G6I35-R 6-port Intel i350 NIC. Opening the chassis, installing the NIC, and closing the chassis took less than 30 seconds because everything is toolless. Part of that is also seen below, as the latching mechanism for the top lid has a nice secure spot, making it easy to use.

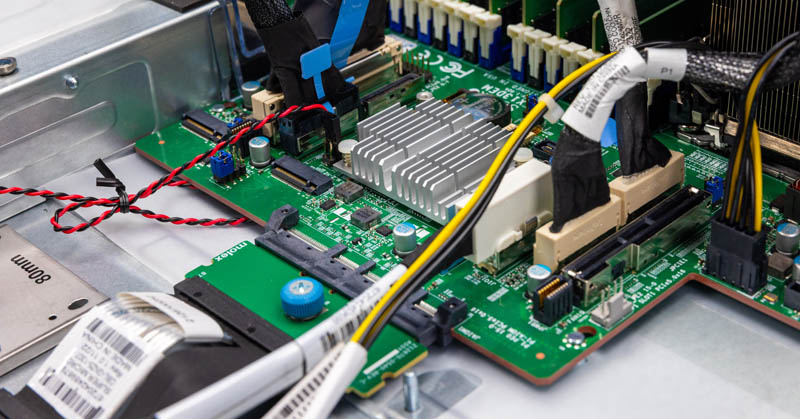

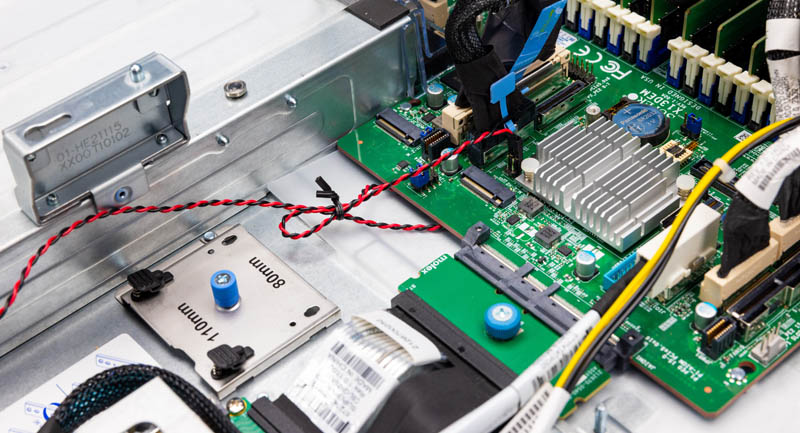

In the above picture, we can see another impact of the shorter PCIe Gen5 motherboard: the M.2 storage. Since there is less PCB, the toolless M.2 slots extend off the PCB and onto the chassis. Supermicro uses this toolless mounting mechanism to support M.2 2280 (80mm) or 22110 (110mm) SSDs. These are generally going to be used for boot SSDs.

Next, let us get to the system topology.

That looks beautiful. I’m just worried that Supermicro’s trying to be HPE instead of continuing to be the classic SMC

I’m curious if the chassis can fit consumer GPUs like the 4090 or 4080. RTX 6000 Ada/L40 pricing has forced a second look at consumer GPUs for ML workloads and figuring out which — if any — Supermicro systems can physically accommodate them has not been easy.

@ssnseawolf I’ve been building these up for Intel for the past year. They will accept the Arctic Sound/Flex GPU but that’s it.

STH level of detail: Let’s show everyone the new pop up pull tab

“DDR?-4800 memory modules” ??

I wonder if the SSDs get any cooling at all. With there being low-drag open grilles on both sides of the faceplate there is no reason for the air to be sucked through the high-drag SSD cage.

So bad they’re heading where HP already is – one-time use proprietary-everything server, going to trash after 5 years. Most SM fans loved them for being conservative and standardized. Those plastic pins in toolless trays and tabs are weak and crack in no time. X10-X11 and maybe H11-H12 probably were the last customisable server systems.

Another great article. And just jealous of all the amazing toys you get to review/play with :) Small textual correction, page 2 : “Instead of slots for PCIe risers, instead the PCIe risers are cabled as are the front drive bays.” I might be tired but somehow my brain can’t read this correctly.