Sometimes at STH, we review intriguing hardware that opens up use cases previous generations could not address. The Supermicro SYS-2049U-TR4 is one of those cases. In prior generations, four-socket Intel Xeon systems came with significant price premiums over their dual-socket counterparts. With changes in the Intel Xeon Scalable processor family’s multi-socket capabilities, along with Supermicro’s Ultra series design, Supermicro is delivering a four-socket server that will cost less to operate than two 1U dual-socket servers in most environments.

In this review, we are going to go through our normal test methodology, then show why the Supermicro SYS-2049U-TR4 delivers substantial cost savings, along with the caveats that come with them.

Supermicro SYS-2049U-TR4 Hardware Overview

The front of the Supermicro SYS-2049U-TR4 2U server has 24 hot-swap bays. Twenty are primarily used for SAS/SATA, while the remaining four can be used for either U.2 NVMe SSDs or SAS/SATA drives. This is a dense 2U storage configuration.

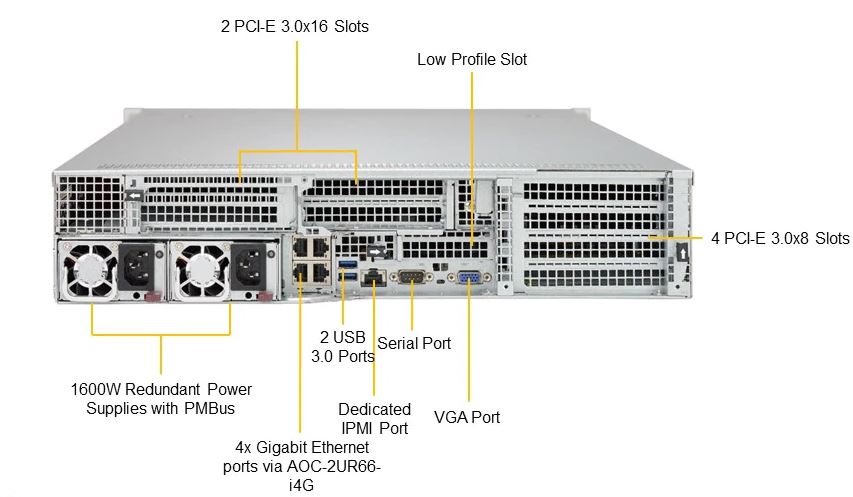

On the rear of the chassis, we find a selection of ports. For networking, these include quad 1GbE LAN ports and a dedicated IPMI out-of-band management port. There are also a VGA port, a serial port, and dual USB 3.0 ports. Supermicro has a nice diagram that we are going to use to label everything.

Power is provided by redundant 1.6kW power supplies. Supermicro is using 80Plus Titanium power supplies which offer extremely high efficiency. With the large system footprint, most configurations will drive these power supplies into their peak efficiency range.

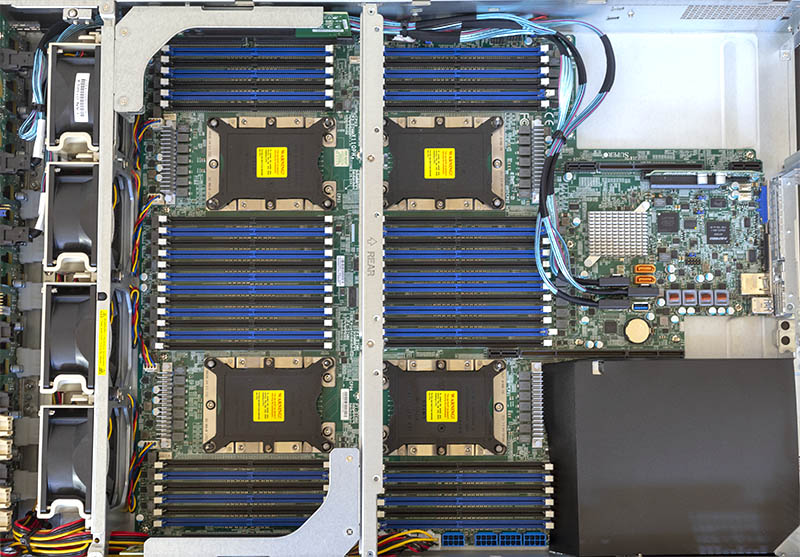

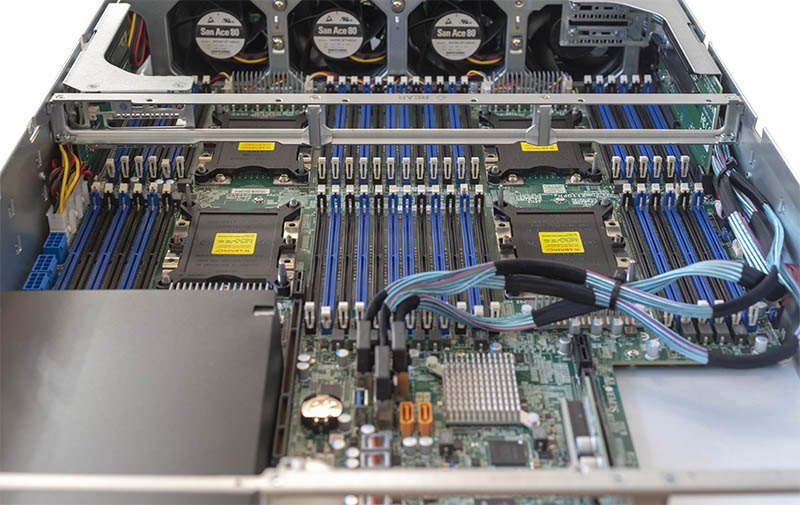

Inside the server, the chassis is dominated by CPUs and DIMM slots. We had to remove the risers except the PCIe 3.0 x8 riser labeled “Low Profile Slot” above just to see inside the system.

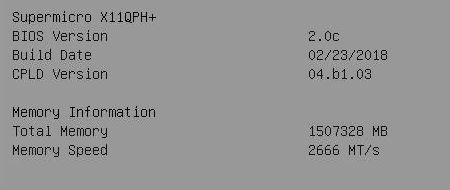

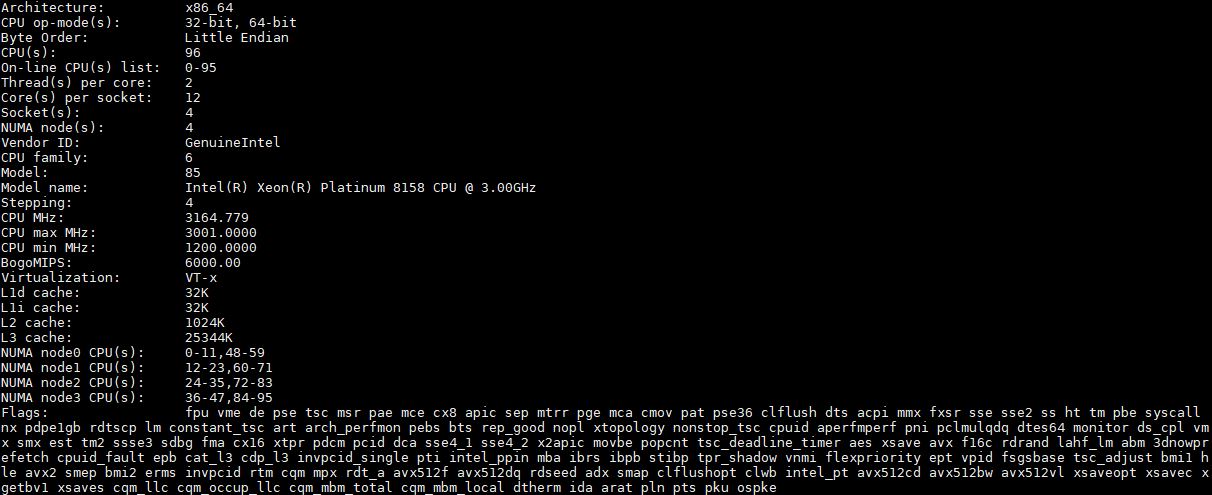

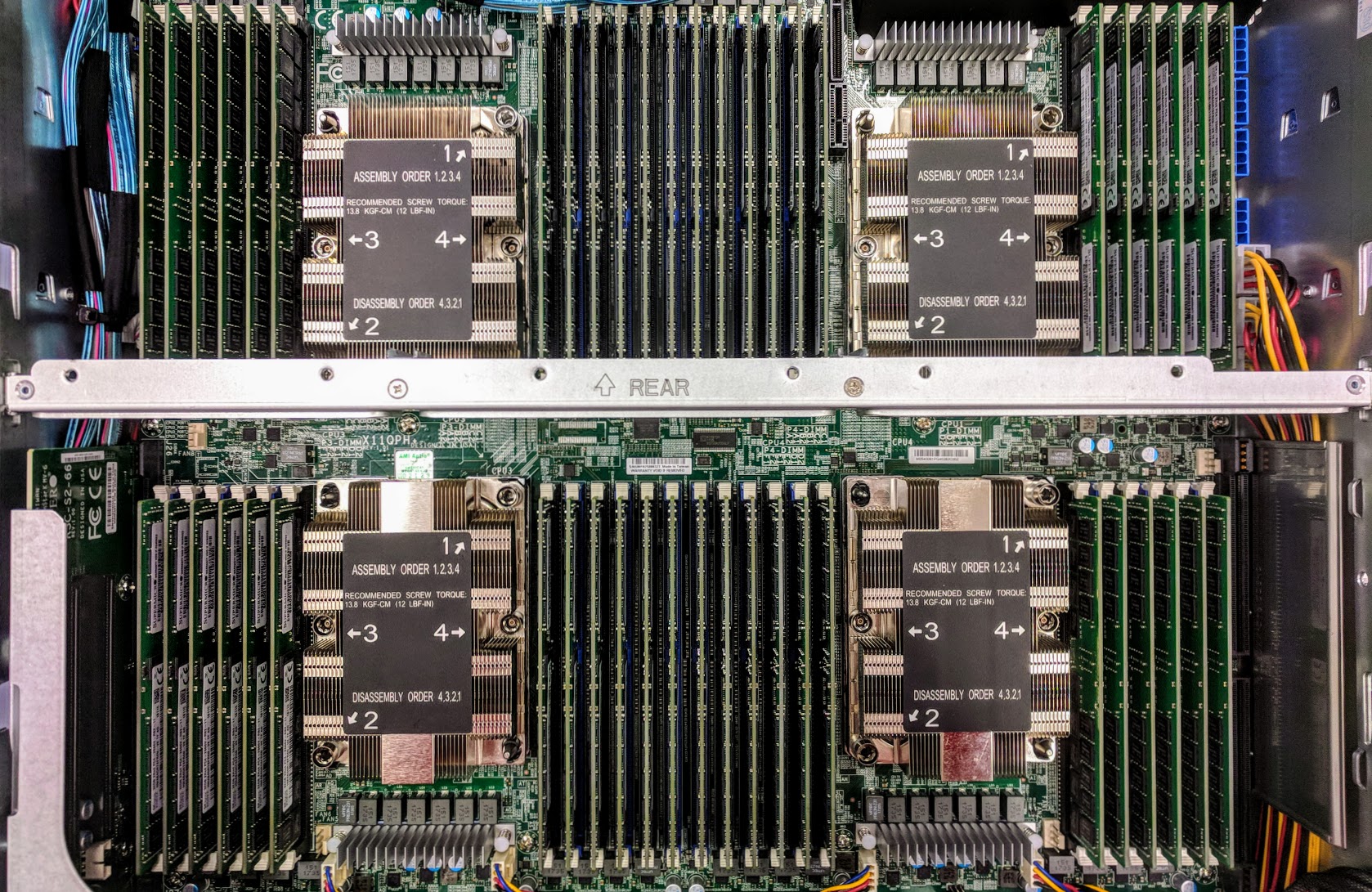

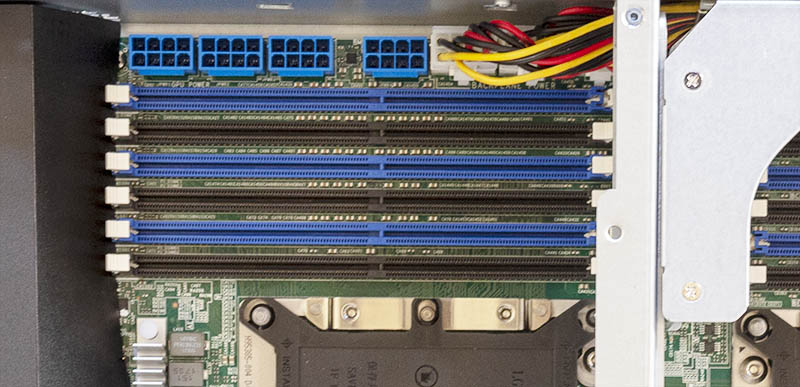

At the heart of the system is a quad Intel Xeon Scalable (LGA 3647) platform. Each CPU on the Supermicro X11QPH+ motherboard has 12 DIMM slots, for a total of 48. These slots accept DDR4-2666 memory in capacities of up to 128GB per DIMM, or 6TB maximum with first-generation Intel Xeon Scalable CPUs. We expect that this type of system will be able to accept Intel Optane DC Persistent Memory in Cascade Lake generations, as we covered in “Intel Optane Persistent Memory Looking at Green Apache Pass DIMMs.”
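As a quick sanity check on the capacity figures above, here is a minimal sketch of the arithmetic. The function and constant names are ours, and the 32GB module size in the last line is an inference from the 1.5TB, 48-DIMM test configuration rather than a figure Supermicro publishes.

```python
# Memory capacity arithmetic for the figures quoted in the review.
# Names are illustrative; values come from the text above.
SOCKETS = 4
DIMM_SLOTS_PER_SOCKET = 12
MAX_DIMM_GB = 128  # largest DIMM capacity mentioned in the review


def total_dimm_slots(sockets=SOCKETS, slots_per_socket=DIMM_SLOTS_PER_SOCKET):
    """Number of DIMM slots across all sockets."""
    return sockets * slots_per_socket


def max_memory_tb(dimm_gb=MAX_DIMM_GB):
    """Total memory in TB when every slot holds a DIMM of dimm_gb."""
    return total_dimm_slots() * dimm_gb / 1024


print(total_dimm_slots())  # 48
print(max_memory_tb())     # 6.0
print(max_memory_tb(32))   # 1.5 (assumes 32GB modules in all 48 slots)
```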

In our particular test configuration, we have quad Intel Xeon Platinum 8158 CPUs, but we also tested the system with additional CPU sets, which you will see in our performance testing.

CPU heatsinks are different for the front and rear CPUs to enhance airflow, similar to what we typically see in 2U4N platforms. With all four CPUs and 48 DDR4 DIMMs installed, the system looks like our 1.5TB configuration.

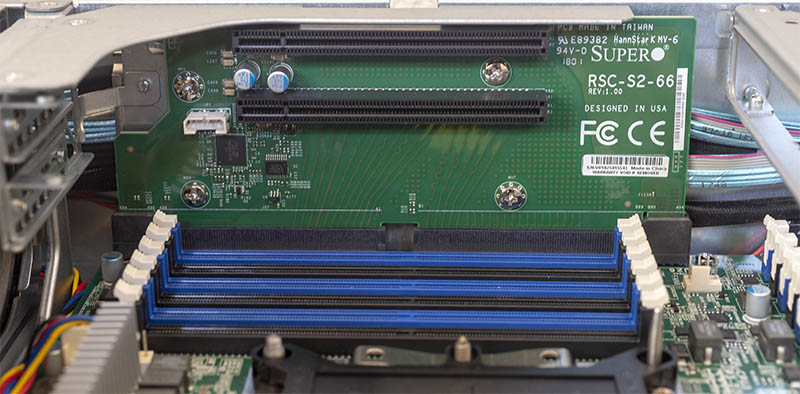

Above the front CPUs sit risers that have two PCIe 3.0 x16 slots each. In many quad-socket servers, PCIe lanes from these CPUs are not available. Here, they can be used for add-in cards that provide, for example, NVMe or SAS3 connectivity.

The rear of the unit has five PCIe 3.0 x8 slots along with two PCIe 3.0 x16 slots. This is not the ideal platform if you want dense GPU compute, for example with NVIDIA Tesla V100 GPUs. If you need 100GbE NICs or connectivity for massive storage arrays, however, the Supermicro SYS-2049U-TR4 has a total of 11 PCIe slots. All of the PCIe slots are on risers for easy installation.
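The slot count is easy to tally from the description above. This is a rough sketch under one assumption of ours: that every slot is electrically as wide as its label, which the review does not confirm. The names are illustrative only.

```python
# (location, lane width, slot count) as described in the review.
SLOTS = [
    ("front risers", 16, 4),  # two risers with two x16 slots each
    ("rear", 8, 5),
    ("rear", 16, 2),
]


def slot_count(slots=SLOTS):
    """Total number of PCIe slots."""
    return sum(count for _, _, count in slots)


def slot_lanes(slots=SLOTS):
    """Total PCIe lanes, assuming each slot is wired at its labeled width."""
    return sum(width * count for _, width, count in slots)


print(slot_count())  # 11
print(slot_lanes())  # 136
```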

There are even four PCIe power connectors onboard to power GPUs, FPGAs, and other higher-power add-in cards. The system can optionally support up to two actively cooled GPUs.

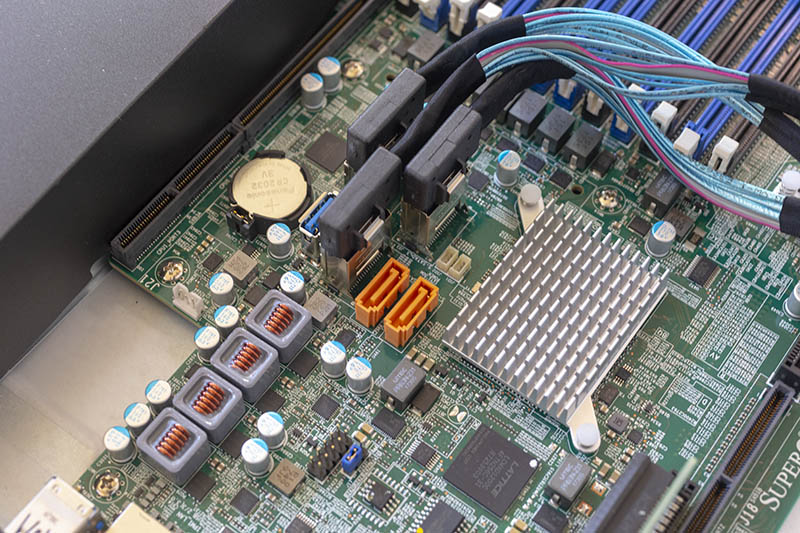

Onboard we also find familiar Supermicro features such as dual SATADOM gold slots that allow for mirrored boot devices without utilizing valuable front panel bays.

For those that need it, there is an internal Type-A USB 3.0 port. One can also drive a number of the front panel bays off of the Lewisburg PCH SATA controller via SFF-8087 headers.

In our exterior Supermicro SYS-2049U-TR4 overview, we mentioned how four bays on the front panel can be SAS/SATA or NVMe. Supermicro has four white SFF-8643 headers on its backplane for NVMe cabling and uses black SFF-8643 headers for SAS/SATA cabling.

The NVMe drives also support RAID functionality, which can be enabled and upgraded via a VROC key. Hidden along the motherboard edge and beneath cables, we can find the key in its header.

Four large 80mm fans cool the system. This arrangement uses significantly fewer fans than two 1U dual-socket servers while still being able to cool high-end CPUs.

A quick note here. The overall system is designed to be easy to service. Supermicro is using SFF-8087 to SFF-8643 cables routed from the motherboard to the front panel for storage. This is great for channel partners and service teams, as these cables are readily available. On the other hand, these cables are not as tidy or visually clean as some of the custom versions we have seen other vendors use. This is function over form, with the idea that serviceability is more important than an internal appearance that customers are unlikely to see.

Overall, this hardware has worked great in our testing, and this is after going through 384GB, 768GB, and 1.5TB memory configurations, multiple CPU swaps, and changing topologies, for example moving from SATA to SAS and adding NVMe support.

Next, we will look at the Supermicro SYS-2049U-TR4 management and our test configuration before we move on to our system topologies and performance testing.

They maybe could have noticed that AMD has a socket called TR4 before they used that for their server designation…

Thomas, Supermicro was using -TR4 since 2015 in the 2011 era https://www.supermicro.com/products/system/1U/1028/SYS-1028U-TR4_.cfm and they’ve used it on many servers.

Maybe AMD should have noticed Supermicro called Intel platforms -TR4 when they had certain specs

No worries, the prefix still will show clearly the server is AMD, AS-2124…..