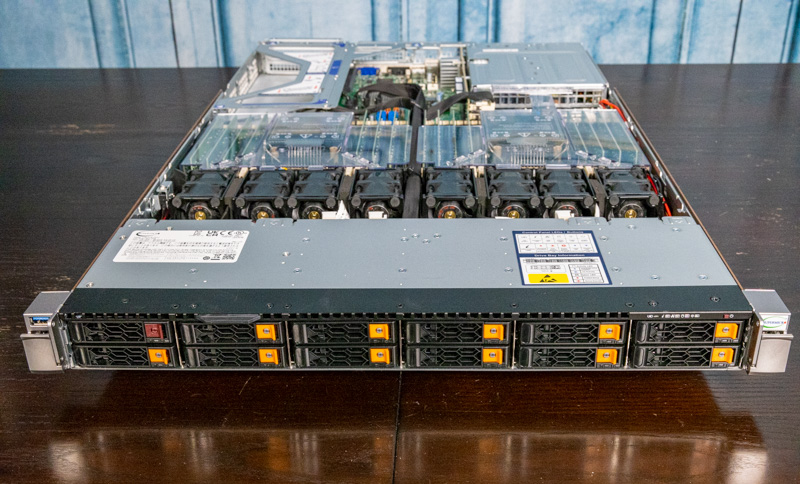

Supermicro SYS-120U-TNR Internal Overview

Inside the system, we are going to work from the front to the rear.

First, we wanted to discuss the fan partition. Here we have counter-rotating fan modules. That is a higher-end feature compared to the single fan units that we have seen in some competing servers we have reviewed recently.

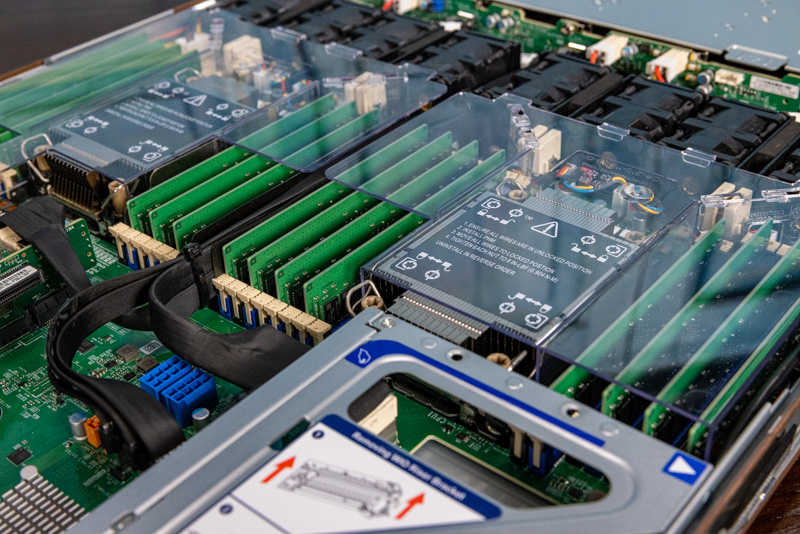

These fans cool the next area we are going to look at, which is the CPU and memory area. Here, we have two LGA4189 sockets for 3rd generation Intel Xeon Scalable (Ice Lake) processors. You can see our Installing a 3rd Generation Intel Xeon Scalable LGA4189 CPU and Cooler guide for more on the socket, as well as our main Ice Lake launch coverage.

Since we get many questions around the SKU stack, we have a video explaining the basics of the various Platinum, Gold, Silver, letter options, and the less obvious distinctions.

Around the CPU sockets, we get a total of 32x DIMM slots. These can accept up to DDR4-3200 so long as the CPU supports it (see our 3rd Gen Intel Xeon Scalable Ice Lake SKU List and Value Analysis piece for more on that). One can also add up to four DDR4 DIMMs alongside four Optane PMem 200 modules. We also have a separate piece on how Optane modules work, since much of the documentation is not clear on that.
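To put the DDR4-3200 figure in context, here is a rough back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes eight DDR4 channels per Ice Lake socket and a 64-bit data bus per channel; neither number is stated in this review, and the function names are only illustrative. Real-world throughput will be lower than these peaks.

```python
# Rough peak DRAM bandwidth estimate for a dual-socket system.
# Assumptions (not from the review): 8 DDR4 channels per socket and a
# 64-bit (8-byte) data bus per channel.

CHANNELS_PER_SOCKET = 8   # assumed memory channels per CPU
BYTES_PER_TRANSFER = 8    # 64-bit DDR4 data bus
SOCKETS = 2               # dual-socket system, per the review
DIMM_SLOTS = 32           # total DIMM slots, per the review


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Theoretical peak bandwidth for one socket in GB/s (decimal)."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


def dimms_per_channel(slots: int = DIMM_SLOTS,
                      sockets: int = SOCKETS,
                      channels: int = CHANNELS_PER_SOCKET) -> int:
    """How many DIMM slots share each memory channel."""
    return slots // (sockets * channels)


if __name__ == "__main__":
    for speed in (2666, 2933, 3200):
        per_socket = peak_bandwidth_gbs(speed)
        print(f"DDR4-{speed}: {per_socket:.1f} GB/s per socket, "
              f"{per_socket * SOCKETS:.1f} GB/s across both sockets")
    print(f"{dimms_per_channel()} DIMM slots per channel")
```

Under those assumptions, the 32 slots work out to two DIMMs per channel, and a CPU limited to a lower memory speed gives up a proportional share of peak bandwidth.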

Supermicro designs its Ultra platforms in 1U and 2U form factors. As a result, the motherboard has a number of features, such as GPU power connectors, that may be used in the 1U system but can also be used in 2U systems that have more space for accelerators. Designing a single motherboard allows Supermicro to drive up volumes, and higher volumes tend to mean higher quality. It also means that an organization can deploy servers based on the same motherboard in 1U or 2U configurations, which is important for those looking to keep consistency across their lines. With the 12x 2.5″ front panel configuration, drive density also scales linearly with chassis height, which was not necessarily the case in previous generations.

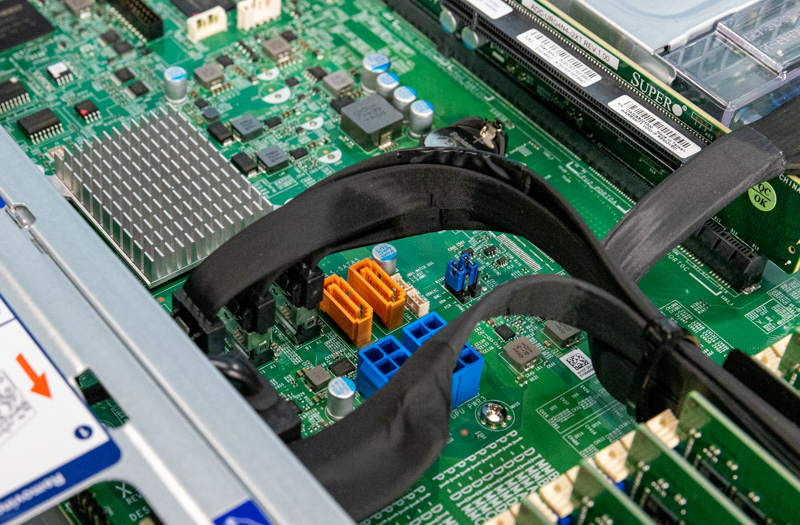

Behind the CPU and memory, we get the Intel C621A Lewisburg Refresh PCH. Next to it, we have two SATADOM slots as well as additional GPU power connectors.

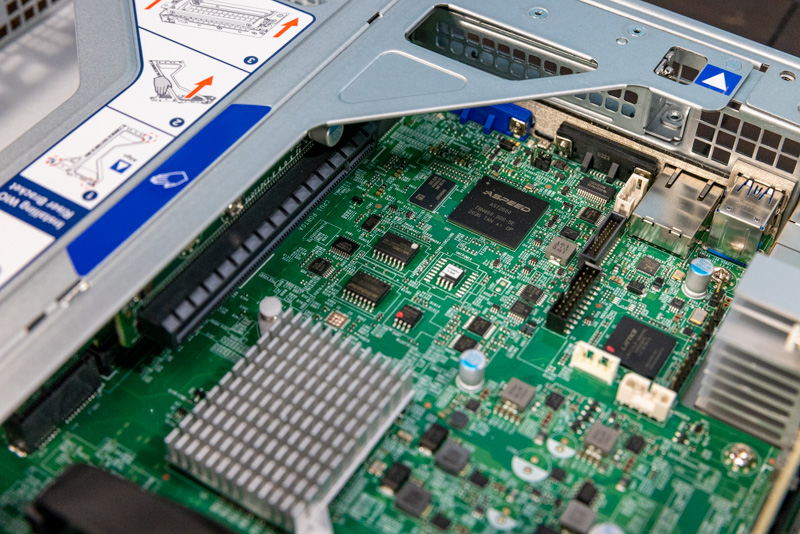

Also on the main motherboard, we have the ASPEED AST2600 BMC. This is a new generation of BMC in Supermicro’s latest servers.

The final feature we wanted to show in this server is the I/O riser card. There are actually several things going on here. We have the dual NIC solution, and its controller is placed on this PCB with its own heatsink. Having a LOM-based NIC is very common in this class of servers. However, the way Supermicro executes this, integrating it on a 1U riser with additional features, is different.

This riser also provides PCIe connectivity and not just NICs. One can see the PCIe Gen4 x16 internal slot. This is commonly used for PCIe connectivity that does not need to reach the rear faceplate. Examples include internal SAS RAID controllers and HBAs as well as cards with M.2 connectivity. Supermicro has used this format for generations, but this is now a full x16 slot (previous generations were PCIe Gen3 x8), making four total PCIe Gen4 x16 slots in the system. That is the advantage of Ice Lake’s enhanced PCIe capability.
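For a sense of what the move from a Gen3 x8 to a Gen4 x16 internal slot means, the sketch below estimates per-direction link bandwidth. It uses the standard raw rates of 8 GT/s (Gen3) and 16 GT/s (Gen4) per lane with 128b/130b line encoding, and ignores packet and protocol overhead, so actual device throughput will be somewhat lower. The function name is illustrative only.

```python
# Approximate PCIe link bandwidth per direction, accounting only for
# line encoding (packet/protocol overhead is ignored in this sketch).

GT_PER_S = {3: 8.0, 4: 16.0}   # raw transfer rate per lane, by generation
ENCODING = 128 / 130           # 128b/130b encoding used by Gen3 and Gen4


def pcie_gbs(gen: int, lanes: int) -> float:
    """Estimated one-direction bandwidth in GB/s for a link."""
    return GT_PER_S[gen] * ENCODING * lanes / 8  # divide by 8: bits -> bytes


if __name__ == "__main__":
    old = pcie_gbs(3, 8)    # previous-generation internal slot
    new = pcie_gbs(4, 16)   # internal slot in this system
    print(f"Gen3 x8 : {old:.2f} GB/s")
    print(f"Gen4 x16: {new:.2f} GB/s ({new / old:.0f}x)")
    print(f"Four Gen4 x16 slots use {4 * 16} lanes in total")
```

Doubling both the per-lane rate and the lane count gives roughly four times the bandwidth to the internal slot, which matters for cards such as NVMe-capable RAID controllers.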

Next, we are going to check out the block diagram as well as the management before moving on with our review.

Hi Patrick, according to Supermicro’s documentation, the two PCIe expansion slots should be FH, 10.5″L – which makes this one of the very few 1U servers that can be equipped with two NVIDIA A10 GPUs.

Can you confirm that this is the case?

The only other 1U servers compatible with one or two A10 GPUs that I know of are the ASUS RS700-E10 that you recently reviewed, and the ASUS RS700A-E11, which is similar and uses AMD Milan CPUs.

For example, all 1U offers from Dell EMC only have FH 9.5″ slots.

This one supports 4 double width GPUs: https://www.supermicro.com/en/products/system/GPU/1U/SYS-120GQ-TNRT

Is Supermicro viable from a support perspective for those around the 25 server range?

Like with any other product, the first thing you notice is the build quality. This is on the complete other end of the spectrum compared to Intel and Lenovo servers. Everything is fragile, complicated, tight, bendable. The fans: laughable.

IPMI usually works, but sometimes when you reboot, IPMI seems to reboot as well. It takes a minute or two and then it is back again.

Hardware changes update in IPMI only when it is ready; it can take a couple of reboots for them to show up.

We tried a lot of different fun stuff due to our vendor providing us with incompatible hardware, like:

Upgrading from 1 -> 2 CPUs with a Broadcom NIC in one of the PCIe slots. This does not work with Windows (for us).

Upgrading from 6 -> 12 NVMe drives. This requires drivers, which in turn require .NET 4.8.

Installing risers and recabling storage. How complicated does one have to make things?

Hopefully we will soon start using the system. The road has so far been painful, not necessarily Supermicro’s fault.

It’s awesome that one can put 6 NVMe drives on the lanes of the first CPU, making this a good cost-effective option for 1 CPU setups with regard to licensing.