Supermicro SYS-110D-16C-FRAN8TP Topology

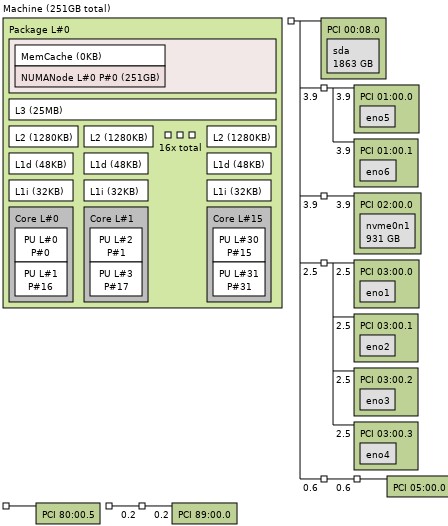

Here is the topology output of the system:

A key here is that this is a single NUMA node. The AMD EPYC 3451 is still a two NUMA node design with half of the DIMM channels and half of the PCIe lanes, networking, and so forth on each NUMA node. Effectively the Ice Lake D-2775TE looks like a single socket server while the EPYC 3451 looks like a dual-socket option. In the X12SDV-16C-SPT8F, that means that all resources are attached directly to the same CPU making communication faster.

Supermicro SYS-110D-16C-FRAN8TP Management

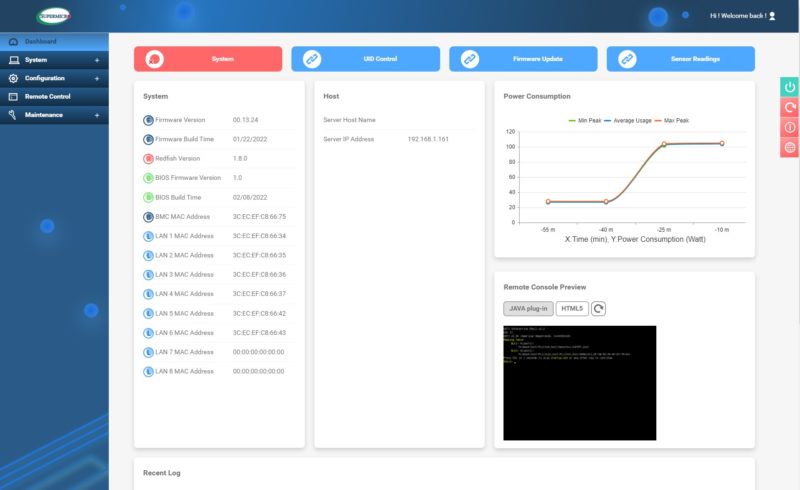

The Supermicro X12SDV-16C-SPT8F utilizes Supermicro’s newer web management interface. It also supports Redfish APIs. The newer web management portal has features like HTML5 iKVM but also a more responsive layout that is easier to access via mobile devices.

One item we will note is that this platform utilizes a random IPMI password and not ADMIN/ ADMIN like older generation systems. You can read more about this in Why Your Favorite Default Passwords Are Changing.

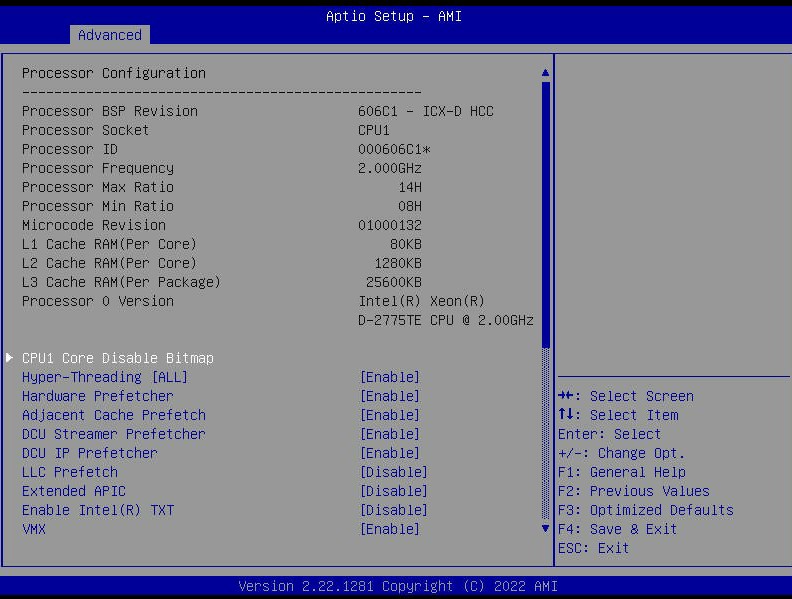

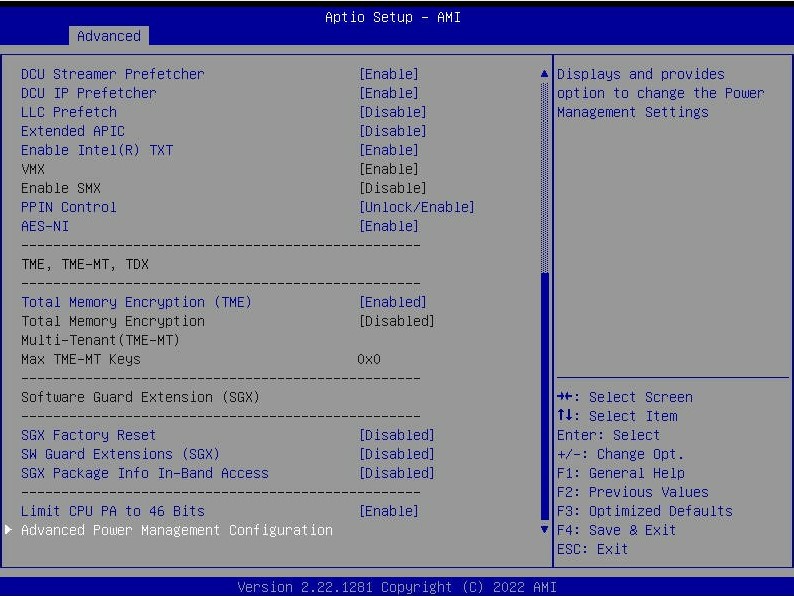

The BIOS are still the same AMI Aptio base that we have seen for generations.

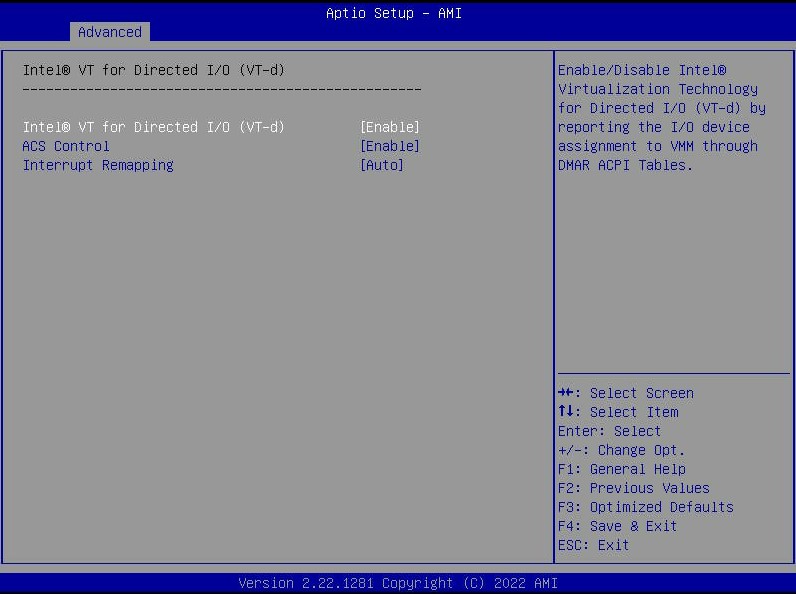

The new chips support features like VT-d and Intel has certainly made an effort to do a much better job supporting a common virtualization set between its embedded and mainstream Xeon lines.

That also means that we get features like TME and SGX in BIOS which are more advanced features with this generation.

Even though this is an embedded motherboard, it feels much more like a mainstream Supermicro server platform.

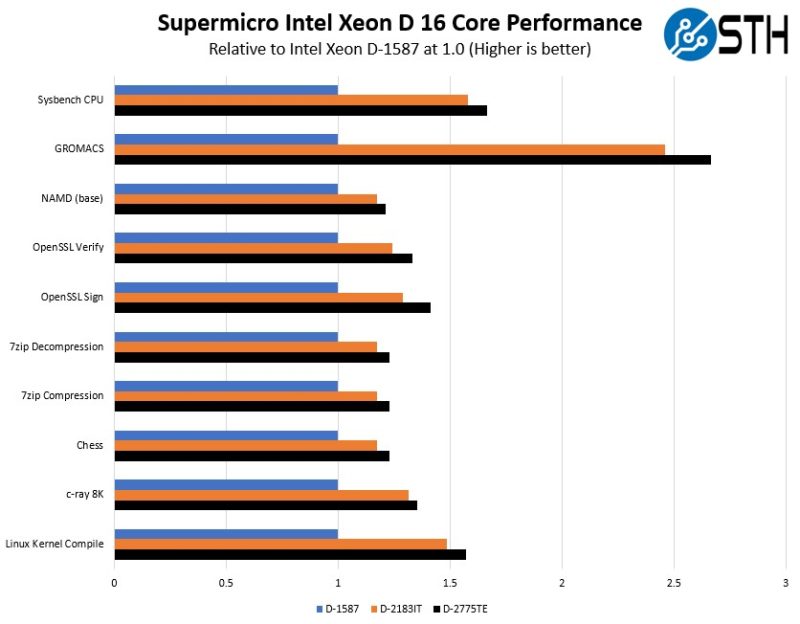

Supermicro Intel Xeon D-2775TE Performance

Taking a quick look at the generational performance we wanted to show a few benchmarks that we ran directly on the three generations of Supermicro platforms, X10SDV, X11SDV, and now X12SDV. We are not going to focus on things like AVX-512 and VNNI performance as we looked at those for the Ice Lake series more broadly. Part of the Ice Lake Xeon D’s appeal is the ability to use these extensions for things like AI inferencing. Intel’s theory is that by providing “enough” AI inference performance onboard, companies will not have to add additional PCIe accelerators thereby shrinking footprint and power consumption.

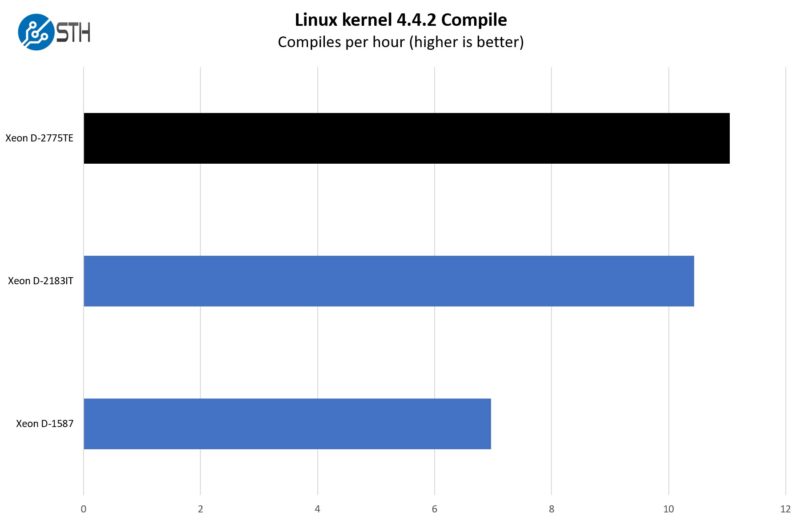

Instead, let us take a quick look at a few generational benchmarks, starting with our Linux Kernel Compile benchmark:

Here, we got a nice performance gain but this was not a 30-100% gain over the X11SDV generation’s D-2183IT. There is a much larger gain shown between the X10SDV generation’s Xeon D-1587. The D-1587 was lower power and had a “unique” architecture to fit that many cores into such a small space. The D-1500 series was also a dual-channel memory architecture. This is a big difference in performance.

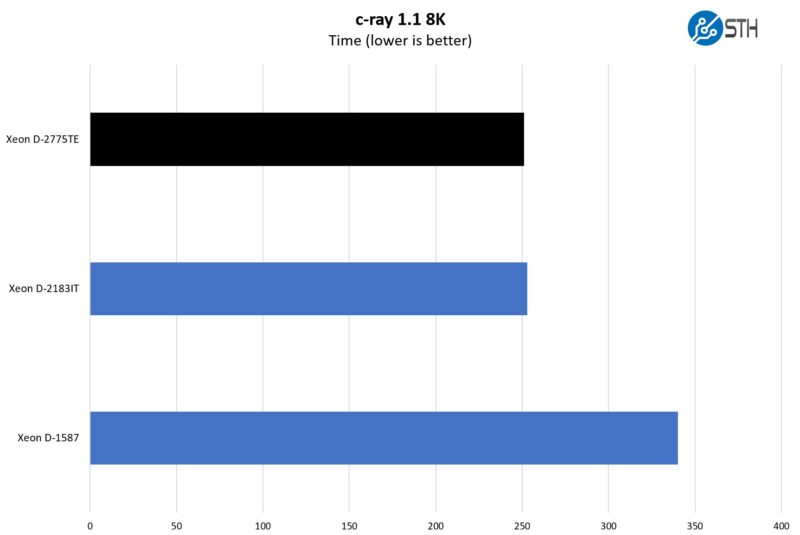

In our classic c-ray 8K test, we see another small gain. This is more akin to a Cinebench run on the consumer side, so we still see a big jump between Xeon D-1500 and D-2100 but a smaller jump to the D-2700.

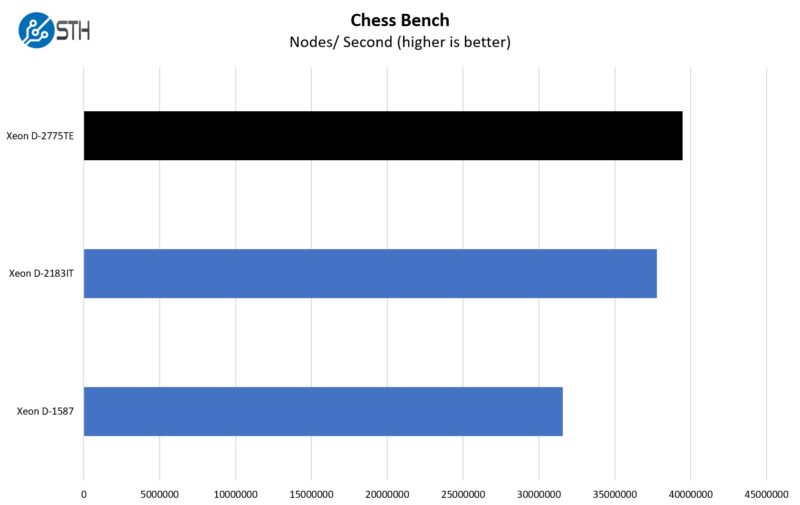

On the Chess Bench side, we get another solid gain.

Just taking a quick look at the performance deltas since Broadwell-DE.

There are certainly some IPC increases, but keeping cores constant, it seems like the biggest factor was adding memory channels and TDP since the Broadwell-DE generation.

A quick note on the networking performance, we used iperf3 and could push line rate from both SFP28 ports. We also tested the X550-at2 10Gbase-T ports and the i350-am4 1GbE ports and saw line rate from those as well. This is an area that has improved since we saw our first pre-production samples, but it appears as though networking performs as one would expect at this point.

Next, let us move to power consumption before our final words.