Power Consumption and Noise

From a power perspective, we have two 300W redundant power supplies. Since these are lower-power designs, they are 80Plus Gold power supplies.

The system itself, even with the 10Gbase-T NIC installed, only used around 67W at idle. For a 16-core, 64GB memory system with 8x 1GbE, 2x 25GbE, and 2x 10Gbase-T ports that is solid. When we fired up stress-ng to get loaded power consumption it was surprisingly low at only around 86W. This may seem really strange to some of our readers as usually we see load power as being a multiple of idle power. The reason for this is very simple. The system is designed as a network appliance. As a result, we do not see clock speeds that vary between, say, 800MHz and 4.8GHz. Instead, these are designed to stay at more constant clock speeds.
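For readers who want to put those two readings side by side, here is a minimal sketch of the arithmetic. The wattages are the approximate figures quoted above; the function names and the assumption of 24x7 operation are ours for illustration only.

```python
# Approximate readings quoted in the review (watts).
IDLE_W = 67.0
LOAD_W = 86.0


def load_to_idle_ratio(idle_w: float, load_w: float) -> float:
    """How many times higher load power is than idle power."""
    return load_w / idle_w


def annual_kwh(watts: float, hours_per_day: float = 24.0) -> float:
    """Energy used in a year at a constant draw (assumes 365 days)."""
    return watts * hours_per_day * 365 / 1000.0


ratio = load_to_idle_ratio(IDLE_W, LOAD_W)
print(f"load/idle ratio: {ratio:.2f}x")
print(f"idle, 24x7: {annual_kwh(IDLE_W):.2f} kWh/year")
print(f"load, 24x7: {annual_kwh(LOAD_W):.2f} kWh/year")
```

A ratio this close to 1x is what one would expect from a platform that holds its clocks steady rather than ramping between low idle and high boost states.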

Whenever a CPU transitions to a different power level and frequency, it takes a few cycles to make that adjustment. That adjustment has been known to cause jitter as data gets stuck waiting for the state transition. As a result, the Snow Ridge SoC is designed to minimize this and maintain a more constant clock speed. Intel has other network-focused SKUs in its stack that perform in a similar manner.
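If you want to check how much a Linux system's clock actually moves around, one rough approach is to sample the kernel's cpufreq interface and look at the spread. This is only a sketch: it assumes a cpufreq driver exposes `scaling_cur_freq` for `cpu0` (not every platform does), and the helper names, sample count, and interval are hypothetical choices, not something we used in this review.

```python
import statistics
import time
from pathlib import Path

# Linux cpufreq sysfs file for cpu0; the value is reported in kHz.
# Assumption: a cpufreq driver is loaded, otherwise this file does not exist.
CPUFREQ_PATH = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")


def summarize_mhz(samples_khz):
    """Convert kHz samples to MHz and report min, max, spread, and mean."""
    mhz = [k / 1000.0 for k in samples_khz]
    return {
        "min": min(mhz),
        "max": max(mhz),
        "spread": max(mhz) - min(mhz),
        "mean": statistics.fmean(mhz),
    }


def sample_khz(path=CPUFREQ_PATH, count=20, interval_s=0.1):
    """Read the current frequency `count` times, `interval_s` apart."""
    samples = []
    for _ in range(count):
        samples.append(int(path.read_text().strip()))
        time.sleep(interval_s)
    return samples


if __name__ == "__main__":
    if CPUFREQ_PATH.exists():
        print(summarize_mhz(sample_khz()))
    else:
        print("cpufreq sysfs interface not available on this machine")
```

On a platform tuned for constant clocks, the reported spread should stay small under both idle and load, while a typical desktop part would show a much wider range.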

In terms of noise, this server is far from quiet. At idle in our 34dBA noise floor studio at 1m away we saw around 40dBA. Under load, we could get in the 45-50dBA range. For those who want a silent system, this is probably not the right answer without some modification. On the other hand, those who are spending $1800-2000+ for a network appliance will likely have this racked outside of earshot. In a decent office equipment closet, this will not be a distracting level of noise.

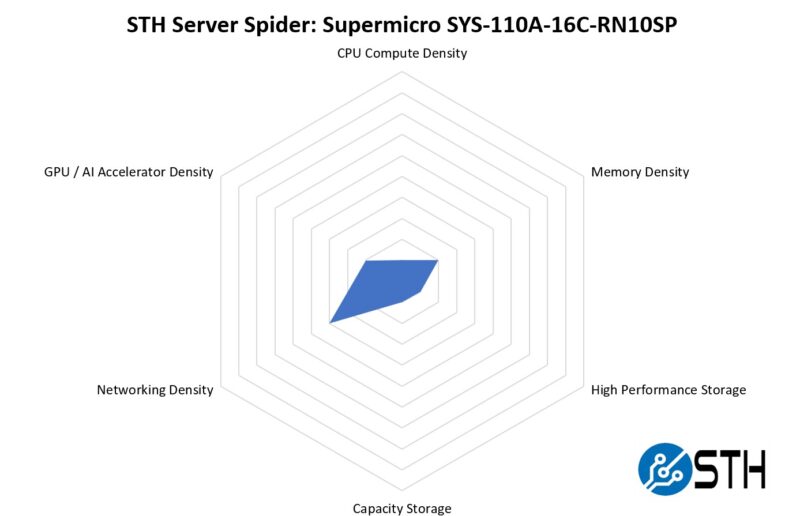

STH Server Spider: Supermicro SYS-110A-16C-RN10SP

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

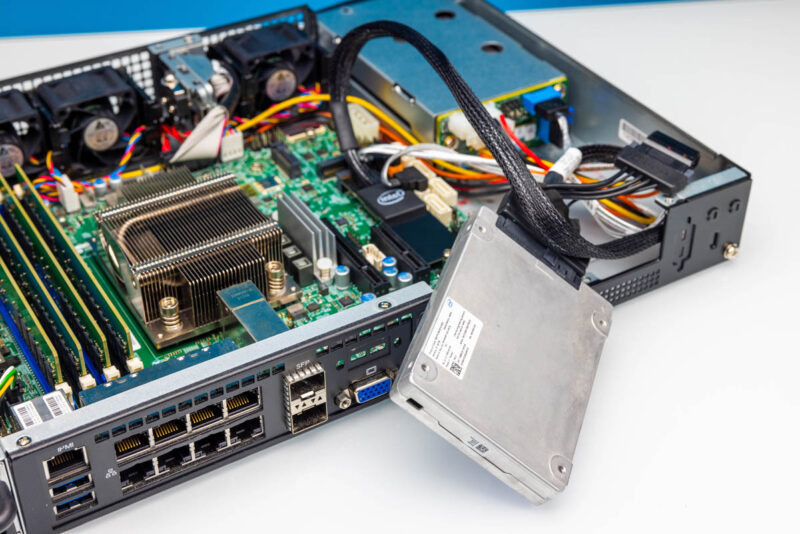

This is not meant to be the densest CPU, memory, or storage system. At the same time, it has a decent amount of onboard networking, and we managed to get an NVIDIA T4 working in this platform, cooled by the chassis fan, so it can also add AI acceleration capabilities.

Key Lessons Learned: A Missed Opportunity

We felt like there was a substantial missed opportunity throughout our review of this system. At one point, we used the system in a Pelican Hardigg case strapped to the bed of the Cybertruck as a high-quality video capture server.

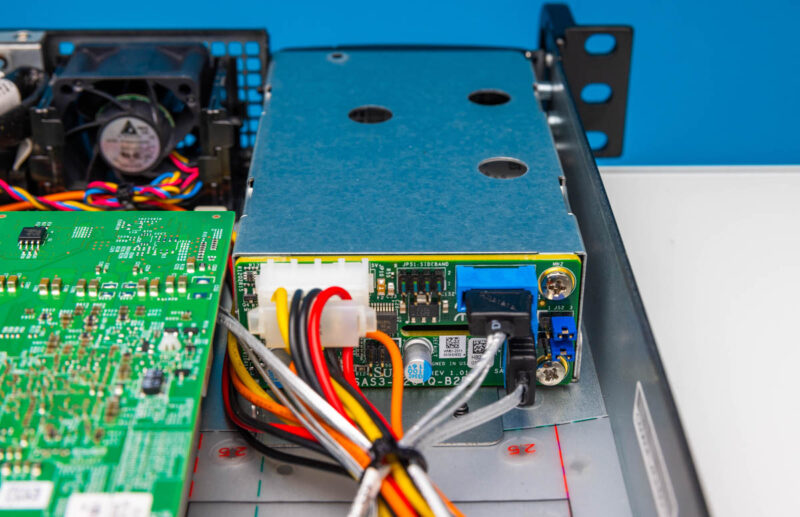

That is when the limitations of SATA storage were felt. Two SATA drive bays are OK, but it might have been a better use of space to allow for other storage options. It has been several years since SATA SSD vendors have tried to keep up with their NVMe offerings. For a hard drive, the 2.5″ hard drive capacity, density, and performance are nowhere near what modern NVMe drives offer. If this were a dual NVMe hot-swap bay with a fan behind it, it would be immensely more useful.

Instead, to get more storage, we had to rig an M.2 to U.2 adapter and velcro a drive into the system.

The net result was very cool since that gave us 61.44TB in such a compact server.

While this will most likely be used as a network appliance, with modern NVMe SSDs, it was two drive bays, a fan, and a few cables away from being able to also act as a useful storage platform.

Final Words

Overall, this is one of our favorite segments since we tend to get great performance bumps in each generation. Also, when we started reviewing the Intel Atom C2000 series platforms, and even a few before then, over a decade ago, 10GbE was a big deal that had to be done via add-in cards and 25GbE in an embedded edge platform would have been almost science fiction.

Still, with the march of technology, we now have a 16-core E-core processor that is roughly as fast as 16 cores worth of P-core processors from a decade ago and built-in networking.

These compact platforms are absolutely awesome, although the prospect of 2.5GbE and 100GbE-enabled future platforms has us excited about what this segment will bring.

For storage use, how about installing a PCIe 3.0 or higher SATA/SAS card in the riser slot, assuming it will fit? Use the onboard NVMe slots as the OS/cache drives.

If such a card has external connectors then you can connect to external storage chassis via appropriate cabling.

Init7 users in Switzerland will probably snap this up as it finally looks like a homelab type box that can route 25Gbps.

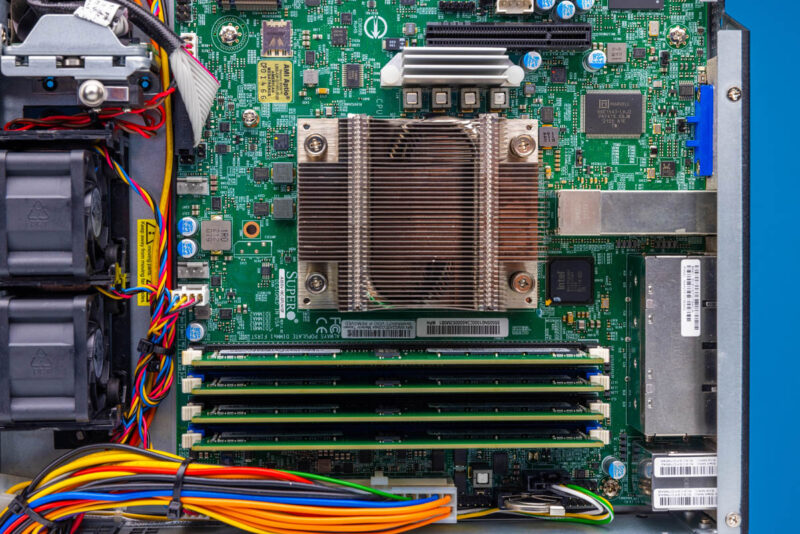

Interesting they’re still using wires for all the power distribution. All the Dell servers I’ve seen for years now route power on the PCB itself, and the PSUs, fans and drive bays all slot into connectors directly on the motherboard so there’s no need to route any power cables through tight spaces.

Not that there’s anything wrong with using standard connectors, it just seems like it would require more manufacturing labour and thus increased cost.

I love reading about all the cool new hardware, but how much is this thing? I’m guessing in the 2000 – 3000 dollar range, which is waaay over a normal “serving the home” budget I think. I really think these high end products should be separated to another website or something, because so many of the articles (while cool) are not serving the home anymore.

@owen I believe Patrick has answered that question many times in the future, the serve the “home” part is now way in the past. I understand that the name servethehome.com has a brand recognition thing attached to it and this is probably why they still use it, but I would have expected a rebrand of this website at this point in time to be honest. It would take some work but maybe they can refer to the old website name for a year or two while the new rebranded name is getting traction.

The past. Not future:-)

Malvineous that’s why Supermicro has so many SKUs and Dell has so few. They’ve got one motherboard in many form factors with wired power inputs. They’ll even keep the same case for generations. FlexATX you can get C3000, P5000, D-1500, D-2100, and there’s older C2000 I’d think. Then you can use mITX in FlexATX cases too. It’s like 100 different motherboard SKUs that can be fit into the same chassis. If you’re selling these as an OEM appliance you can get the same look and make models with different perf levels.

Owen I’ve been reading STH for years. I don’t understand that comment at all. They do lots of inexpensive gear. Lots of very expensive gear. I’d wager most here have homelabs because we use servers at work. That work gear in 2-3 years becomes homelab gear.

This is also a machine that homes with high-end internet like Korev is mentioning can use. If you’re in silicon valley there’s people spending $11,000 USD for 1m^2 on homes not even in the nicer areas or downtown San Francisco. If they’ve got fiber at a few hundred a month because US broadband prices are very high, then a $2000 machine as a firewall is not even a year’s worth of broadband service.

I’d also understand if you’re coming from a place like Albania, Rwanda, Alabama, or Argentina, where homes are cheap, but then they’re covering cheap gear too that’s more applicable. Homes aren’t just in those cheap places.

Owen the barebones cost is $1800 retail for this edge server. By no means is that high end. If you go and get a Cisco 4200 series firewall (only the 9000 series is above that by them) you are looking at $200,000 or more for one device. For a home this would be high end but that’s it.

@owen, I believe due to the nature of readers here, it serves home IT professionals. For many, this price suffices for homelab, small business and high end home clients. I will probably end up buying a D-1700 series myself….

For provisioning a homelab, it would be interesting what the minimum spend would be for a system that can effectively route 25 gigabit Ethernet. A further study on the effects of firewall rules and operating systems at that level of performance would also be useful.

The pictures and measured time to build a Python interpreter from source are also nice.

How does a Raspberry Pi with a 2.5 gigabit USB Ethernet dongle compare? What about those router style mini PCs with multiple built-in Ethernet ports? How does increasing the complexity of the firewall rules affect these cheaper solutions?

@Owen has a reasonable point, in that this is not what most would think of as a home server; however some of my friends do have racks in their basement, and for many the office has migrated to the home as well.

Personally I’m still satisfied with the HP MicroServer Gen 8 that I got for <£200 shipped, and upgraded to 12GB RAM and an i5-3470T once it was cheaper to do so. But I find this site useful for reviews of equipment I may lease in 3-6 years' time, or possibly get second-hand.

As for a new name, may I suggest ServeTheOffice?

You know, it’d be interesting to see a series on 10+ Gbps office/lab/home routing options with performance tests. What can you really push through a Snow Ridge CPU running pfsense? What about VyOS? TNSR? How do N100s/Xeon D/Ryzen 7xxx CPUs compare?

I’m seeing it just today and felt that missed opportunity in the design. If they had rotated the board 180° so all the back ports faced the front (with only redundant power at the back), that could have been a pretty cool all-around 1U rackable multirole appliance, and with a couple of 2.5″ U.2 compatible bays, oooofff.

Eric Olson: For provisioning a homelab, it would be interesting what the minimum spend would be for a system that can effectively route 25 gigabit Ethernet.

See https://michael.stapelberg.ch/posts/2021-07-10-linux-25gbit-internet-router-pc-build/ for a good example – there are other interesting 25Gb internet posts there too

I love these short-depth supermicro servers. I’ve got a stack of them.

Those air shrouds are always mylar in this supermicro form factor. I don’t mind it. I’ve even copied the approach to make my own shrouds when building short-depth 1U servers.

@Scott Laird: I think this is a brilliant idea. Seeing how these e-cores, p-cores etc. and even older “TinyMiniMicro” devices translate into real world networking performance.