Power Consumption

The redundant power supplies in the server are 500W 80Plus Platinum rated units. Most power supplies we see in this class of server are now 80Plus Platinum rated. Almost none are 80Plus Gold, and a few are now Titanium rated.

For this, we wanted to get some sense of how much power the system is using with an Intel Xeon Gold 6240R CPU, 9x SSDs, a 25GbE NIC, and a RAID controller.

- Idle: 0.106kW

- STH 70% CPU Load: 0.284kW

- 100% Load: 0.338kW

- Maximum Recorded: 0.375kW

There is room to expand the configuration further, which could push these numbers above what we measured, but the 500W PSU seems reasonable. We are also starting to use kW instead of W as our base unit for reporting power consumption. This is a forward-looking change since we are planning for higher TDP processors in the near future.
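To put the 500W PSU choice in perspective, here is a rough headroom check on the measured figures. It is a sketch under stated assumptions: it assumes 1+1 redundancy, so a single 500W unit must be able to carry the whole load, and it treats the PDU readings (wall-side AC input) as the load. Because the PSU rating refers to its DC output, this slightly overstates utilization, which errs on the conservative side. The names `psu_utilization` and `headroom_w` are illustrative only.

```python
# Rough PSU headroom check for the measured power figures.
# Assumption: 1+1 redundancy, so one 500W PSU must carry the entire load.
PSU_RATED_KW = 0.500

# Wall-side readings from the 208V PDU, in kW (figures from the review).
MEASUREMENTS_KW = {
    "Idle": 0.106,
    "STH 70% CPU Load": 0.284,
    "100% Load": 0.338,
    "Maximum Recorded": 0.375,
}


def psu_utilization(load_kw: float, rated_kw: float = PSU_RATED_KW) -> float:
    """Return the load as a fraction of one PSU's rated capacity."""
    return load_kw / rated_kw


def headroom_w(load_kw: float, rated_kw: float = PSU_RATED_KW) -> float:
    """Return the remaining capacity of one PSU, in watts."""
    return (rated_kw - load_kw) * 1000.0


if __name__ == "__main__":
    for label, kw in MEASUREMENTS_KW.items():
        print(f"{label:<18} {kw * 1000:4.0f} W  "
              f"{psu_utilization(kw):6.1%} of one PSU  "
              f"{headroom_w(kw):4.0f} W headroom")
```

By this estimate, the maximum recorded draw of 0.375kW is 75% of a single PSU's rating, leaving roughly 125W for additional drives or expansion cards.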

Note that these results were taken using a 208V Schneider Electric / APC PDU at 17.5C and 71% RH. Our testing window shown here had a +/- 0.3C and +/- 2% RH variance.

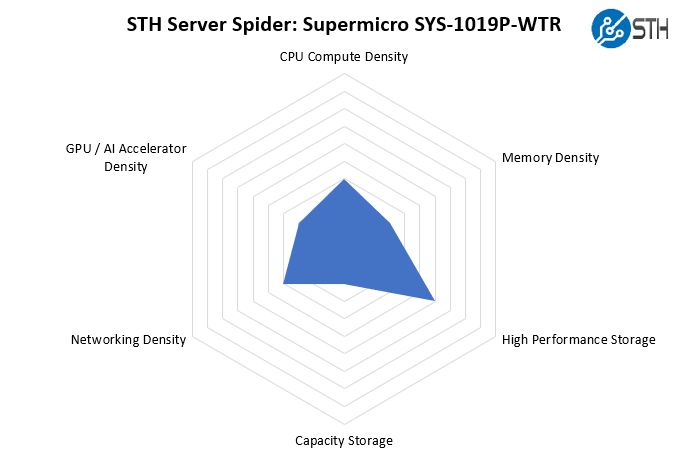

STH Server Spider: Supermicro SYS-1019P-WTR

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Here we see the Supermicro SYS-1019P-WTR providing a lot of different types of functionality. As a single-socket 1U and relatively short-depth server, it is not going to reset density expectations. At the same time, it packs a lot of flexibility into this small chassis.

Final Words

The allure of the Supermicro SYS-1019P-WTR is fairly simple. It is a compact platform at only 23.5″ deep, which makes it suitable for a wide variety of racks. It is inexpensive, with a barebones street price of $1350-1400. Configurations span a solid range, from ultra-low-cost builds to some intriguing storage and expansion card options. With a single CPU and built-in 10GBASE-T networking via a Lewisburg PCH, the cost of a configured system is reduced further.

There are a few small details we would like to see updated in future X12 revisions. For example, it would be nice to have larger barcodes/printouts for the IPMI MAC and default password, and to have a label near the rear I/O. Also, many of Supermicro’s competitors now have service guides printed on the chassis or on the inside of the top cover. It would be nice to see Supermicro adopt that practice as well. Supermicro does have quick reference guides and manuals, but it helps to have this information without requiring an external reference. Finally, we would like to see a further push toward tool-less servicing to make those exercises faster.

While one may immediately ask “why not EPYC” for single-socket platforms like this, this type of configuration makes a lot of sense outside of the basic Xeon v. EPYC scalability discussions. Xeon motherboards can cost less to produce. Intel also has lower-end Xeon Silver/Bronze CPUs that reach price points AMD is not currently matching. Also, some simply prefer using Xeons. That is not to say there is no competition there; it is just that this system has a specific place.

Overall, this is a surprisingly good platform. In years past, this would have been an absolutely perfect web hosting platform for STH to build upon. We have since grown into larger systems, but having experienced that growth, we completely understand the appeal of a system like the SYS-1019P-WTR. It may not be the perfect platform for every application, but if you are looking for a compact Intel Xeon Scalable single-socket server, the Supermicro SYS-1019P-WTR has a lot to offer.

One thing I’d like you to focus more on is field serviceability. Since you mention the continued lack of a service guide and the small-print SN labels, I do not understand the 9.1 design grade. Why a server gets an ‘aesthetics’ grade at all is another point of contention.

Regarding the field serviceability and what I will file under design (in general, not necessarily true for this review):

– Are the PCIe slots tool-less?

– Are the fan connectors on the motherboard and easily reachable with the fan cables, or do the fans slot into connectors directly?

– Are cable guides available at all?

– Are cable arms available, and if so, do they stay in place without drooping too much, and how easy are they to use? Do they require assembly, maybe even with tools?

– How easy are the rails to install (both on the server and in the rack)?

– Are the hard drive caddies tool-less and are they easily slotted in?

– Even seemingly mundane things like PSU and HDD numbering or the lack thereof

All of these cost time and money before and during deployment, and, in my experience, this is an area where Supermicro sorely needs improvement. This is also not to say everything is rosy with their direct or indirect competitors; I’ve seen both good and questionable design decisions from HPE, Dell, IBM / Lenovo, Fujitsu, Inspur, Blackcore, Ciara and Asus.

Still, thanks for the in-depth review.

To confirm – did the server barebones ship with the rackmount rail kit? I can’t see mention of it either on the product page at Supermicro’s website or in the review.

Thank you.