Every so often a product comes into our lab that makes us wish we had waited another few months. The Supermicro SuperStorage SSG-5029P-E1CTR12L is a 2U server that left us feeling that way. Several months ago, we were building our latest ZFS storage array and ended up using two chassis to get the right mix of features. The Supermicro SSG-5029P-E1CTR12L is all that we really needed, and it shows off what the Intel Xeon Scalable platform can do, even in a single-socket configuration.

Key stats for the Supermicro SuperStorage SSG-5029P-E1CTR12L: it is a 2U storage server with 12x 3.5″ SAS3/SATA III bays in the front and 2x U.2 NVMe SSD hot-swap bays in the rear. It uses a single Intel Xeon Silver CPU and can handle up to 192GB of RAM in 8 DIMM slots.

Test Configuration

For this review, we had the opportunity to try a number of different test configurations.

- System: Supermicro SuperStorage SSG-5029P-E1CTR12L with 2x 2.5″ NVMe hot swap rear option

- CPUs Tested: Intel Xeon Gold 6132, Intel Xeon Silver 4108, Intel Xeon Bronze 3104

- RAM: 6x 16GB DDR4-2666 RDIMMs

- NVMe SSDs: Intel DC P3700 400GB, Intel DC P3520 2TB

- HDDs: 12x 10TB HGST He10 7200RPM

- Additional NIC: Mellanox ConnectX-3 EN Pro 40GbE

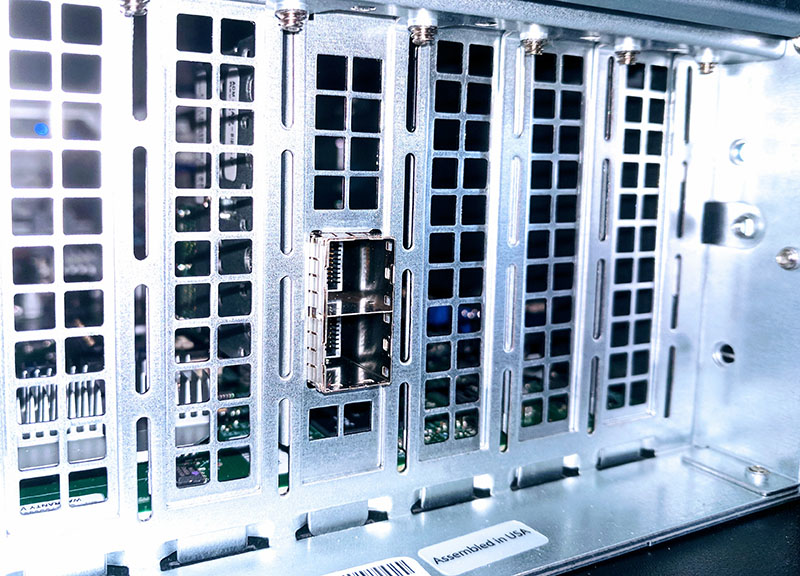

Our test configuration also had dual SFF-8644 connectors providing eight SAS3 ports of rear connectivity, which can be used to hook up additional disk shelves. Using an SFF-8644 to SFF-8088 cable, we connected the system to our Supermicro SC847E26-R1K28JBOD 45-bay JBOD disk shelf.

Supermicro SuperStorage SSG-5029P-E1CTR12L Overview

From the front, the Supermicro SuperStorage SSG-5029P-E1CTR12L blends in with years of previous-generation 3.5″ servers. It uses the same 3.5″ drive trays as previous generations, and there are 12x internal 3.5″ drive bays to work with. These 12 drive bays are connected to a SAS3 expander on the backplane.

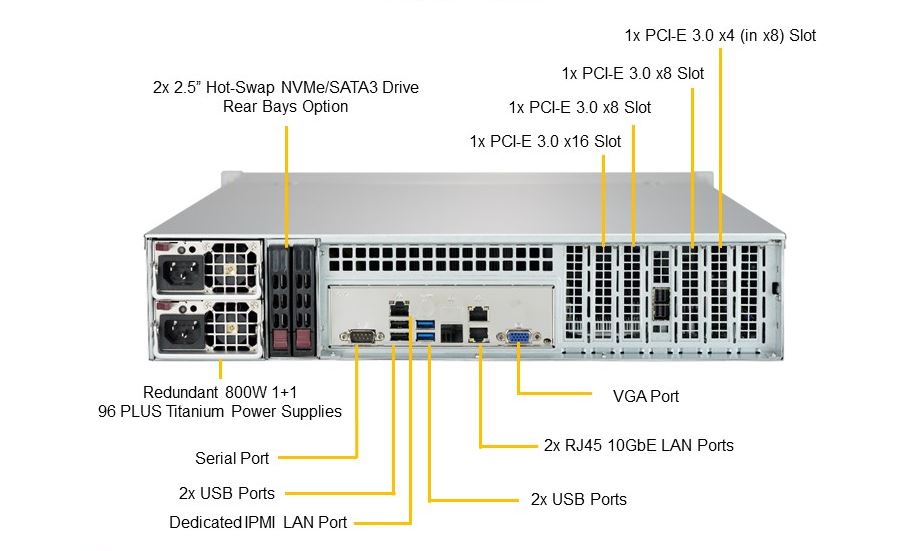

The rear of the unit is substantially more interesting. Here is the stock image going through all of the features, including the 10GBase-T networking.

The server sports redundant hot-swap 800W 80Plus Titanium power supplies, which carry one of the highest efficiency ratings on the market. There are a pair of USB 2.0 and 3.0 ports along with legacy serial and VGA for local management. For remote management, there is an IPMI port.

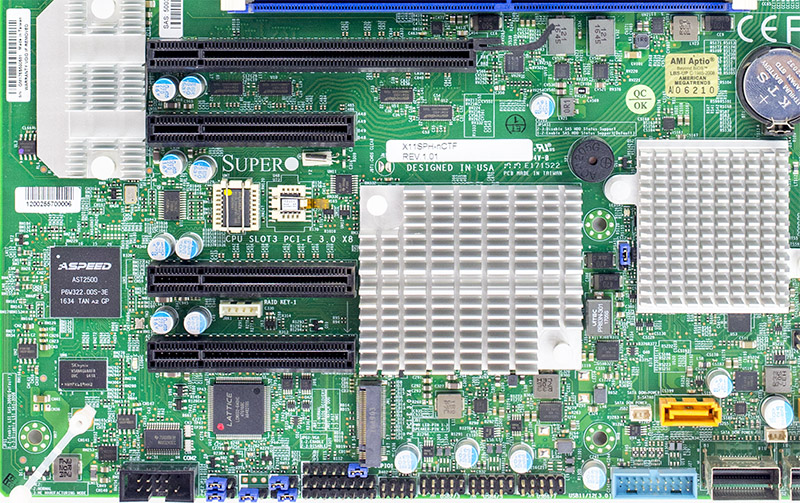

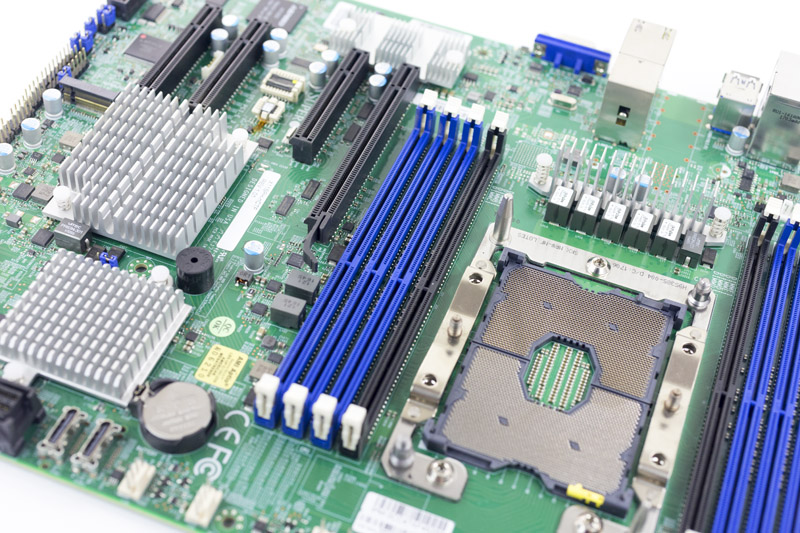

Powering the server is the Supermicro X11SPH-nCTF motherboard, which we reviewed previously. You can read our Supermicro X11SPH-nCTF ATX Storage Motherboard Review for an in-depth look, but we wanted to highlight a few pertinent points here. The first is PCIe expansion. From the chassis, we know that this system accepts low-profile cards.

The key point is that there are four open PCIe 3.0 slots: one x16, two x8, and one x4 electrical in an x8 physical slot, which give the system ample expansion. New in the X11 generation is an M.2 slot for NVMe SSDs, a form factor that has become increasingly popular. Another eight PCIe lanes are dedicated to two OCuLink connectors that power the 2x 2.5″ U.2 hot-swap NVMe rear bays. Here is what ours looked like:

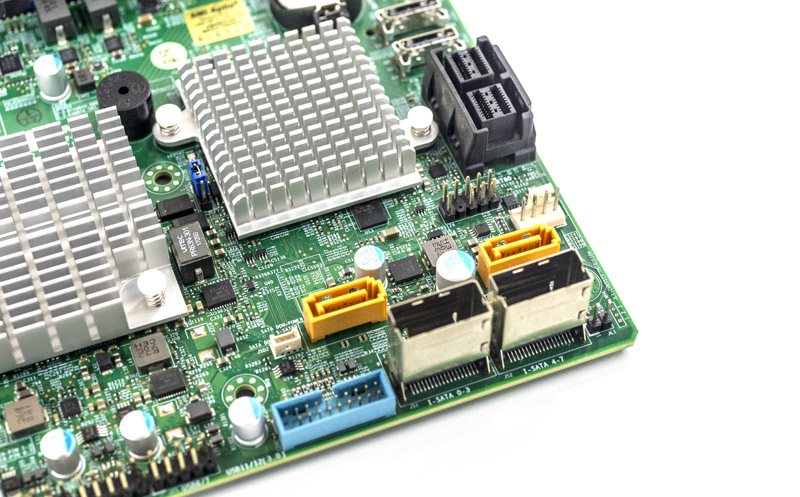

The real gem, of course, is the SAS3008 controller, which provides 8x SAS3 12Gbps ports for storage connectivity. The board also offers 8x onboard SATA III ports via SFF-8087 connectors, plus two orange Supermicro 7-pin SATA headers for SATA DOMs.

To put this into perspective, this server can have 12x 3.5″ drive bays via SAS3 plus shelves connected through the external SAS3 ports. One can then add two SATA DOMs and an M.2 NVMe drive without using a single expansion slot or the onboard SATA SFF-8087 ports. The rear SFF-8644 ports sit just above the “Assembled in USA” sticker.

By utilizing the SAS expander, Supermicro is effectively offering a total of 20x SAS3 ports (8x external and 12x for the 3.5″ bays) on this server.

For those upgrading from DDR3-generation Xeon E5 platforms, a single-socket Xeon Scalable system with DDR4 can provide more memory bandwidth than a dual-socket DDR3 system.
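To put rough numbers on that claim, here is a minimal Python sketch of theoretical peak memory bandwidth (channels × transfer rate × 8 bytes per 64-bit transfer). It assumes six DDR4 channels per Xeon Scalable socket, all populated as with the 6x 16GB DIMMs in our test configuration, and four DDR3 channels per Xeon E5 V1/V2 socket. The memory speeds are illustrative inputs, and these are peak figures, not measured throughput.

```python
# Theoretical peak memory bandwidth = sockets x channels x MT/s x 8 bytes.
# Assumptions (not measured): 6 DDR4 channels per Xeon Scalable socket,
# 4 DDR3 channels per Xeon E5 V1/V2 socket, 64-bit (8-byte) channels.
BYTES_PER_TRANSFER = 8


def peak_gbps(sockets: int, channels_per_socket: int, mts: int) -> float:
    """Return theoretical peak bandwidth in decimal GB/s."""
    return sockets * channels_per_socket * mts * BYTES_PER_TRANSFER / 1000


configs = {
    "1x Xeon Scalable, DDR4-2666": peak_gbps(1, 6, 2666),
    "1x Xeon Scalable, DDR4-2400": peak_gbps(1, 6, 2400),
    # Assumed top supported DDR3 speeds for E5 V2 and V1, respectively.
    "2x Xeon E5 V2, DDR3-1866": peak_gbps(2, 4, 1866),
    "2x Xeon E5 V1, DDR3-1600": peak_gbps(2, 4, 1600),
}

for name, bandwidth in configs.items():
    print(f"{name}: {bandwidth:.1f} GB/s")
```

With DDR4-2666, one socket (about 128 GB/s) edges out two DDR3-1866 sockets (about 119.4 GB/s). At DDR4-2400, the single socket (115.2 GB/s) still beats dual DDR3-1600 (102.4 GB/s) but falls slightly short of dual DDR3-1866, so the memory speed a given CPU supports matters.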

Beyond this, the platform provides dual 10GBase-T networking without an add-on NIC, leaving all four PCIe expansion slots free and making the hardware extremely versatile.

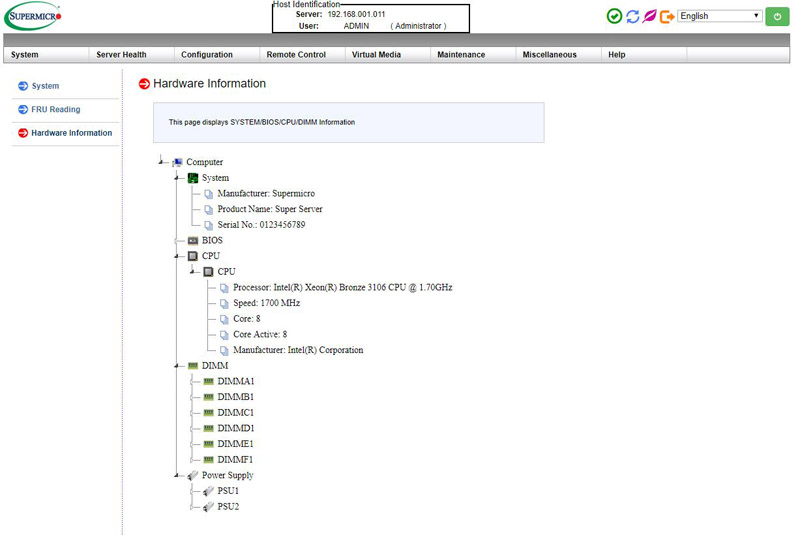

Supermicro Management

These days, out-of-band management is a standard feature on servers. Supermicro offers an industry-standard solution for traditional management, including a Web GUI. The company also supports the Redfish management standard.
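As an illustration of what Redfish support enables, here is a minimal Python sketch that lists the systems a BMC exposes. It relies only on the DMTF Redfish conventions of a `/redfish/v1/Systems` collection whose `Members` entries carry `@odata.id` links, plus HTTP Basic authentication. The BMC address and credentials are hypothetical, and which properties a given Supermicro firmware release populates is an assumption to verify.

```python
import base64
import json
import ssl
import urllib.request
from typing import List

REDFISH_SYSTEMS = "/redfish/v1/Systems"  # standard Redfish Systems collection path


def redfish_get(bmc_host: str, path: str, user: str, password: str) -> dict:
    """GET a Redfish resource over HTTPS with Basic auth and return the decoded JSON."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"https://{bmc_host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    # BMCs often ship self-signed certificates; skipping verification is for lab use only.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def member_paths(collection: dict) -> List[str]:
    """Extract the @odata.id links from a Redfish collection's Members array."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def list_systems(bmc_host: str, user: str, password: str) -> List[dict]:
    """Fetch every ComputerSystem resource the BMC advertises."""
    collection = redfish_get(bmc_host, REDFISH_SYSTEMS, user, password)
    return [redfish_get(bmc_host, p, user, password) for p in member_paths(collection)]
```

For example, `list_systems("192.0.2.10", "ADMIN", "changeme")` (hypothetical host and credentials) would return one dictionary per system, from which fields such as `PowerState` can be read if the firmware reports them.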

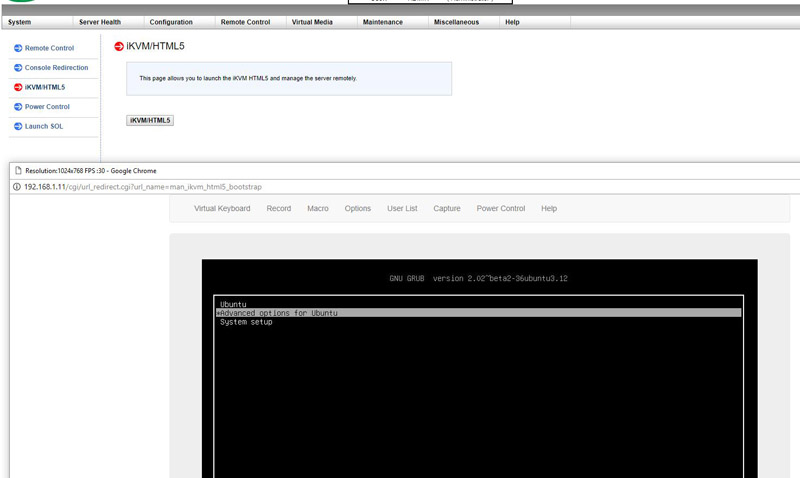

The latest generation of Supermicro IPMI includes an HTML5 iKVM, so one no longer needs a Java console to get remote KVM access to a server.

Currently, Supermicro lets users access Serial-over-LAN, Java, or HTML5 consoles from before a system is powered on all the way into the OS. That is an extremely popular feature, and other vendors such as HPE, Dell EMC, and Lenovo charge for it as part of a license upgrade (among other capabilities in their higher license levels). One can also perform BIOS updates using the Web GUI, but that feature does require a relatively low-cost license (around $20 street price). That is a feature we wish Supermicro would include with its systems across product lines.

At STH, we do all of our testing in remote data centers. Having the ability to remote console into the machines means we do not need to make trips to the data center to service the lab even if BIOS changes or manual OS installs are required.

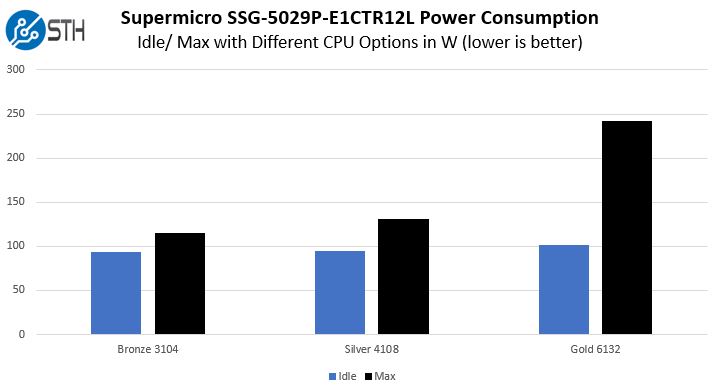

Power Consumption

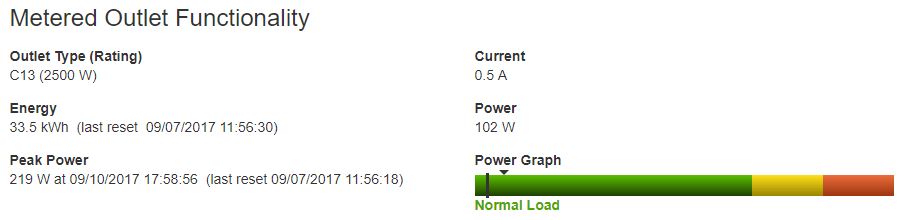

We tested our system on our 208V APC Schneider Electric metered PDUs in a data center at 17.8°C and 71% RH. We removed the hard drives, leaving only one Intel DC S3710 (to ensure the SAS3 controller and expander were operating) and one Intel DC P3520 2TB drive, to show how much the base system's power varies with the installed CPU. Our first set of numbers comes from the Intel Xeon Gold 6132.

The 102W idle figure is extremely good. For a similar configuration using dual-socket E5-2630 V1/V2 CPUs, we would have expected at least double that power consumption. The 219W maximum was recorded during a 100% CPU utilization workload. We did see an earlier AVX-512 peak of 242W, but that does not represent typical storage server power consumption. Still, those are great figures.

When you vary the CPU, you get some interesting results. We are showing idle and AVX-512 figures here (best and worst case) to give you a sense of how sensitive system power is to the choice of CPU, so you can budget power accordingly.

The Xeon Bronze 3104 is the absolute lowest-power and lowest-cost CPU you can put in this system. It carries an 85W TDP, but that rating is extremely generous; these CPUs sip power. We expect the Intel Xeon Silver line to be popular for storage servers. The step from the Intel Xeon Bronze to the Xeon Silver 4108 is a large one in terms of performance but not in price. Adding 12x 7200rpm hard drives will increase power consumption, but it will remain well below what a single one of the redundant 80Plus Titanium power supplies can handle.
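For budgeting, measured wall power can be turned into circuit current and power supply load with simple arithmetic. The minimal Python sketch below uses the 208V PDU, the 242W AVX-512 peak, and the 800W power supply rating from this review. The per-drive wattage is a hypothetical placeholder to replace with the HDD datasheet value, and the default power factor of 1 is a simplifying assumption.

```python
PDU_VOLTS = 208.0     # PDU voltage used in our testing
PSU_RATING_W = 800.0  # rating of each redundant power supply


def circuit_amps(watts: float, volts: float = PDU_VOLTS, power_factor: float = 1.0) -> float:
    """Current drawn from the circuit; a power factor below 1 raises the current."""
    return watts / (volts * power_factor)


def single_psu_load(watts: float, rating: float = PSU_RATING_W) -> float:
    """Fraction of one PSU's rating. With redundant PSUs, one unit must be able to
    carry the whole load alone. Wall (input) watts exceed the PSU's DC output,
    so this ratio errs on the conservative side."""
    return watts / rating


def with_drives(base_watts: float, drives: int, watts_per_drive: float) -> float:
    """Add drive power to a measured base-system figure."""
    return base_watts + drives * watts_per_drive


# 242W AVX-512 peak (Xeon Gold 6132) plus 12 drives at a HYPOTHETICAL 10W each.
budget = with_drives(242.0, 12, 10.0)
print(f"{budget:.0f} W, {circuit_amps(budget):.2f} A at 208V, "
      f"{single_psu_load(budget):.0%} of one PSU")
```

Under these assumptions, even the worst-case CPU figure plus a generous drive allowance stays under half of a single 800W supply.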

Performance

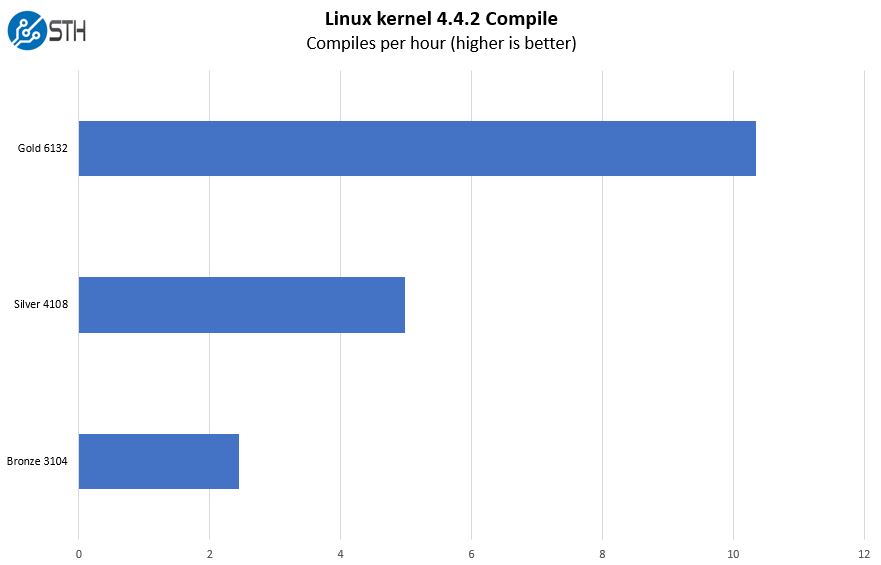

Since we are early in the cycle of the new Intel Xeon Scalable platform, we wanted to provide some measure of how performance scales across the CPUs you could use. Storage performance will largely depend on the drives and software configuration in the system. Because clustered storage rebuilds can require a surprising amount of CPU grunt, we wanted to focus instead on something applicable to everyone: CPU performance, and more specifically, which CPU you should equip in the new server. The chart above shows results from our Linux Kernel compile benchmark.

As you can see, moving up the stack produces significantly better performance. With the new Intel Xeon Scalable series, you can customize the server with different CPU options to meet performance needs. Oftentimes, a single Intel Xeon Scalable CPU can deliver the performance of a dual-socket Intel Xeon E5 V1/V2 system.
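For readers who want to run a comparable test on their own CPU options, here is a minimal Python sketch that times a parallel kernel build. It is not STH's benchmark harness. It assumes a Linux kernel source tree that has already been configured (for example with `make defconfig`), and the source path passed on the command line is up to you.

```python
import os
import subprocess
import sys
import time
from typing import List, Optional


def build_command(jobs: Optional[int] = None) -> List[str]:
    """Return `make -jN`, defaulting N to the number of logical CPUs."""
    n = jobs or os.cpu_count() or 1
    return ["make", f"-j{n}"]


def time_command(cmd: List[str], cwd: Optional[str] = None) -> float:
    """Run a command to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, cwd=cwd, check=True,
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def relative_speed(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate finished than the baseline."""
    return baseline_s / candidate_s


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python time_build.py /path/to/configured/linux-tree
    elapsed = time_command(build_command(), cwd=sys.argv[1])
    print(f"kernel build: {elapsed:.1f} s")
```

Run `make clean` between runs so each CPU configuration builds the same work from scratch; `relative_speed` then expresses each result as a multiple of a baseline such as the Xeon Bronze 3104.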

Final Words

This is a right-sized system to handle storage tasks for small to medium-sized arrays. It would likewise make a great Ceph node, with disks for storage and NVMe SSDs for caching. One has the option to customize the server with more PCIe connectivity than previous generations offered. One can also use Xeon Gold for higher performance and RAS features, or Bronze and Silver for lower-power operation and lower costs.

Nice box! Put 5 in my Q4 budget with Silver 4114s for a Ceph cluster. Wish it used SFP+, not Base-T, since Base-T is such a power hog. I’d add 40G anyway, so it doesn’t really matter.

“In the latest generation of Supermciro IPMI is a HTML5 iKVM. One no longer needs to use a Java console to get remote KVM access to their server.”

THANK GOODNESS! only 5 years overdue

ube109, the X11SPH-nCTPF is the same board but with SFP+ instead of copper.

https://www.supermicro.com/products/motherboard/Xeon/C620/X11SPH-nCTPF.cfm

“The Supermicro SuperStorage SSG-5029P-E1CTR12L us a 2U server” . “us a 2U server”? should be “is”.

Great article…too bad they haven’t updated their chassis design in a while…wish they had something more like DELL’s chassis.

@Evan Richardson:

What are you missing from the Design compared to Dell?

Nice article!! I’m going to buy one of these for my home server. Could you do some noise tests? I’m going to have it in a rack next to my work table.

Thanks!!

Apart from the Dell server, they need to bring the look of their servers up to date; they are ugly. Look at companies like Gooxi and how their servers look, light years ahead of Supermicro.