Supermicro sent over two SuperBlade SBE-710Q-R90 chassis for review along with six GPU compute nodes. We were able to test both inter- and intra-chassis networking as well as two generations of GPU blades: Broadwell-EP and Haswell-EP. We are splitting this review into several parts, and this part focuses on the blade chassis itself and an overview of its capabilities. The Supermicro SuperBlade 10-node systems we have been testing are a great way to consolidate servers onto higher-density platforms. In our performance section, you can see how each 7U SuperBlade GPU system can replace a rack or two of legacy compute and networking gear. That is only the tip of the iceberg, as Supermicro offers even higher-density solutions.

Supermicro SuperBlade Overview and SBE-710Q-R90 Chassis

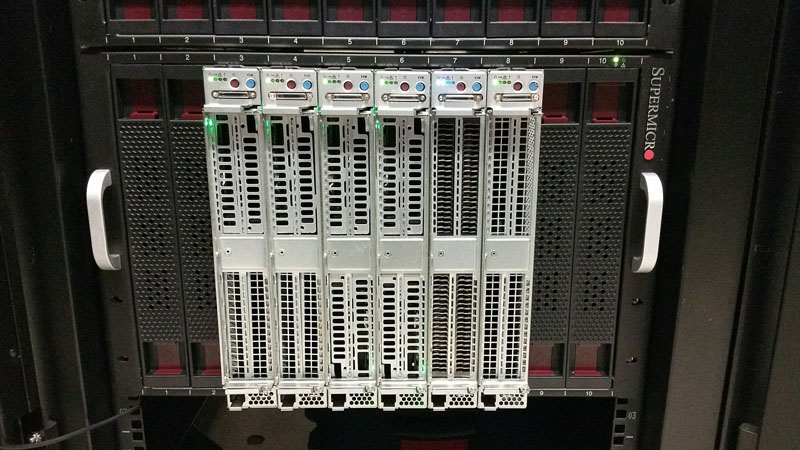

The Supermicro SuperBlade SBE-710Q-R90 chassis is a 10-blade chassis designed for higher-configuration blades (i.e. not simple dual-processor blades). Each blade slot is numbered 1 to 10 from left to right. These ten slots can hold either blades or blanks (spots 1, 2, 9 and 10 below) to improve airflow. A key value proposition of a blade chassis is that one can start with a partially full chassis and add nodes at a later date. We had two generations of blades, but we were told Sandy Bridge and Ivy Bridge generation SuperBlade chassis can accept the new Haswell and Broadwell generation blades. As a result, these blade chassis are meant to be installed once and work across generations of processors, allowing for easy expansion and upgrades.

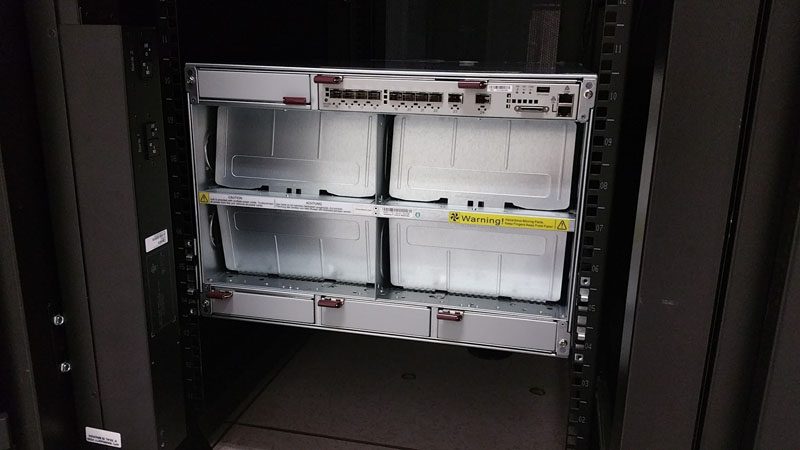

In the rear of the chassis, we see the 10GbE switch installed along with four slots for external chassis management modules and/or additional networking modules. Our configuration had the base 10GbE networking (other options offer higher-speed networking such as QDR/FDR InfiniBand), and there are options to add 1GbE networking modules as well.

The four large doors are bays for the massive 3kW power supplies that provide up to 12,000W of raw power to the Supermicro SBE-710Q-R90 chassis (or 9kW in N+1 configurations).
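As a quick check on those capacity figures, here is a minimal Python sketch of the arithmetic. The function name `usable_capacity_w` and its parameters are our own illustration and do not come from any Supermicro tooling.

```python
def usable_capacity_w(psu_count: int, psu_watts: int, reserved: int = 0) -> int:
    """Return the wattage available when `reserved` PSUs are held back for redundancy."""
    if not 0 <= reserved < psu_count:
        raise ValueError("reserved must be between 0 and psu_count - 1")
    return (psu_count - reserved) * psu_watts


# Figures from the text: four 3kW supplies in the SBE-710Q-R90.
raw_w = usable_capacity_w(4, 3000)                    # all four supplies counted
n_plus_1_w = usable_capacity_w(4, 3000, reserved=1)   # one supply held in reserve

print(f"raw: {raw_w} W, N+1: {n_plus_1_w} W")
```

Holding one supply in reserve is what takes the chassis from 12kW raw to 9kW usable.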

Here is an example of the 3kW SuperBlade PSU versus a Supermicro 1kW 80Plus Titanium power supply. These units are very large as they provide power and cooling for up to 10 servers.

Here is a view of the Supermicro SBE-710Q-R90 chassis with all four power supplies installed. As one can see, these PSUs and associated fans cover a large portion of the rear of the unit. As one can imagine, the high-quality Delta fans can move a lot of air.

As we were setting up the Supermicro SuperBlade servers, we validated that they would stay operational even with only one power cable active. This is useful both in power failure scenarios and in scenarios where you need to swap rack power distribution units. Note that, as will be discussed later, we kept loads under 3kW while doing this testing so as not to overload a PSU.

The SuperBlade chassis greatly reduces cabling. One could easily manage these systems with just six or seven cables installed: 1x RJ-45 1GbE cable for out-of-band management, 1-2 SFP+ cables for networking (quite common in compute clusters that do not need extreme bandwidth) and 4x power supply cables. This setup provides roughly the equivalent of many more cables than if you were using individual nodes. Here is a rough breakdown of what these 7 SuperBlade cables replace:

- 11x out of band management cables (1 for switch and 10 for nodes)

- 12x SFP+ connections – (10 nodes and 2 uplinks)

- 22x PSU cables (20 for nodes and 2 for switch)

- Total: 45 cables

That means one is able to use 7 cables, or 38 fewer cables, using the SuperBlade platform versus standard nodes and switches. The unit can also consolidate further, as one could greatly expand this number with 1GbE switches and an additional 10GbE switch.

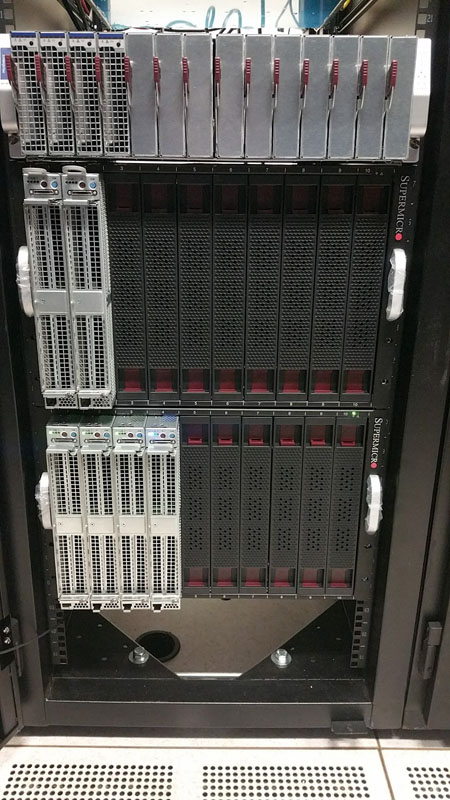

We had a number of chassis to use in our testing. The top chassis in these pictures is a Supermicro 3U MicroBlade chassis which will be the subject of a subsequent review.

The major benefit of these chassis is that once the chassis is installed in a rack, it is extremely easy to expand to more nodes, add hot-swap switches, upgrade or replace power supplies, upgrade nodes, perform maintenance on the nodes and so on. Compared to standard 1U/2U servers, blade chassis are very easy to maintain. We manage racks of gear in the lab, including many standard form factor servers and networking switches. From a maintenance perspective, the SuperBlade form factor is far easier to maintain.

The SuperBlade platform is also dense. We had perhaps the lowest-density SuperBlade variant (Supermicro makes 14-blade 7U chassis and Twin node blades), but we could still fit 10 server nodes with two processors each and 2 GPU cards per node. Supermicro offers other options, but compared to using 10x 1U nodes with a 1U external switch (11U in total versus 7U), this saves about 36% of the rack space. If one were to add additional 1GbE and additional high-speed networking switches, this consolidation ratio would only go up.
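The space saving follows from comparing one 7U chassis against ten 1U nodes plus a 1U switch. A small sketch of that calculation is below; the function `rack_space_saved` and its default arguments are hypothetical names we chose for illustration.

```python
def rack_space_saved(chassis_u: int, node_count: int,
                     node_u: int = 1, switch_u: int = 1) -> float:
    """Fraction of rack units saved by one blade chassis versus discrete nodes plus a switch."""
    discrete_u = node_count * node_u + switch_u  # rack units used by standalone gear
    return 1 - chassis_u / discrete_u


# 7U SuperBlade chassis versus 10x 1U nodes and one 1U external switch (11U).
saved = rack_space_saved(chassis_u=7, node_count=10)
print(f"{saved:.0%} of rack space saved")
```

Passing a larger `switch_u` models the case where additional external switches would otherwise be needed, which pushes the saving higher.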

Test Configuration

This is a longer configuration than we usually have simply because there is so much going on in this chassis. The temperature in the datacenter ranged between 20.9C and 21.1C during testing with relative humidity at 41%. We use 208V power in the STH colocation lab.

SuperBlade Chassis and Components

- Chassis: Supermicro SuperBlade SBE-710Q-R90 chassis

- Power Supplies: 4x 3000W PSUs

- Switch and CMM: Supermicro SBM-XEM-X10SM 10GbE switch

SuperBlade Configurations

4x Broadwell-EP BI-7128RG-X Blades

- Supermicro B10DRG motherboard

- 2x Intel Xeon E5-2698 V4 CPUs

- 128GB DDR4-2400 (8x 16GB RDIMMs)

- 1x 32GB Supermicro SATA DOM

- 1x Intel DC S3700 400GB

- 2x NVIDIA GRID M40 GPUs (4x Maxwell GPUs and 16GB RAM per card)

2x Haswell-EP BI-7128RG-X Blades

- Supermicro B10DRG motherboard

- 2x Intel Xeon E5-2690 V3 CPUs

- 128GB DDR4-2133 (8x 16GB RDIMMs)

- 1x 32GB Supermicro SATA DOM

- 1x Intel DC S3700 400GB

- 2x NVIDIA GRID M40 GPUs (4x Maxwell GPUs and 16GB RAM per card)

Note we did swap components for different tests over the past few weeks. These are the configurations we utilized for power consumption data. You will notice we took pictures during the process as we were testing different GPU configurations.

A few notes on Power Consumption

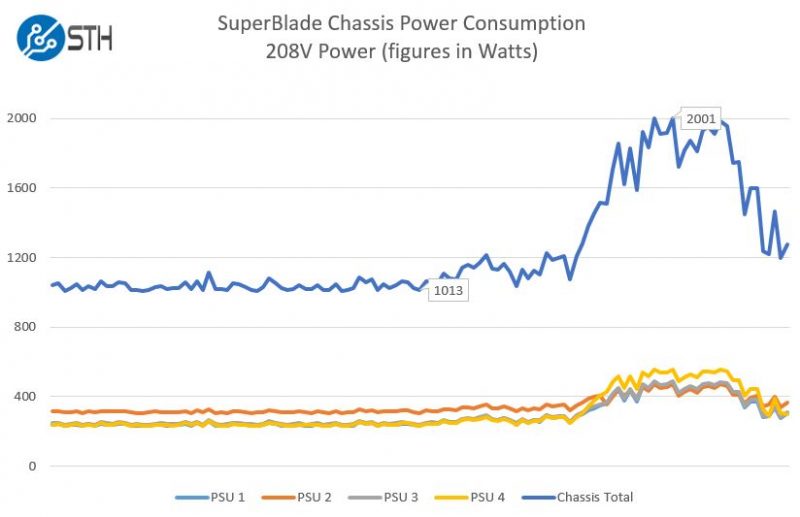

One of the big questions we get with shared chassis enclosures is exactly how much power they use and save. With shared chassis, like the Supermicro SuperBlade, the power and cooling duties are shared. We attempted to take a few views of the power consumption at 1 minute intervals for each outlet on our Schneider Electric / APC PDUs. We also had the opportunity to test with one bare chassis to get some baseline data. We did not get to test the SuperBlade chassis in anywhere near a fully populated scenario.

SuperBlade chassis without blades

We had the opportunity to test the Supermicro SBE-710Q-R90 chassis with a SBM-XEM-X10SM 10GbE switch, integrated chassis management module (CMM) and 4x 3000W power supplies. This configuration has no blades, so the fans run at minimal speed. We did this just to measure the power consumption of the power, cooling, management and networking components.

Without blades powered up, we see the unit drew around 341W. That is quite good, especially as the 10GbE switch had 10x 3m SFP+ DACs installed. This load is also far below the efficient range of the power supplies. While we had 12,000W of total power supply capacity, we were only using about 2.8% of it for the switch, CMM, fans and power supplies. At 2.8% loading, the power supplies are operating well below the load levels at which their efficiency is rated. Fans were also set to automatic control, so they were running at their lowest speeds in this test.

SuperBlade VMware idle and sample CPU loading

We ran a quick test to transition from ESXi idle to CPU loading on four of the six nodes. On this test we had two E5-2690 V3 Haswell-EP nodes each with 2x NVIDIA GRID M40 cards (4 GPUs and 16GB RAM per card) mostly idle running VMware ESXi at around 5% CPU utilization. We then simultaneously loaded benchmarking scripts on the four dual Intel Xeon E5-2698 V4 nodes. Each node had dual Intel Xeon E5-2698 V4 chips in VMs where we assigned all of the CPU resources, one VM per CPU. These are the latest Broadwell-EP parts with 20 cores and 40 threads per chip. We set the GPUs in the four CPU benchmark nodes to This produced a nice spike with all 160 cores in those four nodes running simultaneously.

These results are phenomenal. We had around 1kW of idle power consumption and added about 1kW more when we loaded the 160 Broadwell-EP cores/320 threads on the four nodes. Given what we have seen from the E5-2698 V4 in single-server configurations, we are starting to see power supply efficiency rise with load, which is why the delta was lower than we expected as we loaded machines. Even with these four nodes running non-AVX CPU loads, we were still only at around 16.7% utilization of the power supplies, which is extremely low.
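For readers who want to reproduce the utilization percentages, the sketch below divides the measured draw by the 12,000W of installed PSU capacity. The loaded figure is taken as roughly 1kW idle plus roughly 1kW of added load, which is an approximation of the numbers above rather than an exact reading; the function name is our own.

```python
TOTAL_PSU_CAPACITY_W = 4 * 3000  # four 3kW supplies installed in the chassis


def psu_utilization(load_w: float, capacity_w: float = TOTAL_PSU_CAPACITY_W) -> float:
    """Return the fraction of installed PSU capacity in use at `load_w` watts."""
    return load_w / capacity_w


bare_chassis = psu_utilization(341)        # chassis, switch, CMM and fans only
cpu_loaded = psu_utilization(1000 + 1000)  # approx. idle draw plus approx. added CPU load

print(f"bare chassis: {bare_chassis:.1%}")
print(f"CPU loaded:   {cpu_loaded:.1%}")
```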

SuperBlade Power Consumption with GPUs

We will have additional numbers including GPU results when new GPUs arrive from NVIDIA. We received a request from the Supermicro SuperBlade team to publish the interim review prior to receiving the new GPUs for testing. In this case we are honoring the request due to delays in getting appropriate cards from NVIDIA.

Additional power testing notes

For those unfamiliar with multi-PSU power consumption results, seeing one PSU draw more power than the others is expected. This behavior exists in redundant PSU setups across manufacturers. Most hardware review sites cannot log per-PSU differences, so this is not a widely known fact. One should take this slightly uneven loading into account when doing power planning.

Also, we tried a very scary scenario where three of the four power supplies failed (i.e. were removed). We ran a set of tests similar to those above to see whether the chassis could stay powered through this extreme type of failure. We are happy to report the hot-swap redundant PSUs allowed the benchmark scripts to continue running without interruption while three PSUs were removed and then re-inserted. Our test was specifically designed to allow for this, as we used a load under a single PSU’s 3kW capacity.
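The reasoning behind that test design can be sketched as a simple headroom check: how many supplies can be lost before the remaining ones can no longer cover the load. The function `max_psu_failures` is a hypothetical helper, and the 2000W load is an assumed illustrative value; we only state above that the load was under 3kW. The sketch also ignores the uneven per-PSU loading mentioned earlier.

```python
import math


def max_psu_failures(load_w: float, psu_count: int, psu_watts: int) -> int:
    """Return how many PSUs can be lost while the rest still cover `load_w`."""
    needed = max(1, math.ceil(load_w / psu_watts))  # at least one PSU must remain
    if needed > psu_count:
        raise ValueError("load exceeds total installed PSU capacity")
    return psu_count - needed


# Assumed ~2kW load (illustrative), four 3kW supplies.
print(max_psu_failures(2000, psu_count=4, psu_watts=3000))
# A load just over one PSU's capacity tolerates one fewer failure.
print(max_psu_failures(3500, psu_count=4, psu_watts=3000))
```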

As we get new GPUs from NVIDIA, we will publish figures with the GPUs being loaded.

Final words

Managing the constantly changing hardware in our lab is difficult. Using the Supermicro SuperBlade platform, we quickly saw the benefits in terms of higher density, higher power supply efficiency, easier maintenance, significantly reduced cabling, and easier upgrades and expansion. We were impressed by how easy it was to use and manage the system. As a result, we will be looking into getting 1-2 SuperBlade platforms in the lab for our needs such as VDI nodes and load generation nodes.

In terms of management, networking, and the GPU blades themselves (including performance) we can direct you to the other parts of this review:

- Part 1: Supermicro SuperBlade System Review: Overview and Supermicro SBE-710Q-R90 Chassis

- Part 2: Supermicro SuperBlade System Review: Management

- Part 3: Supermicro SuperBlade System Review: 10GbE Networking

- Part 4: Supermicro SuperBlade System Review: The GPU SuperBlade

Check out the other parts of this review to explore more aspects of the system.