If you walk around colocation facilities, you are likely to see a Supermicro MicroBlade system laboring away in a rack. The Supermicro MicroBlade 3U system we have in our data center lab for review is half the size of the original 6U system. It holds up to 14 blades, each of which can house one to four nodes. In its densest configurations it can handle 56 nodes (quad-node Intel Atom C2750 blades) or up to 504 cores (dual-socket Intel Xeon E5 V4 blades).
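Both headline figures fall out of simple slot arithmetic. The sketch below assumes the 504-core figure comes from filling all 14 slots with dual-socket blades using the 18-core Xeon E5 V4 parts mentioned later in this review; the function names are our own, purely for illustration.

```python
# Slot arithmetic for the MicroBlade 3U chassis, using figures quoted in this review.
BLADE_SLOTS = 14  # blade slots in the 3U chassis


def max_nodes(nodes_per_blade: int, slots: int = BLADE_SLOTS) -> int:
    """Total nodes when every slot holds the same multi-node blade."""
    return slots * nodes_per_blade


def max_cores(sockets_per_blade: int, cores_per_socket: int,
              slots: int = BLADE_SLOTS) -> int:
    """Total physical cores when every slot holds the same blade."""
    return slots * sockets_per_blade * cores_per_socket


atom_nodes = max_nodes(nodes_per_blade=4)                         # quad-node Atom C2750
xeon_cores = max_cores(sockets_per_blade=2, cores_per_socket=18)  # dual 18-core Xeon E5 V4
print(atom_nodes, xeon_cores)  # 56 504
```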

The unit we have has four blades populated with a total of six Intel Xeon D-1541 nodes, which is far from a maximum configuration. Still, we can see why these systems are so popular. We looked at the Supermicro SuperBlade GPU platform a few weeks ago, and we are now giving the Supermicro MicroBlade system a thorough review. We also have an HPE Moonshot in the lab and will sprinkle in some comparisons, since the MicroBlade and Moonshot cover some of the same market segments.

Supermicro MicroBlade 3U System Overview

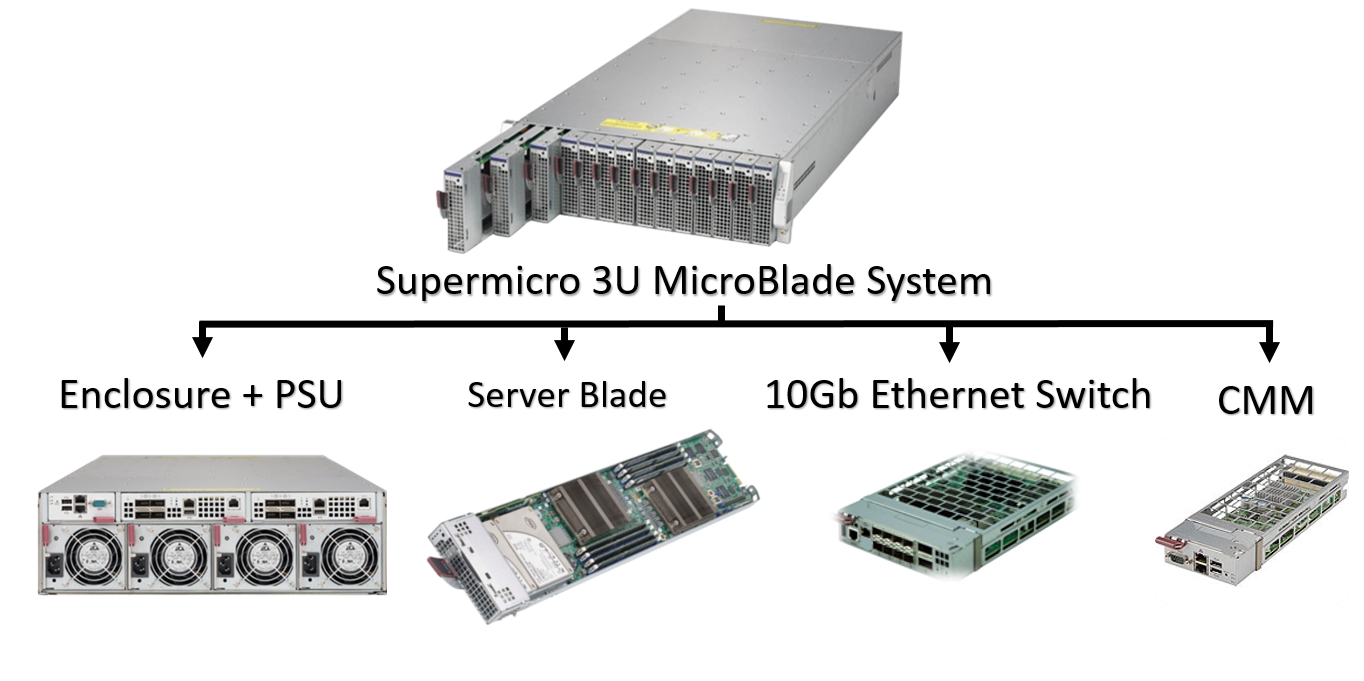

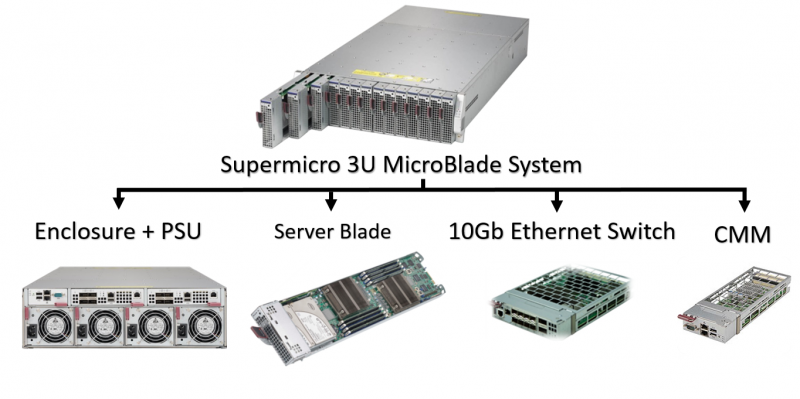

Traditional blade systems are very large, usually 6U or larger. The Supermicro MicroBlade, in contrast, comes in 6U and 3U versions. Our 3U unit has four power supplies, two high-speed network switch slots and a chassis management module slot. What really defines the platform, though, is its 14 blade slots. Here is a conceptual diagram of the key Supermicro MicroBlade platform components:

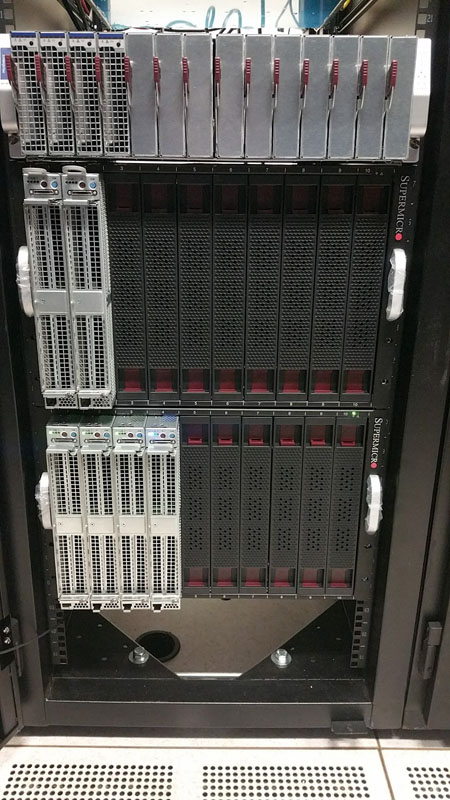

Four of the fourteen blade slots in our unit were filled with two different types of Intel Xeon D-1541 based blades: two storage-focused blades and two compute-focused blades. We cover those blades in detail in Part 4 and Part 5 of this review. The entire front face of the chassis is dedicated to the blades. There are no fancy LCD screens and no superfluous ornamentation, just blades and latches. Here is a view of our system being racked atop the Supermicro SuperBlade GPU systems in our data center lab.

While we have two blade variants based on the 8-core/16-thread Intel Xeon D-1541, there are many more options available. For starters, Supermicro offers Intel Xeon D blades with processors of up to 16 cores, something the HPE Moonshot only recently added. Supermicro has Atom C2000 series blades (e.g. the C2750 and C2550) with up to four nodes per blade, or 56 nodes per 3U chassis. There are multi-node Intel Xeon E3 blades both with and without the Intel Iris Pro GPU. There are even nodes that support dual 120W TDP 18-core/36-thread Intel Xeon E5 V4 processors. The flexibility of the design allows one to mix and match blade types in the same chassis.
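Because blade types can be mixed, node count depends on which blades occupy the 14 slots. This is a minimal sketch of that bookkeeping, using our own test unit as the example: two single-node storage blades and two dual-node compute blades. The `BladeType` class and `summarize` helper are hypothetical names for illustration, not part of any Supermicro tool.

```python
from dataclasses import dataclass

BLADE_SLOTS = 14  # blade slots in the 3U chassis


@dataclass(frozen=True)  # frozen makes instances hashable, so they can be dict keys
class BladeType:
    name: str
    nodes_per_blade: int


def summarize(config: dict[BladeType, int], slots: int = BLADE_SLOTS) -> tuple[int, int, int]:
    """Return (blades used, total nodes, free slots) for a mixed chassis."""
    blades = sum(config.values())
    if blades > slots:
        raise ValueError(f"{blades} blades do not fit in {slots} slots")
    nodes = sum(bt.nodes_per_blade * count for bt, count in config.items())
    return blades, nodes, slots - blades


storage = BladeType("Xeon D-1541 storage", nodes_per_blade=1)
compute = BladeType("Xeon D-1541 dual-node compute", nodes_per_blade=2)
test_config = {storage: 2, compute: 2}
print(summarize(test_config))  # (4, 6, 10)
```

Filling the ten free slots with more dual-node compute blades would bring the chassis to 26 nodes under this model.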

Moving to the rear of the unit, you can see the four PSUs. Each PSU is a 2000W unit with integrated fans. These combined PSU and fan units provide power and cooling redundancy and can be upgraded to higher-power units.
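We did not dig into which redundancy mode the chassis is configured for, so the sketch below simply shows how usable capacity changes under two common assumptions: N+1 (survive one PSU failure) and N+N (survive losing half the supplies). It treats every PSU as delivering its full 2000W rating and ignores any derating by input voltage.

```python
PSU_WATTS = 2000  # per-PSU rating from the text
PSU_COUNT = 4     # PSUs installed in our chassis


def usable_watts(psu_count: int, psu_watts: int, redundant: int) -> int:
    """Capacity that remains available after losing `redundant` supplies."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be between 0 and psu_count - 1")
    return (psu_count - redundant) * psu_watts


print(usable_watts(PSU_COUNT, PSU_WATTS, redundant=1))  # N+1: 6000
print(usable_watts(PSU_COUNT, PSU_WATTS, redundant=2))  # N+N: 4000
```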

There are also rear slots for one chassis management module (CMM) and either one or two switches. In Part 2 of this review we are going to cover management functionality. Our test system came with an Intel-based 10GbE switch, which we will look at in Part 3 of this review.

Space and Cabling Savings Example

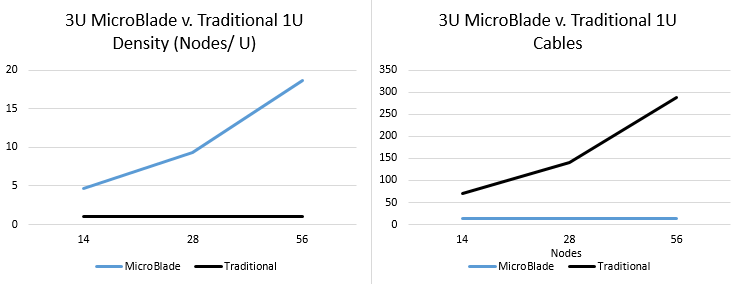

As we set this system up, we saw an immediate benefit: cabling. Four QSFP+ 40GbE cables for our single switch, one out-of-band management Ethernet cable and four power supply cables were all we needed to get the system up and running. With these nine cables we could easily have serviced 28 Xeon D nodes, had we had that many nodes on hand. Adding a second switch for twice the uplink bandwidth or for redundancy would only require four more QSFP+ cables, for 13 in total. Compare this to 28 discrete nodes, each with redundant power, redundant networking and out-of-band management cables, plus two switches adding still more cables, and the picture is clear: if you want high density in a rack and easy cabling, you need a solution like the MicroBlade.
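Here is the cable tally from the paragraph above as a quick sketch. For the discrete-server case we assume five cables per node (two power, two network, one out-of-band management) and deliberately leave out the switches' own power and uplink cables, so that figure is a lower bound. Function names are ours, for illustration only.

```python
def microblade_cables(switches: int, uplinks_per_switch: int = 4,
                      psus: int = 4, mgmt: int = 1) -> int:
    """Cables for one chassis: switch uplinks + CMM management + PSU cords."""
    return switches * uplinks_per_switch + mgmt + psus


def standalone_cables(nodes: int, power: int = 2, network: int = 2, oob: int = 1) -> int:
    """Per-node cables for discrete servers; switch-side cabling is not counted."""
    return nodes * (power + network + oob)


one_switch = microblade_cables(switches=1)  # 4 QSFP+ + 1 management + 4 power = 9
two_switch = microblade_cables(switches=2)  # 8 QSFP+ + 1 management + 4 power = 13
discrete = standalone_cables(nodes=28)      # 28 nodes x 5 cables = 140
print(one_switch, two_switch, discrete)
```

Even with this conservative count, 28 discrete nodes need more than ten times the cabling of a single-switch chassis.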

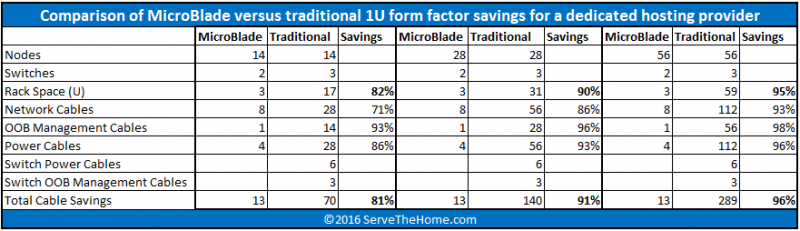

Last November, well before this review was set in motion, I was working with a web hosting company that was looking at Atom C2550 class servers for dedicated hosting. The owners’ plan was to purchase low-cost consumer-grade systems and use inexpensive 1U chassis. As we discussed the project, I made a spreadsheet to estimate the number of cables it would take, and the total was staggering. We then compared this to the Supermicro MicroBlade, and it became clear why so many hosting companies use these systems.

At 56 nodes, the space and cabling savings were 95% to 96%. Here are the results plotted graphically once we converted the space savings to density:

Once we arrived at this view, the hosting provider quickly realized that the economics of low-density 1U dedicated server nodes simply did not work. All four of the data centers we now use for colocation also serve dedicated hosting providers, and in all four we see many MicroBlade chassis, likely due to these savings. The Intel Xeon D nodes we are testing in this review would reach either the 14-node case (single-node blades) or the 28-node case (dual-node blades) in terms of density and cabling savings.
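The space side of that comparison is easy to reproduce. This sketch assumes a baseline of one node per 1U server versus a single 3U MicroBlade chassis, and covers the 14, 28 and 56 node cases. It models rack space only; the cabling half of the savings depends on per-node cable counts that are not broken out here.

```python
CHASSIS_U = 3  # MicroBlade chassis height in rack units


def space_savings(nodes: int, u_per_node: float = 1.0, chassis_u: float = CHASSIS_U) -> float:
    """Fraction of rack space saved versus one node per 1U server."""
    return 1.0 - chassis_u / (nodes * u_per_node)


def nodes_per_u(nodes: int, chassis_u: float = CHASSIS_U) -> float:
    """Density expressed as nodes per rack unit."""
    return nodes / chassis_u


for n in (14, 28, 56):
    print(f"{n} nodes: {space_savings(n):.1%} space saved, {nodes_per_u(n):.1f} nodes/U")
```

This prints 78.6%, 89.3% and 94.6% space saved for 14, 28 and 56 nodes, with 4.7, 9.3 and 18.7 nodes/U respectively. The 56-node result lines up with the roughly 95% figure above.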

Test Configuration

Here is the test configuration we had access to, with a total of four blades and six nodes. All nodes were Intel Xeon D nodes.

- Chassis: Supermicro MicroBlade 3U with 4x 2000W power supplies and single CMM

- Storage Blades (2x, one node each): Intel Xeon D-1541, 64GB DDR4-2400, 4x Intel DC S3500 480GB SSDs

- Compute Blades (2x): two nodes per blade, each with Intel Xeon D-1541, 64GB DDR4-2400 and 1x Intel DC S3500

- Switch: MBM-XEM-001 (Intel-based 10GbE)

Hopefully in the future we can try some of the other node types, such as Xeon E3 V5, Xeon E5 V4 and Atom C2000. Even so, this configuration gave us a good sense of how the system performs.

Power Consumption

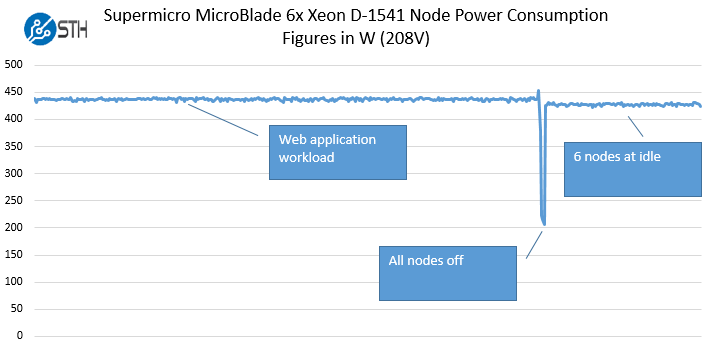

We are not going to spend too much time on power consumption. With these types of systems, shared power and cooling is a major benefit. In our particular system, with only four blades and six low-power nodes, the switch dominates power consumption.

As you can see, on our 208V circuit the four-blade, six-node, single-switch MicroBlade platform uses very little power. Even with the power supplies well below their target loads, idle power consumption of the setup is near 75W per node, including SSDs and networking. We would expect this per-node figure to fall as the number of nodes increases, since the switch and chassis overhead is shared across more nodes.
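To illustrate why per-node power should fall as the chassis fills, here is a simple amortization model. The roughly 450W total comes from the ~75W per node across six nodes above, but the split between shared overhead (switch, CMM, fans) and per-node draw is a hypothetical assumption we chose for illustration, not a measurement. The model also ignores PSU efficiency changing with load.

```python
def per_node_watts(shared_w: float, node_w: float, nodes: int) -> float:
    """Average wall power per node when shared_w is split across all nodes."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return (shared_w + node_w * nodes) / nodes


measured_total_w = 75 * 6                   # ~75W/node x 6 nodes, from the text
shared_w = 150.0                            # HYPOTHETICAL shared overhead (switch, CMM, fans)
node_w = (measured_total_w - shared_w) / 6  # 50W per node under that assumption

for n in (6, 14, 28):
    print(n, round(per_node_watts(shared_w, node_w, n), 1))
```

Under these assumed numbers the average drops from 75.0W at six nodes to about 60.7W at 14 and 55.4W at 28. The exact values depend entirely on the assumed split, but the downward trend holds for any non-zero shared overhead.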

Competitive View

In terms of density, the comparison to the HPE Moonshot is an interesting one. We have a 4.3U HPE Moonshot that can handle up to 180 very low-end nodes. If your baseline is an Intel Atom C2750 with a 2.5″ disk and a boot disk, then both the HPE Moonshot and the Supermicro MicroBlade can support it. We have HPE ProLiant m300 nodes in the lab, which means a 4.3U HPE Moonshot handles 45 such nodes, while the 3U Supermicro MicroBlade would handle 56. The HPE ProLiant m300 supports up to 32GB across 4x SODIMMs per node, versus 32GB in 2x SODIMMs per node on the MicroBlade. Supermicro also has an Intel Atom C2550 variant for lower-cost, lower-power dedicated server and web nodes. That is just one example, and each platform has its own unique capabilities. For example, the HPE Moonshot has low-end AMD nodes for VDI, while the MicroBlade platform can take dual Xeon E5 V4 blades.
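Normalizing the Atom C2750 comparison by rack height makes the density gap explicit. This sketch uses the 45-node/4.3U and 56-node/3U figures above and assumes a 42U rack filled with nothing but these chassis, ignoring top-of-rack switches and power or cooling limits. The `Platform` class is a hypothetical helper for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    height_u: float
    nodes: int  # Atom C2750-class nodes per chassis

    def nodes_per_u(self) -> float:
        return self.nodes / self.height_u

    def nodes_per_rack(self, rack_u: int = 42) -> int:
        """Nodes if the rack held only these chassis (whole chassis only)."""
        return math.floor(rack_u / self.height_u) * self.nodes


moonshot = Platform("HPE Moonshot (m300)", 4.3, 45)
microblade = Platform("Supermicro MicroBlade 3U", 3.0, 56)

for p in (moonshot, microblade):
    print(f"{p.name}: {p.nodes_per_u():.1f} nodes/U, {p.nodes_per_rack()} nodes per 42U")
```

This works out to about 10.5 nodes/U (405 nodes in nine chassis) for the Moonshot versus 18.7 nodes/U (784 nodes in 14 chassis) for the MicroBlade, for this particular node type.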

Because the Supermicro MicroBlade 3U system is quite complex, we have split this review into multiple parts. See the other portions of the review here:

- Supermicro MicroBlade Review Part 1: Overview

- Supermicro MicroBlade Review Part 2: Management

- Supermicro MicroBlade Review Part 3: Networking

- Supermicro MicroBlade Review Part 4: Dual-node Xeon D compute blade

- Supermicro MicroBlade Review Part 5: Xeon D storage blade

Check out the other parts of this review to explore more aspects of the system.