Supermicro MegaDC ARS-211M-NR Topology

Unlike most Supermicro servers, this one does not yet have a published manual with a block diagram. Instead, here is a quick look at the system topology.

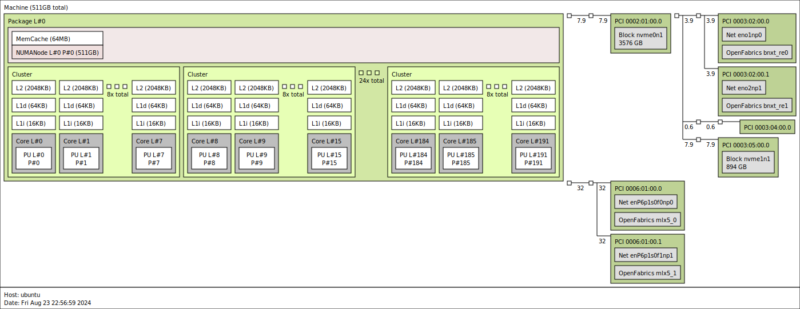

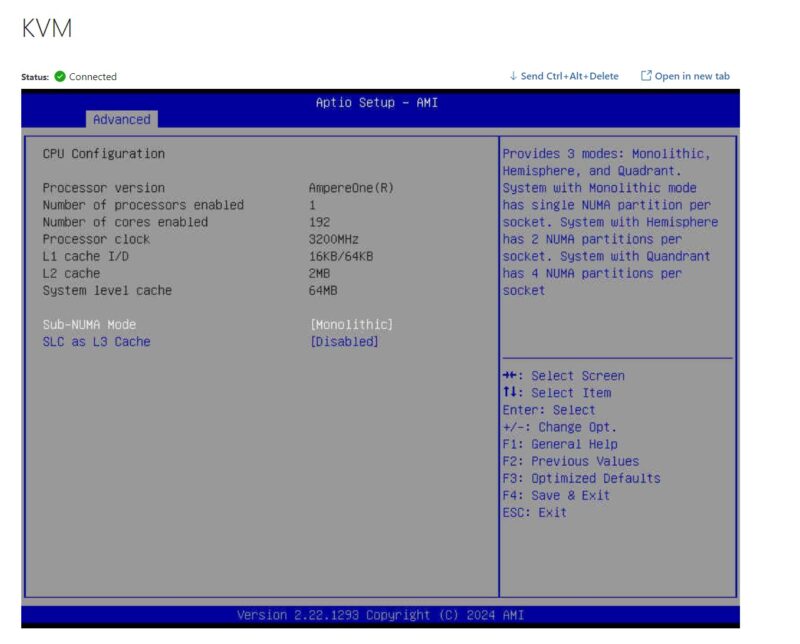

Here we can see the 192-core AmpereOne, made up of 24x 8-core clusters, and its relatively small 64MB L3 cache. The design keeps the caches close to the cores, which suits cloud VMs. It may also not be a coincidence that most cloud VMs have 8 vCPUs or fewer, and that AmpereOne is built from 8-core clusters.
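To make the cluster math concrete, here is a small sketch using only the figures from the topology view (24 clusters, 8 cores each, 64MB L3). It assumes one vCPU per physical core, since AmpereOne cores are single-threaded, and computes the minimum number of clusters a VM of a given size would span. The helper name `clusters_for_vm` is hypothetical, and real placement depends on the hypervisor's scheduler.

```python
# Topology figures from the review's topology view.
CLUSTERS = 24           # number of 8-core clusters
CORES_PER_CLUSTER = 8
L3_MB = 64              # shared L3 cache in MB

total_cores = CLUSTERS * CORES_PER_CLUSTER      # 24 * 8 = 192
l3_per_core_mb = L3_MB / total_cores            # about 0.33 MB of L3 per core
l3_per_cluster_mb = L3_MB / CLUSTERS            # about 2.67 MB of L3 per cluster


def clusters_for_vm(vcpus: int, cores_per_cluster: int = CORES_PER_CLUSTER) -> int:
    """Hypothetical helper: minimum clusters a VM spans, assuming 1 vCPU = 1 core."""
    return -(-vcpus // cores_per_cluster)  # ceiling division


print(total_cores, round(l3_per_core_mb, 3), round(l3_per_cluster_mb, 2))
for vcpus in (2, 4, 8, 16):
    print(f"{vcpus} vCPUs -> {clusters_for_vm(vcpus)} cluster(s)")
```

Under these assumptions, any VM of 8 vCPUs or fewer can fit inside a single cluster, while a 16 vCPU VM must span at least two.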

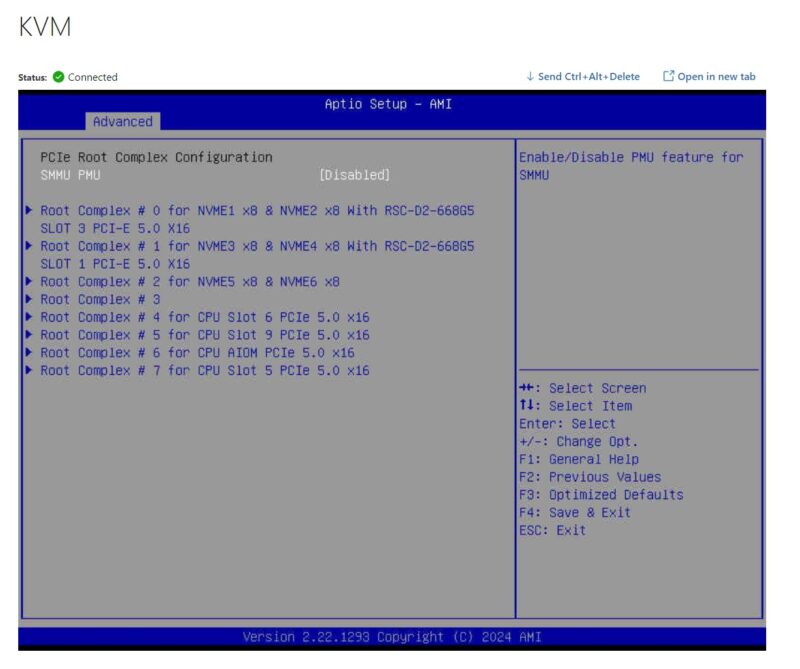

On the PCIe side, this is a PCH-less design, so all of the PCIe lanes terminate at the CPU. Since there is no official block diagram yet, one item we want to call out is the NC-SI channel between the baseboard management controller and the Broadcom BCM57414 25GbE NIC, which lets the BMC share the NIC for those who do not want to use the dedicated IPMI port.

Next, let us get to management.

Supermicro MegaDC ARS-211M-NR Management

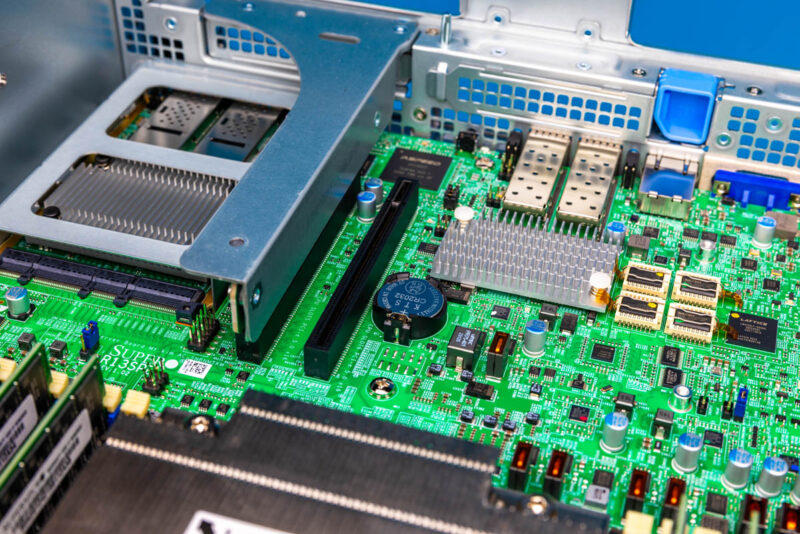

Onboard the server, we have an industry-standard ASPEED AST2600 BMC.

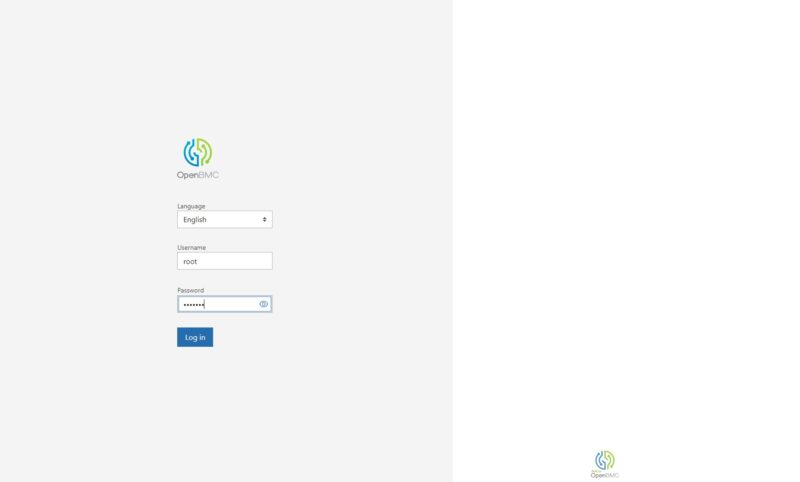

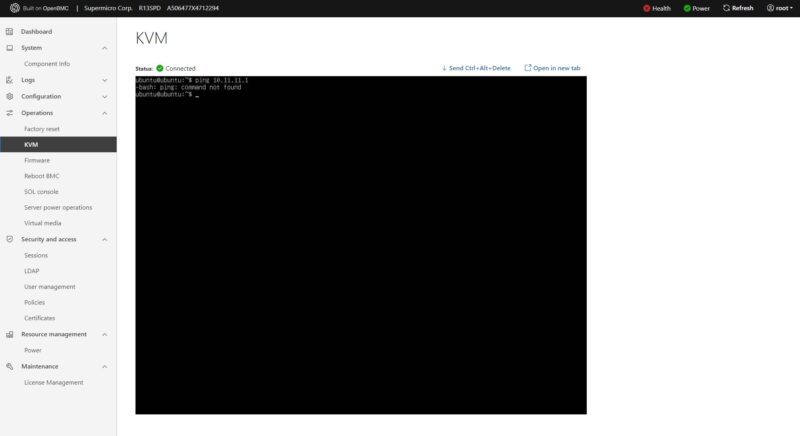

This server does not use Supermicro’s standard IPMI interface. Instead, it uses OpenBMC, which we are seeing more often across vendors, and we really like it.

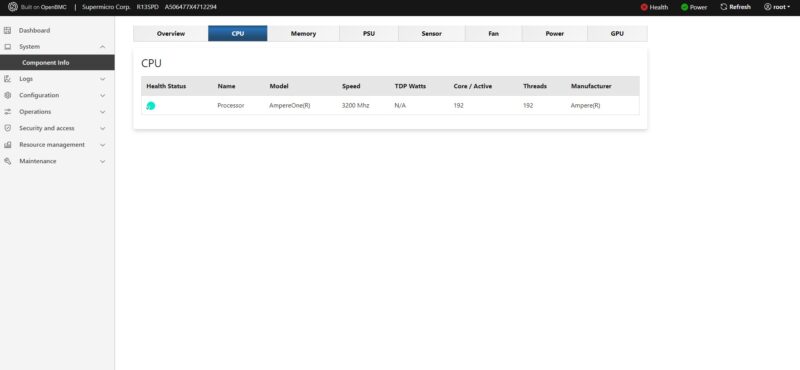

Once logged in, we can see our 192-core AmpereOne CPU.

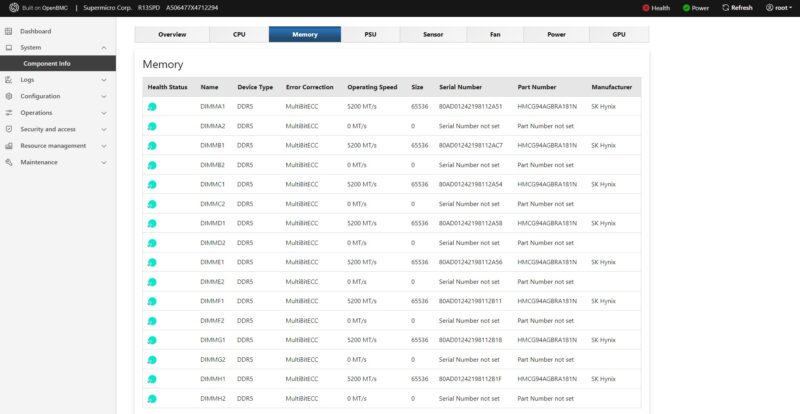

We can also see that our 8x 64GB DDR5-5600 memory array is actually running at DDR5-5200 speeds.
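To put the DDR5-5200 versus DDR5-5600 difference in perspective, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes one DIMM per channel across eight DDR5 channels and a 64-bit (8-byte) data path per channel, ignoring ECC bits; these assumptions are ours, not from the system documentation. Real-world bandwidth will be lower than these peak figures.

```python
# Assumptions: 8 channels, 1 DIMM per channel, 8 data bytes per transfer (ECC excluded).
CHANNELS = 8
BYTES_PER_TRANSFER = 8
DIMM_GB = 64


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS) -> float:
    """Peak bandwidth in GB/s (1e9 bytes/s): transfers/s * bytes per transfer * channels."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


rated = peak_bandwidth_gbs(5600)    # DIMM rating
actual = peak_bandwidth_gbs(5200)   # speed reported by the BMC
print(f"capacity: {CHANNELS * DIMM_GB} GB")
print(f"DDR5-5600: {rated:.1f} GB/s, DDR5-5200: {actual:.1f} GB/s")
print(f"theoretical loss: {(1 - actual / rated) * 100:.1f}%")
```

Under these assumptions, the 512GB array gives up roughly 7% of its theoretical peak bandwidth by running at DDR5-5200.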

OpenBMC has serial-over-LAN as well as HTML5 iKVM functionality with remote media support.
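For those who prefer a terminal over the web console, serial-over-LAN can typically be reached with `ipmitool`. The sketch below only builds the command line; it assumes IPMI-over-LAN (the `lanplus` interface) is enabled on this OpenBMC build, which we did not verify. The BMC address and username are hypothetical placeholders, and `-E` tells `ipmitool` to read the password from the `IPMI_PASSWORD` environment variable rather than the command line.

```python
import shlex


def sol_command(bmc_host: str, user: str) -> list[str]:
    """Build an ipmitool serial-over-LAN command (assumes lanplus is enabled on the BMC)."""
    return [
        "ipmitool",
        "-I", "lanplus",   # IPMI v2.0 RMCP+ LAN interface
        "-H", bmc_host,    # BMC address
        "-U", user,        # BMC username
        "-E",              # read password from the IPMI_PASSWORD environment variable
        "sol", "activate",
    ]


# Hypothetical BMC address (documentation range) and username.
cmd = sol_command("192.0.2.10", "admin")
print(shlex.join(cmd))
# To attach for real: export IPMI_PASSWORD, then subprocess.run(cmd, check=True)
```

Keeping the password in an environment variable avoids leaving it in shell history or the process list.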

The BIOS will look very standard if you are accustomed to Supermicro's AMI Aptio BIOS. Some settings may be in slightly different spots than on Intel Xeon and AMD EPYC servers, but most users will feel right at home here.

Here we can see the eight PCIe Gen5 root complexes and their stock configuration in this system. Remember that, with the various cables and risers, these lanes can be assigned elsewhere.
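As a rough sense of scale, here is a sketch of the raw link bandwidth per root complex. It assumes AmpereOne's 128 PCIe Gen5 lanes are split evenly across the eight root complexes (x16 each); the actual stock bifurcation shown in the BIOS may differ. The figures use Gen5's 32 GT/s per lane with 128b/130b encoding and ignore packet-level protocol overhead, so usable throughput is lower.

```python
# Assumption: 128 Gen5 lanes split evenly across 8 root complexes.
TOTAL_LANES = 128
ROOT_COMPLEXES = 8
GEN5_GT_PER_S = 32        # giga-transfers per second per lane
ENCODING = 128 / 130      # 128b/130b line encoding (PCIe Gen3 and later)

lanes_per_rc = TOTAL_LANES // ROOT_COMPLEXES   # 16


def link_bandwidth_gbs(lanes: int) -> float:
    """Raw per-direction bandwidth in GB/s after line encoding, before protocol overhead."""
    return lanes * GEN5_GT_PER_S * ENCODING / 8   # bits to bytes


print(f"x{lanes_per_rc} per root complex: {link_bandwidth_gbs(lanes_per_rc):.1f} GB/s")
print(f"all {TOTAL_LANES} lanes: {link_bandwidth_gbs(TOTAL_LANES):.1f} GB/s")
```

Because every lane terminates at the CPU in this PCH-less design, re-cabling a root complex moves this bandwidth to a different slot or riser rather than through an intermediate chipset.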

Also, since a lot of folks assume that Arm servers are very different from x86 servers, here is the Supermicro POST screen.

Since we first reviewed a Cavium ThunderX server in 2016, the Arm ecosystem has made steady strides toward making Arm servers feel like their x86 counterparts. They are now very close, and a lot of ground has been covered to get here.

Next, let us get to the performance.
