Supermicro AS-5014A-TT Power Consumption

The Supermicro AS-5014A-TT comes with a large 80Plus Platinum power supply. Depending on the input voltage, it can supply 1.2kW to 2kW of power.

In terms of power consumption, the system generally ran under 500W when we had only high-speed NICs/DPUs installed and no GPUs. That is an interesting configuration: if one only needs CPU compute, the basic graphics built into the onboard ASPEED BMC mean one can skip the expense and power consumption of a PCIe GPU. When we added the NVIDIA RTX A6000 and another BlueField-2 100GbE DPU, power consumption approached 1kW.

Given all of the expansion possibilities in this platform, we can easily see some users building configurations in the 1.5-2kW range that actually use the PSU's maximum headroom, but our test configurations did not get there.
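To put the measured figures in context against the PSU rating, here is a quick back-of-the-envelope calculation. It is a minimal sketch: the draw values are the rounded figures quoted above (under 500W, close to 1kW), not precise measurements, and pairing the 1.2kW rating with lower-voltage input and the 2kW rating with higher-voltage input is our assumption.

```python
# Rated PSU output from the text: 1.2 kW or 2 kW depending on input voltage.
# Assumption: the lower figure corresponds to lower-voltage input.
PSU_RATINGS_W = {"lower-voltage input": 1200, "higher-voltage input": 2000}

# Approximate system draw quoted in the text (rounded, upper-bound figures).
OBSERVED_W = {
    "NICs/DPUs only, no GPU": 500,
    "RTX A6000 + extra BlueField-2": 1000,
}


def load_fraction(draw_w: float, rating_w: float) -> float:
    """Fraction of the PSU's rated output used by a given system draw."""
    return draw_w / rating_w


def headroom_w(draw_w: float, rating_w: float) -> float:
    """Remaining rated output (in watts) above a given system draw."""
    return rating_w - draw_w


if __name__ == "__main__":
    for config, draw in OBSERVED_W.items():
        for supply, rating in PSU_RATINGS_W.items():
            print(
                f"{config} on {supply}: "
                f"{load_fraction(draw, rating):.0%} load, "
                f"{headroom_w(draw, rating):.0f} W headroom"
            )
```

On these rounded numbers, the GPU configuration uses roughly 83% of the lower rating but only half of the higher one, so builds in the 1.5-2kW range would rely on the higher-voltage rating.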

Final Words

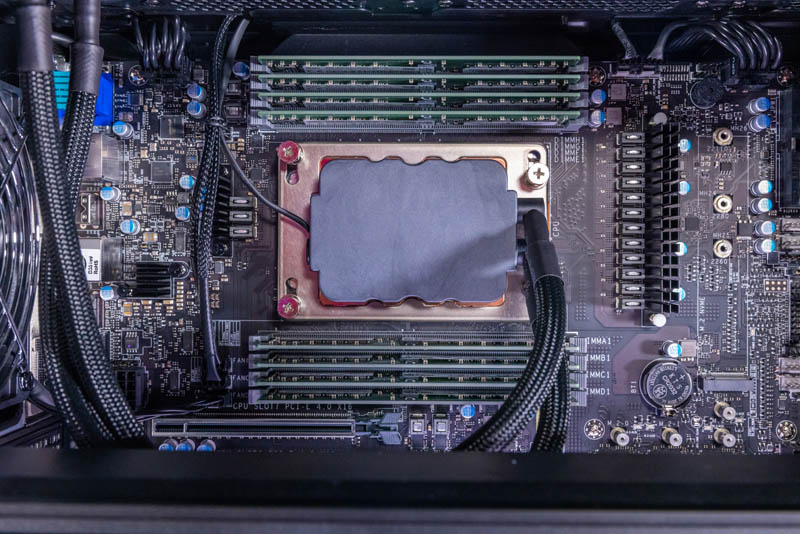

This was a really interesting system. At STH, we have reviewed Threadripper Pro platforms from four different vendors. In a head-to-head with the Lenovo ThinkStation P620, it became very clear that Supermicro outclasses Lenovo on expandability and onboard features. Lenovo has the smaller chassis of the two, but that and the on-site warranty were the only reasons we might recommend the Lenovo P620 over the Supermicro AS-5014A-TT. For our money, it is not even close: the Supermicro AS-5014A-TT is the better platform, doing everything the Lenovo P620 does, just better.

Supermicro also does not use AMD Platform Secure Boot (PSB) to lock its CPUs. Lenovo vendor-locks the AMD CPUs in its systems, including the P620, which limits reusing those processors in other systems down the road. Even using both systems out of the box, Supermicro's liquid cooling allowed the Threadripper Pro 5995WX to sustain performance better over time than Lenovo's solution.

Many times when we review systems, they are either reviewed in isolation or are very similar to what they compete against. This is one of the starkest examples in recent memory where we could do a head-to-head and find a clear winner: the AS-5014A-TT. In future versions, we would like to see more emphasis on tool-less service, but this system was still relatively easy to work on. Overall, it gets our recommendation in the Threadripper Pro workstation category.

Nice. Good PCIe slot layout; it accommodates two GPUs without blocking other slots. Some people (me, for example) can never have too many GPUs in a machine, so it'd be great if a revision of the case had off-board physical mounting for a couple more GPUs connected with riser cables.

Nevertheless, compared to, say, certain other manufacturers' two-slot TR Pro offerings, this is brilliant.

That’s a sharp looking workstation case.

Would like to see real chess benchmark nodes/sec numbers for these!

Nice reviews as always.

Yes, nice workstation; I just bought one. The only thing I really miss (so far) is PMBus support for the power supply; it doesn't have it, so there is no power usage monitoring via IPMI, which I do miss.

Also, I had to move an NVIDIA 3060 consumer-grade card around the PCIe slots until I found the one where it stopped acting "flaky"; of course, this could be the card itself.

One last gripe: the Supermicro store does NOT carry the rackmount conversion kit for this; you have to purchase it (if you can find it) from other outlets.

Overall, I do give it a thumbs up :-)

These machines are onerous, overpriced anachronisms from the get-go due to pricing and marketing decisions, and they will only be around for a little while because of these factors. The future of HEDT is in a lull, but the lull won't last long: think Arm and Tachyum options plus clustering. You can get many machines for the same price in DIY mode and surpass the overall performance. This is not innovative or clever but more like unclear. Final answer.

@opensourceservers Most people, or rather companies, running these have production workloads where expert salaries and software licences are the main cost factors. Even if such a system is replaced every ~2-3 years, it will be <= 10% of the total costs related to that employee. What you are talking about with Arm clusters is a totally different scenario.

These would probably be monster scale-up, mixed-usage database servers. I'd love to see a set of SQL benchmarks (but the MS SQL Enterprise license cost of such a system would be scary). If your workload doesn't shard and parallelize well, a classic scale-up SQL Server system can perform much better at a lower cost, and these can have huge amounts of RAM and extremely fast direct-attached storage, with even more storage available over NVMe-oF via DPUs.

@David Artz Can you point me towards a suitable rackmount kit vendor, please?

Not a fan of this new SuperChassis GS7A-2000B with its LED lighting bar.

I much prefer the older designs: CSE-743AC-1K26B-SQ or CSE-745BAC-R1K23B-SQ, CSE-745BAC-R1K23B, or the 11-slot CSE-747BTQ-R2K04B, all in a 4U form factor.