Power Consumption

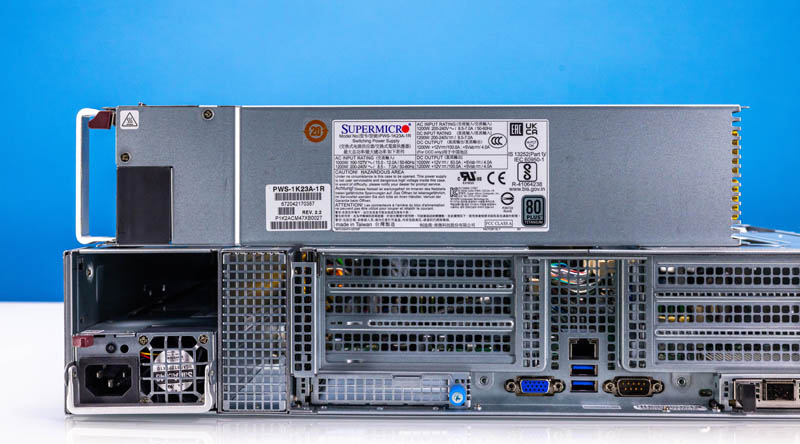

In the Supermicro AS-2015CS-TNR, we get two 1.2kW 80Plus Titanium power supplies. These are high-efficiency power supplies and are standard in the server.

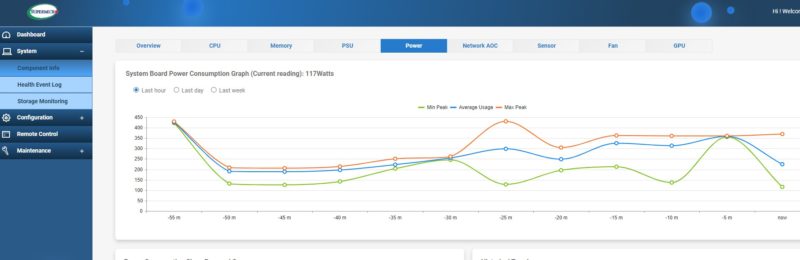

The power consumption was fascinating. Here is a quick snapshot from the onboard IPMI interface. In this snapshot, we were at 117W on the IPMI and 120W at the PDU. The peak for the system was under 500W with 84 cores. Even with higher-TDP SP5 parts, we only saw up to around 600W maximum, and the majority of the time the server ran at about half that.

Frankly, with the AMD EPYC 9634, it was a bit shocking to see how low the power consumption was compared to many of the servers we have tested recently.

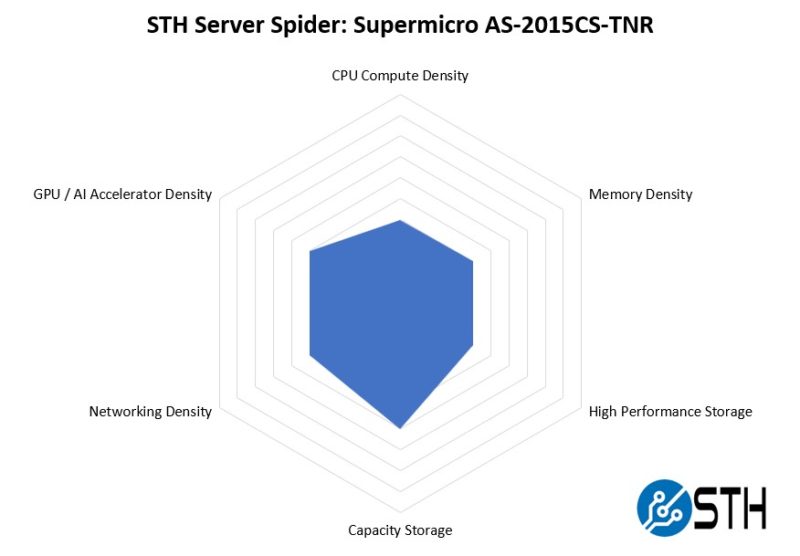

STH Server Spider: Supermicro AS-2015CS-TNR

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This is a very well-rounded server. The goal with a single socket 2U server is not to be the absolute densest solution. Instead, it is to make efficient use of rack power budgets that are often static. Many modern racks cannot handle 1kW/U, so systems like these, mostly operating in the 60-250W/U range, can make a lot of sense. Supermicro’s focus here was putting together a well-rounded platform for those types of applications.
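The 60-250W/U range appears consistent with the roughly 120W and under-500W readings above spread over a 2U chassis. The sketch below works through that arithmetic. The 10kW rack budget and 42U of usable space are hypothetical values chosen for illustration, not figures from this review, and the function names are our own.

```python
def watts_per_rack_unit(system_watts: float, height_u: int) -> float:
    """Power density of one chassis, in watts per rack unit."""
    if height_u <= 0:
        raise ValueError("height_u must be positive")
    return system_watts / height_u


def servers_per_rack(rack_budget_watts: float, server_peak_watts: float,
                     usable_u: int, height_u: int) -> int:
    """How many servers fit, limited by whichever runs out first: power or space."""
    by_power = int(rack_budget_watts // server_peak_watts)
    by_space = usable_u // height_u
    return min(by_power, by_space)


# Readings from the review: about 120W at the PDU, peak under 500W, 2U chassis.
low_density = watts_per_rack_unit(120, 2)
high_density = watts_per_rack_unit(500, 2)

# Hypothetical rack: 10kW power budget, 42U usable (assumptions, not from the review).
fit = servers_per_rack(10_000, 500, 42, 2)

print(f"{low_density:.0f}-{high_density:.0f} W/U, {fit} servers in the example rack")
```

In this example rack, power rather than space is the limit, which is the situation the paragraph above describes.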

Final Words

While the biggest and fastest servers are always interesting, the Supermicro CloudDC line is one that a lot of STH readers might think of switching to. While the server itself is flexible in its configuration, inside we saw a more cloud-like approach. Connectors are more standardized, rather than the bevy of proprietary connectors many OEMs use, and there is a focus on quick and easy service.

The 84-core, 384GB configuration was fun, but it is also an important one. If you still have Intel Xeon E5 V4 servers in use, you can think of this as four times the cores per socket and twice the performance per core. 8:1 socket consolidation ratios for many in the high-volume midrange markets would be reasonable.
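The 8:1 figure follows from multiplying the two ratios in the paragraph above. Here is a minimal sketch of that arithmetic. The fleet of 16 dual-socket servers is a hypothetical example, and the calculation ignores memory, I/O, and licensing constraints that would matter in a real migration.

```python
import math


def socket_consolidation_ratio(core_ratio: float, per_core_perf_ratio: float) -> float:
    """Old sockets that one new socket can replace, by raw compute only."""
    return core_ratio * per_core_perf_ratio


def new_sockets_needed(old_sockets: int, ratio: float) -> int:
    """Round up, since a fraction of a socket cannot be deployed."""
    return math.ceil(old_sockets / ratio)


# From the review: roughly 4x the cores per socket and 2x the performance per core.
ratio = socket_consolidation_ratio(4, 2)

# Hypothetical fleet: 16 dual-socket Xeon E5 V4 servers (an assumption for illustration).
old_sockets = 16 * 2
print(f"{ratio}:1 ratio, {old_sockets} old sockets -> "
      f"{new_sockets_needed(old_sockets, ratio)} single-socket servers")
```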

Overall, the Supermicro CloudDC single socket AMD EPYC solution performed very well with Genoa as an all-around platform. Stay tuned to STH for Bergamo and Genoa-X.

very nice review, but

nvme with pci-e v3 x2 in 2023, in a server with cpu supporting pci-e v5?

Why is price never mentioned in these articles?

Mentioning price would be useful, but frankly for this type of unit there really isn’t “a” price. You talk to your supplier and spec out the machine you want, and they give you a quote. It’s higher than you want so you ask a different supplier who gives you a different quote. After a couple rounds of back and forth you end up buying from one of them, for about half what the “asking” price would otherwise have been.

Or more, or less, depending on how large of a customer you are.

@erik why would you waste PCIe 5 lanes on boot drives? That is what that M.2 is useful for.

The boot drives are off the PCH lanes. They’re on slow lanes because it’s 2023 and nobody wants to waste Gen5 lanes on boot drives.

@BadCo and @GregUT… I don’t need a PCIe v5 boot drive. That is indeed a waste.

But at least PCIe v3 x4 or PCIe v4 x4.

@erik You have to think of the EPYC CPU design to understand what you’re working with. There are (8) PCIe 5.0 x16 controllers for a total of 128 high speed PCIe lanes. Those all get routed to high speed stuff. Typically a few expansion slots and an OCP NIC or two, and then the rest go to cable connectors to route to drive bays. Whether or not you’re using all of that, the board designer has to route them that way to not waste any high speed I/O.

In addition to the 128 fast PCIe lanes, SP5 processors also have 8 slow “bonus” PCIe lanes which are limited to PCIe 3.0 speed. They can’t do PCIe 4.0. And you only get 8 of them. The platform needs a BMC, so you immediately have to use a PCIe lane on the BMC, so now you have 7 PCIe lanes left. You could do one PCIe 3.0 x4 M.2, but then people bitch that you only have one M.2. So instead of one PCIe 3.0 x4 M.2, the design guy puts down two 3.0 x2 M.2 ports instead and you can do some kind of redundancy on your boot drives.

You just run out of PCIe lanes. 136 total PCIe lanes seems like a lot until you sit down and try to lay out a board that you have to reuse in as many different servers as possible, and you realize that boot drive performance doesn’t matter.
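The lane budget described above can be sketched as simple bookkeeping. The lane counts come from the comment itself. The bandwidth helper uses the standard PCIe 3.0 and later signaling rates with 128b/130b encoding, so it gives a theoretical link rate before protocol overhead, not a measured drive speed. Function and constant names are illustrative.

```python
FAST_LANES = 8 * 16   # eight PCIe 5.0 x16 controllers
BONUS_LANES = 8       # slow lanes limited to PCIe 3.0
BMC_LANES = 1         # one bonus lane goes to the BMC

# Per-lane transfer rate in GT/s for generations that use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def remaining_lanes(available: int, link_widths: list[int]) -> int:
    """Lanes left over after assigning the requested links."""
    used = sum(link_widths)
    if used > available:
        raise ValueError(f"need {used} lanes but only {available} available")
    return available - used


def link_gigabytes_per_s(gen: int, width: int) -> float:
    """Theoretical one-direction link rate in GB/s after 128b/130b encoding."""
    return GT_PER_S[gen] * (128 / 130) * width / 8


total = FAST_LANES + BONUS_LANES
bonus_after_bmc = BONUS_LANES - BMC_LANES

# Either layout uses four of the seven bonus lanes.
one_x4 = remaining_lanes(bonus_after_bmc, [4])
two_x2 = remaining_lanes(bonus_after_bmc, [2, 2])

print(f"total lanes: {total}, bonus lanes after BMC: {bonus_after_bmc}")
print(f"one x4 leaves {one_x4}, two x2 leaves {two_x2}")
print(f"Gen3 x2: {link_gigabytes_per_s(3, 2):.2f} GB/s, "
      f"Gen3 x4: {link_gigabytes_per_s(3, 4):.2f} GB/s")
```

Both layouts consume the same four lanes, so the choice is between one faster M.2 slot and two slower slots that allow a redundant boot pair.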