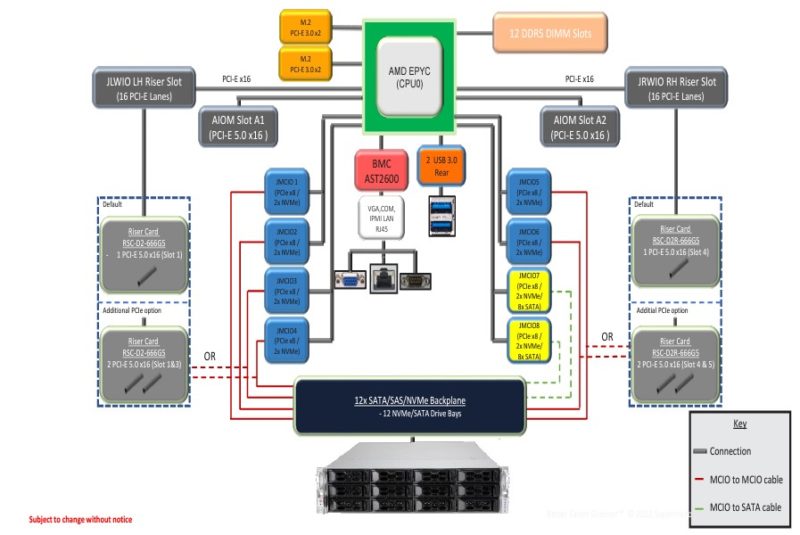

Supermicro AS-2015CS-TNR Topology

Here is the system topology. One major point is that with all of the PCIe slots.

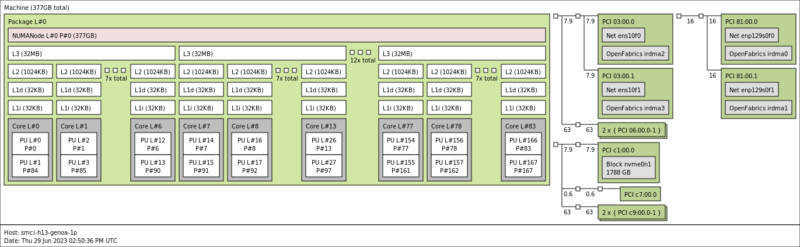

One fun point is that with 84 cores and 384GB of RAM, we see a RAM total that we have not seen since the Intel Xeon Skylake/Cascade Lake era, when this capacity was common on two-socket servers, albeit at much slower speeds and with far fewer cores.

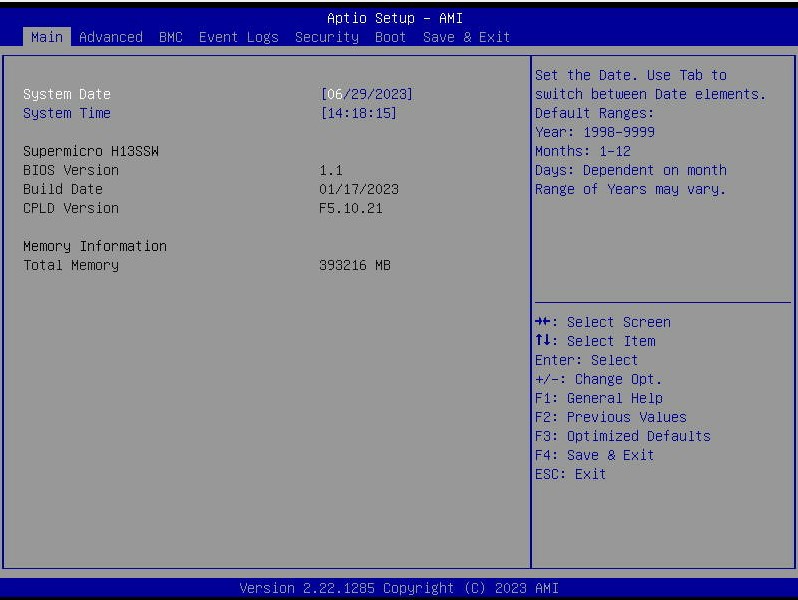

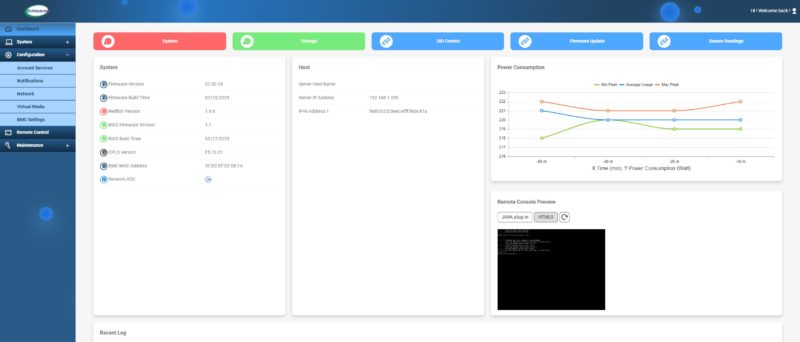

Next, let us get to management.

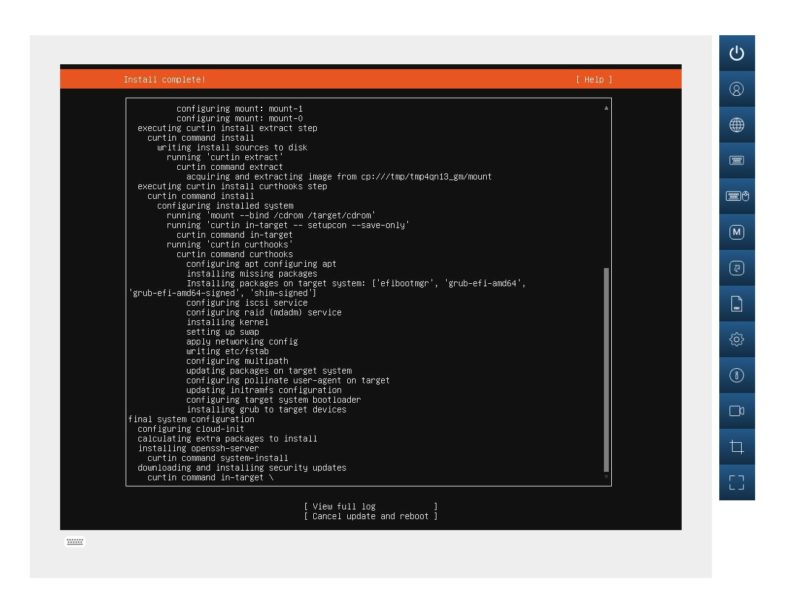

Supermicro AS-2015CS-TNR Management

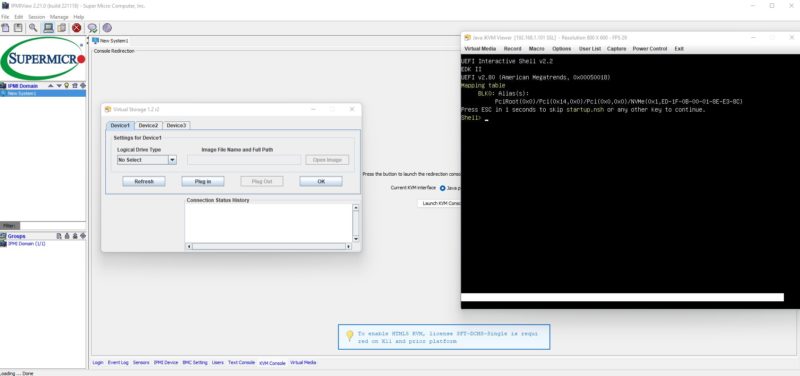

This is one of Supermicro’s H13 generation platforms. As such, it uses the newer ASPEED AST2600 BMC. That means we get an updated Supermicro IPMI management interface (and Redfish API) versus what we saw on older generation platforms.
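For readers who want to script against the BMC rather than click through the web interface, a minimal sketch of talking to the Redfish API could look like the following. The `/redfish/v1/` service root path and the `RedfishVersion` property come from the DMTF Redfish standard. The BMC address, the credentials, and the helper names `build_request` and `fetch_json` are placeholders of ours, and we have not checked which resources this particular firmware exposes.

```python
import base64
import json
import ssl
import urllib.request

# Service root path defined by the DMTF Redfish standard.
REDFISH_ROOT = "/redfish/v1/"


def build_request(bmc_host: str, path: str, user: str, password: str) -> urllib.request.Request:
    """Build an HTTPS GET request with HTTP Basic auth for a Redfish resource."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{bmc_host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


def fetch_json(req: urllib.request.Request, verify_tls: bool = True) -> dict:
    """Send the request and decode the JSON body.

    BMCs often ship with self-signed certificates, so verification can be
    switched off for lab use. Leave it on wherever a trusted cert is installed.
    """
    ctx = ssl.create_default_context()
    if not verify_tls:
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)
```

Usage would be along the lines of `fetch_json(build_request("192.0.2.10", REDFISH_ROOT, "ADMIN", "<password from the front tag>"), verify_tls=False).get("RedfishVersion")`, where `192.0.2.10` is a documentation-range address standing in for the real BMC IP.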

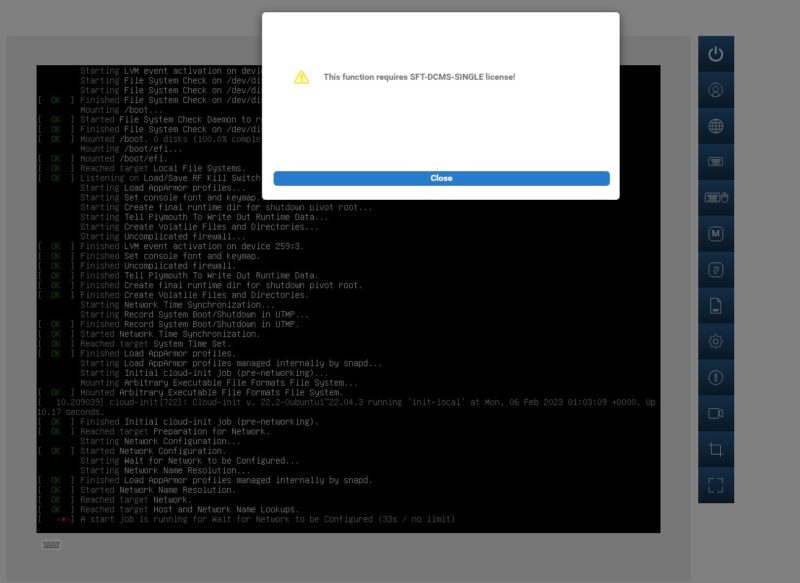

That includes features like HTML5 iKVM functionality that can be used to install OSes and troubleshoot remotely.

One change that we highlighted recently is that with the HTML5 iKVM, one now needs a license to remotely mount media directly. We discuss other ways to mount media in the new era of Supermicro management in How to Add Virtual Media to a Supermicro Server via HTML5 iKVM Web IPMI Interface.

Aside from what is on the motherboard, Supermicro has a number of other tools to help manage its platforms.

The BMC password is on the front tag. You can learn more about why this is required, and why the old ADMIN/ADMIN credentials will not work, in Why Your Favorite Default Passwords Are Changing.

Next, let us get to the performance.

Performance

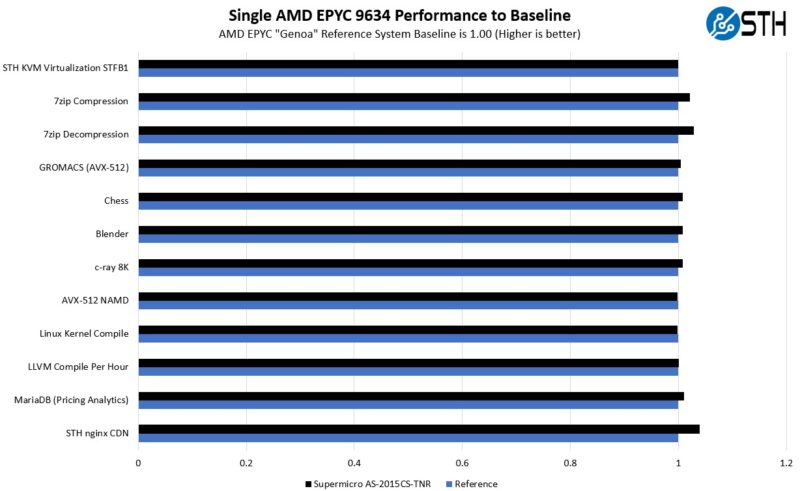

We are publishing this review before being able to show Genoa-X and Bergamo’s performance on a platform like this. Still, we wanted to give a sense of how the system performed compared to Intel’s reference platform. The AMD EPYC 9634 chips have 84 cores, but they are also only 290W default TDP parts that can run in the 240W to 300W range. Here is what we saw:

Here the performance is great. Even with only three fans, we get plenty of cooling for these parts. We also tried higher-end 96-core AMD EPYC 9654 parts in the server and saw just about the same results.

Overall, cooling is not a problem, so performance should be in line with expectations even with higher-end parts.

Next, let us get to the power consumption.

very nice review, but

nvme with pci-e v3 x2 in 2023, in a server with cpu supporting pci-e v5?

Why is price never mentioned in these articles?

Mentioning price would be useful, but frankly for this type of unit there really isn’t “a” price. You talk to your supplier and spec out the machine you want, and they give you a quote. It’s higher than you want so you ask a different supplier who gives you a different quote. After a couple rounds of back and forth you end up buying from one of them, for about half what the “asking” price would otherwise have been.

Or more, or less, depending on how large of a customer you are.

@erik why would you waste PCIe 5 lanes on boot drives? Because that m.2 is useful for.

The boot drives are off the PCH lanes. They’re on slow lanes because it’s 2023 and nobody wants to waste Gen5 lanes on boot drives

@BadCo and @GregUT….i dont need pci-e v5 boot drive. that is indeed waste

but at least pci-e v3 x4 or pci-e v4 x4.

@erik You have to think of the EPYC CPU design to understand what you’re working with. There are (8) PCIe 5.0 x16 controllers for a total of 128 high speed PCIe lanes. Those all get routed to high speed stuff. Typically a few expansion slots and an OCP NIC or two, and then the rest go to cable connectors to route to drive bays. Whether or not you’re using all of that, the board designer has to route them that way to not waste any high speed I/O.

In addition to the 128 fast PCIe lanes, SP5 processors also have 8 slow “bonus” PCIe lanes which are limited to PCIe 3.0 speed. They can’t do PCIe 4.0. And you only get 8 of them. The platform needs a BMC, so you immediately have to use a PCIe lane on the BMC, so now you have 7 PCIe lanes left. You could do one PCIe 3.0 x4 M.2, but then people bitch that you only have one M.2. So instead of one PCIe 3.0 x4 M.2, the design guy puts down two 3.0 x2 M.2 ports instead and you can do some kind of redundancy on your boot drives.

You just run out of PCIe lanes. 136 total PCIe lanes seems like a lot until you sit down and try to lay out a board that you have to reuse in as many different servers as possible and you realize that boot drive performance doesn’t matter.
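The lane math in the comment above can be checked with a few lines of Python. This is only a sketch: the lane counts are taken from the comment, the one-lane BMC and two x2 M.2 allocation is that commenter's description rather than a verified board layout, and the function names are ours. The bandwidth figures are raw link rates (per-lane transfer rate with 128b/130b encoding), so real drive throughput will be somewhat lower.

```python
FAST_CONTROLLERS = 8        # PCIe 5.0 x16 controllers, per the comment
LANES_PER_CONTROLLER = 16
BONUS_LANES = 8             # PCIe 3.0-only lanes, per the comment

# Per-lane transfer rate in GT/s; PCIe 3.0, 4.0 and 5.0 all use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def fast_lanes() -> int:
    """High-speed lanes available from the x16 controllers."""
    return FAST_CONTROLLERS * LANES_PER_CONTROLLER


def bonus_lanes_left(bmc_lanes: int = 1, m2_ports: int = 2, lanes_per_m2: int = 2) -> int:
    """Bonus lanes remaining after the BMC and the M.2 boot slots are wired up."""
    used = bmc_lanes + m2_ports * lanes_per_m2
    if used > BONUS_LANES:
        raise ValueError(f"layout needs {used} bonus lanes, only {BONUS_LANES} exist")
    return BONUS_LANES - used


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction raw link bandwidth in GB/s."""
    return GT_PER_S[gen] * (128 / 130) * lanes / 8


if __name__ == "__main__":
    print("fast lanes:", fast_lanes())
    print("total lanes:", fast_lanes() + BONUS_LANES)
    print("bonus lanes left with 2x M.2 x2:", bonus_lanes_left())
    print("bonus lanes left with 1x M.2 x4:", bonus_lanes_left(m2_ports=1, lanes_per_m2=4))
    for gen, lanes in [(3, 2), (3, 4), (4, 4)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_gbytes_per_s(gen, lanes):.2f} GB/s")
```

Both boot layouts consume the same five bonus lanes, which is the point of the comment: two x2 slots cost nothing extra over one x4 slot, and each x2 link still offers roughly 2 GB/s, which is plenty for a boot drive.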