Supermicro AS-1123US-TR4 Overview

The Supermicro AS-1123US-TR4, as the name suggests, is one of the company’s A+ servers (“AS”). The 1U platform supports dual AMD EPYC 7000 series CPUs, 10x front 2.5″ hot swap bays, and a surprising number of PCIe slots. In this overview, we are going to delve into the inner workings of the server and explain how it can be customized to meet the needs of users.

We are not going to spend much time on the front panel except to note that there are 10x 2.5″ bays, two USB 3.0 ports, and the normal status LEDs and power functions. Instead, let us get into the chassis.

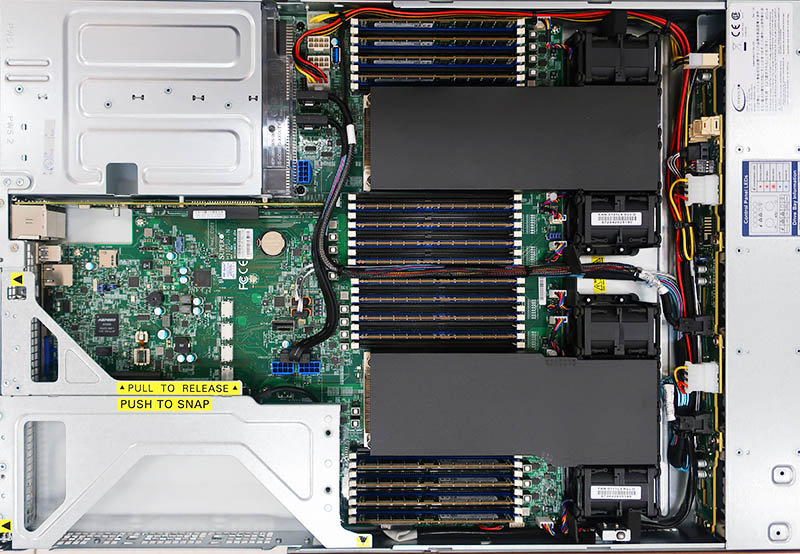

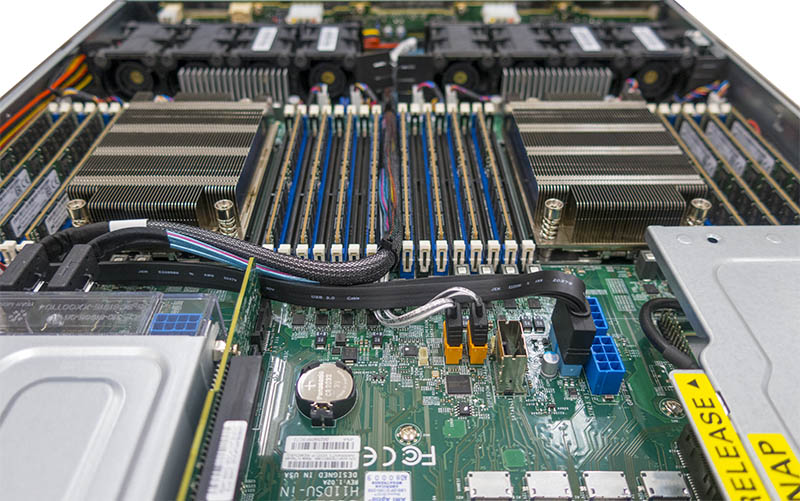

Inside the base Supermicro AS-1123US-TR4 server, one can see that there are two CPUs flanked by 16 DIMM slots each. Virtually the entire center of the system is either DDR4 DIMM slots or CPUs and heatsinks. This allows for up to 64 cores/ 128 threads in the current AMD EPYC 7000 generation using EPYC 7501, EPYC 7551, or EPYC 7601 SKUs. It also allows for up to 4TB of RAM using 128GB DIMMs. Both the 64 cores and the 4TB of RAM in a dual socket system are specs Intel Xeon Scalable (Skylake-SP) generation CPUs cannot match. In fact, if you exclude the “M” series Intel Xeon Scalable SKUs that can address up to 1.5TB each for a $3000 per CPU premium, standard Intel Xeon Scalable SKUs top out at 768GB each, or 1.5TB in a dual socket system, so this is more like 2.67x as much RAM.
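The capacity figures above are simple multiplication, and a short sketch makes the 2.67x ratio easy to check. The function names are ours for illustration, and the 768GB per-socket limit for standard Xeon Scalable SKUs is an assumption based on Intel's published specs.

```python
# Sketch of the capacity arithmetic for a dual socket EPYC 7000 system.
# Function names are illustrative; the 768GB figure for standard
# (non-"M") Xeon Scalable SKUs is an assumption from Intel's specs.

SOCKETS = 2
DIMM_SLOTS_PER_SOCKET = 16
DIMM_SIZE_GB = 128
CORES_PER_CPU = 32            # EPYC 7501 / 7551 / 7601
THREADS_PER_CORE = 2
XEON_STANDARD_GB_PER_SOCKET = 768


def total_ram_gb(sockets, slots_per_socket, dimm_gb):
    """Maximum RAM when every DIMM slot holds a DIMM of the given size."""
    return sockets * slots_per_socket * dimm_gb


def total_threads(sockets, cores_per_cpu, threads_per_core):
    """Hardware threads across all sockets."""
    return sockets * cores_per_cpu * threads_per_core


epyc_ram_gb = total_ram_gb(SOCKETS, DIMM_SLOTS_PER_SOCKET, DIMM_SIZE_GB)
xeon_ram_gb = SOCKETS * XEON_STANDARD_GB_PER_SOCKET
ram_ratio = epyc_ram_gb / xeon_ram_gb

print(f"EPYC RAM: {epyc_ram_gb} GB, cores: {SOCKETS * CORES_PER_CPU}, "
      f"threads: {total_threads(SOCKETS, CORES_PER_CPU, THREADS_PER_CORE)}")
print(f"RAM vs. standard dual Xeon Scalable: {ram_ratio:.2f}x")
```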

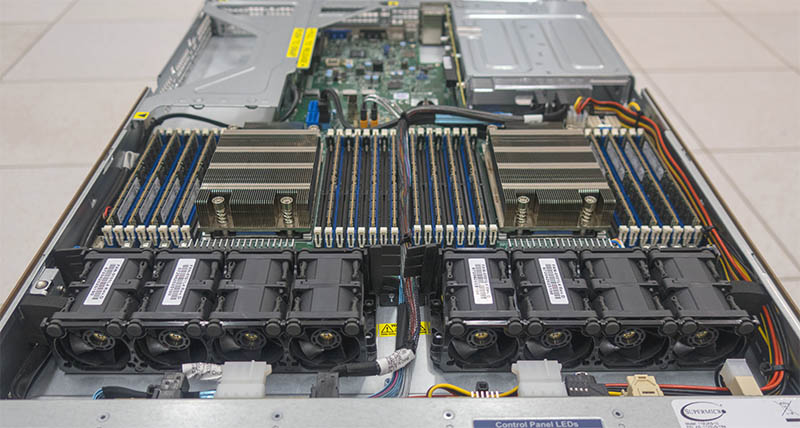

Airflow is managed by a series of fans and air shrouds that ensure two sets of fans cool each CPU for redundancy. The air shroud is perfectly functional, but it is a bit harder to re-seat than some of the hard plastic shrouds we see from Supermicro and its competitors in higher-end servers.

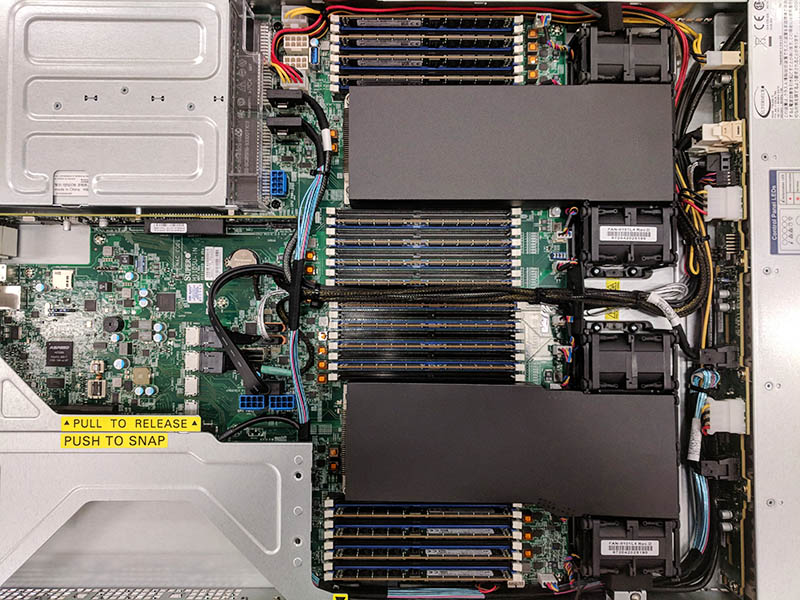

We wanted to show the system in two different configurations that we can see being common. The top configuration is wired for 10x SATA III 6.0Gbps front bays. The bottom configuration is wired for 2x NVMe U.2 / SATA III bays plus 8x SATA III 6.0Gbps bays.

Key to this change is the rightmost hot swap bays, where the backplane provides both SATA III (7-pin) and SFF-8643 connections for U.2 drives. You will also notice that adding U.2 drives changes the default cabling of the chassis. For system integrators, there is a BOM cost associated with enabling the U.2 support, but it is relatively small and we feel this is an option many customers will want.

Here is a shot along the airflow path from the front to the rear of the chassis. It shows how cables have only three paths from the motherboard to the front panel due to the massive CPU and RAM array.

The fans are held into place by carrier cages and rubber feet. This is a design that Supermicro and other manufacturers have used for years. What this design does not incorporate is the easy hot-swap mechanisms we see on many other Supermicro systems like the Supermicro AS-4023S-TRT we reviewed. Instead, fans must be detached from their 4-pin PWM headers and then removed/ replaced. The procedure is not difficult, but replacing one of the two sets of fans cooling the CPUs will be trickier if the system is under load since the airflow shrouds also need to be removed.

Looking at the system from the opposite view, one can see how that massive array of DIMMs impacts cabling and resulting cooling options for the server.

Supermicro AS-1123US-TR4 Internal Storage Headers

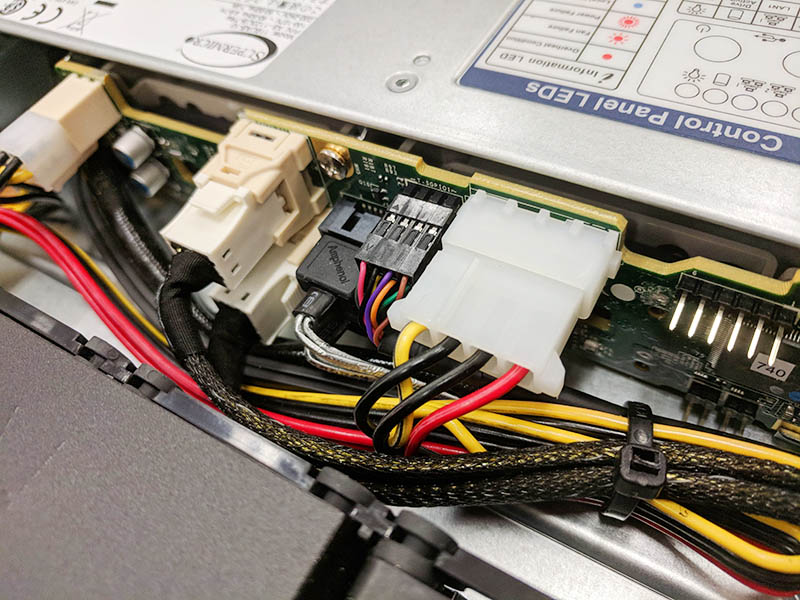

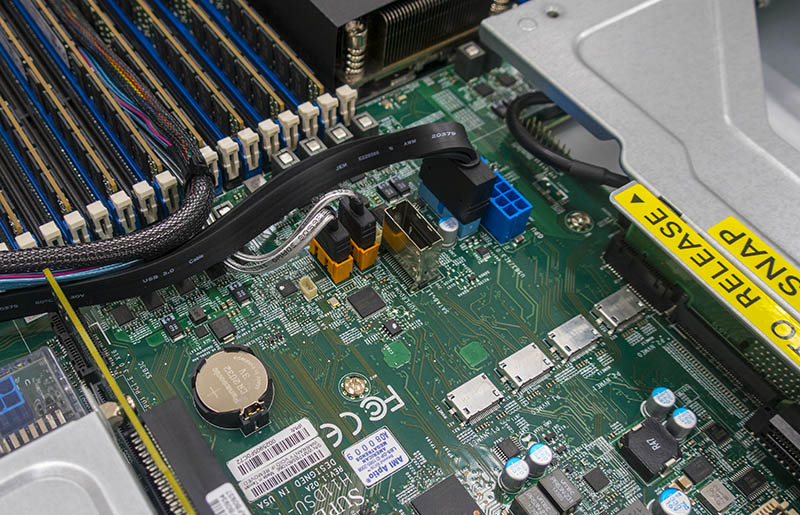

For the primary storage, there are two “gold” 7-pin headers which support SATADOM power. There is also a SATA III SFF-8087 port for another 4x SATA III ports. That SFF-8087 connector is going unused in our test system configurations. However, it can be used for additional SATA III ports if the gold ports are used for SATADOMs.

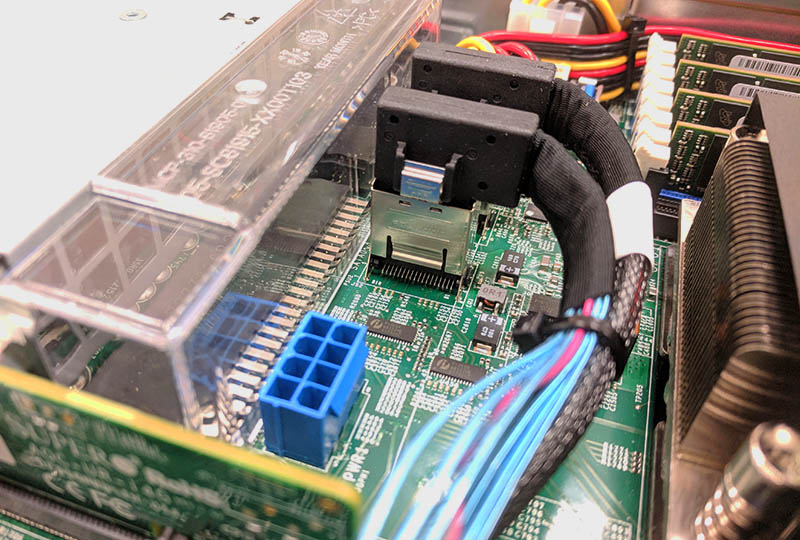

The four small connectors that are unwired in this picture are OCuLink ports for NVMe. Two go to CPU0 and the other two go to CPU1. Although an earlier overview picture shows these connected, only two are actually hooked up. This is because the same motherboard is used in the 2U “Ultra” series of servers, where there can be 4x front panel NVMe ports. On this 1U system, only two of the ports are used.

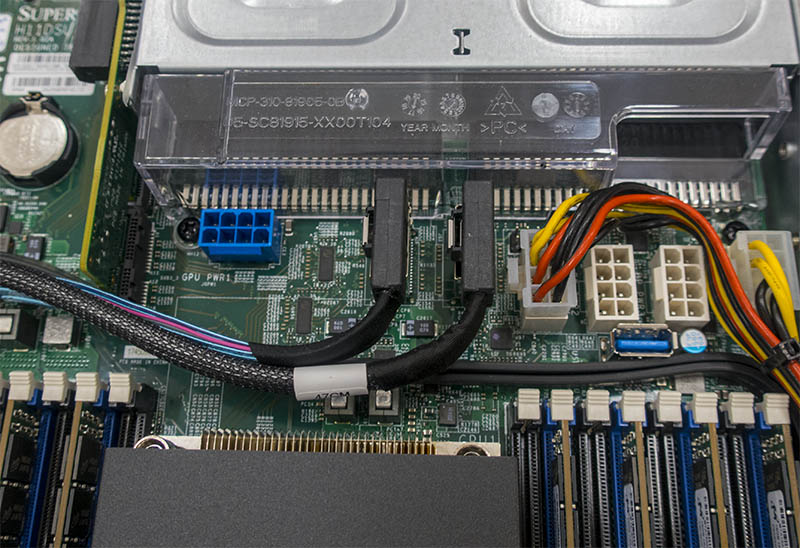

In front of the power supplies, we have two SFF-8087 connectors each providing 4x SATA III 6.0Gbps ports. One can also see features such as a USB Type-A header for embedded OS or license key installation. The GPU power connector is meant for the 2U chassis when a GPU is installed above the GPUs. We showed how this is used in the Supermicro AS-2023U in our piece: NVIDIA GPU in an AMD EPYC Server Tips for Tensorflow and Cryptomining.

The redundant power supplies are PWS-1K02A-1R models with front to rear airflow. They are 1kW models with 80Plus Titanium ratings for 96%+ efficiency.

These power supplies function as one would expect and we tested the system under full load powering off a PDU port to ensure proper failover.
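To put the efficiency and failover points in numbers, here is a rough sketch. It assumes a flat 96% efficiency, which real power supplies only reach at certain load levels, and it treats redundancy simply as the full load fitting on one 1kW supply. The function names and the 600W example load are hypothetical, not measurements from our testing.

```python
# Rough PSU arithmetic. Assumptions: flat 96% efficiency at any load and
# a simple "load fits on one PSU" redundancy check. Names and the example
# load are hypothetical.

PSU_RATED_W = 1000.0
PSU_EFFICIENCY = 0.96


def input_power_w(load_w, efficiency=PSU_EFFICIENCY):
    """AC power drawn from the PDU to deliver load_w of DC output."""
    return load_w / efficiency


def conversion_loss_w(load_w, efficiency=PSU_EFFICIENCY):
    """Power lost as heat inside the PSU."""
    return input_power_w(load_w, efficiency) - load_w


def survives_single_psu_failure(load_w, rated_w=PSU_RATED_W):
    """True if the remaining supply can carry the whole load alone."""
    return load_w <= rated_w


example_load_w = 600.0  # hypothetical system load
print(f"Input power: {input_power_w(example_load_w):.1f} W")
print(f"Conversion loss: {conversion_loss_w(example_load_w):.1f} W")
print(f"Survives one PSU failing: {survives_single_psu_failure(example_load_w)}")
```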

Supermicro AS-1123US-TR4 Connectivity and Expansion

One of the more unique features of the Supermicro AS-1123US-TR4 is its expansion slots. Unlike other offerings that use channel motherboards (e.g. ATX/ EATX size boards), the custom motherboard form factor allows for efficient use of multiple PCIe risers to maximize expansion options.

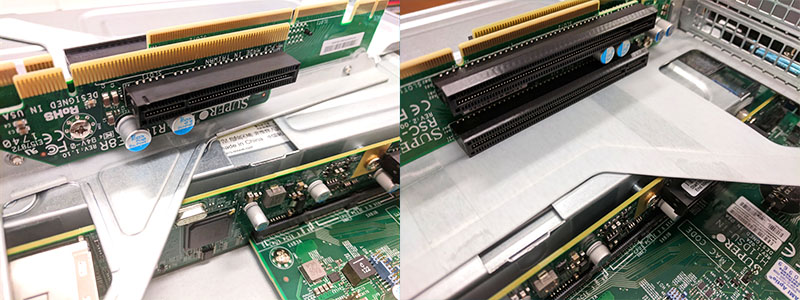

The left slots are PCIe 3.0 x16 slots in full-height, half-length configurations. The right slot above the VGA/ serial port is a PCIe 3.0 x8 low-profile, half-length slot.

We pulled the risers out and flipped them over to show what they look like as it is hard to see when they are installed in the chassis.

Showing the impact of this, one can see that the half-height slot is perfect for 40GbE / 56Gbps FDR InfiniBand which we have installed on the top chassis.
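A quick sanity check on why a PCIe 3.0 x8 slot suits these adapters: PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding. The sketch below compares usable slot bandwidth to nominal NIC line rates. It ignores PCIe packet overhead, so real throughput is somewhat lower, and the function names are ours for illustration.

```python
# Compare PCIe 3.0 slot bandwidth against nominal NIC line rates.
# Ignores PCIe packet (TLP) overhead, so this is an upper bound.
# Function names are illustrative.

PCIE3_GT_PER_S = 8.0          # transfers per second per lane, in GT/s
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding


def pcie3_gbps(lanes):
    """Usable PCIe 3.0 bandwidth per direction in Gbps."""
    return lanes * PCIE3_GT_PER_S * PCIE3_ENCODING


def slot_fits(nic_gbps, lanes):
    """True if the slot bandwidth meets or exceeds the NIC line rate."""
    return pcie3_gbps(lanes) >= nic_gbps


for name, rate in [("40GbE", 40), ("56Gbps FDR InfiniBand", 56), ("100GbE", 100)]:
    print(f"{name}: x8 fits={slot_fits(rate, 8)}, x16 fits={slot_fits(rate, 16)}")
```

By this estimate an x8 slot offers roughly 63Gbps, enough for a single 40GbE or 56Gbps FDR port, while a 100GbE port would need one of the x16 slots.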

In terms of rear I/O, there are two USB 3.0 ports, a VGA port, and a serial console port for physical KVM access in a data center. The management port is for out-of-band IPMI management which we will discuss in the next section.

Base networking on this model is found in 4x 1GbE via an Intel i350 chipset. The NICs and controller are found on the AOC-UR-i4G riser. These risers allow Supermicro to add additional functionality and change a single riser to offer 10GBase-T, SFP+, SFP28 25GbE and other offerings using a base model design similar to other top-tier vendors. The 4x 1GbE configuration we have is essentially the base configuration and is intended to be used in conjunction with a higher-speed NIC in the add-on card slots.

That riser has one other bit of functionality we wanted to mention. It has a PCIe 3.0 x8 low-profile, half-length slot which is primarily used to install additional SAS3 controllers. One can install a SAS3 controller in this slot to provide an 8x 2.5″ SAS3 capability up front, with the other two bays being either NVMe or SATA III based on configuration. Using this internal slot is handy as it frees up valuable rear I/O slots.
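The front bay options described in this overview can be summarized as a small data structure. This is only an illustrative sketch: the class and layout names are ours, not Supermicro part numbers or configuration codes.

```python
# Illustrative summary of the three front bay wirings described in this
# overview. Class and layout names are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class BayLayout:
    name: str
    sata: int = 0          # bays wired to onboard SATA III
    sas3: int = 0          # bays wired to an add-in SAS3 controller
    nvme_or_sata: int = 0  # rightmost hybrid bays (U.2 NVMe or SATA III)

    def total(self):
        """Total number of front bays covered by this layout."""
        return self.sata + self.sas3 + self.nvme_or_sata


LAYOUTS = [
    BayLayout("all SATA", sata=10),
    BayLayout("SATA plus U.2", sata=8, nvme_or_sata=2),
    BayLayout("SAS3 via internal riser slot", sas3=8, nvme_or_sata=2),
]

for layout in LAYOUTS:
    print(f"{layout.name}: {layout.total()} bays "
          f"(SATA={layout.sata}, SAS3={layout.sas3}, hybrid={layout.nvme_or_sata})")
```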

Next, we will cover the block diagram and the management features before moving on to the performance, power consumption and our final thoughts on the platform.

A criticism of STH reviews is that they don’t put price in. These barebones are like $1600 and available from channel partners. For a barebones that is about right but if you’re loading with RAM, CPUs, a 25/100Gb NIC, and 10 drives the $1600 is a small cost overall.

When are we gonna see 10x nvme? That’s really the sweet spot for EPYC.

Good lookin’ system though and really thorough review. You guys have kicked it up a notch on the server reviews.

Too bad this isn’t ten NVMe like the Dell R6415. It looks really nice and since we’re doing NVMe-oF attached storage these days with less local it’s fine for us. Something to talk to our reseller about. Price is really reasonable here Tyrone.

KILLS me that they didn’t do 4 NVMe.

Why did they do a x8 internal on the riser not an x16? They’ve got risers with that. Since 8 SAS3 isn’t going to do us much good an x16 internal slot filled with 4 M.2’s I’d say is ideal.

I’m with these guys. I want one. If you could get the 7401’s at 7401P price I’d have a stack of these already.

@Harold Walters, @Rao76

Have you looked at TYAN’s GT62F-B8026?

It got 10 x NVMe + 2 x NVMe M.2.

https://www.tyan.com/Barebones_GT62FB8026_B8026G62FE10HR

@George not the same class right? Single socket.

Have you also noticed that you have to disable “above 4G decoding” in the BIOS in order for a 100G ConnectX-4 to initialize properly?

Also, it is worth mentioning that if you fully populate the DIMM slots, memory frequency goes down to 2133MHz.

For those looking for NVMe, the SMC site shows two versions of the AS-1123US.

AS-1123US-TR4 = 10 x 2.5 SATA + 2 x NVMe

AS-1123US-TN10RT = 10 x U.2 NVMe

Really great review STH. Looking forward to getting a few of these.

@Jure: please check the manual on page 34. Pick the right DIMMs and in most situations you will have 2666MHz.

@tyrone saddleman

Yes it is rather unfortunate that NAND and RAM aren’t going to be cheaper any time soon. Which means the overall cost of Intel / AMD is becoming much smaller in % of TCO. Although right now AMD is selling as much EPYC as they can.