Supermicro AS-1014S-WTRT Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. Starting with our 2nd Generation Intel Xeon Scalable benchmarks, we are adding a number of our workload testing features to the mix as the next evolution of our platform.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results, and highlight a number of interesting data points in this article.

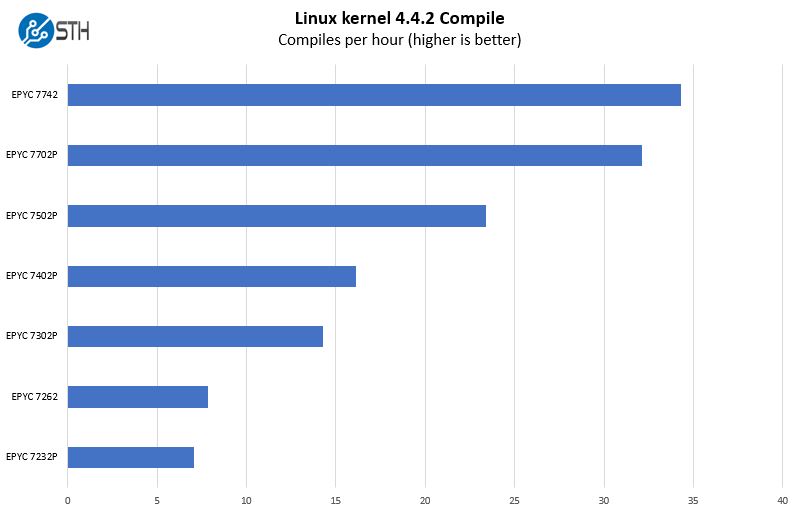

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard configuration file, then build the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:

We are giving a fairly broad set of performance figures here, ranging from the $450 8-core AMD EPYC 7232P to the 64-core AMD EPYC 7742. It is impressive that the Supermicro AS-1014S-WTRT supports not just the 225W 48-core and 64-core parts, but also the higher 240W configurable TDP limit.
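The compiles per hour metric is a simple conversion from elapsed time. Here is a minimal sketch, assuming the raw measurement is the wall-clock time in seconds for one full kernel compile. The function name and the sample timings are hypothetical and are not results from this review.

```python
def compiles_per_hour(seconds_per_compile: float) -> float:
    """Convert the wall-clock time of one compile (seconds) to compiles per hour."""
    if seconds_per_compile <= 0:
        raise ValueError("compile time must be positive")
    return 3600.0 / seconds_per_compile


# Hypothetical timings for illustration only, not measured data.
sample_timings = [("system A", 120.0), ("system B", 45.0)]

for label, seconds in sample_timings:
    print(f"{label}: {compiles_per_hour(seconds):.1f} compiles/hour")
```

Because the metric is the inverse of time, a higher bar is better, and halving the compile time doubles the figure.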

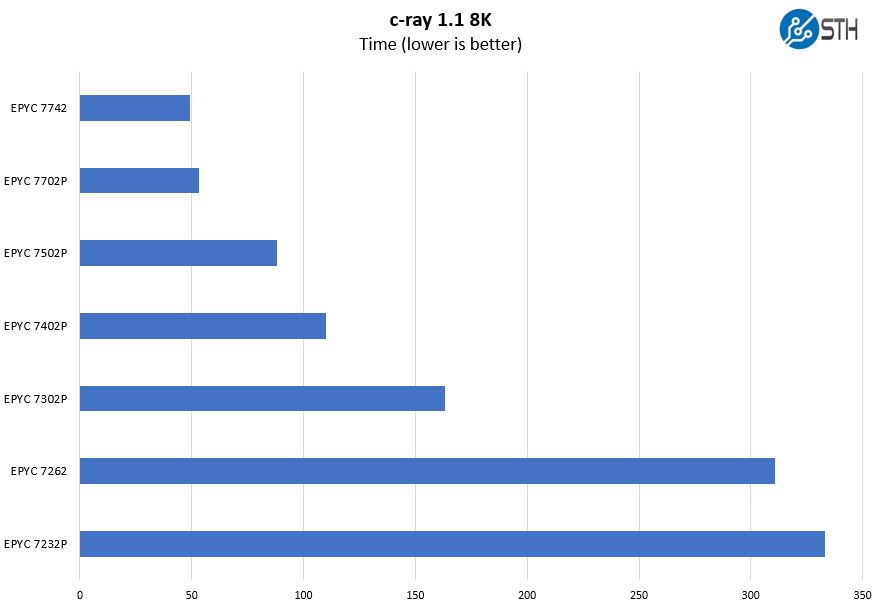

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

Here you can see that those options span a fairly large performance spectrum. If you just want to get a single 4-bay 3.5″ or U.2 NVMe platform connected with some RAM and a 10Gbase-T networking option, the AMD EPYC 7232P provides a lot of value.

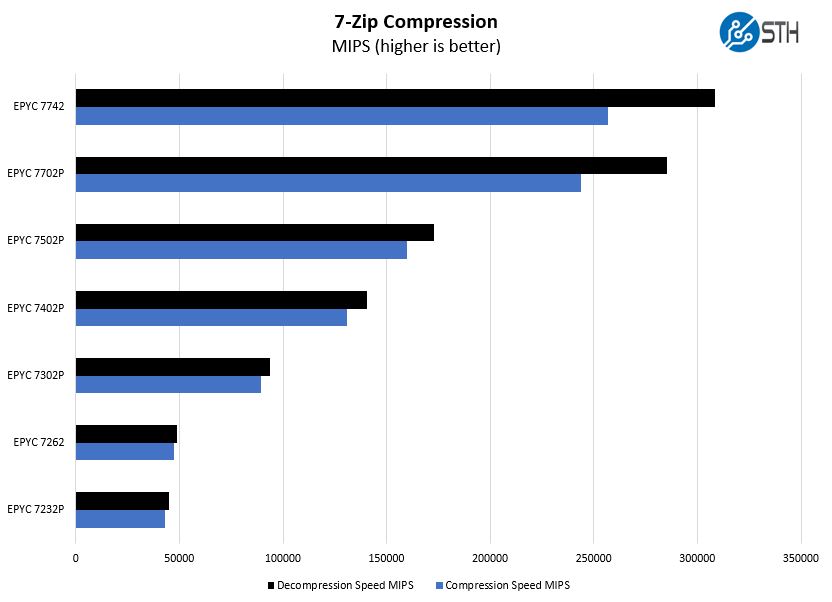

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Upgrading from the EPYC 7232P to the EPYC 7302P is only around $400 or about $50 per core. If you want to run applications on the Supermicro AS-1014S-WTRT and not just use it as a basic storage box, then this is probably the option we would suggest. Also, we think for the dedicated hosting market, this is going to be a very strong option.
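The per-core figure above is incremental cost divided by added cores. Here is a small sketch of that arithmetic, using the roughly $400 price difference quoted above and core counts of 8 for the EPYC 7232P and 16 for the EPYC 7302P. The function name is hypothetical, and actual street pricing will vary.

```python
def incremental_cost_per_core(price_delta_usd: float, cores_low: int, cores_high: int) -> float:
    """Return the extra dollars paid per additional core when stepping up a SKU."""
    added_cores = cores_high - cores_low
    if added_cores <= 0:
        raise ValueError("the upgrade must add cores")
    return price_delta_usd / added_cores


# EPYC 7232P (8 cores) to EPYC 7302P (16 cores) at roughly $400 more.
print(incremental_cost_per_core(400, 8, 16))
```

The same helper can be pointed at any other step in the stack to compare how much each extra core costs.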

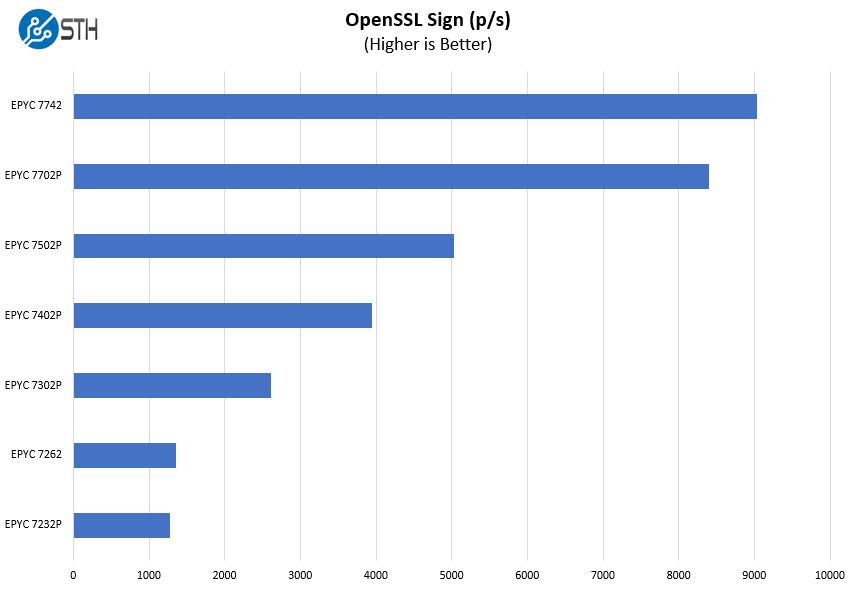

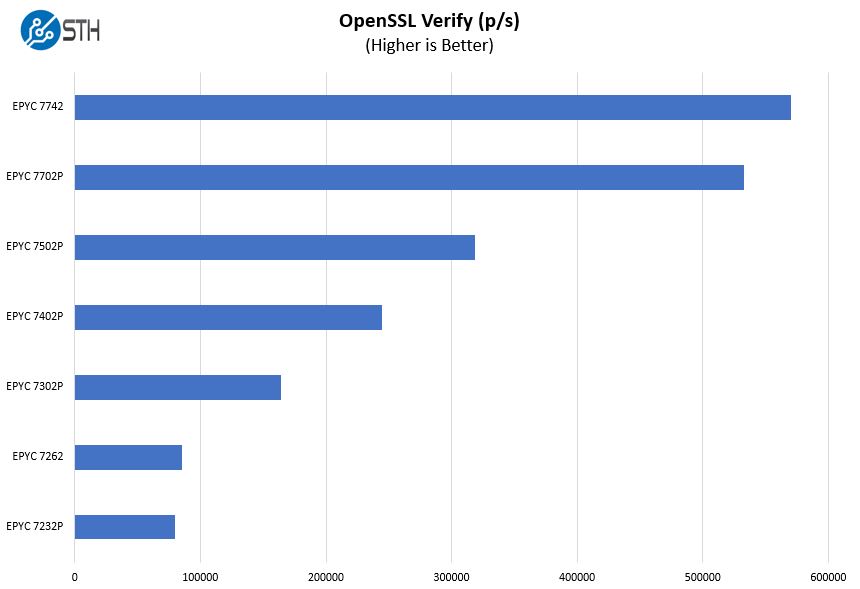

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important component in many server stacks. We first look at our sign tests:

Here are the verify results:

OpenSSL continues to show a similar trend. We also think that the AMD EPYC 7402P may be a very popular choice in this server. It only adds $400-500 incremental cost to the server over the EPYC 7302P, but again adds eight more cores. For a lower-cost platform like the Supermicro AS-1014S-WTRT, we think this is probably the sweet spot for many low-cost applications.

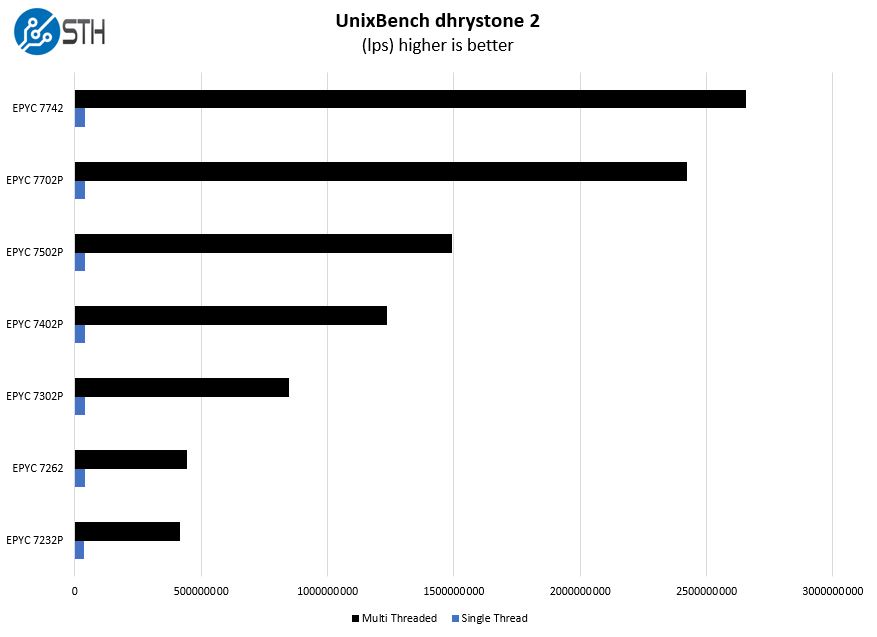

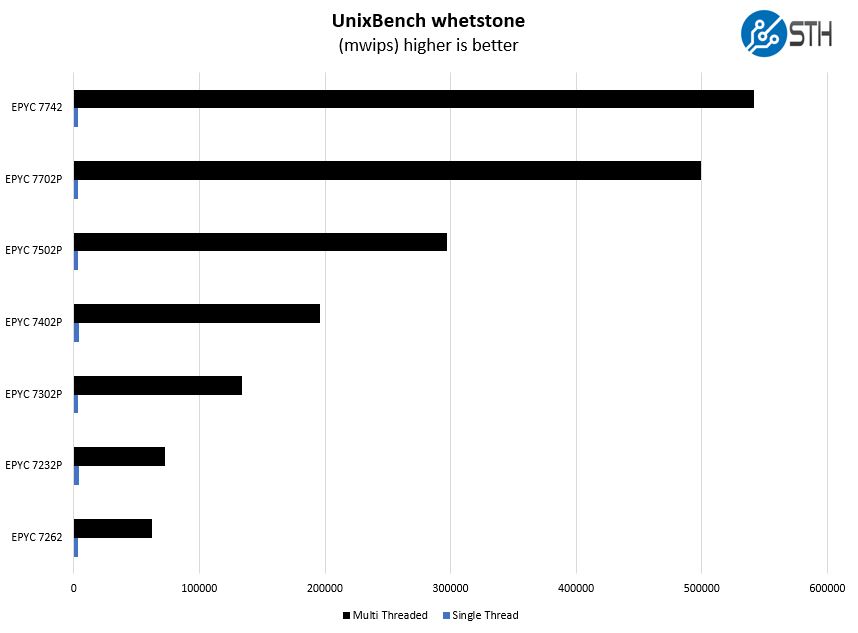

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging, but we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used, so we are including it in this data set. Here are the Dhrystone 2 results:

Here are the Whetstone results:

If you are focused on an 8GB of memory per core ratio as many cloud providers use, using an AMD EPYC 7502P with 8x 32GB DIMMs may make a lot of sense. It also helps consolidate multiple smaller nodes into a single socket. If you have high per-socket VMware license costs and are using Intel Xeon Gold 5200 or Xeon Silver CPUs, then consolidating to the Supermicro AS-1014S-WTRT may make the CPU pricing seem very small.
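The memory-per-core ratio is easy to check for any DIMM and CPU pairing. Here is a minimal sketch, assuming the 32 cores of the AMD EPYC 7502P and the eight DIMM slots filled as described above. The function name is hypothetical.

```python
def memory_per_core_gb(dimm_count: int, dimm_size_gb: int, cores: int) -> float:
    """Return installed memory per physical core, in GB."""
    if cores <= 0:
        raise ValueError("core count must be positive")
    return dimm_count * dimm_size_gb / cores


# 8x 32GB DIMMs with a 32-core EPYC 7502P.
total_gb = 8 * 32
ratio = memory_per_core_gb(8, 32, 32)
print(f"{total_gb}GB total, {ratio:.0f}GB per core")
```

Swapping in 64GB DIMMs with a 64-core part keeps the same ratio, which is one way to think about scaling this platform up.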

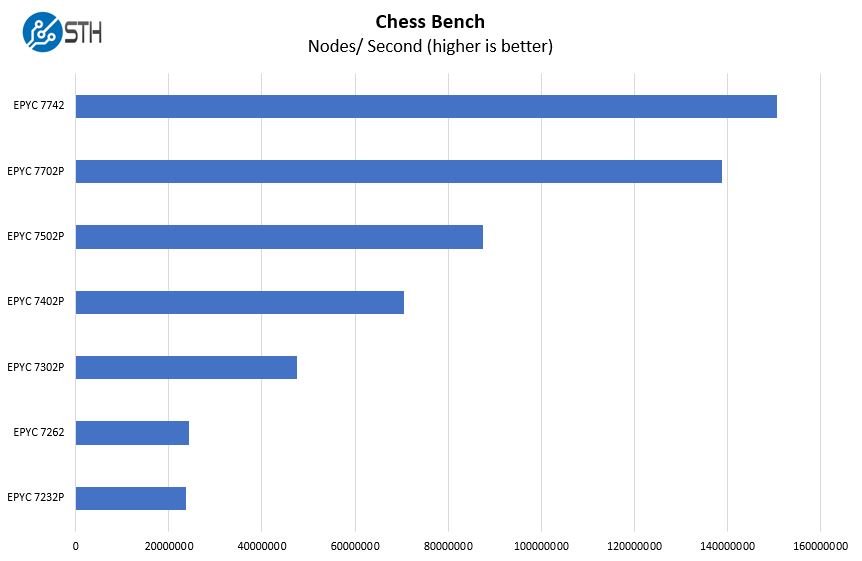

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

One of the great features of the Supermicro AS-1014S-WTRT is the ability to support 64-core AMD EPYC SKUs. That includes the top-end EPYC 7742 and its maximum cTDP of 240W. We think that the more natural pairing with the Supermicro AS-1014S-WTRT is the AMD EPYC 7702P, which is under $5000 for 64 cores. That $5000 CPU in the Supermicro platform can replace two to four Intel Xeon Scalable Platinum or Gold SKUs. A single one of the CPUs that the 64-core Supermicro platform replaces can cost more than the entire AMD server.

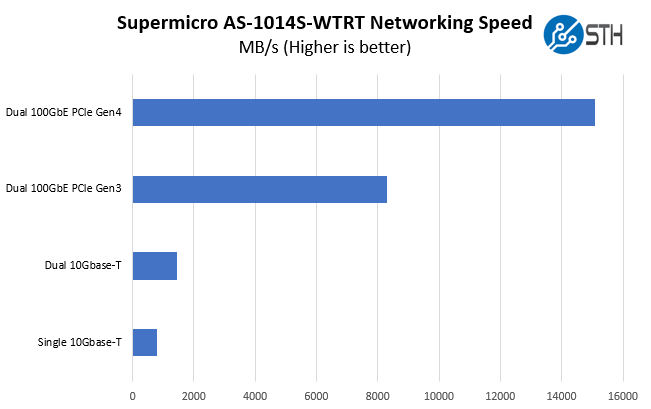

Supermicro AS-1014S-WTRT Network Performance

Taking a quick look at network performance shows some of the primary benefits of the Supermicro-AMD solution over a traditional Xeon server.

We have the onboard 10Gbase-T (Broadcom) figures in the chart, but there is more to the networking story than the onboard ports. Utilizing the PCIe Gen4 slots allows for two 100GbE ports or a single 200GbE port to be driven from a single NIC. Practically, that means one gets around twice the bandwidth per Mellanox ConnectX-5 PCIe Gen4 NIC compared with the Gen3 versions of the same cards. For customers, that means higher performance per card, or potentially significantly lower costs by reducing the number of NICs, switch ports, and transceivers/cables required to hit given performance targets. For this Supermicro platform, this is a big deal.
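The Gen4 versus Gen3 point can be checked with rough link-bandwidth arithmetic. Here is a minimal sketch, assuming the NIC sits in an x16 slot (the slot width is an assumption, not stated above) and using the per-lane rates of 8 GT/s for Gen3 and 16 GT/s for Gen4 with 128b/130b encoding. It ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are hypothetical.

```python
# Raw per-lane transfer rates in GT/s for each PCIe generation.
GT_PER_LANE = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in Gbps, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes


def ports_supported(gen: int, port_gbps: float, lanes: int = 16) -> int:
    """How many ports of a given speed the link can feed at line rate."""
    return int(pcie_gbps(gen, lanes) // port_gbps)


for gen in (3, 4):
    print(
        f"PCIe Gen{gen} x16: ~{pcie_gbps(gen):.0f} Gbps, "
        f"{ports_supported(gen, 100)} x 100GbE at line rate"
    )
```

Under these assumptions, a Gen3 x16 link tops out at roughly 126 Gbps, enough for one 100GbE port at line rate, while a Gen4 x16 link at roughly 252 Gbps has room for two 100GbE ports or one 200GbE port.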

Next, we are going to look at power consumption, our STH Server Spider, and give our final words.

256gb and 24 cores might be right for us. With dual 100gbe who needs local storage?

It is worth noting the H12SSW motherboard will support One M2 drive with x4 PCI-E lanes…

Nice server, nice review. Work on the scoring system because it’s pointless right now.

I’m hoping these will be available with 8 or 10 bay 2,5″.

This format would be nice for an all-flash ceph cluster.

michael the Supermicro AS-1114S-WTRT is the 10x 2.5″ version of this system. I agree that is something we would look at for our all-flash Ceph cluster as well.

Patrick, you mentioned that 16GB, 32GB and 64GB DIMMs are going to be popular configurations. However is there any 128GB DIMMs option still at 3200MHz available within Supermicro servers? For example within Supermicro AS-1114S-WTRT or any other else?

Mike – 128GB and 256GB DIMMs are supported. Technically this AS-1014S-WTRT can take up to 2TB of memory (8x 256GB.) The comment was more around the idea that I think the majority of configurations will focus on 16GB-64GB DIMMs given the current state of pricing.

Supermicro publishes QVL for the different parts you can put into the servers, eg.:

https://www.supermicro.com/support/resources/memory/display.cfm?sz=64&mspd=3.2&mtyp=139&id=08392F6FB14F085B29B5A223923DA551&prid=86918&type=DDR4%201.2V&ecc=1&reg=1&fbd=0

They don’t list anything beyond 64 GB though.

@Patrick would you be so kind and cover with more detail the BIOS settings related to the CPU ?

I’m intrigued about the possibility of fine tuning numa configuration – I’m interested into checking up whether it is possible to divide CPU into NUMA nodes based on LLC node boundaries.

I’m under impression that this could help Windows server scheduler to handle with the way how AMD Rome CPU is constructed.

Unfortunately I did not see anything about it on the vendor support pages yet.

Yeah, its such a shame that the M.2 slots only is x2, would be great to throw a couple of 905P or P4801X in there, still can but i wonder how much they will throttle/bottleneck.

And i guess they cant be remapped/switched to 2x x4 instead of 4x x2 as some motherboards can?

Ey, don’t mind me, read it wrong, i could have sworn it had 4 M.2 slots, got it backwards in my head. Yeah, then its even worse, such a weird design choice

Does anyone know if it is possible to Raid1 2xM.2 slots in the Bios?

#Typo: this review says: 25.6″ (597mm) but 25.6″ is actually 650mm (as confirmed on the SM website).