Supermicro ARS-111GL-NHR Internal Hardware Overview

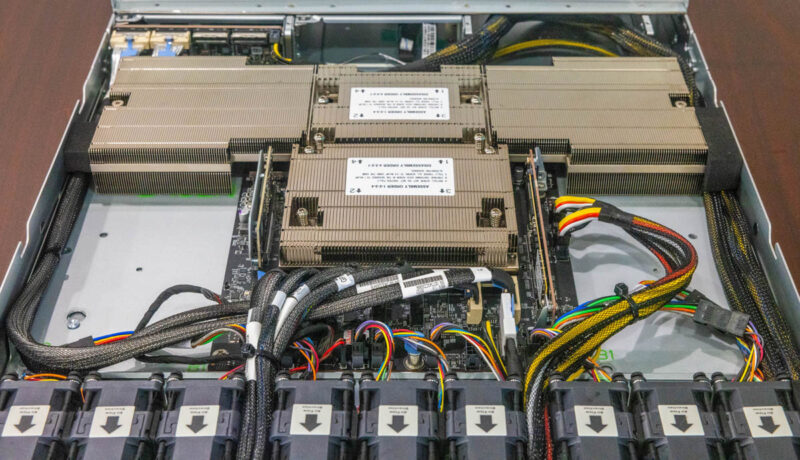

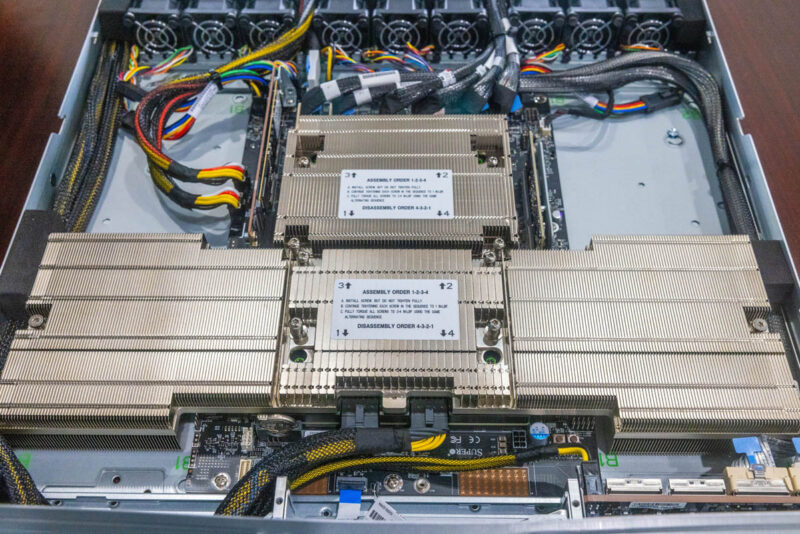

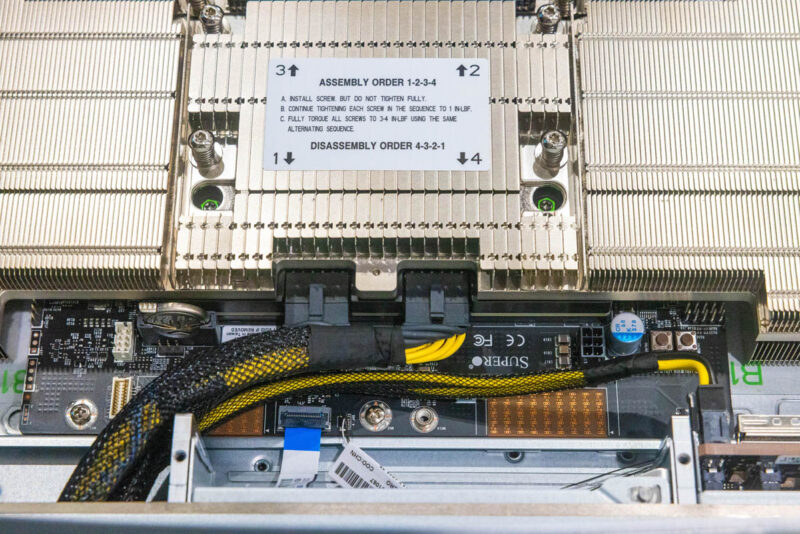

Inside the system, the first thing we wanted to touch on is cooling. A four-part heatsink spans the entire width of the chassis and occupies the center of the system.

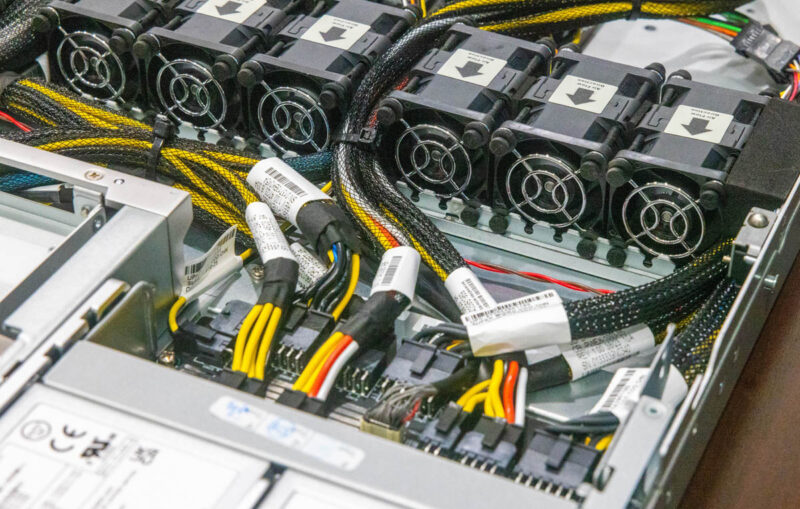

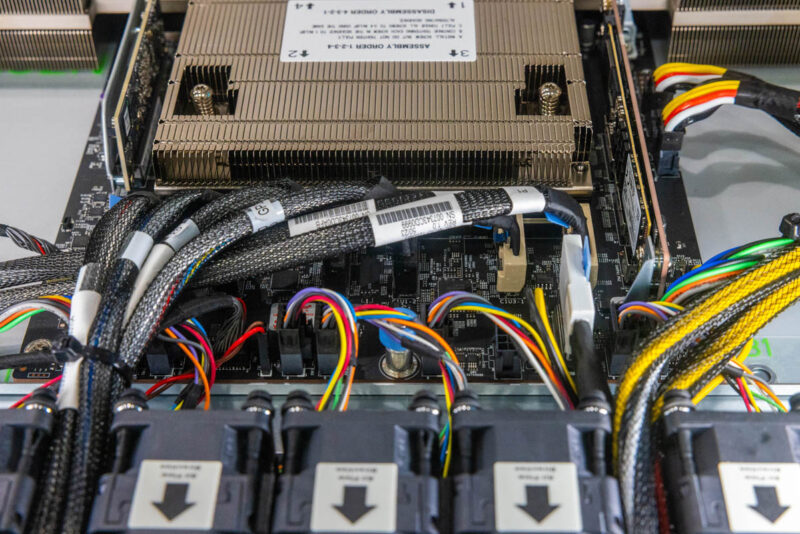

We also get nine dual fan modules because this server needs to move a ton of air. Something quite different in this design is that the fans sit between the PCIe slots/power supplies and the CPU, in the rear half of the chassis. Most servers we see today place their fans between the storage and the CPU.

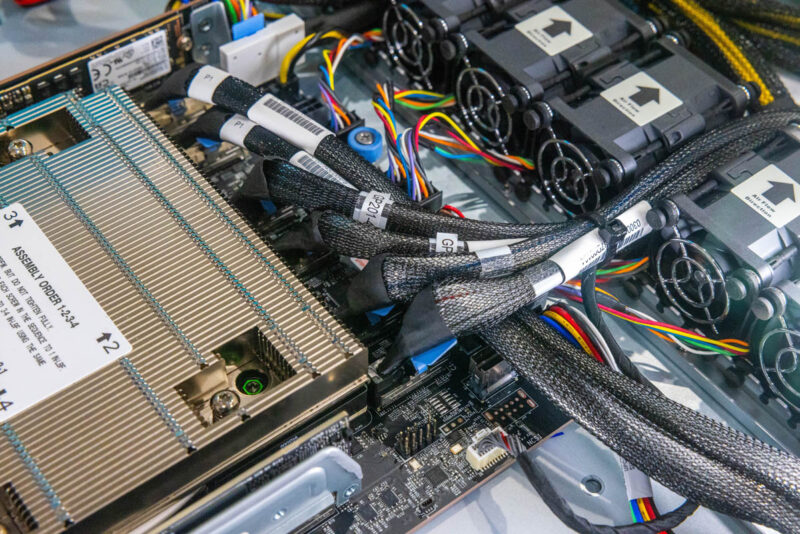

The 1U heatsink uses heat pipes and fins of different sizes, and it is so wide that the only way to route cables between the front and rear of the chassis is through small channels on either side of it.

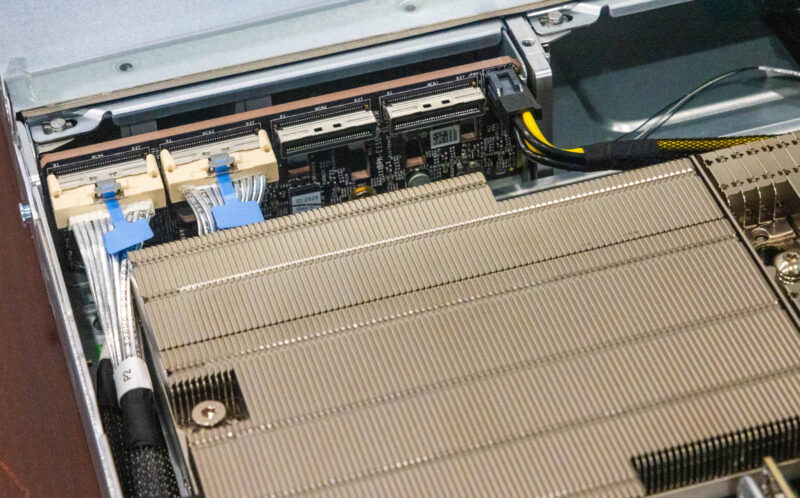

On the front storage, you can see that we have two MCIO x8 connectors. This means that only four of the eight front E1.S bays have PCIe Gen5 connectivity in this configuration.

The other two MCIO x8 connectors are not populated.
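The bay count follows from simple lane arithmetic. The sketch below assumes each E1.S bay is wired as a PCIe Gen5 x4 link (consistent with the 4×4 lanes mentioned later for the front panel); the function name `connected_bays` is hypothetical and only illustrates the math.

```python
# Hypothetical helper: how many E1.S bays the populated MCIO x8 connectors can feed.
MCIO_LANES = 8   # lanes carried by one MCIO x8 connector
E1S_LANES = 4    # assumption: each front E1.S bay uses a PCIe Gen5 x4 link
TOTAL_BAYS = 8   # front E1.S bays on this chassis


def connected_bays(populated_connectors: int) -> int:
    """Return how many bays get PCIe lanes, capped at the physical bay count."""
    lanes = populated_connectors * MCIO_LANES
    return min(lanes // E1S_LANES, TOTAL_BAYS)


print(connected_bays(2))  # reviewed configuration: 4 of 8 bays
print(connected_bays(4))  # hypothetical: all four connectors cabled
```

Capping at `TOTAL_BAYS` keeps the result physical even if more lanes were hypothetically available than there are bays.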

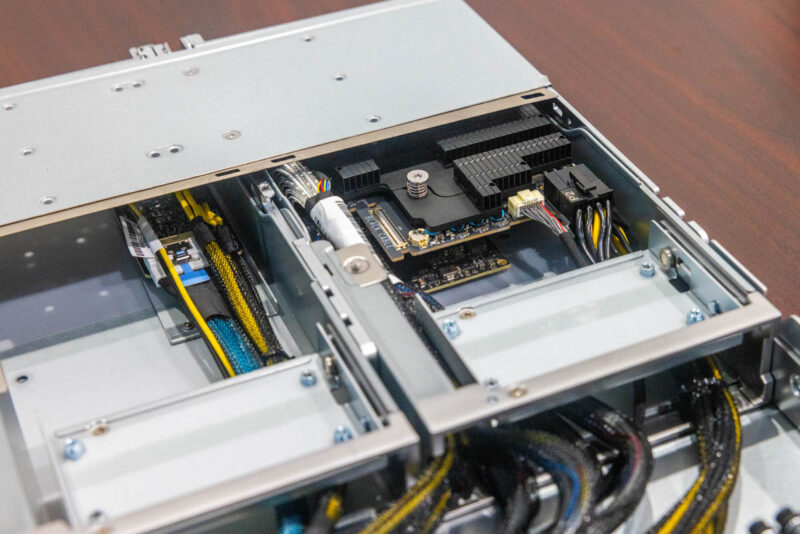

The GH200 integrates the CPU, RAM, and primary accelerator onto a single package, so the “motherboard” in this platform is probably better thought of as an I/O expansion board. Off that I/O expansion board, we have a replaceable BMC card in an OCP DC-SCM module. DC-SCM modules are becoming more popular since they let customers swap in different BMCs without requiring a different motherboard.

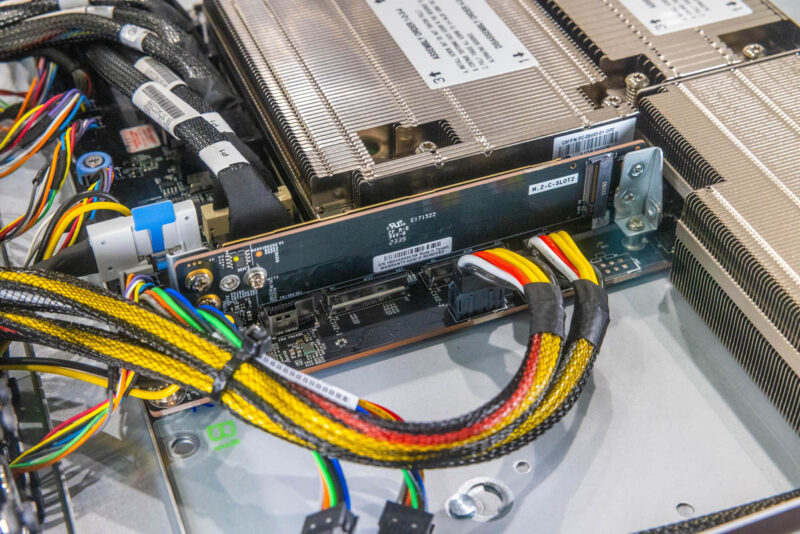

Boot storage is provided by a dual M.2 riser.

Here we can see the boot drive installed on the other side of the dual M.2 riser.

It may be hard to see the I/O expansion board, or motherboard, under the giant GH200 module and heatsink. There is actually not much on this motherboard, and most connections are cabled off it.

One set of cables is from the power supplies. The power supplies plug directly into a power distribution board, and then the power is delivered to the various backplanes, motherboards, expansion slots, and even the GH200.

The other set of cables is the MCIO cable set. Years ago, as we were entering the PCIe Gen4 era, we discussed how motherboards were going to start removing physical PCIe slots and instead opt for cabled connections to various slots. NVIDIA MGX platforms adopt that approach and use MCIO x8 connections heavily to carry PCIe lanes throughout a system.

Some of the MCIO cables carrying PCIe lanes have to pass through the fan partition, while others need to be routed around the giant GH200 heatsink.

One can also see fan headers on the fan side of the motherboard.

Something many folks do not know is that the NVIDIA GH200 receives power directly from the PSUs/power distribution board. In standard Intel Xeon, AMD EPYC, and Ampere servers, power is delivered to the CPU through the CPU socket. In Grace Hopper, power is delivered directly to the board.

Here is another look at the two power connections. We covered those unpopulated pads recently on our Substack, but they are not used in this system.

At the rear of the system, we have cabled connections for the PCIe slots. Here we can see the NVIDIA BlueField-3 above the NVIDIA ConnectX-7 InfiniBand card. Since the GH200 has only 64 PCIe lanes, these two x16 cards use half of that connectivity. The front-panel PCIe Gen5 E1.S storage uses another 16 lanes (4×4). That leaves only 16 lanes for other devices, such as the M.2 drives. The other implication is that there is no PCIe switch with a NIC (and possibly a storage device) on it for the Hopper GPU side. Instead, Ethernet and InfiniBand traffic goes through the CPU PCIe root complexes.
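The lane budget above can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures from the text (64 total lanes, two x16 cards, 4×4 lanes of E1.S); the dictionary layout and the `remaining_lanes` helper are illustrative assumptions, not anything from Supermicro or NVIDIA tooling.

```python
# Sketch of the GH200 PCIe lane budget described in the review.
TOTAL_LANES = 64  # PCIe lanes available from the GH200, per the text

# Illustrative allocation table: device label -> lanes consumed.
allocations = {
    "BlueField-3 (x16)": 16,
    "ConnectX-7 InfiniBand (x16)": 16,
    "Front E1.S (4 x4)": 4 * 4,
}


def remaining_lanes(total: int, used: dict[str, int]) -> int:
    """Return lanes left after all allocations; raise if the budget is oversubscribed."""
    left = total - sum(used.values())
    if left < 0:
        raise ValueError(f"oversubscribed by {-left} lanes")
    return left


print(remaining_lanes(TOTAL_LANES, allocations))  # lanes left for M.2 and other devices
```

Raising on a negative result makes an impossible configuration fail loudly instead of silently reporting a negative lane count.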

You might notice these two pieces of metal and think they are for battery backup units, supercaps, or even SSDs. That is not the case. We cover what these hold, and why they are there for another potentially multi-billion-dollar market, on our Substack. Do not miss that one if you read these reviews for reasons beyond seeing cool hardware or evaluating a purchase.

Here is the rear I/O board that has the mini DisplayPort, Ethernet, and USB Type-A ports.

All told, there is quite a bit here, but there are certainly things one gives up with a GH200 platform.

Next, let us take a look at the Block Diagram and Topology.

“You might notice these two pieces of metal” but you’ll have to click through to substack to discover what they are!

Maybe the clickbait shouldn’t come at the cost of making the article incomplete?

@A Concerned Listener – They are not being used in this system and it is an entirely different topic that was almost as big as this entire review.

I know folks may not love the fact that we have multiple publications now, but a lot of work is happening to decide what goes on the main YouTube channel versus short-form and STH versus Substack. We will not always get it right, but that is a very different topic since it is not in this server.

I can’t make sense of the spider graph for this system. How does this rank higher for networking density than for GPU density? Also, adding to the above regarding the clickbait, that’s not why we come to STH.

I’m subbed to the Substack via my company. It’s like another STH, less about the hardware and more about the business of what’s going on through the lens of hardware. I’m enjoying it so far. I’ve been reading STH for more than a decade, so I’m happy to spend some of my subscription allocation on supporting them. I’d rather do that and get exclusive content than see STH turn into Tom’s Hardware with spammy auto-play video ads.

I’ll just tell you, the paywalled article that links to is worth the monthly sub alone to me. It’s a great PK article that I didn’t know about.

I don’t understand why people are complaining. They’re promoting another pub they have and this is longer than their average server review so it’s not like they took half of a review away.

I’m getting approval to sub at work.

Accolades belong to the STH team for this review. With this and last week’s GH200, I’m learning too much here, so thanks.