Intel Ethernet 800 Series Functionality

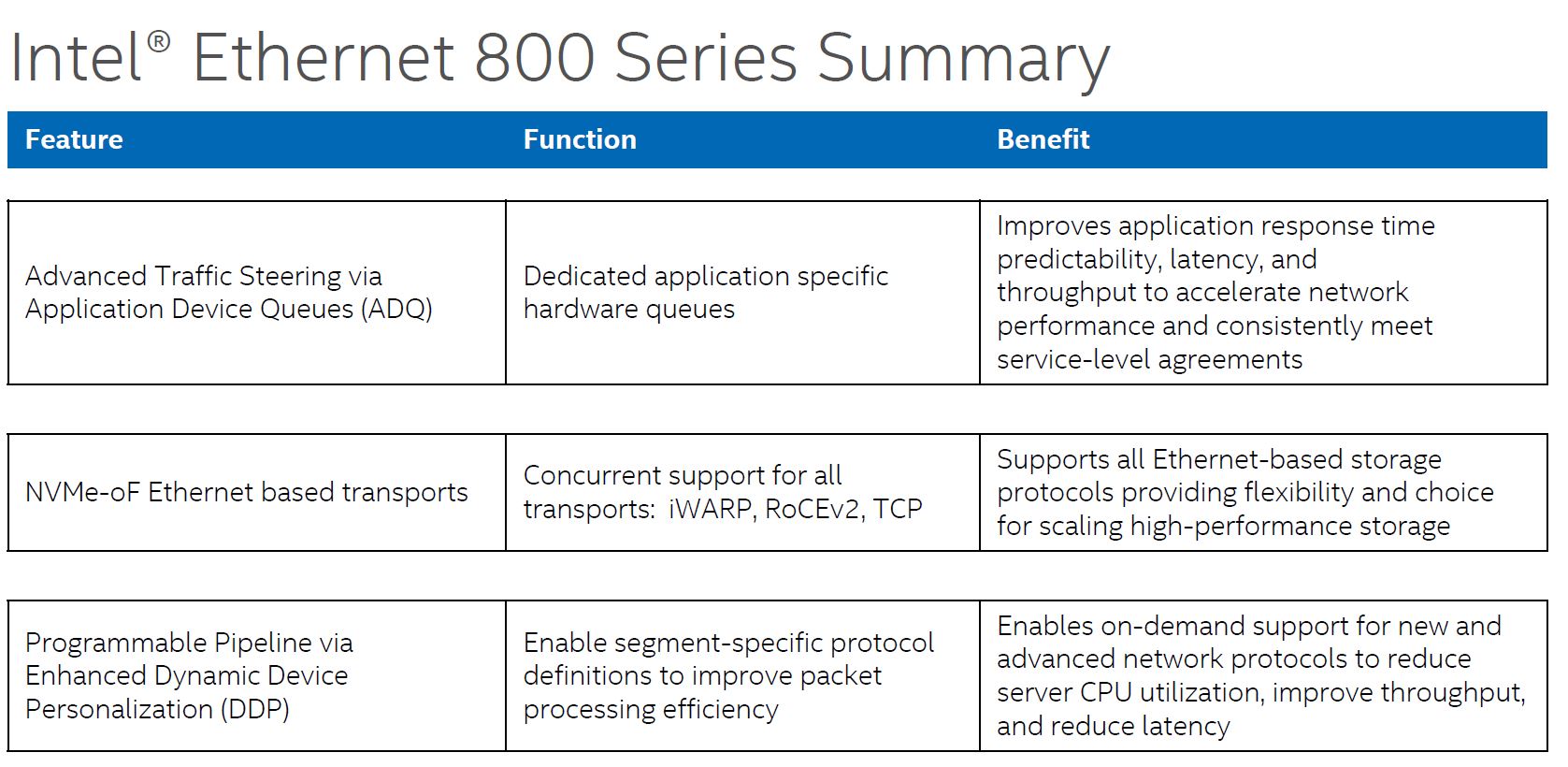

As we move into the 25GbE/100GbE generation, NICs simply require more offload functionality. Functions that were manageable on CPU cores at 10GbE or 25GbE create too much CPU utilization at 100GbE speeds. Remember, dual 100GbE is not too far off from a PCIe Gen4 x16 slot's bandwidth, which puts a lot of pressure on a system. With modern CPUs, if you fill every PCIe x16 slot with 100GbE NICs and try to run them at full speed with an application behind them, systems will simply not be able to cope. The Fortville dual 40GbE (XL710) NICs were on the edge of not having enough offload capabilities for many applications. They were known as lower-cost NICs rather than the most feature-rich ones, which makes sense. Still, with the Ethernet 800 series, Intel needed to expand its feature set to stay competitive. To do so, it primarily added three new technologies: ADQ, NVMeoF, and DDP. We are going to discuss each.
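To put the "not too far off" comparison in numbers, here is a back-of-the-envelope sketch. It assumes PCIe Gen4 runs at 16 GT/s per lane with 128b/130b encoding and ignores TLP/DLLP protocol overhead, so real usable slot bandwidth is lower than what it prints, and the NIC's share of the slot is correspondingly higher.

```python
# Per-direction PCIe bandwidth estimate from line rate and encoding only.
# TLP/DLLP protocol overhead is ignored, so real payload throughput is lower.
GT_PER_S = {3: 8.0, 4: 16.0}  # transfer rate per lane (GT/s) by PCIe generation
ENCODING = 128 / 130          # Gen3 and Gen4 both use 128b/130b line encoding


def pcie_gbps(gen: int, lanes: int) -> float:
    """Per-direction bandwidth in Gbit/s after 128b/130b encoding."""
    return GT_PER_S[gen] * ENCODING * lanes


def nic_gbps(ports: int, port_speed_gbps: float) -> float:
    """Aggregate per-direction line rate of a multi-port NIC."""
    return ports * port_speed_gbps


if __name__ == "__main__":
    slot = pcie_gbps(4, 16)
    nic = nic_gbps(2, 100)
    print(f"PCIe Gen4 x16: {slot:.1f} Gbps per direction")
    print(f"Dual 100GbE:   {nic:.1f} Gbps per direction")
    print(f"NIC line rate is {nic / slot:.0%} of the slot before protocol overhead")
```

With these assumptions the slot comes out at roughly 252 Gbps per direction, so a dual 100GbE NIC at full line rate already needs about 79% of it before any protocol overhead is counted.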

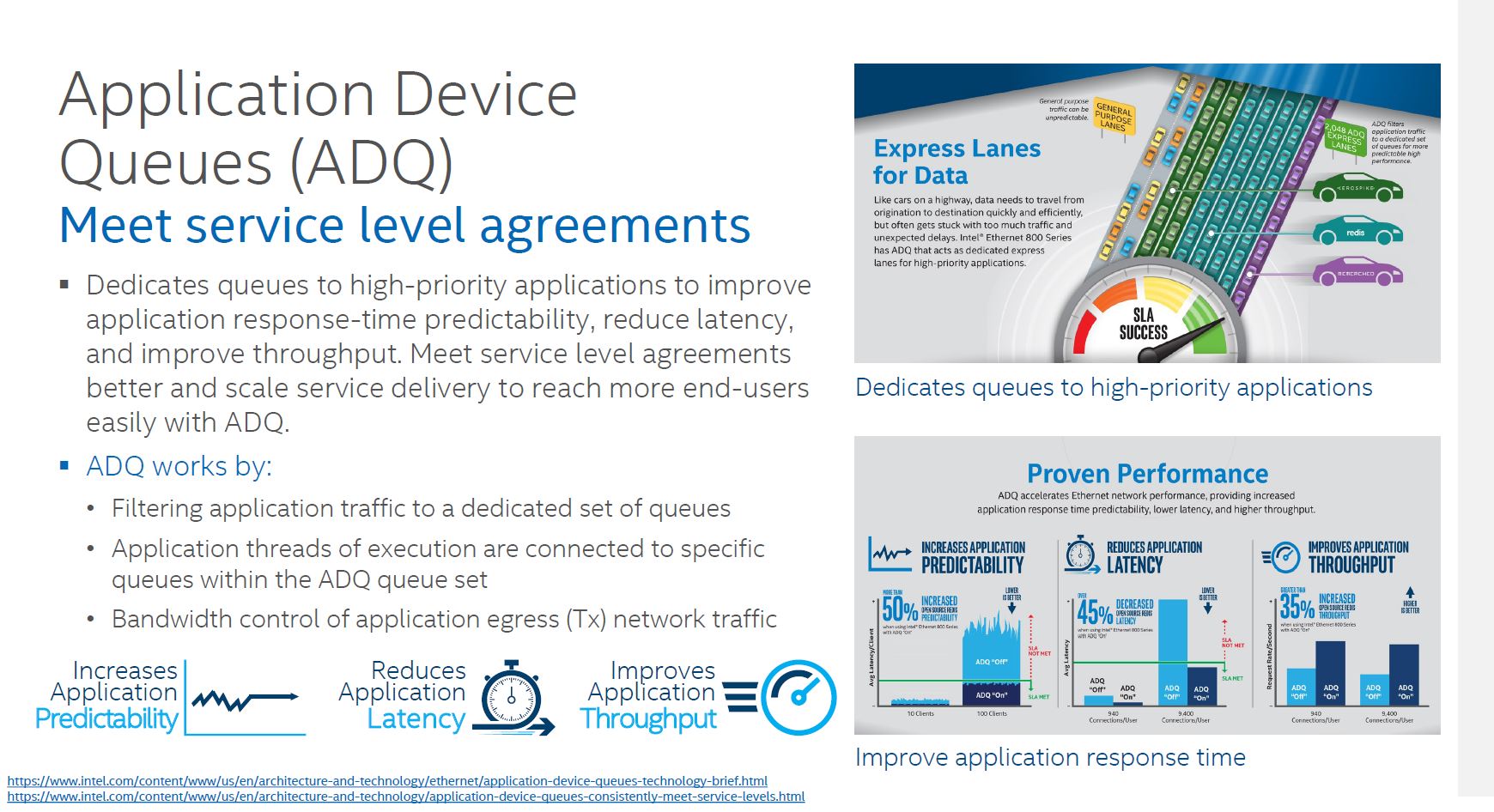

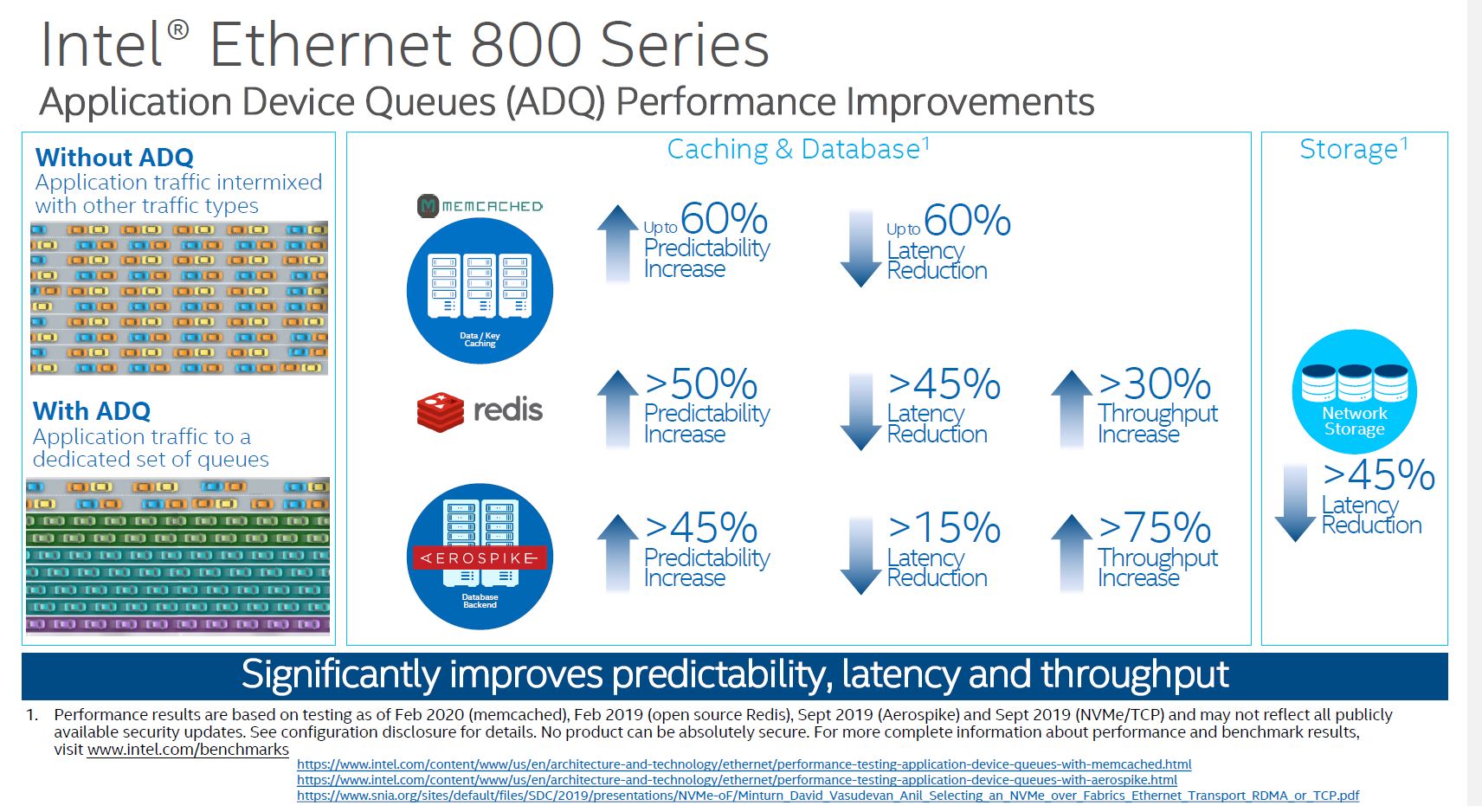

Application Device Queues (ADQ) are important on 100Gbps links (although this NIC has 50Gbps, or 2x 25GbE). At 100GbE speeds, there are likely different types of traffic sharing the link. For example, there may be an internal management UI application that is OK with a 1ms delay every so often, but there can also be a critical sensor network or web front-end application that needs a predictable SLA. That differentiated treatment is what ADQ is trying to address.

Effectively, with ADQ, Intel NICs are able to prioritize network traffic based on the application.

When we looked into ADQ, one important aspect is that the prioritization needs to be defined. That is an extra step, so this is not necessarily a “free” feature since there is likely some development work involved. Intel has some great Memcached examples, but in one server Memcached may be the primary application, and in another, it may be an ancillary function, which means that prioritization needs to happen at the customer/solution level. Intel is making this relatively easy, but it is an extra step.
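To show what "defining the prioritization" can look like on Linux, here is a minimal sketch that only builds (does not run) the kind of `ethtool`/`tc` commands Intel's ADQ setup guides are based on: enable hardware TC offload, split the queues into two traffic classes with `mqprio` in channel mode, and steer one application's TCP port into the dedicated class with a hardware-only `flower` filter. The interface name, IP address, and queue counts are hypothetical, and real deployments may need additional steps (driver private flags, application busy-polling settings) that vary by driver version.

```python
def adq_commands(iface: str, app_ip: str, app_port: int,
                 default_queues: int = 2, app_queues: int = 8) -> list[str]:
    """Build commands giving one TCP application its own hardware traffic
    class (TC1) and queue set, leaving all other traffic on TC0.

    Hypothetical helper: it only returns strings; nothing is executed.
    """
    return [
        # Allow traffic-class filtering to be offloaded to the NIC.
        f"ethtool -K {iface} hw-tc-offload on",
        # Two traffic classes in channel mode: TC0 gets queues 0..default_queues-1,
        # TC1 gets the next app_queues queues.
        (f"tc qdisc add dev {iface} root mqprio num_tc 2 map 0 1 "
         f"queues {default_queues}@0 {app_queues}@{default_queues} hw 1 mode channel"),
        # Add the clsact qdisc so ingress flower filters can be attached.
        f"tc qdisc add dev {iface} clsact",
        # Match the application's TCP flows in hardware only and send them to TC1.
        (f"tc filter add dev {iface} protocol ip ingress prio 1 flower "
         f"dst_ip {app_ip}/32 ip_proto tcp dst_port {app_port} skip_sw hw_tc 1"),
    ]


if __name__ == "__main__":
    # Hypothetical interface and address; 11211 is Memcached's default port.
    for cmd in adq_commands("ens1f0", "192.0.2.10", 11211):
        print(cmd)
```

The design point is that the filter is where the per-server decision lives: the same Memcached port might get its own traffic class on one box and be left on TC0 on another.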

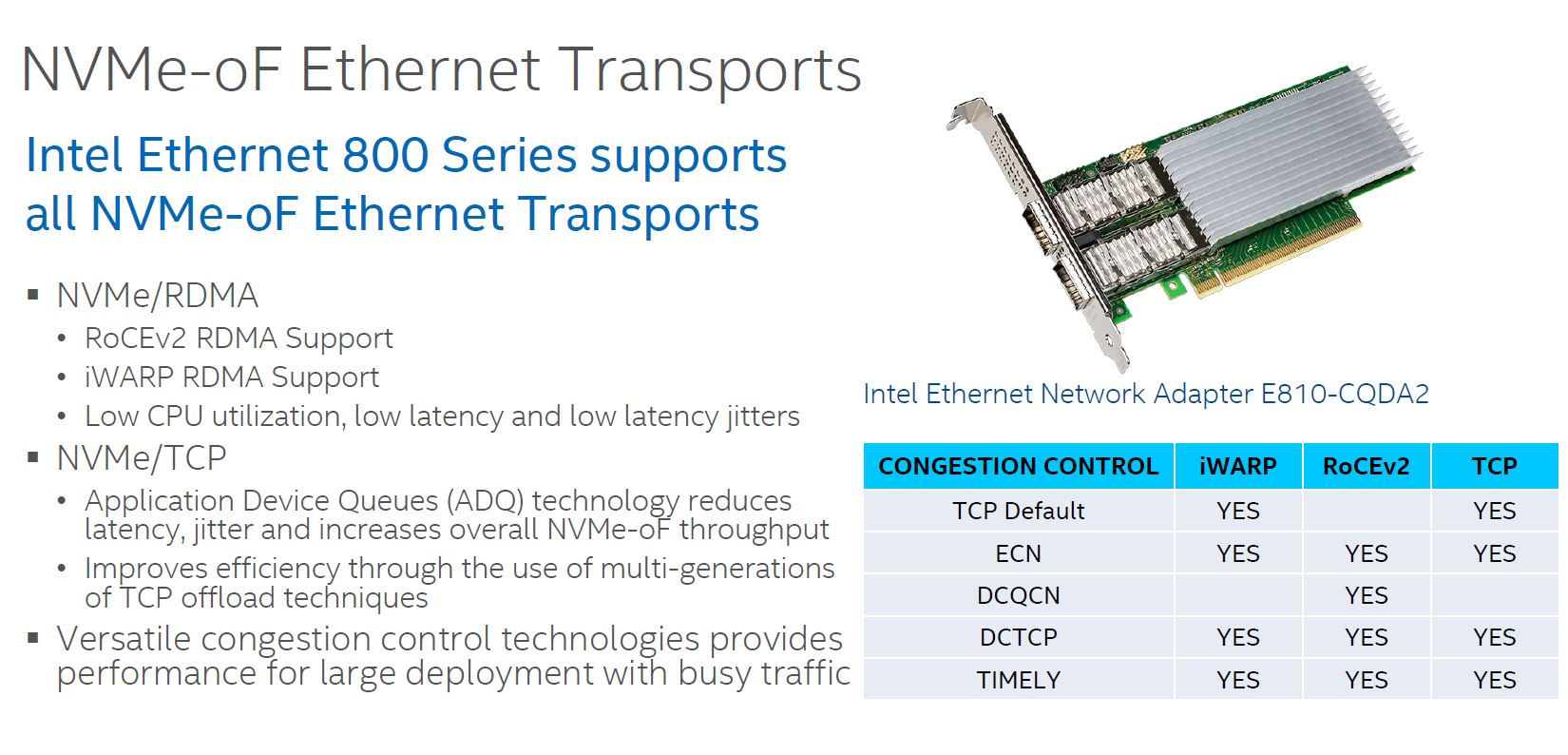

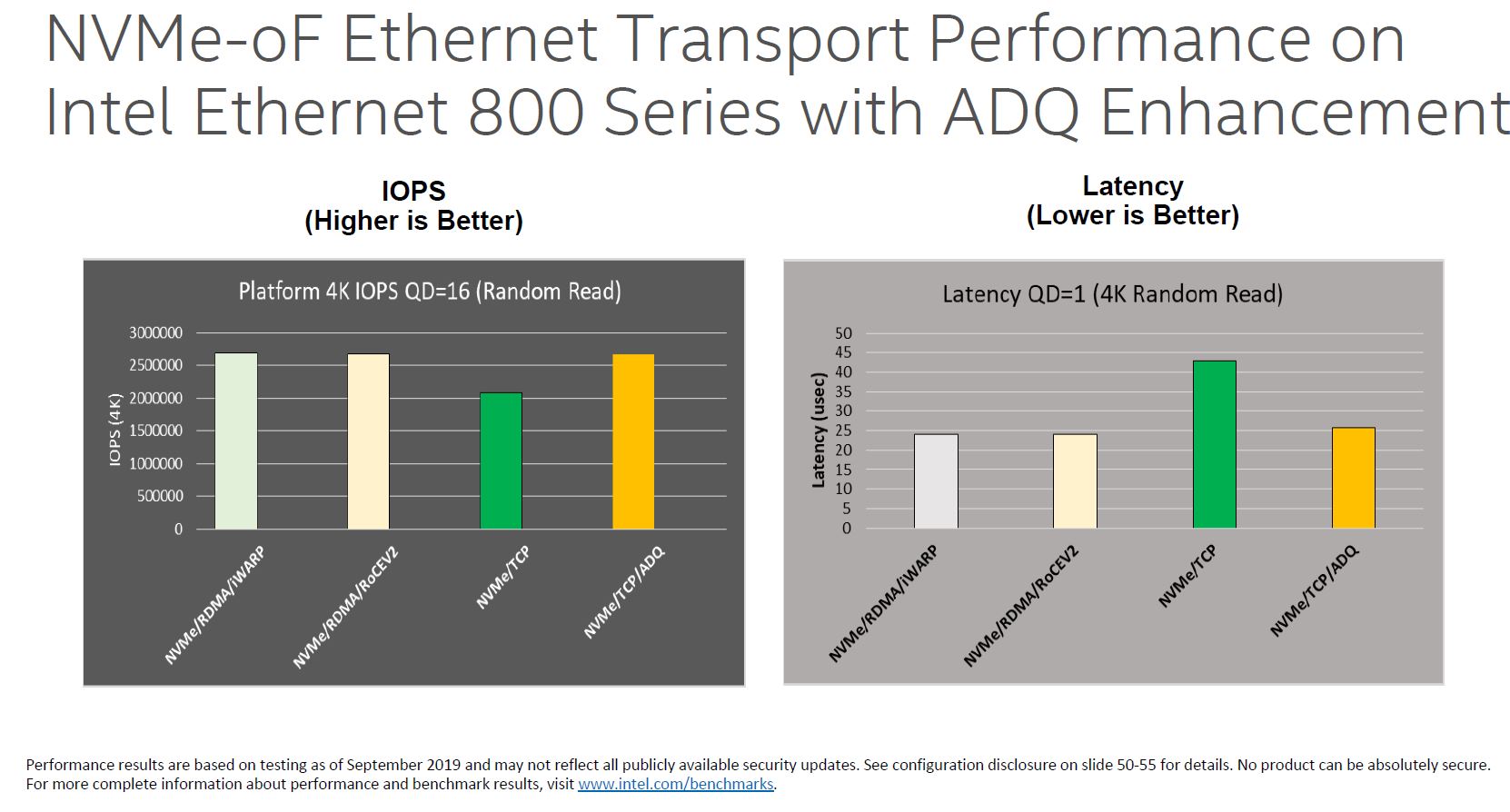

NVMeoF is another area where there is a huge upgrade. In the Intel Ethernet 700 series, Intel focused on iWARP for its NVMeoF efforts. At the same time, some of its competitors bet on RoCE. Today, RoCEv2 has become extremely popular. Intel is supporting both iWARP and RoCEv2 in the Ethernet 800 series.

The NVMeoF feature is important since that is a major application area for 100GbE NICs. A PCIe Gen3 x4 NVMe SSD is roughly equivalent to a 25GbE port's worth of bandwidth, so a dual 100GbE NIC provides about as much bandwidth as 8x NVMe SSDs in the Gen3 era. PCIe Gen4 NVMe SSDs like the Kioxia CD6 PCIe Gen4 Data Center SSD we reviewed are getting close to twice that speed, but many deployments still use Gen3 NVMe SSDs. By adding NVMeoF support, Intel Ethernet 800 series NICs such as this one become more useful.
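The drive-count arithmetic above can be sketched as follows. The per-drive figures are assumptions taken from the paragraph (one Gen3 x4 SSD is about one 25GbE port, and a Gen4 drive is treated as a full 2x even though the text says "getting close"); the 3,200 MB/s rating is a hypothetical example, not a measured drive.

```python
import math

# Assumption from the text: one PCIe Gen3 x4 NVMe SSD ~ one 25GbE port of bandwidth.
GEN3_SSD_GBPS = 25.0
# Assumption: treat a Gen4 drive as a full 2x (the text says "getting close" to 2x).
GEN4_SSD_GBPS = 2 * GEN3_SSD_GBPS


def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert a drive spec in decimal MB/s to Gbit/s."""
    return mb_per_s * 8 / 1000


def ssds_to_fill(nic_gbps: float, ssd_gbps: float) -> int:
    """Smallest number of SSDs whose combined throughput reaches the NIC line rate."""
    return math.ceil(nic_gbps / ssd_gbps)


if __name__ == "__main__":
    for name, nic in [("dual 100GbE", 200.0), ("dual 25GbE", 50.0)]:
        print(f"{name}: {ssds_to_fill(nic, GEN3_SSD_GBPS)} Gen3 SSDs, "
              f"{ssds_to_fill(nic, GEN4_SSD_GBPS)} Gen4 SSDs")
    # Hypothetical drive rated at 3,200 MB/s sequential read.
    print(f"3,200 MB/s is about {mbytes_to_gbits(3200):.1f} Gbps")
```

Under these assumptions, dual 100GbE lines up with 8 Gen3 or 4 Gen4 drives, while the dual 25GbE card reviewed here matches about 2 Gen3 drives.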

What is more, one can combine NVMe/TCP and ADQ to get closer to some of the iWARP and RoCEv2 performance figures.

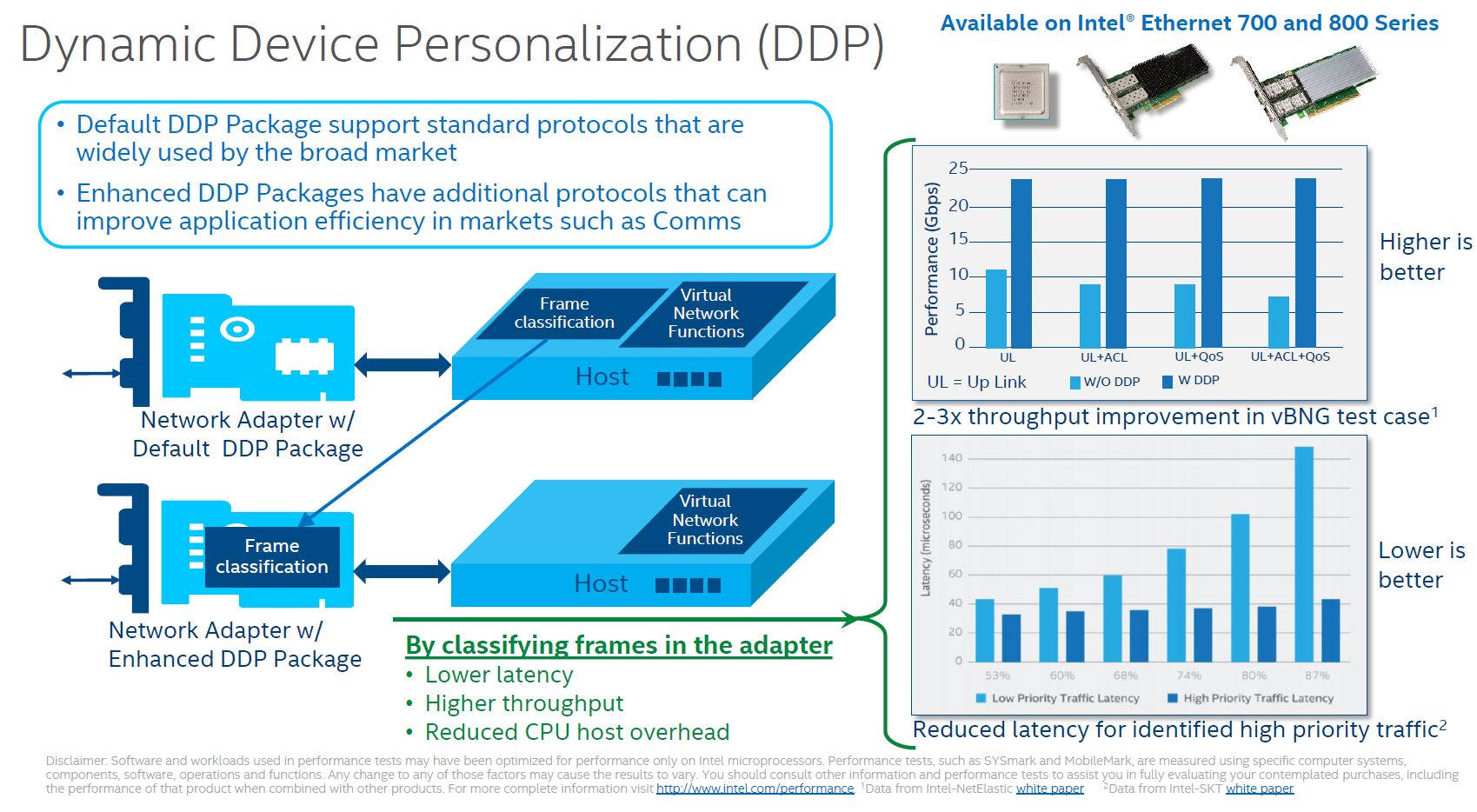

Dynamic Device Personalization, or DDP, is perhaps the other big feature of this NIC. Part of Intel’s vision for its foundational NIC series is keeping costs relatively low. As such, there is a limit to how large an ASIC can be while keeping costs reasonable. While Mellanox tends to add more acceleration/offload in each generation, Intel built some logic that is customizable.

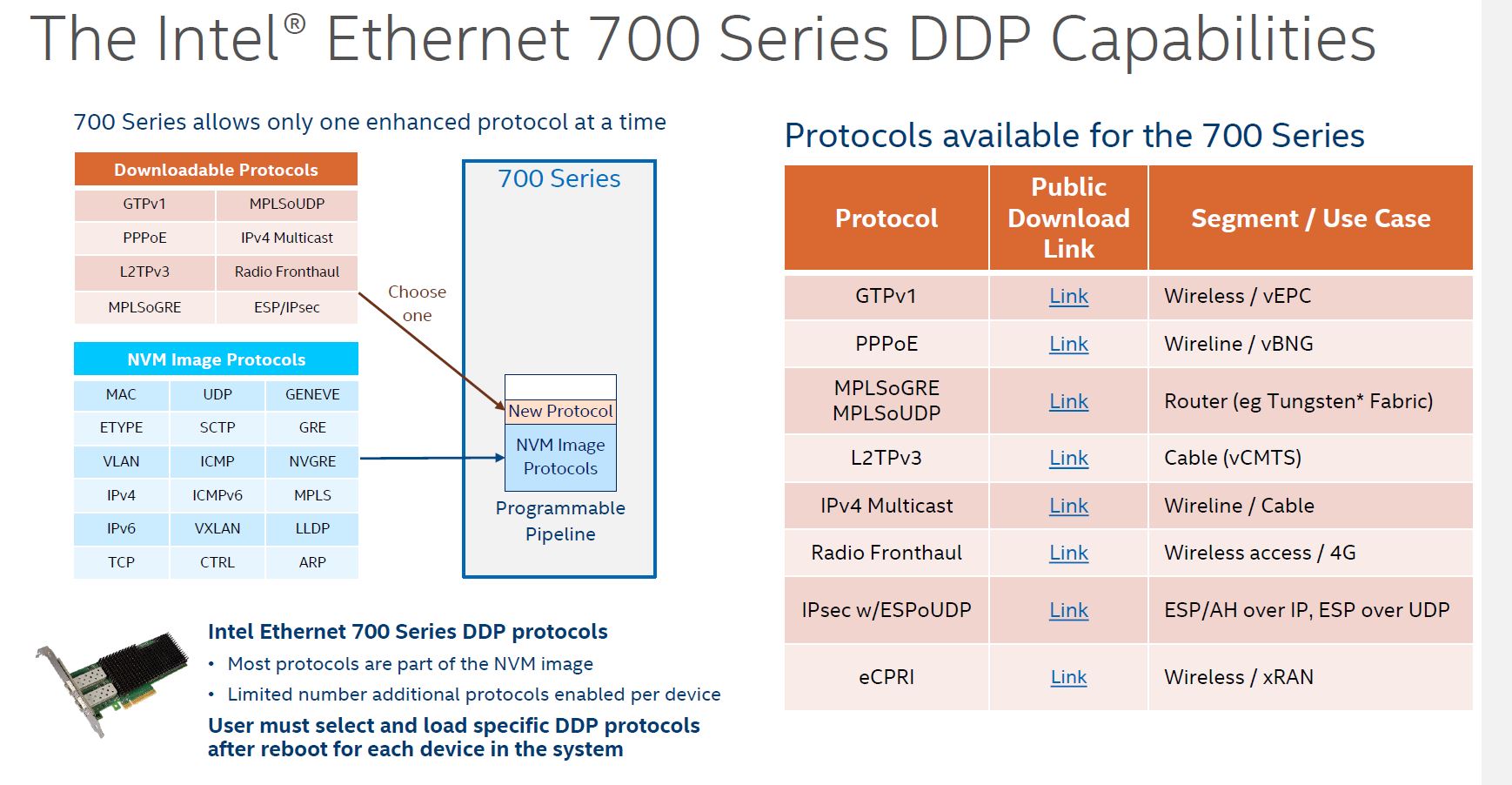

This is not a new technology. The Ethernet 700 series (Fortville) adapters had the feature; however, it was limited in scope. Not only were there fewer options, but customization was effectively limited to adding a single DDP protocol given the limited ASIC capacity.

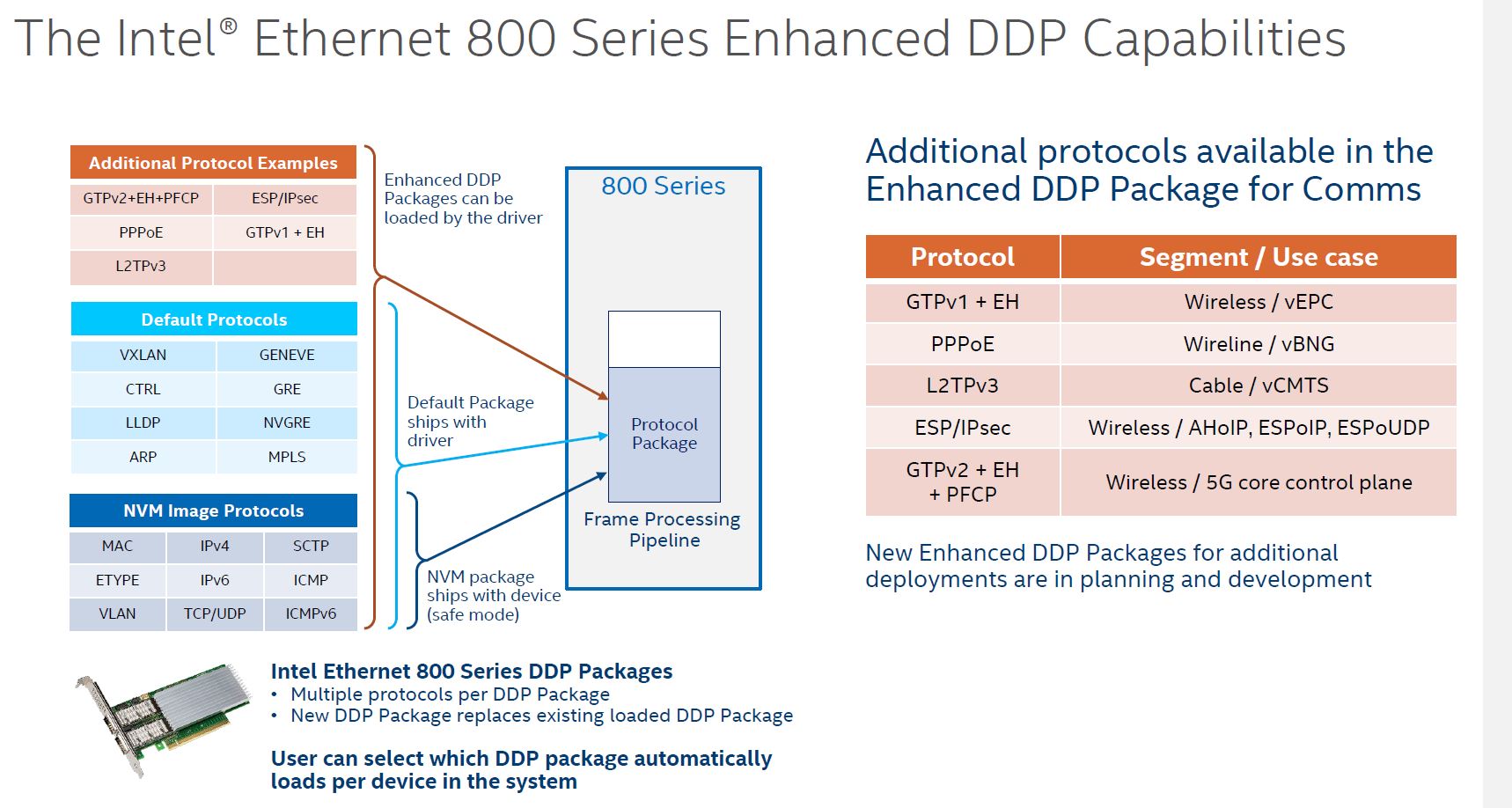

With the Intel Ethernet 800 series, there is more capacity to load custom protocol packages onto the NIC. Aside from the default package, the DDP package for communications (Comms) was one of the earliest packages, and it was freely available from early on.

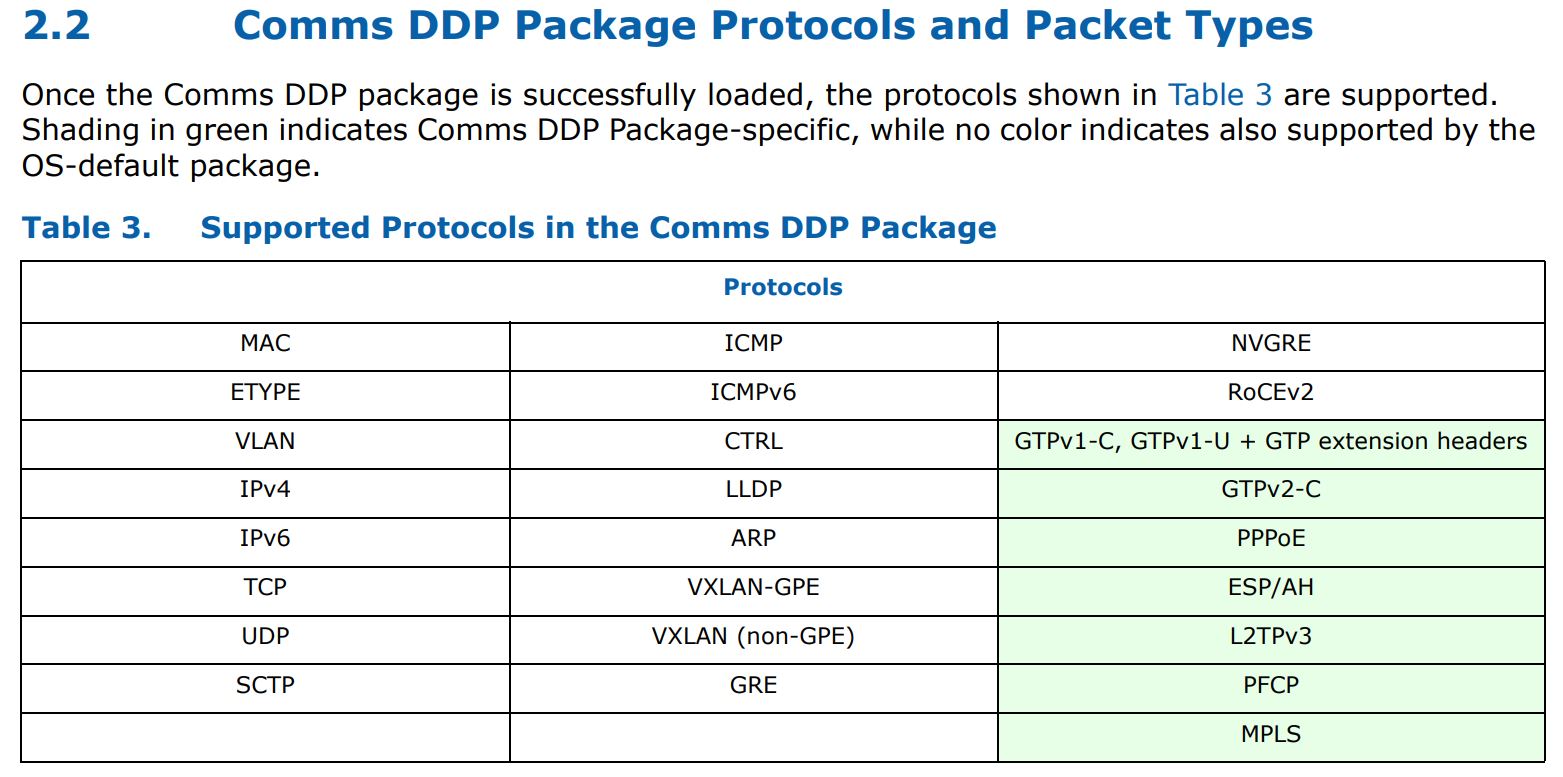

Here is a table of the protocols and packet types supported by default and those added by the Comms DDP package:

As you can see, the Comms package adds features such as MPLS processing. These DDP packages can also be customized, so one can use the set of protocols that matter and have them load at boot while trimming extraneous functionality.
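On Linux, "load at boot" means the in-tree `ice` driver picks up a DDP package from `/lib/firmware/intel/ice/ddp/` when it probes the adapter. As we understand the driver (behavior may differ between versions, so treat this as an assumption), it first looks for a per-adapter file named `ice-<16-hex-digit device serial number>.pkg` and then falls back to the generic `ice.pkg`. The sketch below models only that lookup order; the filesystem is simulated with a set of path strings, and the serial number is hypothetical.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Optional

# Firmware directory the Linux ice driver reads DDP packages from.
DDP_DIR = PurePosixPath("/lib/firmware/intel/ice/ddp")


def ddp_candidates(dsn: int) -> List[PurePosixPath]:
    """Lookup order modeled on the ice driver (our assumption): a per-adapter
    package named after the 64-bit Device Serial Number, then ice.pkg."""
    return [DDP_DIR / f"ice-{dsn:016x}.pkg", DDP_DIR / "ice.pkg"]


def pick_package(dsn: int, present: Iterable[str]) -> Optional[PurePosixPath]:
    """Return the first candidate found in `present` (a stand-in for the
    filesystem). None means no package was found; the real driver then runs
    in a reduced-functionality safe mode."""
    available = set(present)
    for path in ddp_candidates(dsn):
        if str(path) in available:
            return path
    return None


if __name__ == "__main__":
    dsn = 0x00A0C9FFFF123456  # hypothetical serial number
    per_nic = str(ddp_candidates(dsn)[0])
    default = str(DDP_DIR / "ice.pkg")
    print(pick_package(dsn, [default]))           # only the default package
    print(pick_package(dsn, [default, per_nic]))  # per-NIC package wins
```

Placing a Comms package under the per-adapter name (or pointing `ice.pkg` at it) is how one NIC can run a different protocol set from the others in the same host under this model.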

Performance

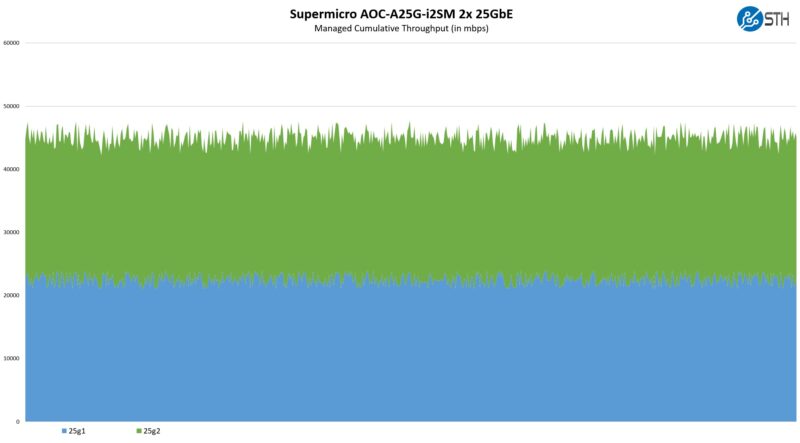

In our testing, we were able to hit 2x 25Gbps speeds in basic testing, as we would expect from a NIC like this.

Of course, this is Intel’s 25/100Gbps NIC generation running at dual 25GbE speeds for 50Gbps total, so we expected performance to be relatively good.

Power Consumption

In terms of power consumption, we generally saw figures in the 9-12W range. The rated maximum power consumption is around 12.8W. The advantage of this NIC versus others such as 100GbE NICs and DPUs is that its power consumption is much lower. Some servers, especially 1U servers, have trouble cooling OCP NIC 3.0 cards at the back of the chassis, after the air has already been pre-heated by drives, CPUs, and other components. As a result, a lower-TDP card is important.

Final Words

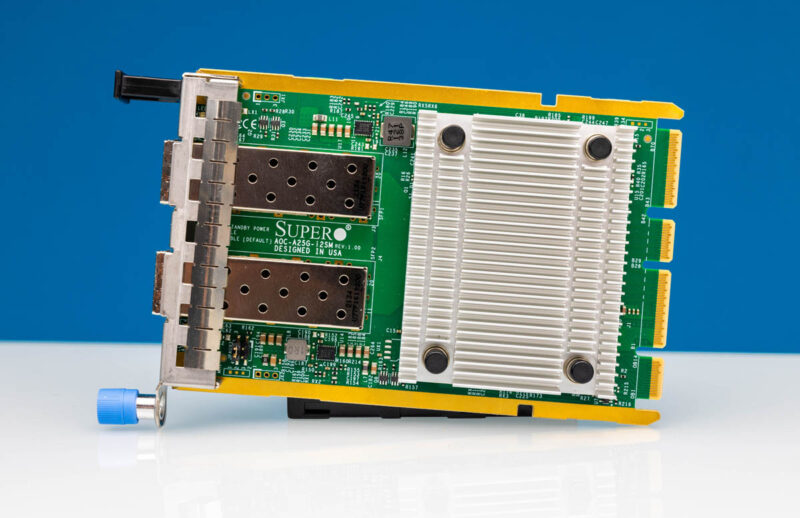

Overall, the Supermicro AOC-A25G-i2SM is about what we expected. Given this is an Intel Ethernet 800 series NIC, we generally see support in just about every modern OS. The ability to integrate into Supermicro’s server management via the NC-SI header makes it extremely interesting if you are looking for an Intel Ethernet 800 series NIC in your Supermicro server.

Of course, if you are purchasing a server from another vendor, this may not be the right NIC to use. On the other hand, if you are buying a Supermicro server, a lower-power 25GbE NIC that integrates with server management and has the right rear faceplate may be worthwhile.
