Today we are taking a look at one of the largest single systems STH has ever reviewed. The Supermicro 4028GR-TR GPU SuperServer houses up to 8x GPU/Xeon Phi cards, dual Xeon E5 processors and 24x 2.5” drive bays, all in a single 4U chassis. We recently reviewed another of Supermicro’s GPU/Xeon Phi SuperServers, the 1028GQ-TRT, which is equipped with 4x AMD FirePro S9150s in a compact 1U server case. See our Supermicro 1028GQ-TRT Quad GPU 1U SuperServer Review. The 4028GR-TR is essentially its larger sibling.

Supermicro SuperServer 4028GR-TR Key Features:

- Dual socket R3 (LGA 2011) supports Intel Xeon processor E5-2600 v3 family; QPI up to 9.6GT/s

- Up to 1.5TB ECC, up to DDR4 2133MHz; 24x DIMM slots (3 DIMMs/ channel design)

- Expansion slots:

- 8 PCIe 3.0 x16 (double-width) slots

- 2 PCIe 3.0 x8 (in x16) slots

- 1 PCIe 2.0 x4 (in x16) slot

- Intel i350 gigabit Ethernet (GbE) dual port networking

- 24x 2.5″ hot-swap drive bays

- 8x 92mm RPM hot-swap cooling fans

- 1600W redundant (2+2) power supplies; 80Plus Platinum Level (94%+)

As we saw with the Supermicro 1028GQ-TRT, the Supermicro 4028GR-TR offers a direct CPU connection to the GPUs through 8x PCIe 3.0 x16 slots to achieve low latency and reduce power consumption. This system supports up to 8x Nvidia Tesla cards, including K80 dual-GPU accelerators at up to 300 watts, AMD FirePro Server GPUs or Intel Xeon Phi cards. Installing GPUs is simple, and no complex cabling or repeaters are required.

Storage provisions include 24x front-mounted 2.5” hot-swap bays. Networking is provided by 2x 1GBase-T LAN ports and a remote management port. In addition, 2x PCIe 3.0 x8 (in x16) and 1x PCIe 2.0 x4 (in x16) slots are available for expansion cards at the back of the server.

Supermicro offers two versions of this SuperServer. The first is the 4028GR-TR, which comes equipped with dual Intel i350 gigabit Ethernet (GbE) network ports; this is the server we are reviewing today. The second is the 4028GR-TRT, which comes with dual 10GBase-T LAN using an Intel X540 controller. The difference between the two models is essentially the network controller.

Supermicro 4028GR-TR overview

The Supermicro SuperServer 4028GR-TR comes in a 4U package (Height: 7.0″ (178mm), Width: 17.2″ (437mm), Depth: 29″ (737mm)) and weighs in at a hefty 80 lbs (36.2 kg) before GPUs and drives are installed.

The front is divided up into 2x 2U sections with the bottom portion holding all 24x 2.5” Hot-swap drive bays.

The top 2U portion is left open for air-flow which feeds directly into the 8x 92mm RPM hot-swappable cooling fans found in the mid plane cooling bar.

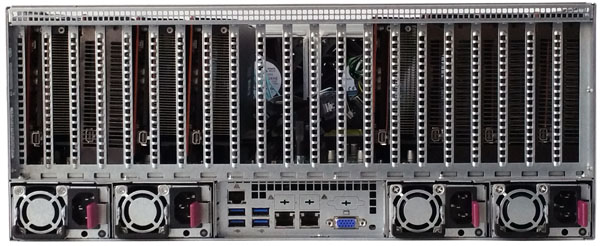

At the back of the server we can see the rear exhaust ports for all 8x GPUs and the middle expansion bay area.

Across the bottom we find 4x 1600W redundant (2+2) power supplies. The middle IO area has 4x USB 3.0 ports, 2x 1GBase-T Ethernet LAN ports, 1x remote management port and a VGA connector.

Here we have taken off the top lid to show the internal layout. One can see that all eight GPUs are housed at the rear of the chassis.

Each of the 24x 2.5” hot-swap drive bays comes equipped with a standard Supermicro 2.5” locking drive tray.

The mid-plane cooling bar houses 8x 92mm RPM hot-swappable cooling fans.

These are impressive cooling fans that can move a large amount of air through the server.

At the bottom rear of the server we have 4x 1600W redundant (2+2) power supplies; 80Plus Platinum Level (94%+).

As with other Supermicro servers, these simply unlock and slide out if replacement is needed.

The front left side of the server has the control panel which has typical functions found in Supermicro servers.

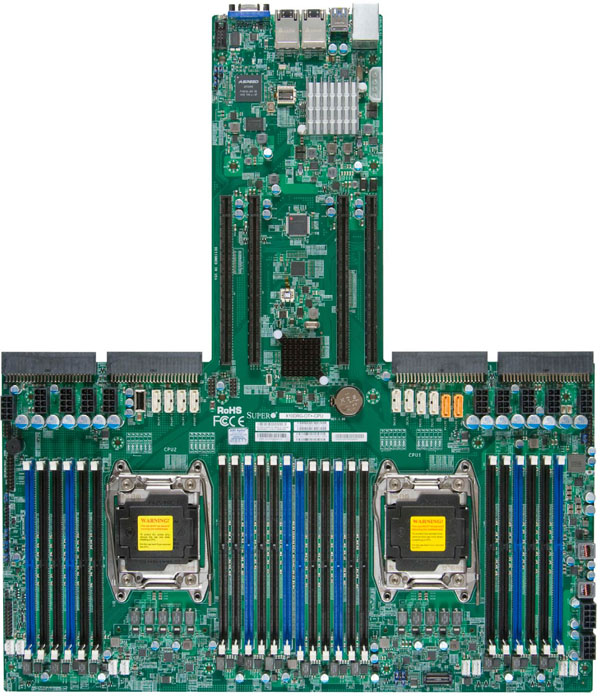

The heart of the server is the Supermicro X10DRG-OT-CPU motherboard which ties all the PCIe slots and storage together. This is a custom PCB specifically made for this chassis.

The four connectors, which are located on either side of the main PCIe expansion area, connect the system to the four power supplies. This helps to reduce cable clutter and provides clean connections to the PSUs. The motherboard also provides the necessary power connections for all eight GPUs, which are mounted on the PCIe daughter board that plugs into the four PCIe slots.

Before we started testing the Supermicro 4028GR-TR, CPUs and RAM needed to be installed.

The CPUs are located directly behind the main cooling assembly, which provides optimal cooling for these components. Here we see all 24x DIMM slots, which provide a capacity of up to 1.5TB of ECC DDR4 at up to 2133MHz. We also find the dual Socket R3 (LGA 2011) sockets, each of which supports the Intel Xeon processor E5-2600 v3 family.

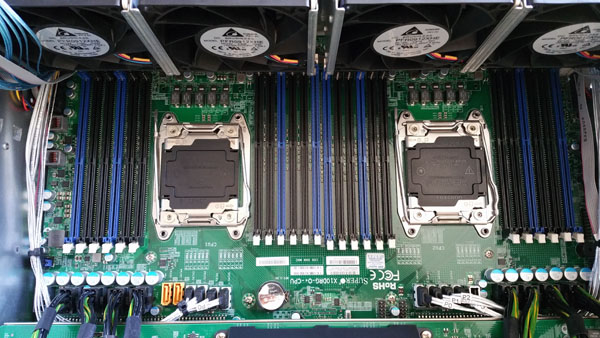

Here we can see this area after CPUs and RAM have been installed.

We found it much easier to install the CPUs and RAM with the cooling fans removed and before any GPUs were installed. This gave us clear access to all the RAM slots and CPU sockets.

Power to the GPUs is provided by power connections on the motherboard and custom-length cables.

Just behind the CPU/RAM area on the left side of the server we find power connections for 4x GPUs and also 4x SATA cables which route to the front backplane.

On the right side we find the other 4x power connectors and an additional 4x SATA cables that connect to the front backplane. In addition, we find 2x orange SATA ports, which are SATA SuperDOM connectors from the Intel PCH. These orange SATA ports can be used as standard SATA ports or can power SATADOMs without requiring additional cables. There is also an additional power connector to power expansion cards if needed.

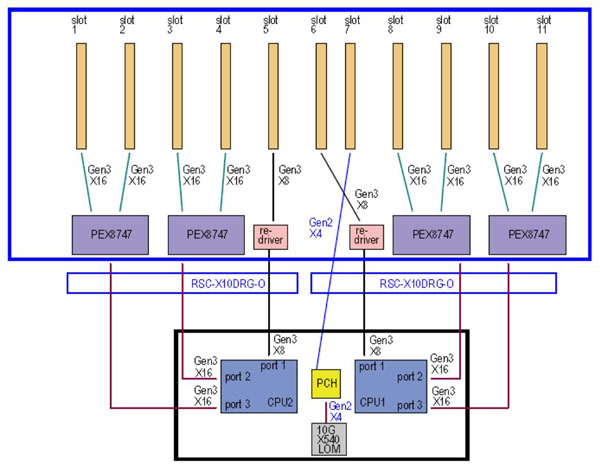

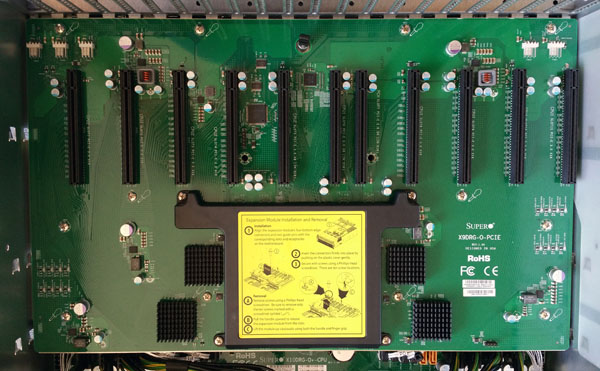

Here we see the block diagram for the PCIe slots.

A series of Avago Technologies PEX8747 chips expand the PCIe slots from 4 found on the Supermicro X10DRG-O(T) motherboard to 8 on the daughter board. Additional circuits provide the remaining 2x x8 PCIe slots and 1x x4 PCIe slot.
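To put the switch arrangement in perspective, here is a rough sketch of the theoretical host bandwidth available to each GPU. It assumes (this is not confirmed by the block diagram text) that each PEX8747 fans one x16 uplink out to two x16 GPU slots, and it uses the standard PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding. Real-world throughput will be lower.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
GT_PER_S = 8.0
ENCODING = 128 / 130


def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe 3.0 link."""
    return GT_PER_S * ENCODING * lanes / 8  # divide by 8: bits -> bytes


# Assumption: each switch shares one x16 uplink between two x16 GPU slots.
GPUS_PER_UPLINK = 2

uplink = pcie3_bandwidth_gbs(16)
per_gpu_contended = uplink / GPUS_PER_UPLINK

print(f"x16 link:                    {uplink:.2f} GB/s")
print(f"per GPU, both GPUs to host:  {per_gpu_contended:.2f} GB/s")
```

Under that assumption, a GPU only sees the full x16 rate to the host when its neighbor on the same switch is idle. Traffic between the two GPUs behind one switch does not need to cross the uplink at all.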

Here we see the PCIe daughter board before GPUs have been installed.

After we have finished installing the CPUs, RAM and GPUs, we take a final look at the system all set up and ready to begin testing.

You can see blue cables coming from both sides of the server. These connect to the front drive backplane and allow the drives to be connected to optional rear-mounted storage expansion cards. These cards are not required but provide higher-performing storage options; alternatively, the expansion slots can be used for high-performance network cards.

A top-down view shows the complete system layout. We should also note that complete systems include two air shrouds that fit into this system; we did not have these. We also noted no ill effects when running the system without them.

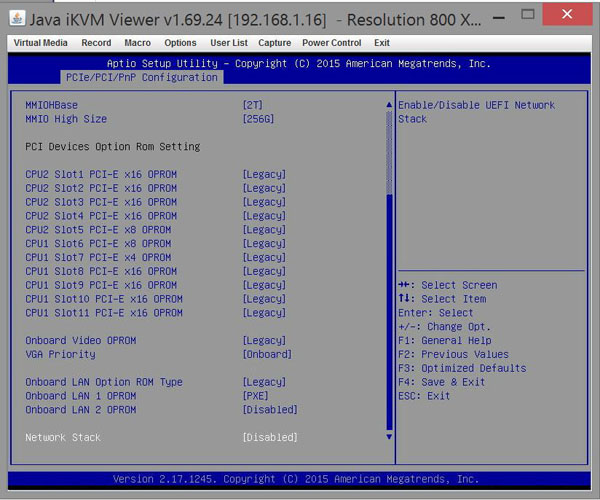

BIOS

With so many expansion slots, having the ability to adjust BIOS options can be critical to troubleshoot compatibility issues with such a large and complex system.

Looking at the BIOS we can see how it reports the PCIe slots. Each of the 8x slots used for the GPUs is PCIe x16, and the 3x expansion slots consist of 2x x8 slots and 1x x4 slot.

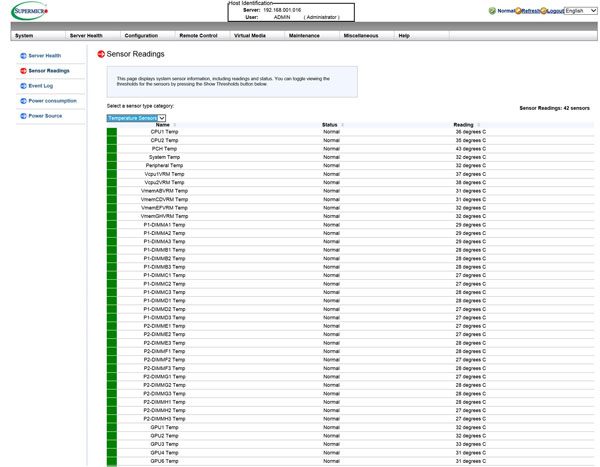

Remote Management

For remote management, simply enter the server’s IP address into your browser and log in. The Supermicro remote functionality can additionally be accessed via IPMIView or tools like ipmitool.

The login information for the 4028GR-TR:

- Username: ADMIN

- Password: ADMIN

We ran our tests completely at STH’s new colocation facility using this remote management.

Remote management also reports the temperature of each CPU and GPU, along with all the other information typical of Supermicro remote management solutions.
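For readers who prefer scripting over the web interface, the following is a minimal sketch of pulling temperature sensors through ipmitool from Python. The sensor names and readings in `SAMPLE` are made up for illustration and are not taken from this system; the helper function names are our own, and the exact sensor list depends on the BMC firmware.

```python
import subprocess
from typing import Dict, List


def build_sdr_command(host: str, user: str, password: str) -> List[str]:
    """Build an ipmitool call that lists temperature sensors over the LAN."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password,
            "sdr", "type", "Temperature"]


def parse_sdr_temperatures(output: str) -> Dict[str, int]:
    """Parse 'name | id | status | entity | N degrees C' lines into {name: N}."""
    temps = {}
    for line in output.splitlines():
        fields = [f.strip() for f in line.split("|")]
        # Skip sensors that report "No Reading" or any other non-numeric state.
        if len(fields) == 5 and fields[4].endswith("degrees C"):
            temps[fields[0]] = int(fields[4].split()[0])
    return temps


def read_temperatures(host: str, user: str, password: str) -> Dict[str, int]:
    """Query a live BMC; requires ipmitool to be installed and reachable."""
    result = subprocess.run(build_sdr_command(host, user, password),
                            capture_output=True, text=True, check=True)
    return parse_sdr_temperatures(result.stdout)


# Illustrative output only: hypothetical sensor names and values.
SAMPLE = """\
CPU1 Temp        | 01h | ok  |  3.1 | 45 degrees C
CPU2 Temp        | 02h | ok  |  3.2 | 47 degrees C
GPU1 Temp        | 51h | ns  | 11.1 | No Reading
"""

print(parse_sdr_temperatures(SAMPLE))
```

Against a live system, `read_temperatures("<BMC IP>", "ADMIN", "ADMIN")` would return the same kind of dictionary, which is easy to log at intervals during a stress test.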

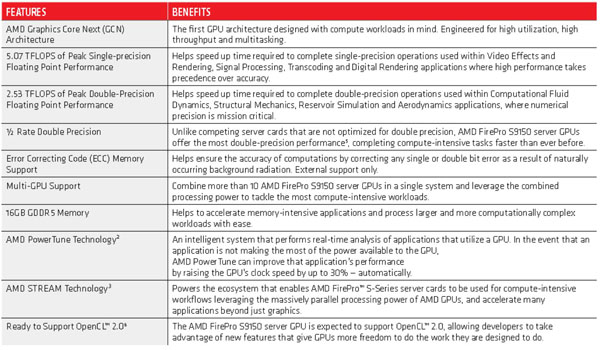

AMD FirePro S9150 Server GPUs

As you have seen in previous pictures, our test server came equipped with 8x AMD FirePro S9150 Server GPUs. Each is a double-width, passively cooled design, which is perfect for this server.

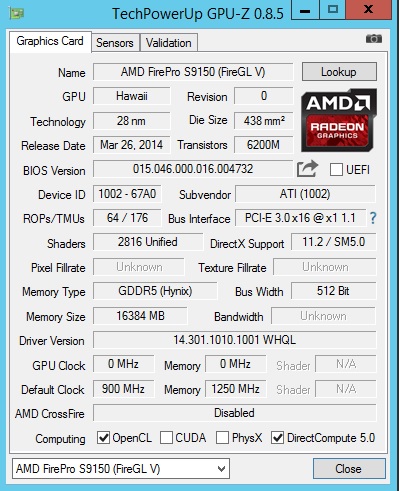

We showed the stats for these cards in the 1028GQ-TRT review; let’s include their specifications here as well.

Key AMD FirePro S9150 Cooling/Power/Form Factor Specs:

- Max Power: 235W

- Bus Interface: PCIe 3.0 x16

- Slots: Two

- Form Factor: Full height/ Full length

- Cooling: Passive heat sink

The AMD FirePro S9150 is an interesting GPU. It offers excellent performance per watt with its 235 watts of power consumption and is the first GPU to break the 2.0 TFLOPS barrier for double-precision computations. The S9150 also offers support for OpenGL 4.4, OpenCL 2.0, OpenACC 2.0, OpenMP 4.0 and DirectX 12 through AMD’s software ecosystem.

This is how GPU-Z reports these cards.

Test Configuration

The Supermicro 4028GR-TR does not include a DVD drive for installing software, so we used a USB DVD drive to install Windows Server 2012 R2 and ran our Ubuntu disk directly off of the USB drive. We were also able to use remote KVM-over-IP to install relevant software while in the datacenter. We had no issues getting an OS installed on the system, and drivers came directly from Supermicro’s website.

- Processors: 2x Intel Xeon E5-2697 V3 (14 cores each)

- Memory: 24x 16GB Crucial DDR4 ECC RDIMMs (384GB Total)

- Storage: 1x SanDisk X210 512GB SSD

- GPUs: 8x AMD FirePro S9150 Server GPUs

- Operating Systems: Ubuntu 14.04 LTS and Windows Server 2012 R2

Recently STH has started setting up our big test machines in a new colocation facility. With the growing need to review larger and larger systems, we quickly surpassed the power limits at our previous lab. We ran on 120V 20-amp mains in the old lab, and the Supermicro 4028GR-TR can quickly surpass that. The new racks in the data center can run up to 208V 30-amp loads, which is perfect for these multi-GPU servers. Beyond power, these servers generate a tremendous amount of noise, so moving them out of the lab helps there as well. We now operate these machines fully through remote access and everything is working very well so far. Once the server was installed in the new colocation, we used remote management to install the operating system and operate the server.
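A quick back-of-the-envelope check shows why the old lab circuit was not enough. The sketch below assumes the common practice of loading a circuit to only 80% of its rating for continuous loads (that derating factor is our assumption, not something stated by the facility) and uses the ~2,613 watt peak reported in the power tests later in this review.

```python
def circuit_watts(volts: float, amps: float, derate: float = 0.8) -> float:
    """Usable continuous power for a circuit, with an assumed 80% derating."""
    return volts * amps * derate


PEAK_SERVER_WATTS = 2613  # peak draw measured under the AIDA64 stress test

old_lab = circuit_watts(120, 20)  # 120V x 20A x 0.8
colo = circuit_watts(208, 30)     # 208V x 30A x 0.8

for name, budget in (("120V/20A lab", old_lab), ("208V/30A colo", colo)):
    verdict = "fits" if PEAK_SERVER_WATTS <= budget else "exceeds budget"
    print(f"{name}: {budget:.0f} W usable, server peak {verdict}")
```

Even without derating, the 120V 20-amp circuit tops out at 2,400 watts, which is below the peak draw of this one server.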

Here is the first server to be installed at the new location. There are already a number of additional machines along with 10Gb/ 40Gb Fiber and copper switches installed.

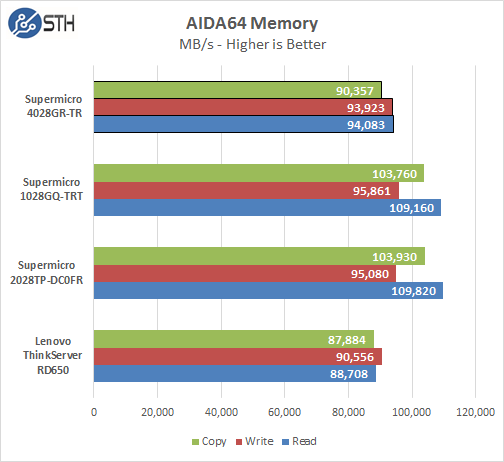

AIDA64 Memory Test

AIDA64 is our traditional stress test mechanism for memory subsystems in Windows.

Memory latency came in at ~86.9ns, while our average systems using 24x 16GB DIMMs come in at about ~78ns.

With 24x 16GB DDR4 memory modules installed we saw memory speeds of 1600MHz. With large memory loadouts like this server supports, memory speeds are reduced when all 24x memory slots are populated. Note that if this were a DDR3-based system we would see speeds as low as 800MHz, so there is a clear advantage here for new systems using DDR4 memory.
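To see what the speed reduction costs on paper, here is a small sketch of theoretical peak memory bandwidth. It assumes the reported "1600MHz" corresponds to 1600 MT/s on a standard 64-bit (8-byte) DDR4 channel, and it derives four channels per socket from the 24-slot, 3-DIMMs-per-channel, dual-socket layout. These are theoretical ceilings, not AIDA64 results.

```python
def peak_bandwidth_gbs(mt_per_s: int, channels: int, sockets: int,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s across all sockets."""
    return mt_per_s * 1e6 * bus_bytes * channels * sockets / 1e9


# 24 DIMM slots / 3 DIMMs per channel / 2 sockets = 4 channels per socket
CHANNELS_PER_SOCKET = 24 // 3 // 2

as_tested = peak_bandwidth_gbs(1600, CHANNELS_PER_SOCKET, sockets=2)
rated = peak_bandwidth_gbs(2133, CHANNELS_PER_SOCKET, sockets=2)

print(f"All 24 slots populated (1600 MT/s): {as_tested:.1f} GB/s")
print(f"Maximum supported speed (2133 MT/s): {rated:.1f} GB/s")
print(f"Fraction of maximum retained: {as_tested / rated:.0%}")
```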

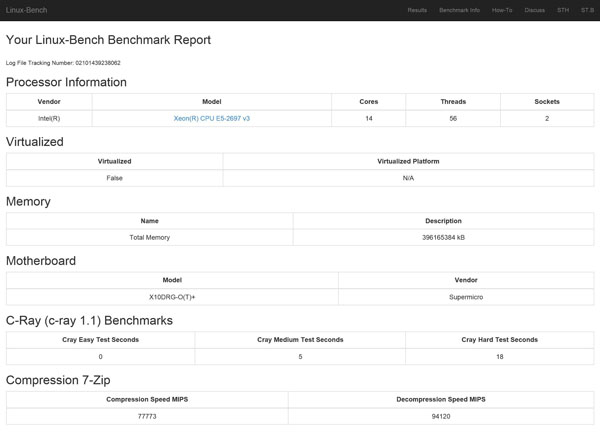

Linux-Bench Test

Linux-Bench is our standard Linux benchmarking suite. It is highly scripted and very simple to run. It is available to anyone who wants to compare results with their own systems and with reviews from other sites. See Linux-Bench.

Example full test results for our Linux-Bench run can be found here: Supermicro 4028GR-TR Linux-Bench Results

The E5-2697 V3 processors are rated at 145 watts TDP and are equipped with 14 cores at 2.6GHz/3.6GHz Turbo speed. For higher memory requirements the system can support up to 1.5TB of ECC LRDIMM or 768GB of ECC RDIMM.

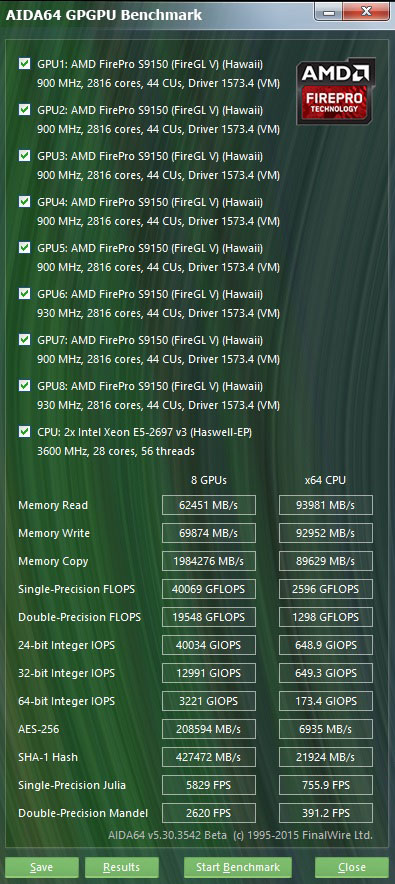

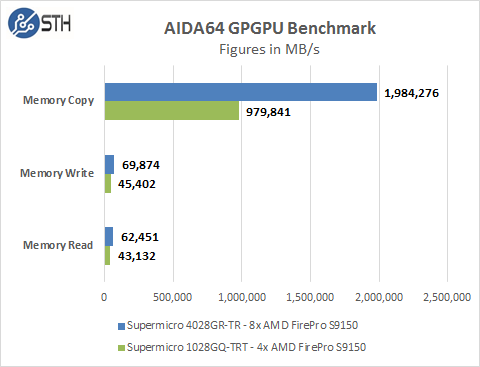

AIDA64 GPGPU Test

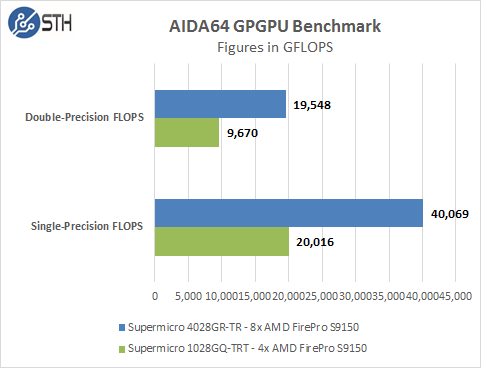

For our GPU testing we use AIDA64 Engineer to run the provided GPGPU tests, which can run on all eight GPUs and the CPUs.

The first test we ran was the AIDA64 GPGPU benchmark. This test sees every GPU and CPU present in the system and goes through a lengthy benchmark run. Each of the S9150 GPUs is rated for 5.07 TFLOPS of single-precision performance, and we can see our cards perform right at that specification. Our combined single-precision performance came in at 40,069 GFLOPS, or about 40 TFLOPS!

This is double the performance we saw on the Supermicro 1028GQ-TRT with 4x AMD FirePro S9150s installed, and it shows that these systems scale up very well.
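The scaling claim is easy to check with simple arithmetic. This short sketch compares the measured combined result against eight times the rated per-card figure, using only numbers quoted above.

```python
RATED_TFLOPS_PER_GPU = 5.07  # rated single-precision figure per S9150
GPU_COUNT = 8
MEASURED_GFLOPS = 40_069     # combined AIDA64 single-precision result

rated_total_gflops = RATED_TFLOPS_PER_GPU * 1000 * GPU_COUNT
efficiency = MEASURED_GFLOPS / rated_total_gflops

print(f"Rated total:    {rated_total_gflops:,.0f} GFLOPS")
print(f"Measured total: {MEASURED_GFLOPS:,} GFLOPS")
print(f"Achieved:       {efficiency:.1%} of rated")
```

In other words, the eight cards together deliver very nearly the sum of their individual ratings in this compute-bound test.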

Memory Read: Measures the bandwidth between the GPU device and the CPU, effectively measuring the performance the GPU could copy data from its own device memory into the system memory. It is also called Device-to-Host Bandwidth. (The CPU benchmark measures the classic memory read bandwidth, the performance the CPU could read data from the system memory.)

Memory Write: Measures the bandwidth between the CPU and the GPU device, effectively measuring the performance the GPU could copy data from the system memory into its own device memory. It is also called Host-to-Device Bandwidth. (The CPU benchmark measures the classic memory write bandwidth, the performance the CPU could write data into the system memory.)

Memory Copy: Measures the performance of the GPU’s own device memory, effectively measuring the performance the GPU could copy data from its own device memory to another place in the same device memory. It is also called Device-to-Device Bandwidth. (The CPU benchmark measures the classic memory copy bandwidth, the performance the CPU could move data in the system memory from one place to another.)

Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data. Not all GPUs support double-precision floating-point operations. For example, all current Intel desktop and mobile graphics devices only support single-precision floating-point operations.

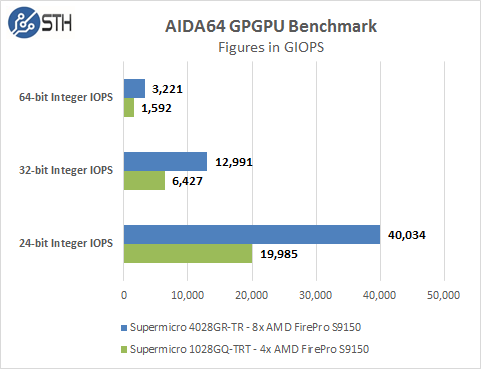

24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This special data type is defined in OpenCL on the basis that many GPUs are capable of executing int24 operations via their floating-point units, effectively increasing the integer performance by a factor of 3 to 5, as compared to using 32-bit integer operations.

32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead they emulate the 64-bit integer operations via existing 32-bit integer execution units. In such cases 64-bit integer performance could be very low.
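The MAD-based FLOPS definitions above also explain where a card's rated numbers come from: each multiply-add counts as two floating-point operations per stream processor per clock. The sketch below reproduces the S9150's rated figures under that model. The stream processor count, clock speed and half-rate double precision are assumptions taken from AMD's published specifications for the card, not values measured in this review.

```python
STREAM_PROCESSORS = 2816  # assumption: AMD's published S9150 spec
CLOCK_GHZ = 0.9           # assumption: 900 MHz engine clock
OPS_PER_MAD = 2           # one multiply plus one add per cycle
DP_RATE = 0.5             # assumption: double precision at half the SP rate

# stream processors x ops per clock x GHz gives GFLOPS; divide by 1000 for TFLOPS
sp_tflops = STREAM_PROCESSORS * OPS_PER_MAD * CLOCK_GHZ / 1000
dp_tflops = sp_tflops * DP_RATE

print(f"Single precision: {sp_tflops:.2f} TFLOPS")
print(f"Double precision: {dp_tflops:.2f} TFLOPS")
```

The single-precision result lines up with the 5.07 TFLOPS rating quoted earlier, and the double-precision result is consistent with the card clearing the 2.0 TFLOPS mark.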

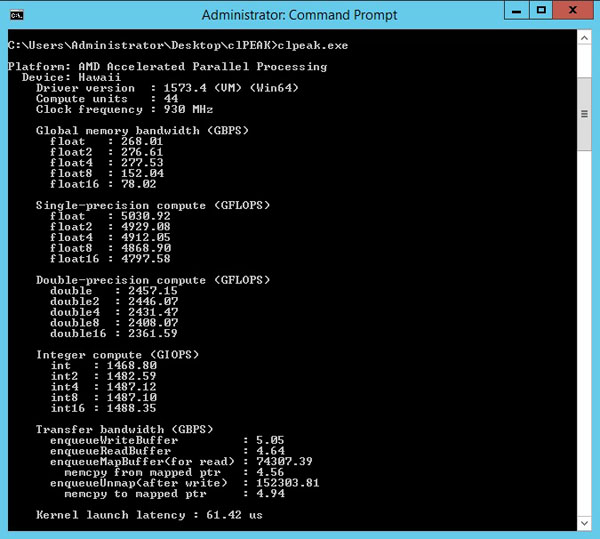

clPEAK Benchmark

We also ran the clPeak benchmark on the system and saw numbers right where we expected them to be.

This test runs on only one GPU at a time, so the screen shown was repeated for each of the eight GPUs.

Our clPeak test results were exactly the same as on the Supermicro 1028GQ-TRT. This is as expected because the test depends only on the AMD FirePro S9150s.

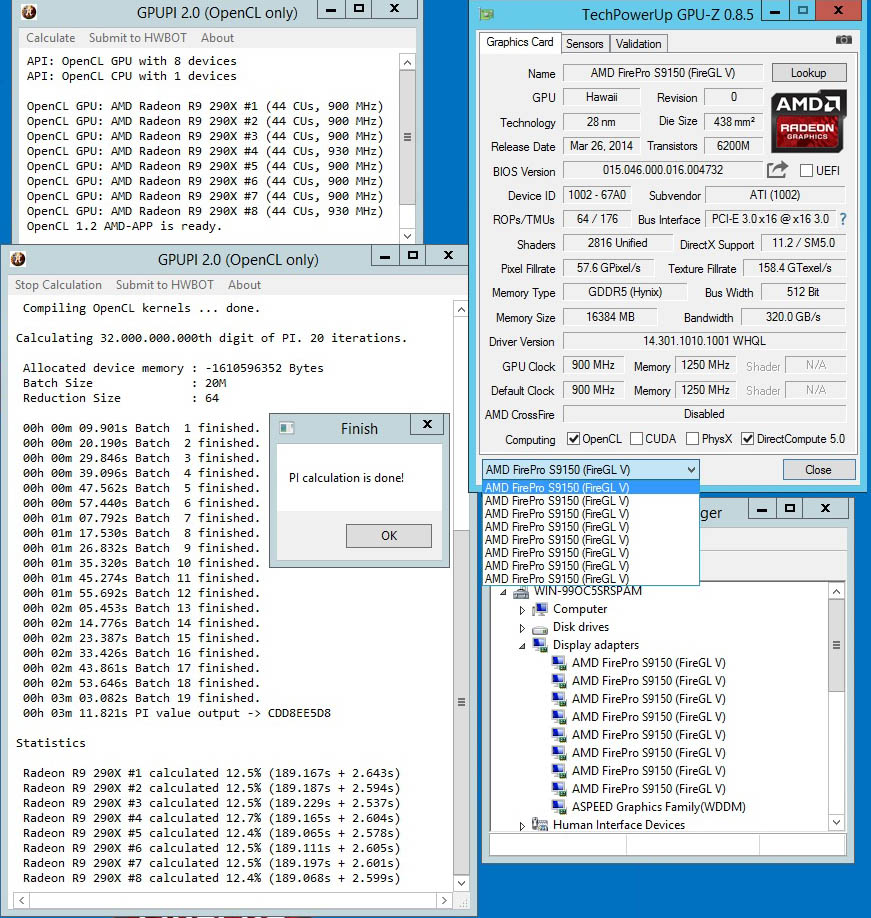

GPUPI 2.0

GPUPI is the first General Purpose GPU benchmark officially listed on HWBOT and also the first that can simultaneously use different video cards; only the same driver is required. More information can be found here: GPUPI 2.0

This was the last benchmark we ran on this system, and we received a result that would rank as a world record, with a time of 3 minutes, 11.821 seconds to complete. We think this time could be lowered even further with more time spent tuning the system, but it is already quite an amazing result.

Power Tests

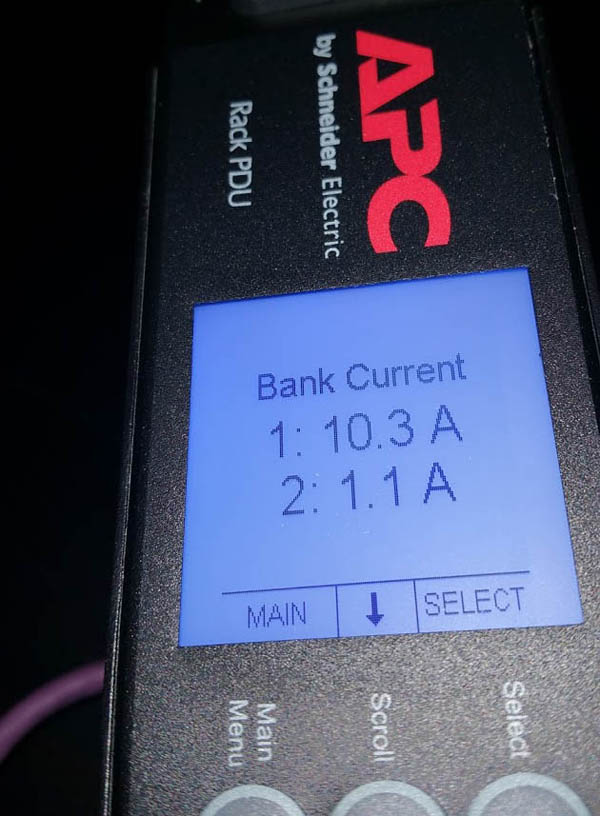

Normally in our lab we use a Yokogawa WT310 for power testing, and we did start off using it with the 4028GR-TR for the power-on tests. After the Supermicro 4028GR-TR was moved to the new server location, we started using this APC rack PDU for our power monitoring.

This Schneider Electric APC AP8441 PDU is commonly used in colocation environments to provide power usage data for billing. It has an accuracy of 1% and, perhaps most interestingly, can provide per-outlet power metering.

Fully Loaded Stress Tests Power Use

At this point we have moved the Supermicro 4028GR-TR to the new data center for final testing.

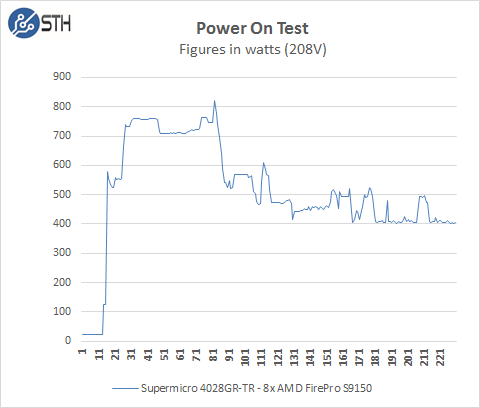

We start with the power needs of the system when it is turned off. The 4028GR-TR pulls ~24 watts to keep remote management on. When the power button is pressed, the boot process starts and the system initializes its various components before finally starting the OS. Our test system jumps to ~750 watts very quickly, peaks at ~820 watts and finally settles down to ~400 watts at idle. Depending on what the system is doing and on BIOS settings, this can increase. For our tests we use default BIOS settings and make no changes to them.

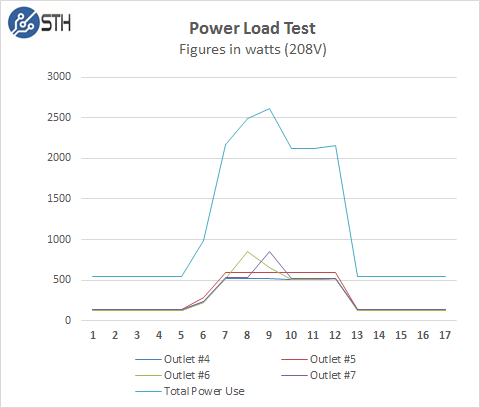

Next we use a Schneider Electric APC AP8441 PDU to remotely monitor power use. It does not have the granularity that the Yokogawa WT310 provides but it gives us good data. This graph comes directly from the APC and shows that we have the 4028GR-TR connected through 4 outlets numbered 4, 5, 6, 7. The graph shows what each outlet’s power use is and we summed them up to arrive at total power consumption.

For our tests we use the AIDA64 stress test, which allows us to stress all aspects of the system. We start off with our system at idle, which is pulling ~500 watts, and press the stress test start button. You can see power use quickly jumps to over 2,000 watts and continues to climb until we cap out at ~2,613 watts.
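It is worth relating that peak to the 2+2 redundant power supply configuration. The sketch below estimates how heavily each 1600W supply is loaded with all four running and with only two remaining. It compares wall power against the PSU output rating, so it slightly overstates the real load because wall draw includes conversion losses; treat it as a rough upper bound.

```python
PSU_RATED_WATTS = 1600
PEAK_WATTS = 2613  # peak wall draw from the PDU during the stress test


def load_fraction(total_watts: float, active_psus: int) -> float:
    """Share of rated capacity used when load is split evenly across PSUs."""
    return total_watts / (active_psus * PSU_RATED_WATTS)


all_four = load_fraction(PEAK_WATTS, 4)  # normal operation
two_left = load_fraction(PEAK_WATTS, 2)  # after losing one redundant pair

print(f"4 PSUs active: {all_four:.1%} of rated capacity each")
print(f"2 PSUs active: {two_left:.1%} of rated capacity each")
```

With this GPU loadout, two supplies can still carry the measured peak, which is what the 2+2 arrangement is meant to guarantee.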

Thermal Testing

To measure temperatures we used HWiNFO’s sensor monitoring functions and saved that data to a file while we ran our stress tests.

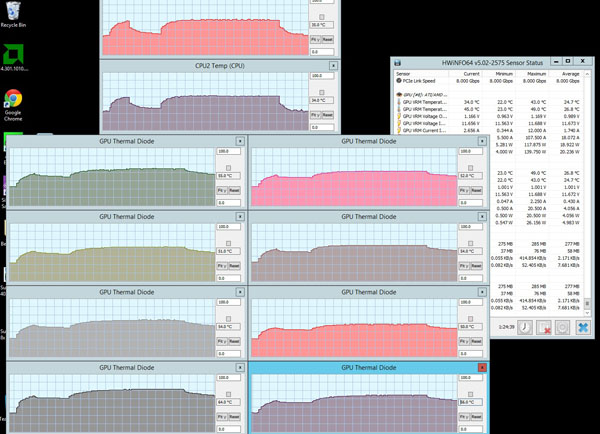

Here we see what our HWiNFO sensor layout looks like while we test.

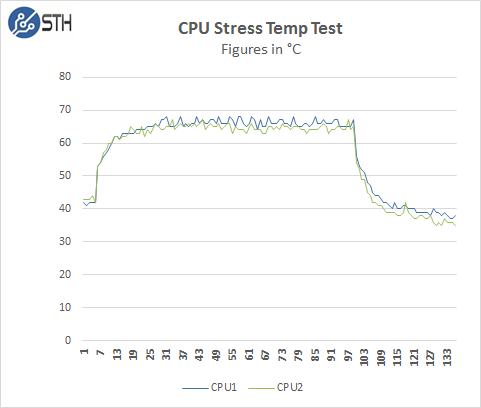

Now let us take a look at how heat from the stress test load was handled by the cooling system in the 4028GR-TR. This shows that the server’s 8x 92mm RPM hot-swap cooling fans are very powerful and cool the CPUs very well.

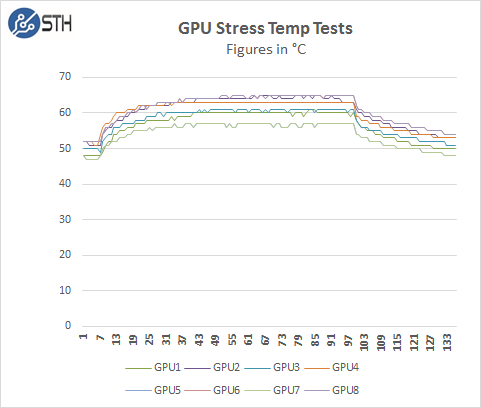

Here we see the temperatures of the GPUs while the stress test is running. Just like with the CPUs, the server’s 8x 92mm RPM hot-swap cooling fans do a really nice job of keeping all 8x AMD FirePro S9150s nice and cool under full load.

Conclusion

With the new colocation we now have the ability to test large systems that draw much more power than we had available in our previous lab. We started off with the massive Supermicro 4028GR-TR, which is equipped with 2x Xeon E5-2600 V3 processors, up to 1.5TB of DDR4 and an incredible loadout of 8x GPUs.

Housing a total of 8x GPUs in the 4028GR-TR allows these GPUs to be treated as a single node with double the computational power of smaller systems that only hold 4x GPUs. This system also offers up to 24x hot-swap storage bays and room for storage expansion cards, so it can double as a high-speed storage node. Storage needs do not have to be offloaded onto other servers, which increases the performance of the system.

One of the drawbacks of large systems like this is heat output. Dual CPUs and 8x GPUs running at full load generate a tremendous amount of heat. In addition, the AMD FirePro S9150s do not have active cooling, which adds to the server’s cooling needs. In our tests we found the 8x 92mm RPM hot-swap cooling fans that come equipped in the 4028GR-TR are more than capable of handling the entire server’s cooling needs and kept system temperatures well within operating limits. We also find that the server can handle more intense workloads with the cooling headroom this system provides. The large redundant cooling fans are an efficient way to cool this load.

Overall we find the 4028GR-TR is a well-designed system that has the ability to handle high-performance workloads. Moving to a large 4U server allows larger-capacity cooling systems to be installed that keep the system cool while running extreme workloads. This is a trade-off versus smaller 1U systems, which have higher density but operate close to their maximum heat load capacity. For many datacenters, having 10x of these machines, each at 2.6kW, is more than the power and cooling of a rack can handle, so one does need to be careful when planning installation.
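For anyone doing that installation planning, here is a rough sketch of the rack-level numbers. It uses the ~2,613 watt peak we measured and the standard conversion of 1 watt to roughly 3.412 BTU/hr, and it assumes every server peaks at once, which is a worst case rather than a typical load.

```python
BTU_PER_HOUR_PER_WATT = 3.412  # standard watts-to-BTU/hr conversion
SERVER_PEAK_WATTS = 2613       # measured peak for our 8x S9150 configuration


def rack_load(servers: int):
    """Return (kW, BTU/hr) for a rack of servers all at measured peak draw."""
    watts = servers * SERVER_PEAK_WATTS
    return watts / 1000, watts * BTU_PER_HOUR_PER_WATT


kw, btu_hr = rack_load(10)
print(f"10 servers: {kw:.2f} kW, about {btu_hr:,.0f} BTU/hr of heat to remove")
```

Essentially all of the electrical power ends up as heat, so the cooling figure scales directly with the power figure.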

That is a big honkin’ box for sure. We have a few older NVIDIA GPU servers we use for VDI. It’d be cool if you got some fast storage and saw how many virtual desktops you could launch on the box.

Can you stick any other 8 PCI Cards in it ?

Here is a Gen3 x16 PCIe card with three Intel Xeon processor E3 v4 CPUs, which each contain the Intel® Iris™ Pro graphics P6300 GPU.

TWENTY FOUR more Processors (and GPUs, etc.) In that Box would be great to see. Especially a Transcoding Benchmark.

The VCA Card: http://www.intelserveredge.com/intelvca

Still working on getting those cards. In theory they would work in the GPU compute systems and are relatively lower power.

Has anyone looked at how well the number of pcie channels support the xeon phis? It is a fantastic case but I wonder whether 8 cards would be saturating the interconnect with 1 processor per 4 Phis?

2 x10drg’s seem like a better solution in the cases where the analytical models can be held within a single.

Is this a stop gap for the new Knight’s Landing architecture?

Thanks

Robert

Wonderful review, as always. I noticed the ASRock 3U8G-C612 8-Way GPU Server has a 1u add-on “cap” that increases the clearance for GPUs that have top mounted power connectors (e.g. an Nvidia GTX Titan or 1080).

Is there anything like that for this Supermicro 8-GPU server? If not, would a super low profile GPU power connector fit (e.g. is there 10-15mm of space between the GPU top and the inside lid of the server?) Thanks as that is a deal-maker/breaker for us.

Hi Chris,

We no longer have this machine. I was told by SM that you would need part: MCP-230-41830-0N to give you the extra headroom to use those cards.

But can it run Crysis?

Hi

please send me quotation and delivery for this configuration:

SUPERMICRO SYS-4028GR-TR CHASSIS

2 X INTEL XEON 12 CORE E5-2650V4 2.2GHZ 30MB SMART CACHE PROCESSORS

256GB (16 X 16GB) DDR4-2400 MEMORY

2 X 400GB INTEL S3710 2.5 INCH SSD DRIVES

8 X NVIDIA GEFORCE GTX 1080 TI 11GB VIDEO CARDS

1 X MELLANOX CONECTX-3 PRO DUAL PORT ADAPTER

May I know the ambient temperature when doing the stress test? Thanks!

17.5C and 71% RH